Validation of YOLOv8 algorithm in detecting colon polyps in endoscopy videos

Highlight box

Key findings

• Our study applied YOLOv8, an improved You Only Look Once (YOLO) object detection model, to a Vietnamese colonoscopy video database. The YOLOv8 model detected 95.6% of polyps in a validation set of 30 videos and achieved an F1-score of 0.6 at an intersection over union threshold of 0.3. The algorithm processed 72 frames per second (fps), exceeding the requirement for real-time application (25 fps).

What is known and what is new?

• Artificial intelligence holds significant promise in the field of colonoscopy. Object detection algorithms can enhance the procedure quality by flagging potential polyps that may be missed by endoscopists, especially in challenging cases. However, these algorithms often have slow processing speed and high false positive rates, which hinder their real-time application.

• Our research applies the YOLOv8 algorithm, which has outperformed all previous YOLO models in both precision and speed. The architecture of YOLOv8 reduces computational load, making it well suited to resource-constrained environments. Our image and video datasets were collected and annotated by experts at the Institute of Gastroenterology and Hepatology.

What is the implication, and what should change now?

• Our results show the potential of YOLOv8 in concurrent polyp detection. Besides a high polyp detection rate, it managed to detect polyps missed by endoscopists. However, further training and database expansion are required to reduce false positive rates and improve accuracy.

Introduction

Background

Colorectal polyps are abnormal proliferating masses that protrude into the colon’s lumen. According to GLOBOCAN 2022, colorectal cancer is the third most common cancer in the world, and 95% of colorectal adenocarcinomas arise from polyps (1,2). Endoscopy is an important screening method for colorectal cancer: it allows precancerous lesions to be detected, biopsied, and effectively removed. However, a systematic review in 2018 indicated that the endoscopic miss rate was 27% for serrated polyps and 26% for adenomas, and that nearly 80% of missed lesions were visible but not detected by endoscopists (3).

Colonoscopy integrated with artificial intelligence (AI) is a promising solution to increase the polyp detection rate. A meta-analysis of 14 randomized controlled trials in 2022 showed that AI reduced the serrated adenoma miss rate by 78% and increased the number of adenomas >10 mm detected per colonoscopy by 93% (4). Currently, many AI-integrated endoscopy systems such as EndoBRAIN (Olympus, Tokyo, Japan), CAD-Eye (Fujifilm, Tokyo, Japan) or WiseVision (NEC, Tokyo, Japan) have been commercialized.

Knowledge gaps

However, applying AI to colorectal polyp detection still faces two major technical drawbacks: a high false positive rate and high latency. Therefore, more clinical studies are necessary to identify factors affecting AI models’ accuracy and to develop new models with lower latency (3). Among object detection algorithms, YOLOv8, launched in 2023, is the most prominent model with reduced latency and high accuracy (5). In addition, the You Only Look Once (YOLO) algorithm can run on low-end processors while maintaining high processing speeds. This feature is essential for applying AI in resource-limited settings with an overwhelming number of patients, such as Vietnamese hospitals.

Objectives

We conducted our study using the YOLOv8 algorithm on endoscopic videos with two objectives: (I) to evaluate the accuracy of the YOLOv8 algorithm in colorectal polyp detection in endoscopic videos, and (II) to describe common false detections and misses made by the YOLOv8 algorithm in colorectal polyp detection. We present this article in accordance with the TRIPOD reporting checklist (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-338/rc).

Methods

The construction of YOLOv8

YOLOv8 model’s structure comprises 3 parts: backbone, neck, and head (Figure 1).

The algorithm’s backbone breaks down the input data (such as an image) into different levels of information, a process called hierarchical feature extraction. While low-level features represent simple large areas of basic color, high-level features are fine details of the images. Hierarchical feature extraction is achieved using convolutional layers, with each layer capturing a different level (5). In YOLOv8, the Darknet neural network framework is used as the backbone. Compared to other convolutional feature extractors, Darknet is designed to be lightweight and efficient. This is achieved through Darknet’s architectural choices, such as the use of global average pooling, batch normalization, and 1×1 convolutional kernels placed between the common 3×3 convolutional kernels (5). These features reduce computational load and make Darknet well suited to real-time applications and resource-constrained environments.
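As a rough illustration of why placing 1×1 kernels between 3×3 kernels lowers the computational load, the sketch below counts convolution weights for a plain 3×3 layer and for a 1×1 layer followed by a 3×3 layer. The channel widths (256 and 128) are hypothetical values chosen only for the arithmetic; they are not taken from the YOLOv8 configuration.

```python
def conv_weights(c_in: int, c_out: int, k: int) -> int:
    """Number of weights in a k x k convolution layer (bias terms ignored)."""
    return c_in * c_out * k * k


# Hypothetical channel widths, used only to illustrate the saving.
plain = conv_weights(256, 256, 3)

# A 1x1 layer first halves the channel count, then a 3x3 layer restores it.
bottleneck = conv_weights(256, 128, 1) + conv_weights(128, 256, 3)

print(plain, bottleneck)  # 589824 327680
```

Under these assumed widths, the 1×1 reduction cuts the weight count by a little under half while keeping the same input and output shape.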

The neck is an optional intermediate component between the backbone and the head. Its purpose is to further refine and aggregate features before passing them to the head for final predictions. YOLOv8’s neck applies a cross-stage partial (CSP) extraction module. CSP is a method of sharing information between different stages of feature extraction. By improving the flow of information across stages of the convolutional network, CSP enhances object detection, especially in scenarios where objects vary in size, scale, or complexity (6).

The head is responsible for making the final predictions based on the features extracted by the backbone and possibly refined by the neck. The head typically includes layers that predict bounding boxes, class probabilities, and other relevant information for each object in the input. The loss function of YOLOv8’s head includes 3 components: localization loss, confidence loss, and classification loss (7). The localization loss measures how well the predicted bounding box coordinates match the ground-truth bounding box, ensuring that the model learns to predict bounding box positions and sizes accurately. The confidence loss evaluates how well the model predicts the confidence score for each bounding box, that is, the probability that the predicted bounding box contains an object. Because confidence scores are treated as probabilities, this loss is calculated using binary cross-entropy (BCE). Finally, the classification loss assesses the accuracy of the predicted class label for each bounding box. YOLO uses categorical cross-entropy for the classification loss, as it deals with predicting multiple class labels.
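To make the binary cross-entropy term concrete, the following minimal sketch evaluates it for a single predicted confidence score. It is a plain-Python illustration of the standard formula, not the loss code used by the model; the function name and the clipping constant `eps` are assumptions introduced here.

```python
import math


def binary_cross_entropy(p: float, y: int, eps: float = 1e-7) -> float:
    """BCE for one prediction: p is the predicted probability, y is 0 or 1."""
    # Clip p away from 0 and 1 so that log() stays finite.
    p = min(max(p, eps), 1.0 - eps)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


# A confident prediction is penalized lightly when right, heavily when wrong.
loss_if_object = binary_cross_entropy(0.9, 1)
loss_if_background = binary_cross_entropy(0.9, 0)
print(round(loss_if_object, 4), round(loss_if_background, 4))
```

The asymmetry between the two values is what pushes the confidence score toward 1 for boxes that contain an object and toward 0 for boxes that do not.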

Compared to the previously released YOLO models, Ultralytics’ YOLOv8 has several improvements in the algorithm architecture. Instead of separating object classification and bounding box localization, YOLOv8 deploys the Task-Aligned Assigner from the TOOD algorithm, which aims to align these 2 tasks in training to create a one-stage object detection model. Furthermore, YOLOv8 reduces the number of bounding boxes by speeding up non-maximum suppression (NMS), a method used to minimize overlapping bounding boxes to enhance the overall quality of detection (5). Mosaic augmentation is also incorporated into YOLOv8’s training process, which adjusts the bounding box coordinates in a mosaic image (8). When trained on the Common Objects in Context (COCO) dataset, YOLOv8 outperforms all previous YOLO models in both precision and speed.
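The idea behind NMS can be sketched in a few lines. The version below is the textbook greedy procedure written in plain Python for illustration; it is not the optimized routine inside YOLOv8, and the boxes and scores are made-up values.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = w * h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thr):
    """Greedy NMS: keep the best box, drop boxes overlapping it above iou_thr."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_thr for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two overlap heavily (IoU of about 0.68).
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores, 0.5))  # [0, 2]
```

With a threshold of 0.5 the second box is suppressed because it overlaps the higher-scoring first box; with a threshold above 0.68 all three boxes would be kept.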

Research process

Study design

A cross-sectional study using convenience sampling was conducted from December 2022 to August 2023 at the Institute of Gastroenterology and Hepatology, Hanoi, Vietnam. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. The study was approved by the Hanoi Medical University’s ethics committee, as per decision number 847/GCN-HDDDNCYSH-DHYHN dated May 5, 2023. The data are secondary data sourced from clinics, and their use was approved by these medical facilities and the ethics committee. Patients’ information had been removed from the database before processing.

Inclusion criteria

Endoscopic videos and images were collected at Hanoi Medical University Hospital and Institute of Gastroenterology and Hepatology from December 2022 to June 2023. The image selection criteria included a resolution of at least 995×1,280 pixels, and representation of four lighting modes [white light imaging (WLI), flexible imaging color enhancement (FICE), blue light imaging (BLI), linked color imaging (LCI)] in the dataset. Regarding videos, eligible colonoscopy videos were taken during the withdrawal time (from the cecum to the anal canal) with a minimum length of 5 minutes.

Exclusion criteria

For images, exclusion criteria included images captured in magnified mode and the presence of blur, vibration, glare, excessive stool, blood, or foamy mucus. Colonoscopy videos were excluded from this study if patients had lesions suspected to be colon cancer, chronic inflammatory bowel disease (IBD), ongoing gastrointestinal bleeding, or a Boston Bowel Preparation Scale (BBPS) score below 2 in each segment or below 6 in total.

Sampling method

Deep learning models require large datasets to exploit their full potential; therefore, we collected as many videos and images as possible to ensure sufficient variability and representation (9). Endoscopists used the Computer Vision Annotation Tool (an online platform designed by the company Intel, see http://label.bkict.org:8080/auth/login) to manually track polyps by bounding box and label polyps on each video frame and image, without the assistance of AI. The labeling process included categorizing polyps into neoplastic or non-neoplastic based on NICE classification (10), and identifying polyp’s location, size, and morphological characteristics according to the Paris classification (Table 1) (11). The labeling process was performed by endoscopists with more than 3 years of experience and subsequently verified by experts with more than 10 years of experience. Afterwards, the dataset was divided as follows: 40% of the videos, randomly selected, along with all the images, were allocated for training, while the remaining 60% of the videos were used for validation.
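The random 40%/60% division of videos can be sketched as follows. This is only an illustration of a video-level split; the function name, the integer video identifiers, and the fixed seed are assumptions introduced here, and the study does not report how the randomization was implemented.

```python
import random


def split_videos(video_ids, train_fraction=0.4, seed=0):
    """Shuffle video IDs and split them into training and validation lists."""
    ids = list(video_ids)
    # A fixed seed (hypothetical) makes the split reproducible.
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_fraction)
    return ids[:n_train], ids[n_train:]


# 50 videos in total, identified here by hypothetical integer IDs.
train_videos, val_videos = split_videos(range(50))
print(len(train_videos), len(val_videos))  # 20 30
```

Splitting at the video level, rather than at the frame level, keeps near-identical consecutive frames of one polyp from appearing in both the training and validation sets.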

Table 1

| Type | Subtype |
|---|---|
| 0–I: elevated or polypoid forms | 0–Ip: pedunculated |
| | 0–Is: sessile, broad-based |
| 0–II: flat or superficial forms | 0–IIa: flat and elevated |
| | 0–IIb: completely flat |
| | 0–IIc: superficially depressed |
| 0–III: excavated or ulcerated forms | – |

YOLOv8 model and configuration

The YOLOv8 model was obtained from the official Ultralytics GitHub repository. YOLOv8 comprises 5 different models (YOLOv8n, YOLOv8s, YOLOv8m, YOLOv8l, and YOLOv8x) that vary in the number of parameters, trainable weight size, and computation time. In this study, we chose YOLOv8x as the target model. Before training, input images were resized to 1,380×1,080 pixels to maintain the aspect ratio and ensure consistency. The model was configured to detect a single class: “polyp”. In the postprocessing phase, NMS was applied separately at intersection over union (IoU) thresholds of 0.3 and 0.5 to eliminate overlapping bounding boxes, retaining only the most confident detections.
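A configuration of this kind might be expressed with the `ultralytics` Python package roughly as sketched below. The weights path and the dataset file name are hypothetical placeholders, and this is not the authors' script. In that package, the `iou` argument of `val` sets the IoU threshold used by NMS and `conf` sets the minimum confidence score.

```python
# Hypothetical settings; the paths below are placeholders, not the study's files.
SETTINGS = {
    "weights": "runs/polyp_yolov8x/best.pt",  # hypothetical trained YOLOv8x weights
    "data": "polyp.yaml",                     # hypothetical dataset file, one class: "polyp"
    "nms_iou_thresholds": (0.3, 0.5),
    "conf": 0.5,
}


def validate_at_each_iou(settings=SETTINGS):
    """Run validation once per NMS IoU threshold and collect the results."""
    # Imported here so the settings can be inspected without the package installed.
    from ultralytics import YOLO

    model = YOLO(settings["weights"])
    return {
        iou_thr: model.val(data=settings["data"], iou=iou_thr, conf=settings["conf"])
        for iou_thr in settings["nms_iou_thresholds"]
    }
```

Calling `validate_at_each_iou()` requires the package, the weights, and the dataset file to be present; the function is left uncalled here for that reason.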

Statistical analysis

The metrics used to evaluate the algorithm’s accuracy are described in Table 2. The IoU metric is used to prevent detections from being misclassified as true positives when the AI’s bounding box marks another object but overlaps with the ground truth in a polyp-containing image. Typically, the IoU threshold is set at 0.3 or 0.5 (13). In this research, we evaluated the algorithm at both IoU values of 0.3 and 0.5 and compared the results at the same confidence score of 0.5. After validation, YOLOv8x returns a detailed analysis of its true positive rate, false positive rate, false negative rate, recall, precision, and F1-score for each confidence score from 0 to 1 at a given IoU value (12). Stata version 17 (StataCorp LLC) was used to calculate the statistical association between the training and validation datasets using the Chi-squared and Fisher’s exact tests (P<0.05 was considered statistically significant).

Table 2

| Index | Formula | Definition |

|---|---|---|

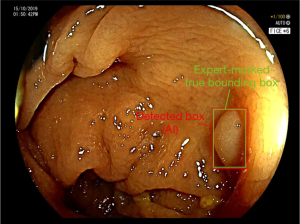

| IoU | – | The ratio between the overlapping area to the total area of the AI’s detected box and the ground-truth (Figures 2,3) |

| TP | – | Number of polyps’ regions correctly identified by AI |

| FP | – | Number of detected boxes that do not contain polyps |

| TN | – | Number of no-polyp frames without a detected box |

| FN | – | Number of regions with polyps that are not detected by AI |

| Confidence score | Pr(object)† * IoU | Confidence score reflects how likely the detected box contains a polyp and how accurate is the detected box (how well the predicted box intersects with the expert-marked true bounding box) |

| Recall (Se) | TP/(TP + FN) | Number of polyp regions correctly identified by the algorithm over the total number of polyp regions |

| Precision (PPV) | TP/(TP + FP) | Number of polyp regions correctly identified by the algorithm over the total number of polyp regions detected |

| F1-score | 2P * R/(P + R) | Harmonic means of precision and recall. It provides a single metric that balances precision and recall, overall measuring the algorithm’s performance |

†, probability that predicted bounding box contains object. AI, artificial intelligence; FN, false negative; FP, false positive; IoU, intersection over union; PPV, positive predictive value; Se, sensitivity; TN, true negative; TP, true positive.
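The effect of the IoU threshold on what counts as a true positive can be illustrated with a short sketch. The two boxes are hypothetical, and the strict `>` comparison is an assumption made for this example.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2) corner coordinates."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_true_positive(detected, ground_truth, iou_threshold):
    """A detection counts as correct when its IoU exceeds the threshold."""
    return box_iou(detected, ground_truth) > iou_threshold


# Hypothetical boxes: the detection is shifted sideways from the annotation.
ground_truth = (0, 0, 100, 100)
detected = (40, 0, 140, 100)

overlap = box_iou(detected, ground_truth)  # 6000 / 14000, about 0.43
print(is_true_positive(detected, ground_truth, 0.3))  # True
print(is_true_positive(detected, ground_truth, 0.5))  # False
```

The same detection is therefore a true positive at an IoU threshold of 0.3 but a false positive (and the polyp region a false negative) at 0.5, which is why recall and precision shift with the threshold.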

The research process is illustrated in Figure 4.

Results

A total of 50 endoscopic videos and 20,616 endoscopy images were obtained, in which 20 videos and all the images were used to train the algorithm. In the image dataset, 10,557 images contain polyps. The lighting modes of the image dataset are shown in Table 3.

Table 3

| Type of image | Lighting mode | | | | |
|---|---|---|---|---|---|
| | WLI | FICE | BLI | LCI | Total |
| Polyp image | 5,627 | 2,313 | 1,305 | 1,321 | 10,557 |
| Non-polyp image | 5,500 | 1,559 | 1,500 | 1,500 | 10,059 |

BLI, blue light imaging; FICE, flexible imaging color enhancement; LCI, linked color imaging; WLI, white light imaging.

In the video training data set, according to the Boston classification, the cleanliness of most videos reached BBPS 3. Most training videos contain polyps ≤5 mm in size (76.5%) with the most common location being the cecum (29.4%). Besides, neoplastic polyps constitute the majority (58.8%). However, in the training video set we collected, there are only 3 morphological types of polyps: Is, Ip, and IIa.

The algorithm was tested on 30 validation videos containing a total of 68 polyps and 468,821 frames, of which 69,003 frames had polyps (14.8%). The endoscopic characteristics of validation videos are described in Table 4.

Table 4

| Characteristics | Video training set, n (%) | Validation set, n (%) | P |
|---|---|---|---|
| Location | | | 0.17‡ |
| Cecum | 10 (29.4) | 12 (17.7) | |
| Ascending colon (including hepatic flexure) | 2 (5.9) | 2 (2.9) | |
| Transverse colon | 7 (20.6) | 8 (11.8) | |
| Descending colon (including splenic flexure) | 4 (11.8) | 15 (22.1) | |
| Sigmoid colon | 4 (11.8) | 20 (29.4) | |
| Rectum | 7 (20.6) | 11 (16.2) | |
| Neoplastic characteristic† | | | 0.26§ |
| Neoplasm | 20 (58.8) | 32 (47.1) | |
| Benign | 14 (41.2) | 36 (52.9) | |
| Morphology (based on Paris classification) | | | 0.03‡ |
| Ip | 13 (38.2) | 14 (20.6) | |
| Is | 18 (53.0) | 34 (50.0) | |
| IIa | 3 (8.8) | 20 (29.4) | |
| Size (mm) | | | 0.055‡ |
| ≤5 | 26 (76.5) | 59 (86.8) | |
| 6–10 | 5 (14.7) | 9 (13.2) | |
| >10 | 3 (8.8) | 0 (0.0) | |

†, based on NICE (NBI International Colorectal Endoscopic) classification (10); §, Chi-squared test; ‡, Fisher’s exact test. NBI, narrow-band imaging.
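As a worked check of the Chi-squared comparison, the sketch below applies the Pearson formula for a 2×2 table to the neoplastic characteristic counts in Table 4. It uses no continuity correction, which is an assumption: the options used in the statistical software are not reported. The function name is introduced here for illustration.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-squared statistic and P value for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # With 1 degree of freedom, the upper tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: training set, validation set. Columns: neoplasm, benign (Table 4).
chi2, p_value = chi_square_2x2(20, 14, 32, 36)
print(round(chi2, 2), round(p_value, 2))
```

Under this assumption the P value comes out at approximately 0.26, in line with the value reported in Table 4.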

The most common polyp location was the sigmoid colon (29.4%), and the least common was the ascending colon, including the hepatic flexure (2.9%). All polyps were ≤10 mm in size, and most were ≤5 mm (86.8%). In terms of morphology, 50% were Paris Is; the proportions of type IIa and Ip polyps were 29.4% and 20.6%, respectively. There were no type IIb, IIc, or III polyps. The percentages of neoplastic and non-neoplastic polyps were similar (47.1% and 52.9%, respectively).

On the validation set, the trained algorithm achieved a speed of 72 frames per second (fps) when run on an RTX 3090 GPU. The algorithm was able to identify 65/68 polyps in 30 videos, achieving a recognition rate of 96%. The three polyps not identified by AI were in the rectum, small (≤5 mm), and Paris IIa in terms of morphology.

We compared the accuracy metrics at different IoU values with the confidence score fixed at 0.5. With IoU set at 0.5, recall, precision, and F1-score were 0.695 [95% confidence interval (CI): 0.691, 0.698], 0.482 (95% CI: 0.479, 0.485), and 0.57 (95% CI: 0.566, 0.572), respectively. When IoU was adjusted to 0.3, recall, precision, and F1-score were 0.737 (95% CI: 0.734, 0.740), 0.512 (95% CI: 0.509, 0.515), and 0.604 (95% CI: 0.601, 0.607), respectively. Notably, lowering the IoU setting to 0.3 improved the algorithm’s recall, precision, and F1-score.
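The F1-scores follow directly from the reported recall and precision through the formula in Table 2, as the short calculation below shows. The function name is introduced here for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Recall and precision as reported at a confidence score of 0.5.
reported = {
    0.5: {"recall": 0.695, "precision": 0.482},
    0.3: {"recall": 0.737, "precision": 0.512},
}

f1_by_iou = {
    iou_thr: f1_score(m["precision"], m["recall"]) for iou_thr, m in reported.items()
}
for iou_thr, f1 in f1_by_iou.items():
    print(iou_thr, round(f1, 3))
# 0.5 0.569
# 0.3 0.604
```

Both values agree with the reported F1-scores of 0.57 and 0.604 after rounding.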

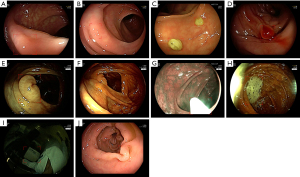

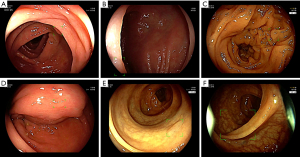

We also described the characteristics of polyps that were missed or incorrectly detected in our study, based on a detailed analysis of every frame at an IoU of 0.5. The AI incorrectly identified 54,408 regions as polyps (false positives). These incorrect identifications included mucosal folds (42.5%), instruments (17.3%), polyp remnants (12.2%), bubbles and mucus (4.1%), stool (3.9%), and blurred areas (3.4%). Other reasons included low IoU (the bounding box overlaps ≤50% with the ground truth), normal mucosa, and blurry frames caused by movement of the endoscopic camera (9.8%). Delineation errors (6.6%) were caused by the annotation tool having to handle an excessive number of frames: some frames had no polyp, but the bounding box automatically replicated from the previous frame was not deleted. The AI failed to identify 22,264 regions containing polyps. Most of these polyps had vague surfaces (45.2%), were diminutive or distant (21.4%), or were largely obscured (15.7%) (Table 5). Examples of misidentification and misdetection errors are shown in Figures 5 and 6, respectively.

Table 5

| Misidentification and misdetection errors | Regions, n | % |
|---|---|---|
| Misidentification (false positives) (n=54,408) | | |
| Bubble, mucus | 2,224 | 4.1 |
| Stool | 2,108 | 3.9 |
| Polyp remnants after resection | 6,645 | 12.2 |
| Bauhin valve | 128 | 0.2 |
| Mucosal folds | 23,136 | 42.5 |
| Endoscopic instruments | 9,424 | 17.3 |
| Delineation errors | 3,580 | 6.6 |
| Blurred area | 1,860 | 3.4 |
| Others (low IoU, normal mucosa, blurry frame caused by endoscope movement ...) | 5,303 | 9.8 |
| Misdetection (false negatives) (n=22,264) | | |
| Vague polyp surfaces | 10,071 | 45.2 |
| Diminutive or distant polyps | 4,760 | 21.4 |
| Obscured polyps | 3,496 | 15.7 |
| Delineation errors | 3,170 | 14.2 |
| Others (polyps’ remains after resection …) | 767 | 3.5 |

IoU, intersection over union.

Discussion

Key findings

Our study tested the YOLOv8 algorithm on 30 endoscopic videos containing 68 polyps with a total of 468,821 frames and 69,003 frames containing polyps. All 3 misdetected polyps are small, benign, and located in the rectum. With an IoU of 0.3, the model achieved a recall of 0.74, precision of 0.51, and F1-score of 0.6.

Despite 76.5% of polyps in the video training dataset being ≤5 mm, 21.4% of false negatives were attributable to diminutive or distant polyps, and all misdetected polyps were classified as small.

Mucosal folds identified as polyps were the most common false positive error of the YOLOv8 algorithm, accounting for 42.5% of false positives. Hassan’s research in Italy also found that mucosal folds were the most common error when developing their AI system, comprising 73.6% of false positives (14).

Strengths and limitations

The YOLOv8 algorithm in our study was able to identify additional polyp regions missed by experts. This highlights the YOLOv8 algorithm’s potential to be a “second observer” that assists doctors during colonoscopy. Such findings align with many studies on the application of AI in endoscopic procedures (15-17). A randomized controlled trial in China comparing an observer-assisted endoscopy group and a computer-aided detection (CAD)-assisted endoscopy group found that the number of polyps detected by CAD-assisted endoscopy was significantly higher than in the group with additional human observers. The number of false positives in the CAD group was also lower, hence causing fewer disturbances to the physician (18). In addition to passive polyp detection, European Society of Gastrointestinal Endoscopy (ESGE) guidelines suggest an active role for CAD systems in assisting physicians with diagnostic confirmation upon request (19).

In addition to accuracy, image processing speed is an equally important factor in developing clinically applicable algorithms. The YOLOv8 algorithm in our study has a processing speed of 72 fps. Bernal et al. assessed that AI algorithms need a minimum processing speed of 25 fps for real-time applications. The processing speed of the polyp detection AI in studies similar to ours ranged from 5 to 46 fps (16). High processing speeds are needed to handle complex real-time frames with many disturbances. Moreover, low-latency AI models can easily be integrated with new endoscopic systems with modern cameras in the future.
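The speed comparison can also be read as a per-frame time budget, as in the small calculation below. It only converts the frame rates quoted above into milliseconds per frame and does not model capture, transfer, or display delays.

```python
def frame_time_ms(fps: float) -> float:
    """Average time available (or spent) per frame, in milliseconds."""
    return 1000.0 / fps


budget_ms = frame_time_ms(25)    # real-time requirement of 25 fps
measured_ms = frame_time_ms(72)  # speed reported in this study
headroom = 72 / 25

print(budget_ms, round(measured_ms, 1), round(headroom, 2))  # 40.0 13.9 2.88
```

At 72 fps the model spends about 14 ms on each frame against a 40 ms budget, which leaves room for the other steps of a live video pipeline.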

Our research has limitations. Firstly, the number of endoscopic videos is relatively small, and lesion morphology in the video dataset is not diverse, which only includes type Ip, Is, and IIa lesions. In addition, the use of convenience sampling inherently limits the generalizability of the study. Secondly, the endoscopic videos were only collected from the Fujifilm endoscope system of the Institute of Gastroenterology and Hepatology. This lack of dataset diversity and size could affect the algorithm’s performance in real-world applications, especially given the variability in endoscopic equipment, image resolution, lighting, and quality across different institutions. Fine-grained features, such as surface texture, may become blurred, making it more difficult for the algorithm to distinguish polyps from the surrounding mucosa.

Comparisons with similar research

Studies using YOLO algorithms for colorectal polyp detection around the world vary widely in their results. An AI model based on the YOLOv2 algorithm achieved only 14.7% precision, while Eixelberger’s research on YOLOv3 reported high recall and precision of 72.9% and 89.5%, respectively (17). Our results were most similar to those of Tang et al.’s 2023 study on YOLOv5. Tang’s research team also found that the YOLO algorithm was not effective in detecting and classifying polyps, with precision and recall comparable to those in our study (0.76 and 0.67, respectively) (20). The main reason cited is that most polyps in the dataset are small and share similar characteristics, making them difficult for AI to distinguish.

Unlike other research, most of which calculated false positives as the number of incorrectly identified objects, we counted false positives as misidentified regions on each frame. This method better reflects the algorithm’s actual performance (14).

Explanation of findings and suggested technical changes

Despite achieving a high detection rate, YOLOv8 demonstrated a relatively low F1-score. Expanding the database and implementing advanced data augmentation techniques could enhance its performance. As for data augmentation, in this research, we instructed our endoscopists to perform various camera rotations around the polyps, hence training the AI to detect objects in different orientations. Tang et al. have suggested another data augmentation technique: utilizing generative adversarial networks (GANs) to create realistic synthetic images to address the scarcity of real-world data. Notably, their GAN model produced images of sessile serrated adenomas, one of the rarest polyp types, which led to an improvement in the average precision for detecting this specific polyp (20).

Detection of small objects, such as diminutive polyps, is a known challenge for object detection algorithms that employ convolutional neural network (CNN) backbones, such as YOLO. Although these networks enhance detection speed, the size of small objects diminishes progressively as they pass through the layers, rendering them undetectable in the final output (21). Further training focused on small polyps is necessary to address this shortcoming and enhance the algorithm’s performance. In addition, several new mechanisms that help retain fine-grained details have been proposed in models beyond YOLOv8, such as Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN) (22). However, the effectiveness of these innovations remains to be validated in practical training scenarios.

To address the issue of false positives, we incorporated several background images into the training dataset (9). Half of the training images are devoid of polyps, and the inherent characteristics of the videos ensure that the majority of training frames do not contain polyps. Enhancing annotation quality is another crucial step to reduce model confusion and improve accuracy. Additionally, the over-representation of small, Ip polyps may bias the model toward this class; this limitation will be addressed as more diverse data becomes available for future training. Furthermore, employing Hard Negative Mining, a technique where false positive samples are reintroduced into training with increased weight, can help the model learn to correct its mistakes and further improve detection performance (23).

Implications and actions needed

Technical analyses of multi-scale object detection tasks indicate that a small IoU threshold is more suitable for small object detection (24). Other studies investigating YOLO algorithms have chosen an IoU cutoff of 0.3. To be specific, two studies by Li and Eixelberger using the YOLOv3 and YOLOv2 algorithms marked regions with an IoU >0.3 as correct identifications. By selecting an IoU of 0.3, these studies aimed to enhance the algorithm’s ability to accurately identify objects of interest, particularly in complex or cluttered environments where higher IoU thresholds might miss relevant detections (25). Our study also indicates that a lower IoU setting may be more appropriate for screening colonoscopy to optimize polyp identification. For the YOLOv8 algorithm specifically, our results suggest that setting the IoU to 0.3 and the confidence score to 0.7 achieves the optimal F1-score (Figure 7).
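Choosing the confidence score that maximizes the F1-score amounts to a simple sweep over an F1-confidence curve. The sketch below shows the selection step only; the curve values are made-up numbers for illustration and are not the study’s results.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def best_confidence(curve):
    """Return the confidence threshold with the highest F1-score.

    curve: iterable of (confidence, precision, recall) tuples.
    """
    return max(curve, key=lambda row: f1_score(row[1], row[2]))[0]


# Made-up curve: precision rises and recall falls as the threshold increases.
toy_curve = [
    (0.2, 0.30, 0.90),
    (0.4, 0.45, 0.80),
    (0.6, 0.60, 0.70),
    (0.8, 0.80, 0.40),
]
print(best_confidence(toy_curve))  # 0.6
```

In practice the curve would hold one point per confidence score from 0 to 1, computed at the chosen IoU threshold.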

To increase the AI’s accuracy, the algorithm should be trained on endoscopic videos from different hospitals and from multiple endoscopic systems with different resolutions, image quality, and lighting. Such efforts would also help encompass a broader range of polyp types and endoscopic conditions, ensuring the algorithm’s reproducibility and applicability across various medical facilities. In addition, we only ran YOLOv8 on recorded videos, and the algorithm’s real-time detection capabilities have not yet been tested. We aim to conduct a randomized controlled trial to evaluate the AI’s feasibility in clinical practice.

Conclusions

The YOLOv8 algorithm achieved a high polyp detection rate (96%) and processing speed (72 fps). Recall, precision, and F1-score at IoU =0.5 were 0.69, 0.48, and 0.57, respectively. Moreover, lowering the IoU threshold to 0.3 improved sensitivity. False positives were primarily due to mucosal folds and endoscopic instruments. Small polyp detection and dataset limitations remain challenges for applying YOLOv8 to real-time scenarios. Future research should diversify datasets across different endoscopic systems and assess the feasibility of integrating YOLOv8 into clinical practice.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the TRIPOD reporting checklist. Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-338/rc

Data Sharing Statement: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-338/dss

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-338/prf

Funding: This research was supported in part by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-338/coif). H.D.V. reports Honoraria for lectures, presentations, educational events from Abbott, Astrazeneca, Zuellig, Johnson and Johnson, Biocodex. H.D.V. participates on an Advisory Board for Gilead All4Liver Grant and Gilead Global Public Health Grant, and serves as Vice General Secretary of Vietnam Association of Gastroenterology, and Vice General Secretary of Vietnam Association for the Study of Liver Diseases. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- World Health Organization. Global Cancer Observatory: Cancer Today. International Agency for Research on Cancer; 2022. Available online: https://gco.iarc.who.int/today/en/dataviz/pie?mode=cancer&group_populations=1&populations=704&cancers=39_7_8&types=0&group_cancers=1&multiple_cancers=1&multiple_populations=0

- Meseeha M, Attia M. Colon Polyps. Treasure Island, FL, USA: StatPearls Publishing; 2023.

- Zhao S, Wang S, Pan P, et al. Magnitude, Risk Factors, and Factors Associated With Adenoma Miss Rate of Tandem Colonoscopy: A Systematic Review and Meta-analysis. Gastroenterology 2019;156:1661-1674.e11. [Crossref] [PubMed]

- Shah S, Park N, Chehade NEH, et al. Effect of computer-aided colonoscopy on adenoma miss rates and polyp detection: A systematic review and meta-analysis. J Gastroenterol Hepatol 2023;38:162-76. [Crossref] [PubMed]

- Terven J, Córdova-Esparza DM, Romero-González JA. A Comprehensive Review of YOLO Architectures in Computer Vision: From YOLOv1 to YOLOv8 and YOLO-NAS. Mach Learn Knowl Extr 2023;5:1680-716. [Crossref]

- Zhang L, Ding G, Li C, et al. DCF-Yolov8: An Improved Algorithm for Aggregating Low-Level Features to Detect Agricultural Pests and Diseases. Agronomy 2023;13:2012. [Crossref]

- Li X, Wang W, Wu L, et al. Generalized Focal Loss: Learning Qualified and Distributed Bounding Boxes for Dense Object Detection. Advances in Neural Information Processing Systems 2020;33:21002-12.

- Bochkovskiy A, Wang CY, Liao HYM. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020. arXiv:2004.10934.

- Ultralytics. Tips for Best Training Results 2024. Available online: https://docs.ultralytics.com/yolov5/tutorials/tips_for_best_training_results/

- Iwatate M, Hirata D, Sano Y. NBI International Colorectal Endoscopic (NICE) Classification. In: Endoscopy in Early Gastrointestinal Cancers, Volume 1: Diagnosis. Singapore: Springer; 2021:69-74.

- Endoscopic Classification Review Group. Update on the paris classification of superficial neoplastic lesions in the digestive tract. Endoscopy 2005;37:570-8. [Crossref] [PubMed]

- Poon CCY, Jiang Y, Zhang R, et al. AI-doscopist: a real-time deep-learning-based algorithm for localising polyps in colonoscopy videos with edge computing devices. NPJ Digit Med 2020;3:73. [Crossref] [PubMed]

- Li JW, Chia T, Fock KM, et al. Artificial intelligence and polyp detection in colonoscopy: Use of a single neural network to achieve rapid polyp localization for clinical use. J Gastroenterol Hepatol 2021;36:3298-307. [Crossref] [PubMed]

- Hassan C, Badalamenti M, Maselli R, et al. Computer-aided detection-assisted colonoscopy: classification and relevance of false positives. Gastrointest Endosc 2020;92:900-904.e4. [Crossref] [PubMed]

- Aslanian HR, Shieh FK, Chan FW, et al. Nurse observation during colonoscopy increases polyp detection: a randomized prospective study. Am J Gastroenterol 2013;108:166-72. [Crossref] [PubMed]

- Bernal J, Tajkbaksh N, Sanchez FJ, et al. Comparative Validation of Polyp Detection Methods in Video Colonoscopy: Results From the MICCAI 2015 Endoscopic Vision Challenge. IEEE Trans Med Imaging 2017;36:1231-49. [Crossref] [PubMed]

- Eixelberger T, Wolkenstein G, Hackner R, et al. YOLO networks for polyp detection: A human-in-the-loop training approach. Current Directions in Biomedical Engineering 2022;8:277-80. [Crossref]

- Wang P, Liu XG, Kang M, et al. Artificial intelligence empowers the second-observer strategy for colonoscopy: a randomized clinical trial. Gastroenterol Rep (Oxf) 2023;11:goac081. [Crossref] [PubMed]

- Bisschops R, East JE, Hassan C, et al. Advanced imaging for detection and differentiation of colorectal neoplasia: European Society of Gastrointestinal Endoscopy (ESGE) Guideline - Update 2019. Endoscopy 2019;51:1155-79. [Crossref] [PubMed]

- Tang CP, Chang HY, Wang WC, et al. A Novel Computer-Aided Detection/Diagnosis System for Detection and Classification of Polyps in Colonoscopy. Diagnostics (Basel) 2023;13:170. [Crossref] [PubMed]

- Zheng X, Qiu Y, Zhang G, et al. ESL-YOLO: Small Object Detection with Effective Feature Enhancement and Spatial-Context-Guided Fusion Network for Remote Sensing. Remote Sens 2024;16:4374. [Crossref]

- Wang CY, Yeh IH, Liao HYM, editors. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. In: European Conference on Computer Vision 2024; Cham: Springer; 2025.

- Jin S, RoyChowdhury A, Jiang H, et al. Unsupervised Hard Example Mining from Videos for Improved Object Detection. In: European Conference on Computer Vision (ECCV) 2018. Cham: Springer; 2018.

- Yan J, Wang H, Yan M, et al. IoU-Adaptive Deformable R-CNN: Make Full Use of IoU for Multi-Class Object Detection in Remote Sensing Imagery. Remote Sens 2019;11:286. [Crossref]

- Wichakam I, Panboonyuen T, Udomcharoenchaikit C, et al. Real-Time Polyps Segmentation for Colonoscopy Video Frames Using Compressed Fully Convolutional Network. In: MultiMedia Modeling: 24th International Conference, MMM 2018. Bangkok, Thailand, February 5-7, 2018. Cham: Springer; 2018.

Cite this article as: Dao Viet H, Nguyen TT, Lam HN, Nguyen BP, Vu TQ, Nguyen HM, Pho VT, Dang HH, Sang DV, Nguyen TT. Validation of YOLOv8 algorithm in detecting colon polyps in endoscopy videos. J Med Artif Intell 2025;8:35.