An autoencoder-based approach for glaucoma anomaly detection in visual fields diagnosis

Highlight box

Key findings

• We proposed an autoencoder-based anomaly detection architecture, called the anomaly autoencoder, that can effectively enhance the automatic detection of glaucoma from visual field (VF) images. The anomaly autoencoder achieved a receiver operating characteristic area under the curve of 0.931 and an F1-score of 0.862, indicating strong performance in classifying glaucoma versus non-glaucoma cases.

What is known and what is new?

• Previous work on unsupervised machine learning and deep learning techniques for glaucoma has focused on reducing noise in VFs while identifying patterns of vision loss, and has been directed more toward tracking the progression of glaucoma over time.

• The novelty of this study lies in the development of an autoencoder-based approach that follows an anomaly detection architecture to detect glaucoma early and to identify the progression of defect patterns caused by glaucoma in VF images.

What is the implication, and what should change now?

• It is recommended to modify the pre-processing stage so that the VF dataset, given as threshold values at x-axis and y-axis coordinates, is converted into images in order to enhance the performance of glaucoma identification. In addition, the accuracy and sensitivity of the glaucoma detection model may be improved by altering or adding layers to fine-tune the autoencoder.

Introduction

Glaucoma (1-3) is an eye disease that can cause blindness. It is a progressive optic neuropathy that causes changes to the optic disc and retinal nerve fiber layer. Damage caused by glaucoma is irreversible, but intervention can prevent or slow the progression of functional impairment and vision loss (4,5). Approximately 91 million individuals worldwide are affected by glaucoma, with 6.7 million experiencing bilateral blindness as a secondary consequence of the condition (6).

Visual field (VF) testing is one of the methods used to diagnose and monitor the progression of glaucoma (7-9). In VF testing, the defect pattern is mapped onto a printed chart to track the condition of the eye, whose progression may lead to vision loss and blindness. VF tests assess the sensitivity of a patient’s peripheral and central vision, helping to identify any areas of visual impairment caused by glaucoma-related damage to the optic nerve (10,11). Identifying glaucoma from VF tests requires expertise and considerable time to determine the type of defect, so many automated approaches have been developed using supervised (12-14) and unsupervised (15,16) methods to assist ophthalmologists.

In previous work, several methods have been used to identify glaucoma and its progression. Teng et al. studied the associations of VF defects in over 63,000 pairs of eyes from individuals with glaucoma. To quantify the VF defect areas precisely, they applied archetypal analysis for data pre-processing and made predictions using a logistic regression model. They obtained AUC values ranging from 69% for superior nasal step to 92% for near-total loss (17). Asaoka et al. utilized a variational autoencoder (VAE) to enhance the sensitivity of Humphrey visual field (HVF) testing by reducing measurement noise in glaucomatous VFs, using a dataset of 82,433 VFs. The VAE model reconstructed the VFs, and the mean total deviation (mTD) computed by the HVF was compared with the mTD of the VAE-reconstructed VFs, labeled mTDVAE. They reported a significant relationship for the difference between mTD and mTDVAE, with false-positive and false-negative responses of less than 33% (8,9).

Li et al. developed a smartphone application to detect glaucoma using a modified ResNet-18 trained on 10,784 VFs. They obtained a best accuracy of 99% in recognizing different patterns in the pattern deviation probability plot region (18). Sugisaki et al. used bilinear interpolation (IP) for image processing and passed the result to support vector regression (SVR) to estimate the prediction error for advanced open-angle glaucoma in 219 eyes. They reported an error range of about 25% (16).

Kucur et al. (12) used a convolutional neural network (CNN) to distinguish glaucoma from non-glaucoma and obtained an accuracy of 98.5%, and Park et al. (13) used a recurrent neural network (RNN) to identify the progression of glaucoma and obtained an accuracy of 88%. Tian et al. proposed two vision transformer (ViT)-based deep learning (DL) networks on the “64K+” dataset: the first optimizes a spatiotemporal ViT, and the second develops a VF-to-VF generation architecture via a diffusion model with a ViT backbone. Their model predicted future VFs with a pointwise mean absolute error (PMAE) as low as 2.15 decibels (dB) (19).

Huang et al. proposed a novel DL method based on self-supervised pre-training of a ViT model on a large unlabeled dataset. They obtained a best accuracy of 92% when identifying glaucoma progression and predicting future progression in the eyes (3). Thirunavukarasu et al. developed a web application called Glaucoma Field Defect Classifier (GFDC) that uses the Hodapp-Parrish-Anderson criteria in a cross-sectional study (20). Other studies by Mandal et al. (21) and Sathya and Balamurugan (22) used a VAE to identify glaucoma in fundus image datasets and obtained accuracies of 88.9% and 89%, respectively (21-23).

For glaucoma detection using DL, the process is most often supervised and the datasets are mostly fundus images. Supervised methods focus on training models with labelled data to improve the accuracy of glaucoma detection. However, for identifying the progression of glaucoma, there has been a move toward unsupervised learning. This change is mostly driven by the complex and varied patterns of glaucoma development, which are difficult to diagnose and take a lot of time and experience to interpret.

Additionally, the scarcity of datasets in the medical field is one of the factors driving the identification of glaucoma progression toward unsupervised methods. Since this study focuses on both classifying glaucoma and identifying its progression, an autoencoder-based anomaly detection model (24), called the anomaly autoencoder, is used to detect glaucoma and identify its progression within VF images. The contributions of this study can be summarized as follows:

- To implement an autoencoder-based model, called the anomaly autoencoder, that follows an anomaly detection architecture to detect glaucoma and identify its progression within the VF dataset.

- To identify the most effective threshold for distinguishing between glaucoma and non-glaucoma while analysing the progression of glaucoma through anomaly scores based on the mean squared error (MSE) loss function.

- To evaluate the performance of the anomaly autoencoder by comparing its accuracy, precision, recall and F1-score with other unsupervised methods.

Methods

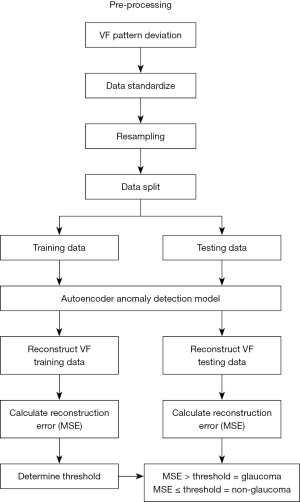

In this study, an unsupervised autoencoder-based approach is proposed for detecting glaucoma and identifying its progression within a VF dataset by following the anomaly detection process. A public VF dataset, acquired with the Humphrey Field Analyzer II (HFA, Carl Zeiss Meditec AG, Jena, Germany), was obtained. Pre-processing is performed on the VF dataset to ensure its consistency and standardization. The dataset is then split 90:10 into training and testing sets within each category to analyse the performance of the anomaly autoencoder. A 90% training and 10% testing split is used because unsupervised methods require a large training dataset to achieve higher testing accuracy (25). A threshold to differentiate between glaucoma and non-glaucoma is obtained during the training process. The model’s reconstruction errors on the testing VF dataset are then used to identify whether each VF is glaucoma or non-glaucoma, and the MSE loss function is used to identify the progression of glaucoma. The framework for early glaucoma detection in the VF dataset is illustrated in Figure 1.
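The per-category 90:10 split can be sketched as follows. This is a minimal illustration using only the Python standard library, not the authors’ code; the function name `stratified_split`, the fixed seed, and the toy label list are assumptions made for the example.

```python
import random


def stratified_split(labels, test_fraction=0.1, seed=0):
    """Return (train_idx, test_idx), holding out test_fraction of each class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)  # shuffle within the class before splitting
        n_test = round(len(indices) * test_fraction)
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Hypothetical labels: 0 = non-glaucoma, 1 = glaucoma.
labels = [0] * 20 + [1] * 80
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))  # 90 10
```

Splitting inside each class keeps the glaucoma to non-glaucoma ratio the same in both subsets, which is what "from each category" implies.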

VFs dataset

This study uses a public VF dataset obtained from the Rotterdam Eye Hospital in the Netherlands (26), acquired with the Humphrey Visual Field Analyzer 2 (HFA, Carl Zeiss Meditec AG). The dataset comprises data from both eyes of 161 patients, of whom 139 were diagnosed with glaucoma. The patients underwent HFA testing with the white-on-white 24-2 test pattern (27,28), as shown in Figure 2. Testing was conducted over a span of 5 to 10 years, resulting in a total of 5,108 VFs. However, this study focused on a subset of 2,523 VFs, which were categorized into two classes: 244 VFs classified as non-glaucoma and 2,279 VFs classified as glaucoma.

In the white-on-white 24-2 test pattern of the HFA, total deviation quantifies the degree to which a patient’s visual sensitivity at various points in the VF deviates from the expected norms established from a database of healthy individuals. Visual sensitivity is expressed in dB: positive values indicate better-than-expected sensitivity, while negative values indicate worse sensitivity compared with age-matched norms. The total deviation results are presented in a color-coded map that displays the HVF plotting points. The plotting points are converted into mapping images that assist ophthalmologists in identifying potential abnormalities or defects in the VF (27,28). An example of a total deviation plot can be seen in Figure 2, marked within the red box.

Pre-processing

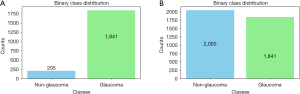

Initially, the VF dataset contained only 205 samples representing non-glaucoma cases, whereas the glaucoma class was significantly larger, with 1,841 samples. To create a balanced dataset and prevent the anomaly autoencoder from exhibiting bias towards the majority class, oversampling was applied to generate additional data points for the non-glaucoma class (29). Oversampling balances a dataset either by duplicating existing samples or by generating synthetic ones that closely resemble the original non-glaucoma data (29). Consequently, the non-glaucoma class was expanded to 2,050 samples, while the glaucoma class remained at 1,841 samples. This balanced dataset facilitated more equitable training of the model, enhancing its ability to differentiate between glaucoma and non-glaucoma cases and thereby improving overall classification performance. The original and oversampled distributions are illustrated in Figure 3.
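The duplication variant of oversampling can be sketched as follows. The source does not state which oversampling algorithm was used, so this example assumes simple random duplication with replacement; the function name `oversample_minority` and the toy samples are hypothetical.

```python
import random


def oversample_minority(samples, target_size, seed=0):
    """Grow a minority class to target_size by duplicating randomly chosen samples."""
    if target_size < len(samples):
        raise ValueError("target_size must be at least the current class size")
    rng = random.Random(seed)
    extra = [rng.choice(samples) for _ in range(target_size - len(samples))]
    # Keep every original sample and append the duplicates after them.
    return list(samples) + extra


# Hypothetical non-glaucoma samples (each a short vector of total deviation values).
minority = [[-1.0, 0.5], [0.0, 2.0], [-3.5, -0.5]]
balanced = oversample_minority(minority, target_size=30)
print(len(balanced))  # 30
```

Duplicates should be created only from the training portion of the data if the test set is meant to stay free of repeated samples.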

VF testing records each patient’s total deviation values along with the x-axis and y-axis coordinates of the VF mapping points in the patient’s field of vision. After oversampling, the VF dataset is standardized using the z-score technique (30). Z-score standardization is an essential pre-processing step for accurate and efficient identification of glaucoma and its progression from the VF dataset. Eq. [1] is used to determine the z-score of a total deviation value in the VF dataset.

$$
z = \frac{x - \mu}{\sigma} \tag{1}
$$

where z = the standardized value (z-score) of the data point; x = the original value of the data point; µ = the mean (average) of the entire dataset; σ = the standard deviation of the entire dataset.
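A minimal sketch of z-score standardization follows, using only the Python standard library. The use of the population standard deviation (`pstdev`) is an assumption, since the source says only "the standard deviation of the entire dataset"; the total deviation values are hypothetical.

```python
from statistics import fmean, pstdev


def z_score_standardize(values):
    """Standardize values to zero mean and unit (population) standard deviation."""
    mu = fmean(values)
    sigma = pstdev(values)  # assumption: population standard deviation
    if sigma == 0:
        raise ValueError("standard deviation is zero; values cannot be standardized")
    return [(x - mu) / sigma for x in values]


# Hypothetical total deviation values in dB.
total_deviation = [-2.0, 0.0, 1.0, -7.0, -12.0]
z = z_score_standardize(total_deviation)
```

After this step the values have a mean of zero and a standard deviation of one, so negative and positive deviations are centred around zero.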

The VF dataset consists of a variety of tests or assessments, each of which may have a different scale and units for mapping the area affected by vision loss. Standardization is therefore required, since it transforms the data to have a mean of zero and a standard deviation of one. Z-score standardization offers several advantages that improve the accuracy of the detection process (30). First, it ensures that every feature is treated equally regardless of its original magnitude, which prevents any single feature from dominating the learning process (30).

Second, it encourages better convergence of the model, increasing the stability and efficiency of optimization. By scaling the data in terms of standard deviations from the mean, it also makes relative magnitudes easier to understand and improves interpretability (30). In addition, it makes features easier to compare, which helps when assessing feature relevance. Finally, standardization may occasionally be required to satisfy the statistical assumptions of particular techniques (30,31).

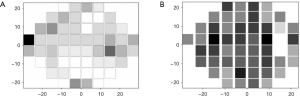

After pre-processing, the mapping points from VF testing are plotted as VF images based on their x-axis and y-axis coordinates. Each VF is rendered as a grayscale heatmap image that incorporates the pre-processed total deviation values. In the heatmap, brighter regions indicate areas the patient can see and darker regions indicate areas where defects have occurred. Figure 4 shows the heatmap plot of a VF defect image.

Autoencoder anomaly detection on glaucoma detection

Autoencoder architecture

The autoencoder architecture contains three parts: an encoder, a latent space, and a decoder (32). The encoder (8) is responsible for extracting relevant features from the input dataset, which in this case is the VF image. Its role is to reduce the spatial dimensions of the input data while increasing the number of features (8).

The second part is the latent space (8), an important component that holds a compressed and abstract feature representation of the input VF dataset. The dimensionality of the latent space is set by a hyperparameter of the layers. To guarantee dimensionality reduction, the size of the latent space should be smaller than the size of the original data. A larger latent space may be less effective at capturing abstract representations, whereas a smaller latent space may be better at capturing high-level properties at the expense of losing detail (8).

The last part is the decoder (8), which is designed to reconstruct the input from the latent representation. It often mirrors the architecture of the encoder in reverse. The output of the decoder should ideally be as close as possible to the input image, effectively “learning” to recreate the normal patterns present in the dataset (8).

The autoencoder framework shown in Figure 5 is designed with a bottleneck layer for the latent space, which has fewer neurons than the encoder and decoder parts. This bottleneck layer forces the autoencoder to learn a compressed representation of the input data, capturing its essential features while discarding less important details.

The size of the bottleneck layer is a hyperparameter that determines the level of compression and should be chosen carefully based on the specific requirements of the problem at hand. The autoencoder reconstructs the VF dataset to obtain the loss for each VF image, from which the anomaly score (33) is calculated to classify between glaucoma and non-glaucoma and then to identify the progression of the defect in the VF image based on the MSE value.

Anomaly detection

For glaucoma detection using the anomaly autoencoder, anomaly scores (33) are used to classify VFs as glaucoma or non-glaucoma by selecting an appropriate threshold. An anomaly score is computed from the difference between an input image and its reconstructed counterpart (33). During the training phase of the anomaly autoencoder, anomaly scores are computed to assess the degree of deviation between normal VF patterns and VF defect patterns by calculating the differences between the original and reconstructed VF dataset.

To obtain the anomaly score used as the threshold between glaucoma and non-glaucoma, one commonly used metric is the mean squared error (MSE), a loss function that calculates the average squared difference between pixel values in the original and reconstructed VF images (5). When comparing the original and reconstructed VF images, a higher MSE usually indicates a larger degree of abnormality.
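The per-image MSE anomaly score can be sketched with NumPy as follows. The function name `reconstruction_mse` and the toy arrays are illustrative assumptions; each row stands for one flattened VF image.

```python
import numpy as np


def reconstruction_mse(original, reconstructed):
    """Return one MSE value per row (per flattened image)."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    # Average the squared pixel differences over the feature axis.
    return np.mean((original - reconstructed) ** 2, axis=1)


# Hypothetical flattened images: the second one is reconstructed poorly at one pixel.
original = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
reconstructed = [[0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 3.0, 1.0]]
scores = reconstruction_mse(original, reconstructed)
print(scores)  # [0. 1.]
```

The second image receives the higher score because one of its four pixels differs by 2, giving a squared error of 4 averaged over 4 pixels.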

Another valuable metric for obtaining an anomaly score is the Structural Similarity Index (SSI) (34), which assesses the structural similarity between the original and reconstructed images based on factors such as luminance, contrast, and structure. In this study, however, MSE is used as the threshold for distinguishing glaucoma from non-glaucoma and for identifying its progression, since this measure has frequently been used in previous methods (5,33).

Implementation

To implement the anomaly autoencoder, the layers and hyperparameters must be considered carefully, as with other DL methods, to ensure that feature learning is effective and accurate (35-37). Because the decoder of an autoencoder performs reconstruction, the features learned by the encoder during training must help the decoder to reconstruct the VF dataset as well as possible and to obtain the best threshold for glaucoma detection.

The model’s overall performance depends on its ability to capture significant features and generate correct reconstructions, both of which are controlled by the model’s layers and hyperparameters. In an autoencoder, the decoder must mirror the encoder with the same layers in reverse order, and suitable layers must be chosen carefully so that the MSE threshold yields the best anomaly score and the proposed method achieves high accuracy in classifying glaucoma and non-glaucoma (33).

Therefore, the layers of the anomaly autoencoder are custom-built as a sequential neural network (NN), since DL is based on several layers of NNs (12). In this work, the encoder has three layers: an input layer followed by two dense layers. The input layer expects a vector of 3,721 features, matching the number of VF mapping points after oversampling.

The first dense layer has 5,000 units and uses the hyperbolic tangent (Tanh) activation function (38,39). Tanh is chosen because it centres the data around zero: the VF dataset is in grayscale, the mapping points of each image are divided into quartiles to identify where the defect starts to occur, and the mapping points take both negative and positive values, which aligns with the output range of the Tanh activation function, as shown in Figure 6.

The second dense layer has ‘latent_dim’ units and represents the latent space of the anomaly autoencoder, capturing the compressed representation of the input data. This layer uses the same activation function as the first dense layer (40,41). The decoder network is then created to reconstruct the VF dataset from the features extracted and compressed by the encoder and latent space. The output layer of the decoder is configured with 3,721 neurons, since it needs to reconstruct the same features as the input. A linear activation function is set for the output layer because it ensures accurate and straightforward reconstruction of real-valued VF defect data (38).

In this study, the Adam optimizer (42,43) is used with MSE as the loss function to construct the anomaly score threshold for glaucoma detection. The batch size accelerates training by dividing the dataset into smaller groups; however, a larger batch size (44) requires more memory. The number of epochs (45) determines how many passes the learning process makes over the dataset. A higher number of epochs can lead to better performance, but if the training dataset is insufficient for the model, it can cause overfitting, where the training MSE keeps decreasing while the error on unseen data no longer improves.

In this study, a batch size of 32 is set, as previous VF analysis studies have shown that VF datasets perform better with this batch size (37). Furthermore, 100 epochs are set to ensure that the minimum MSE is reached, which helps to reduce the reconstruction error for the VF dataset. Although this study used the Keras framework (46) to construct the anomaly autoencoder, selecting appropriate layers and hyperparameters for the architecture remains important to ensure that the MSE is minimized. This careful selection is needed to achieve high accuracy with the chosen threshold for glaucoma detection and for identifying its progression.
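The layer stack and training settings described in this section can be sketched in Keras as follows. This is a minimal sketch, not the authors’ code. The source specifies the 3,721-feature input, the 5,000-unit Tanh layer, the `latent_dim` layer, the 3,721-unit linear output, Adam, MSE, a batch size of 32, and 100 epochs. The default `latent_dim=64`, the mirrored 5,000-unit decoder layer, and the function name `build_anomaly_autoencoder` are assumptions made for illustration.

```python
from tensorflow import keras


def build_anomaly_autoencoder(input_dim=3721, hidden_units=5000, latent_dim=64):
    """Build a dense autoencoder with Tanh hidden layers and a linear output."""
    model = keras.Sequential(
        [
            keras.Input(shape=(input_dim,)),
            # Encoder: first dense layer with Tanh activation.
            keras.layers.Dense(hidden_units, activation="tanh"),
            # Latent space (bottleneck); latent_dim=64 is an assumed value.
            keras.layers.Dense(latent_dim, activation="tanh", name="latent"),
            # Decoder: mirrored hidden layer (assumption, not stated in the source).
            keras.layers.Dense(hidden_units, activation="tanh"),
            # Output layer reconstructs the input features with a linear activation.
            keras.layers.Dense(input_dim, activation="linear"),
        ],
        name="anomaly_autoencoder",
    )
    model.compile(optimizer="adam", loss="mse")
    return model


# Usage sketch (x_train is a hypothetical array of shape [n_samples, 3721]):
# model = build_anomaly_autoencoder()
# model.fit(x_train, x_train, epochs=100, batch_size=32)
```

The input is passed as both the features and the target in `fit`, because an autoencoder is trained to reproduce its own input.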

Validation

To evaluate the effectiveness of the anomaly autoencoder in VF defect detection, the 10% testing dataset is used to assess the model’s ability to differentiate between glaucoma and non-glaucoma. The receiver operating characteristic (ROC) area under the curve (AUC) (47) is a key metric for this evaluation and is commonly used for binary classification tasks. The threshold value obtained during the training process is used to assess the model’s effectiveness in differentiating between patterns associated with glaucoma and those that are not when the VF image is reconstructed by the decoder. The ROC AUC offers a comprehensive view of the model’s performance, showing how well it balances correctly identifying true positives (glaucoma cases) against avoiding false positives (non-glaucoma cases flagged as glaucoma) (47). The ROC AUC can be calculated using Eqs. [2-4].

$$
\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR} \, d(\mathrm{FPR}) \tag{2}
$$

where, in Eq. [2], the true positive rate (TPR), also known as sensitivity, is calculated as:

$$
\mathrm{TPR} = \frac{TP}{TP + FN} \tag{3}
$$

The false positive rate (FPR) is calculated as:

$$
\mathrm{FPR} = \frac{FP}{FP + TN} \tag{4}
$$

where true positives (TP) = the number of correctly identified anomalies; false negatives (FN) = the number of actual anomalies that were not detected; false positives (FP) = the number of non-anomalies that were incorrectly classified as anomalies; true negatives (TN) = the number of correctly identified non-anomalies.
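The TPR, FPR, and ROC AUC definitions can be sketched in plain Python as follows. The function names `rates` and `roc_auc`, the convention that scores at or above the threshold count as anomalies, and the toy labels and scores are assumptions for illustration. Both classes must be present, otherwise a rate is undefined.

```python
def rates(y_true, scores, threshold):
    """Return (TPR, FPR) when scores at or above the threshold count as anomalies."""
    tp = fn = fp = tn = 0
    for y, s in zip(y_true, scores):
        predicted_positive = s >= threshold
        if y == 1 and predicted_positive:
            tp += 1
        elif y == 1:
            fn += 1
        elif predicted_positive:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), fp / (fp + tn)


def roc_auc(y_true, scores):
    """Area under the ROC curve by the trapezoidal rule over all distinct thresholds."""
    points = [(0.0, 0.0)]  # (FPR, TPR) when nothing is flagged as an anomaly
    for threshold in sorted(set(scores), reverse=True):
        tpr, fpr = rates(y_true, scores, threshold)
        points.append((fpr, tpr))
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2
    return area


# Hypothetical labels (1 = glaucoma) and anomaly scores.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(y_true, scores)
print(auc)  # 0.75
```

Sweeping the threshold from the highest score down to the lowest traces the ROC curve from (0, 0) to (1, 1), and the trapezoidal sum approximates the integral of TPR over FPR.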

Statistical analysis

In the statistical analysis of glaucoma detection using the anomaly autoencoder, the MSE is used to obtain the threshold (anomaly score) that differentiates between glaucoma and non-glaucoma cases. The anomaly autoencoder is trained on the VF dataset to minimize the reconstruction error on glaucoma and non-glaucoma images, producing MSE values that reflect how far each reconstruction departs from the original. VFs with MSE values exceeding the threshold are categorized as glaucoma, while those below it are categorized as non-glaucoma. The effectiveness of this classification is evaluated through several metrics: precision, recall, F1-score, and ROC AUC.
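The thresholding rule and the resulting metrics can be sketched as follows. The function names and the six MSE values are hypothetical; the threshold of 0.157 is used only as an example input.

```python
def classify(mse_values, threshold):
    """Label a sample 1 (glaucoma) if its MSE exceeds the threshold, else 0."""
    return [1 if m > threshold else 0 for m in mse_values]


def precision_recall_f1(y_true, y_pred):
    """Compute precision, recall, and F1-score for the positive (glaucoma) class."""
    tp = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 1)
    fp = sum(1 for y, p in zip(y_true, y_pred) if y == 0 and p == 1)
    fn = sum(1 for y, p in zip(y_true, y_pred) if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Hypothetical per-sample MSE values and true labels (1 = glaucoma).
mse_values = [0.05, 0.12, 0.20, 0.33, 0.90, 0.10]
y_true = [0, 0, 1, 1, 1, 1]
y_pred = classify(mse_values, threshold=0.157)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
```

In this toy example the last glaucoma sample has an MSE of 0.10, below the threshold, so it is missed: precision is 1.0 while recall drops to 0.75.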

Results

This section presents the performance of the anomaly autoencoder applied to the VF dataset, with a focus on comparing its detection accuracy with that of other unsupervised methods. For this evaluation, the VF dataset is divided into a 90% training and 10% testing split. The algorithm was implemented in Python using the Keras framework with a TensorFlow backend for NN computations. It was executed on a machine with an Intel Core i7-10 processor, 8 GB of RAM, and an RTX 2080 GPU for accelerated processing.

MSE value of anomaly detection

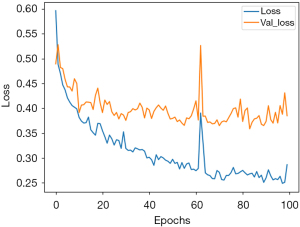

In this study, the MSE value is used to derive the anomaly score for glaucoma cases and to identify the progression of defect patterns in the VF images. Figure 7 plots the MSE loss against epoch for glaucoma anomaly detection in the VF dataset during training. When identifying the best threshold for glaucoma, the MSE across epochs serves as an important indicator of the convergence of the anomaly autoencoder and of the quality of VF image reconstruction.

When the original VF images are compared with the reconstructed VF images across various types of defect patterns, the MSE provides the anomaly score from which the threshold for classifying VF images as glaucoma is determined. Typically, calculating the anomaly score relies on a statistical analysis of MSE against epoch, and the threshold can be adjusted to achieve the desired balance between false positives and false negatives for the defect patterns occurring in VF images.

The statistical analysis of the MSE loss obtained during training is used to find an appropriate threshold (anomaly score) for anomaly detection. It comprises a thorough examination of the MSE distribution during training, including the calculation of both the mean and the standard deviation of the MSE across epochs. The mean MSE measures the average dissimilarity between the reconstructed VF images and their originals, while the standard deviation quantifies the spread of these scores across the dataset. The threshold is then chosen from the mean and standard deviation of the MSE.

The MSE statistics from the training process are summarized in Table 1. The table reports the count, mean, standard deviation, minimum, maximum, and quartiles of the reconstruction error and the true class. For the true class, the minimum, maximum, and quartiles take only the values 1 and 0 because the samples are labeled as glaucoma and non-glaucoma, respectively. The reconstruction error is expressed as MSE values used to identify the progression of glaucoma, with the maximum training MSE reaching 5.42, indicating the most severe defect. These statistics are derived from 100 epochs of training.

Table 1

| Statistic | Reconstruction error | True class |
|---|---|---|
| Count | 3,502 | 3,501 |
| Mean | 0.30 | 0.47 |
| Standard deviation | 0.31 | 0.50 |
| Minimum | 0.05 | 0 |
| 25% quartile | 0.14 | 0 |
| 50% quartile | 0.21 | 0 |
| 75% quartile | 0.33 | 1 |
| Maximum | 5.42 | 1 |

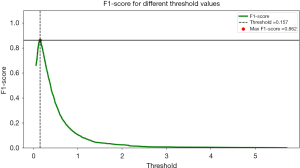

The threshold value of 0.157 was determined to be the best decision boundary for the anomaly score in this glaucoma study. A VF image with an anomaly score above the threshold is detected as glaucoma; one below the threshold is detected as non-glaucoma. At this threshold, the model achieved its maximum F1-score of 0.862, indicating a strong balance between recall and precision. An F1-score of 0.862 indicates that the model identifies a substantial proportion of glaucoma cases while maintaining a relatively low false positive rate. The model is therefore adept at distinguishing glaucoma from non-glaucoma cases, in line with the specific objectives of glaucoma detection.

However, the threshold can be tuned to suit particular requirements. In situations where minimizing false alarms is critical, a higher threshold can be chosen to prioritize precision, with a potential trade-off in recall. The F1-score versus threshold curve, shown in Figure 8, supports informed decision-making by showing the model’s performance at different threshold levels, catering to the specific needs of glaucoma detection.
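Selecting the threshold that maximizes the F1-score can be sketched as a simple sweep over candidate values. The function names, candidate thresholds, and data are hypothetical and do not reproduce the study’s results.

```python
def f1_at_threshold(y_true, mse_values, threshold):
    """F1-score when samples with MSE above the threshold are labeled glaucoma."""
    tp = fp = fn = 0
    for y, m in zip(y_true, mse_values):
        predicted_positive = m > threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive:
            fp += 1
        elif y == 1:
            fn += 1
    denominator = 2 * tp + fp + fn  # F1 = 2TP / (2TP + FP + FN)
    return 2 * tp / denominator if denominator else 0.0


def best_f1_threshold(y_true, mse_values, candidates):
    """Return the candidate threshold with the highest F1-score."""
    return max(candidates, key=lambda t: f1_at_threshold(y_true, mse_values, t))


# Hypothetical labels (1 = glaucoma) and per-sample MSE values.
y_true = [0, 0, 0, 1, 1, 1]
mse_values = [0.05, 0.10, 0.20, 0.15, 0.30, 0.90]
candidates = [0.00, 0.12, 0.25, 0.50]
best = best_f1_threshold(y_true, mse_values, candidates)
print(best)  # 0.12
```

Raising the threshold from 0.12 to 0.25 removes the one false positive but also misses a glaucoma sample, which is the precision versus recall trade-off described in the text.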

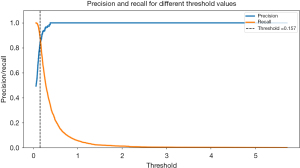

The performance of glaucoma detection can be visualized through the precision and recall curves shown in Figure 9. The curves intersect at a particular threshold where both reach approximately 0.9 on the testing dataset, which is a significant achievement for an autoencoder-based anomaly detection model. In this study, the threshold obtained is 0.157, and it serves as the decision point on the anomaly score: a VF is classified as glaucoma if its anomaly score is above the threshold and as non-glaucoma if its anomaly score is below it. Precision, which measures the accuracy of positive predictions, stands at 0.9, indicating that when the model predicts glaucoma it is correct 90% of the time, instilling confidence in its positive classifications.

Meanwhile, recall, a measure of the model’s capacity to recognize real cases of glaucoma, is likewise at 0.9, indicating that it captures 90% of all occurrences of glaucoma. This intersection demonstrates how well the model balances precision (reducing false positives) and recall (minimizing false negatives), a highly regarded accomplishment, particularly in fields where reducing false alarms and guaranteeing thorough detection of positive cases are both important. These results have significant clinical implications, indicating that the model is effective in reliably and accurately detecting cases of glaucoma, an essential characteristic in medical decision-making.

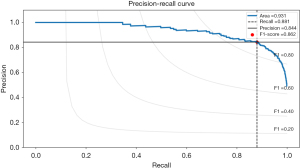

In addition, the precision-recall curve provides valuable insights into the performance of an autoencoder model, particularly for anomaly detection tasks such as glaucoma classification. The autoencoder achieves a ROC AUC of 0.931. A ROC AUC close to 1.0 means that the model maintains a high true positive rate at a low false positive rate across a range of threshold values. A recall of 0.881 implies that the model identifies 88.1% of all glaucoma cases, demonstrating sensitivity to positive cases.

Accompanying this, a precision of 0.844 indicates that when the model predicts glaucoma, it is correct 84.4% of the time, limiting false positives. Furthermore, the F1-score of 0.862 underscores the model’s robustness in correctly classifying glaucoma cases while mitigating both false positives and false negatives. Overall, these metrics highlight the model’s effectiveness in glaucoma detection, providing a balanced and reliable performance, which is critical in medical applications where accurate glaucoma identification and minimizing misdiagnosis are paramount. The overall performance of the autoencoder on the VF dataset is shown in Figure 10.
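As a consistency check, the reported F1-score follows from the reported precision and recall through the standard harmonic-mean definition:

```latex
F_1 = \frac{2 \cdot \text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}
    = \frac{2 \times 0.844 \times 0.881}{0.844 + 0.881}
    = \frac{1.487128}{1.725}
    \approx 0.862
```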

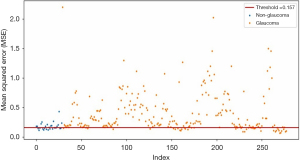

The progression of glaucoma is shown in the scatter plot of VF defect MSE against index ID, with a threshold of 0.157 for glaucoma detection. The scatter plot displays the testing points of the VF dataset, providing a graphical representation of the model’s performance. Points below the threshold line indicate non-glaucoma cases, while points above the threshold line indicate glaucoma. The points above the threshold range from 0.157 to 2, representing the progression of glaucoma. From the scatter plot, it can be concluded that the higher the MSE value, the more severe the glaucomatous defect.

Each point on the scatter plot corresponds to an individual VF in the dataset, with the x-axis indicating the index ID (the order of the data points) and the y-axis representing the MSE value calculated by the anomaly autoencoder during reconstruction of that VF. In conclusion, this scatter plot is an effective tool for testing and improving the autoencoder-based anomaly detection model, enabling a visual assessment of its capacity to distinguish between normal and glaucomatous VF patterns. The plot of glaucoma progression is shown in Figure 11.

Comparison analysis

In this study, the proposed method is also compared with previous studies. Most previous studies, however, used autoencoders to improve the pre-processing stage of glaucoma versus non-glaucoma classification, typically on fundus image datasets. Mandal et al. (21) reported an accuracy of 88.5%, and Sathya and Balamurugan (22) achieved an accuracy of 89%. The proposed method shows the highest accuracy because the fundus dataset is more complex than the VF dataset. The comparison between the previous studies and the proposed study is summarized in Table 2.

Therefore, the dataset used for the proposed method was also applied to the methods of previous studies, and unsupervised methods that have already been applied in glaucoma studies were compared with the proposed method. When comparing glaucoma detection techniques, it is critical to choose the best strategy based on the specific goals and assessment criteria of the study. Several methods have been used in glaucoma studies for different purposes. The selected unsupervised methods were therefore trained and tested on this study's dataset and compared with the proposed autoencoder-based anomaly detection method. The selected methods are principal component analysis (PCA), the Gaussian mixture model (GMM), and the VAE from a previous method applied to a fundus dataset.

PCA, whose pseudocode is given in Table S1, is a linear dimensionality reduction technique. It achieves a precision of 0.9919, highlighting its ability to accurately identify positive glaucoma cases. However, its recall is only 0.494, indicating that it misses about half of the glaucoma cases. The trade-off between recall and precision is important, especially in medical applications where false negatives and false positives have different implications. PCA may therefore be appropriate when precision is of the utmost importance and missed glaucoma cases are less costly.

GMM, whose pseudocode is given in Table S2, achieves an ROC AUC of 0.7971, reflecting its ability to discern between glaucoma and non-glaucoma cases. More significantly, GMM produces a robust F1-score of 0.8520 by striking a good balance between precision (0.9645) and recall (0.7671). This balanced performance makes GMM a suitable option when a trade-off between recall and precision is desired. Identifying the majority of glaucoma cases in patients' eyes with high accuracy is important because it can help to reduce the time required for glaucoma diagnosis.

VAE, whose pseudocode is given in Table S3, delivers competitive performance with an ROC AUC of 0.7518 and a high precision of 0.9781. However, its recall is comparatively low at 0.5381, leading to an F1-score of 0.6943. Although not as well balanced as GMM, this represents a compromise between precision and recall. VAE may be the better option when precision is important and somewhat lower recall is acceptable.

Lastly, the “proposed method”, autoencoder-based anomaly detection, whose pseudocode is given in Table S4, surpasses the others with the highest ROC AUC of 0.931. The method not only excels in separating glaucoma from non-glaucoma cases but also achieves a good balance between precision (0.844) and recall (0.881), resulting in the highest F1-score of 0.862. It therefore obtained the highest overall performance when compared with PCA, GMM, and VAE. Because the goal in distinguishing glaucoma from non-glaucoma is to strike a balance between high precision and high recall, the method is particularly promising in glaucoma detection scenarios where both are desired.

In conclusion, the selection of the best method for glaucoma detection should be made with meticulous consideration of the specific objectives and the trade-offs between precision and recall. Depending on the application’s requirements and the consequences of false positives and false negatives, any of these methods may prove suitable. However, the “proposed method” emerges as a robust choice for achieving a desirable balance between precision and recall while maintaining strong overall performance in glaucoma detection. The comparison between PCA, GMM, VAE and autoencoder-based anomaly detection is shown in Table 3.

Table 3

| Method | ROC AUC | Precision | Recall | F1-score |
|---|---|---|---|---|
| PCA | 0.6730 | 0.9919 | 0.494 | 0.6595 |
| GMM | 0.7971 | 0.9645 | 0.7671 | 0.8520 |
| VAE (previous method) | 0.7518 | 0.9781 | 0.5381 | 0.6943 |
| Anomaly autoencoder (proposed method) | 0.9310 | 0.8440 | 0.8810 | 0.8620 |

AUC, area under the curve; GMM, Gaussian mixture model; PCA, principal component analysis; ROC, receiver operating characteristic; VAE, variational autoencoder; VF, visual field.
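To make the ROC AUC column easier to interpret, the sketch below computes ROC AUC from anomaly scores using its rank-based definition: the probability that a randomly chosen glaucoma sample receives a higher score than a randomly chosen non-glaucoma sample, with ties counted as one half. The labels and scores are hypothetical toy values, and the function name `roc_auc` is chosen for illustration; this is not the authors' evaluation code.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as the fraction of (positive, negative) pairs ranked correctly."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))


# Hypothetical labels (1 = glaucoma) and anomaly scores (e.g., reconstruction MSE).
toy_labels = [0, 0, 0, 1, 1, 1]
toy_scores = [0.05, 0.10, 0.30, 0.12, 0.40, 0.90]
auc = roc_auc(toy_labels, toy_scores)
print(round(auc, 3))  # 0.889
```

Here 8 of the 9 positive-negative pairs are ordered correctly, so the AUC is 8/9. A value of 0.5 would correspond to random ranking and 1.0 to perfect separation.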

Discussion

The anomaly autoencoder model demonstrated superior performance compared with PCA, GMM, and VAE, particularly in the context of VF defect analysis. Because the anomaly autoencoder is based on the autoencoder architecture, it performs better than the other unsupervised methods. Autoencoders excel at extracting complex representations and effectively capture both linear and non-linear patterns in the input data. This matters here because the VF dataset is plotted from the test point map obtained using HVF, which is composed of standard deviation values.

Unlike PCA, which struggles with its linear constraints when dealing with intricate and gradual defect patterns in the VF dataset, the anomaly autoencoder can handle these complexities better. Similarly, the GMM has limitations in modeling the intricate structures within the VF dataset, as it assumes that the data are generated from a mixture of several Gaussian distributions. This assumption often falls short when dealing with the non-Gaussian and complex nature of VF defects.

On the other hand, VAEs tend to underperform in anomaly detection tasks because the model prioritizes learning a smooth latent space representation, which might not be optimal for identifying subtle deviations in VF patterns. The proposed method is therefore better suited to detecting anomalies in the VF dataset, since it focuses on minimizing the reconstruction error during training, which makes the model more sensitive to anomalies.

For anomaly autoencoders, the threshold is determined from the reconstruction error, often requiring statistical techniques or ROC curve analysis to balance precision and recall, so that the detection of glaucoma and non-glaucoma is balanced and higher accuracy is achieved. In contrast, PCA relies on the Mahalanobis distance, GMM models the normal data distribution, and VAE uses a probabilistic framework for threshold setting. The choice of threshold affects the balance between true positives and false positives, emphasizing the need for careful calibration based on the specific application and the acceptable error rates.
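One way such a calibration could be carried out is sketched below: each observed reconstruction error is tried as a candidate threshold, and the one giving the highest F1-score is kept. The text does not state which selection criterion the authors used, so maximizing F1 is an assumption made here for illustration, and the labels, scores, and function names are hypothetical.

```python
from typing import Sequence


def f1_at(labels: Sequence[int], scores: Sequence[float], threshold: float) -> float:
    """F1-score when samples with score > threshold are predicted positive."""
    tp = fp = fn = 0
    for y, s in zip(labels, scores):
        predicted_positive = s > threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive and y == 0:
            fp += 1
        elif not predicted_positive and y == 1:
            fn += 1
    denominator = 2 * tp + fp + fn
    return 2 * tp / denominator if denominator else 0.0


def best_threshold(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Return the observed score that maximizes F1 when used as the threshold."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: f1_at(labels, scores, t))


# Hypothetical validation labels (1 = glaucoma) and reconstruction errors.
val_labels = [0, 0, 0, 1, 1, 1]
val_scores = [0.05, 0.10, 0.30, 0.12, 0.40, 0.90]

chosen = best_threshold(val_labels, val_scores)
print(chosen, round(f1_at(val_labels, val_scores, chosen), 3))  # 0.1 0.857
```

Other criteria, such as Youden's J statistic on the ROC curve or a fixed percentile of the reconstruction errors of normal samples, would fit the same scanning structure. The appropriate choice depends on the relative cost of false positives and false negatives.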

Conclusions

This study highlights the effectiveness of the autoencoder-based anomaly detection approach called the anomaly autoencoder, which achieved superior performance compared with PCA, GMM, and VAE. The proposed method achieved a high ROC AUC of 0.931, reflecting its strong overall performance in differentiating between glaucoma and non-glaucoma cases. Additionally, the autoencoder approach achieved a notable balance between precision (84.4%) and recall (88.1%), resulting in the highest F1-score (86.2%) among the methods evaluated. These results underscore the potential of autoencoder-based anomaly detection to significantly enhance glaucoma detection capabilities.

Acknowledgments

Fundamental Research Grant Scheme (FRGS900300646 1/2018) supported this work. We are grateful to our colleagues who have provided expertise that has greatly assisted the research, although they may or may not agree with all of the interpretations contained in this paper. We are also thankful to Center of Excellence for Advanced Computing under Faculty of Electronic Engineering & Technology, Universiti Malaysia Perlis, which provided laboratory facilities during this work.

Footnote

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-179/prf

Funding: This research was funded by

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-179/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the study in ensuring that questions related to the accuracy or integrity of any part of the study are appropriately investigated and resolved. IRB approval and informed consent are not applicable since the data were obtained from a public database.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Rusdi R, Isa MZA. Patterns of visual field defects in Malay population with myopic eyes. Malaysian Journal of Medicine and Health Sciences 2020;16:101-5.

- Ameen Ismail A, Sadek SH, Kamal MA, et al. Association of Postural Blood Pressure Response With Disease Severity in Primary Open Angle Glaucoma. J Glaucoma 2024;33:225-39. [Crossref] [PubMed]

- Huang J, Galal G, Mukhin V, et al. Prediction and Detection of Glaucomatous Visual Field Progression Using Deep Learning on Macular Optical Coherence Tomography. J Glaucoma 2024;33:246-53. [Crossref] [PubMed]

- Berchuck SI, Mukherjee S, Medeiros FA. Estimating Rates of Progression and Predicting Future Visual Fields in Glaucoma Using a Deep Variational Autoencoder. Sci Rep 2019;9:18113. [Crossref] [PubMed]

- Pham QTM, Han JC, Park DY, et al. Multimodal Deep Learning Model of Predicting Future Visual Field for Glaucoma Patients. IEEE Access 2022;11:19049-58.

- Allison K, Patel D, Alabi O. Epidemiology of Glaucoma: The Past, Present, and Predictions for the Future. Cureus 2020;12:e11686. [Crossref] [PubMed]

- Li Z, Dong M, Wen S, et al. CLU-CNNs: Object detection for medical images. Neurocomputing 2019;350:53-9. [Crossref]

- Asaoka R, Murata H, Asano S, et al. The usefulness of the Deep Learning method of variational autoencoder to reduce measurement noise in glaucomatous visual fields. Sci Rep 2020;10:7893. [Crossref] [PubMed]

- Asaoka R, Murata H. Prediction of visual field progression in glaucoma: existing methods and artificial intelligence. Jpn J Ophthalmol 2023;67:546-59. [Crossref] [PubMed]

- Purves D, Augustine GJ, Fitzpatrick D, et al. editors. Neuroscience. 2nd edition. Sunderland, MA, USA: Sinauer Associates; 2001.

- Bertacchi M, Parisot J, Studer M. The pleiotropic transcriptional regulator COUP-TFI plays multiple roles in neural development and disease. Brain Res 2019;1705:75-94. [Crossref] [PubMed]

- Kucur ŞS, Holló G, Sznitman R. A deep learning approach to automatic detection of early glaucoma from visual fields. PLoS One 2018;13:e0206081. [Crossref] [PubMed]

- Park K, Kim J, Lee J. Visual Field Prediction using Recurrent Neural Network. Sci Rep 2019;9:8385. [Crossref] [PubMed]

- Abu M, Zahri NAH, Amir A, et al. Classification of Multiple Visual Field Defects using Deep Learning. Journal of Physics: Conference Series 2021;1755:012014. [Crossref]

- Asaoka R, Murata H, Matsuura M, et al. Improving the Structure-Function Relationship in Glaucomatous Visual Fields by Using a Deep Learning-Based Noise Reduction Approach. Ophthalmol Glaucoma 2020;3:210-7. [Crossref] [PubMed]

- Sugisaki K, Asaoka R, Inoue T, et al. Predicting Humphrey 10-2 visual field from 24-2 visual field in eyes with advanced glaucoma. Br J Ophthalmol 2020;104:642-7. [Crossref] [PubMed]

- Teng B, Li D, Choi EY, et al. Inter-Eye Association of Visual Field Defects in Glaucoma and Its Clinical Utility. Transl Vis Sci Technol 2020;9:22. [Crossref] [PubMed]

- Li F, Song D, Chen H, et al. Development and clinical deployment of a smartphone-based visual field deep learning system for glaucoma detection. NPJ Digit Med 2020;3:123. [Crossref] [PubMed]

- Tian Y, Zang M, Sharma A, et al. Glaucoma Progression Detection and Humphrey Visual Field Prediction Using Discriminative and Generative Vision Transformers. In: Antony B, Chen H, Fang H, et al. editors. Ophthalmic Medical Image Analysis 2023. Cham: Springer; 2023:62-71.

- Thirunavukarasu AJ, Jain N, Sanghera R, et al. A validated web-application (GFDC) for automatic classification of glaucomatous visual field defects using Hodapp-Parrish-Anderson criteria. NPJ Digit Med 2024;7:131. [Crossref] [PubMed]

- Mandal S, Jammal AA, Medeiros FA. Assessing glaucoma in retinal fundus photographs using Deep Feature Consistent Variational Autoencoders. 2021 [cited 2024 January 20]. Available online: https://doi.org/10.48550/arXiv.2110.01534

- Sathya R, Balamurugan P. Glaucoma Identification in Digital Fundus Images using Deep Learning Enhanced Auto Encoder Networks (DL-EAEN) for Accurate Diagnosis. Indian Journal of Science and Technology 2023;16:4026-37. [Crossref]

- Makala BP, Kumar DM. An efficient glaucoma prediction and classification integrating retinal fundus images and clinical data using DnCNN with machine learning algorithms. Results in Engineering 2025;25:104220. [Crossref]

- Aslam MM, Tufail A, De Silva LC, et al. An improved autoencoder-based approach for anomaly detection in industrial control systems. Systems Science & Control Engineering 2024;12:2334303. [Crossref]

- Sivakumar M, Parthasarathy S, Padmapriya T. Trade-off between training and testing ratio in machine learning for medical image processing. PeerJ Comput Sci 2024;10:e2245. [Crossref] [PubMed]

- Kucur SS. Early Glaucoma Identification (data set). GitHub 2018 [cited 2021 September 29]. Available online: https://github.com/serifeseda/early-glaucoma-identification

- Hashimoto Y, Kiwaki T, Sugiura H, et al. Predicting 10-2 Visual Field From Optical Coherence Tomography in Glaucoma Using Deep Learning Corrected With 24-2/30-2 Visual Field. Transl Vis Sci Technol 2021;10:28. [Crossref] [PubMed]

- Kahook MY, Noecker RJ. How Do You Interpret a 24-2 Humphrey Visual Field Printout? Glaucoma Today 2007;57-9. Available online.

- Deng M, Guo Y, Wang C, et al. An oversampling method for multi-class imbalanced data based on composite weights. PLoS One 2021;16:e0259227. [Crossref] [PubMed]

- E JY. Association Between Visual Field Damage and Gait Dysfunction in Patients With Glaucoma. JAMA Ophthalmol 2021;139:1053-60. [Crossref] [PubMed]

- Feldman K. Z-Score: A Handy Tool for Detecting Outliers in Data. 2023 [cited 2023 July 19]. Available online: https://www.isixsigma.com/dictionary/z-score/

- Esmaeili F, Cassie E, Nguyen HPT, et al. Anomaly Detection for Sensor Signals Utilizing Deep Learning Autoencoder-Based Neural Networks. Bioengineering (Basel) 2023;10:405. [Crossref] [PubMed]

- Jebril H, Esengönül M, Bogunović H. Anomaly Detection in Optical Coherence Tomography Angiography (OCTA) with a Vector-Quantized Variational Auto-Encoder (VQ-VAE). Bioengineering 2024;11:682. [Crossref] [PubMed]

- Kamimura R, Takeuchi H. Autoeconder-Based Excessive Information Generation for Improving and Interpreting Multi-layered Neural Networks. 2018 7th International Congress on Advanced Applied Informatics (IIAI-AAI). Yonago: IEEE; 2018:518-23.

- Kaur S, Aggarwal H, Rani R. Hyper-parameter optimization of deep learning model for prediction of Parkinson’s disease. Machine Vision and Applications 2020;31:32. [Crossref]

- Loey M, El-Sappagh S, Mirjalili S. Bayesian-based optimized deep learning model to detect COVID-19 patients using chest X-ray image data. Comput Biol Med 2022;142:105213. [Crossref] [PubMed]

- Abu M, Zahri NAH, Amir A, et al. Analysis of the Effectiveness of Metaheuristic Methods on Bayesian Optimization in the Classification of Visual Field Defects. Diagnostics (Basel) 2023;13:1946. [Crossref] [PubMed]

- Dubey SR, Singh SK, Chaudhuri BB. Activation functions in deep learning: A comprehensive survey and benchmark. Neurocomputing 2022;503:92-108. [Crossref]

- Liu X, Di X. TanhExp: A smooth activation function with high convergence speed for lightweight neural networks. IET Comput Vis 2021;15:136-50. [Crossref]

- Shaziya H, Zaheer R. Impact of Hyperparameters on Model Development in Deep Learning. In: Chaki N, Pejas J, Devarakonda N, et al. editors. Proceedings of International Conference on Computational Intelligence and Data Engineering. Singapore: Springer; 2021:57-67.

- Huang G, Liu Z, Van Der Maaten L, et al. Densely connected convolutional networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, HI, USA: IEEE; 2017:2261-9.

- Doshi S. Various Optimization Algorithms For Training Neural Network. Toward Data Science 2019 [cited 2023 July 20]. Available online: https://towardsdatascience.com/optimizers-for-training-neural-network-59450d71caf6

- Smith SL, Kindermans PJ, Ying C, et al. Don’t Decay the Learning Rate, Increase the Batch Size. ICLR 2018. 2018 [cited 2023 August 1]. Available online: https://doi.org/10.48550/arXiv.1711.00489

- Brownlee J. Difference Between a Batch and an Epoch in a Neural Network. Machine Learning Mastery 2022 [cited 2024 February 1]. Available online: https://machinelearningmastery.com/difference-between-a-batch-and-an-epoch/

- Chollet F. Introduction to Keras for Engineers. 2023 [cited 2023 February 1]. Available online: https://keras.io/getting_started/intro_to_keras_for_engineers/

- Hajian-Tilaki K. Receiver Operating Characteristic (ROC) Curve Analysis for Medical Diagnostic Test Evaluation. Caspian J Intern Med 2013;4:627-35. [PubMed]

- Ruia S, Tripathy K. Humphrey Visual Field. Treasure Island, FL, USA: StatPearls Publishing; 2023.

Cite this article as: Abu M, Zahri NAH, Amir A, Ismail MI, Yaakub A. An autoencoder-based approach for glaucoma anomaly detection in visual fields diagnosis. J Med Artif Intell 2025;8:31.