Evaluating the utility of using ChatGPT 3.5 to generate research ideas for non-operative spine medicine

Highlight box

Key findings

• Chat generative pretrained transformer (ChatGPT) was able to produce some new research ideas with clinical relevance in the non-operative spine medicine literature.

What is known and what is new?

• ChatGPT, like artificial intelligence more broadly, is evolving and has been shown to assist with different elements of the research process, including manuscript writing, editing, and generating research ideas in fields such as otolaryngology.

• This manuscript shows that ChatGPT can produce research ideas for non-operative spine medicine and that overall interest in its ideas did not differ significantly among review articles, retrospective studies, and prospective studies.

What is the implication, and what should change now?

• At present, investigators should be cautious when using ChatGPT for research idea generation within non-operative spine medicine. Future studies may demonstrate improvement with ChatGPT and investigate the benefit of providing additional context to ChatGPT to guide research idea generation.

Introduction

Back pain is the most common musculoskeletal complaint and can be debilitating and deleterious to quality of life (1). The practice of spine medicine involves diagnosing the etiology of back pain and providing treatment to improve symptoms. Treatments include medications, physical treatments, and invasive procedures.

Ongoing research into these conditions is pivotal to determine the best clinical practices for diagnosis, minimizing risk, and optimizing treatment in order to improve patient outcomes. Traditionally, new research ideas have been generated through clinical expertise, literature reviews, educational conferences, and expert opinions to identify gaps in the knowledge base. However, it can be difficult to generate new research ideas given the complexity of the conditions and the breadth of potential avenues for advancing the science. These complexities stem from the heterogeneous patient populations affected, the inherently difficult nature of back pain, and the challenges of outcome measurement due to the subjective nature of most measurement scales.

Research in other clinical areas, such as dysphagia and cosmetic plastic surgery, has explored the use of artificial intelligence (AI) and natural language processing systems such as chat generative pretrained transformer (ChatGPT) to develop new research ideas with some success (2,3). Nachalon et al. showed that ChatGPT, a large language model (LLM) AI system developed by OpenAI (San Francisco, California, USA), could generate ideas for novel, feasible studies relevant to clinical practice (2). Furthermore, reviews have highlighted that ChatGPT can save time, check for errors, assist with literature review, and generate other research ideas (3-5). What is not known, however, is whether ChatGPT can be applied to other fields, such as spine medicine, as a useful tool to generate innovative research questions and craft clinically impactful studies. Secondarily, it is not known whether ChatGPT is more adept at designing a certain type of research, such as a review article, than something requiring more clinical insight, such as a retrospective or prospective study. This study aims to determine whether ChatGPT 3.5 can generate different types of spine medicine research ideas and whether it generates one research type better than another. We hypothesize that ChatGPT is able to generate impactful research ideas for non-operative spine medicine, and that it preferentially provides stronger ideas for review articles as compared to prospective and retrospective study designs. Of note, ChatGPT 3.5 was used because, when the prompts were originally submitted, version 3.5 was free and readily accessible to the public. Since this study was completed, newer versions of ChatGPT have been released and are now readily available to the public.

Methods

This study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. This study was approved by Stanford University Institutional Review Board (IRB-73931). Informed consent was taken from all individual participants. To investigate how well ChatGPT, an LLM based on the GPT 3.5 architecture, could generate research study ideas, 15 study ideas were compiled using simple prompts to the system. The study also aimed to compare different study types. To accomplish these tasks, the following prompts were entered into ChatGPT without any previous context: “Please design 5 prospective non-operative spine medicine clinical research studies”, “Please design 5 retrospective non-operative spine medicine clinical research studies”, and “Please design 5 review article ideas studying non-operative spine medicine clinical research studies”. A zero-shot prompting approach was used so as not to bias the LLM with user input and to rely solely on its prior knowledge (6). These prompts were submitted to ChatGPT on December 16, 2023.

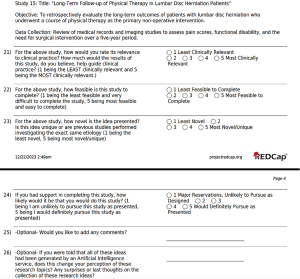

Once the study ideas had been generated, ChatGPT was asked to randomize their order. This randomization shuffled the ideas so that respondents would see a sample of all study types throughout the survey, limiting bias based upon the order of the study types. A survey was then built using Research Electronic Data Capture (REDCap) and was sent to multiple academic non-operative spine centers across the United States (7). For each study idea, the survey repeated the same sequence of questions, asking participants to rate the idea from 1 to 5, with 1 being defined as “Poor”, 2 as “Below Average”, 3 as “Average”, 4 as “Above Average”, and 5 as “Excellent”, on clinical relevance, novelty, feasibility, and overall interest to pursue the idea, with an optional section for comments. The overall interest score was a separate entity and not a composite of the other three measures. While this is not a previously validated system for evaluating literature, this scale was communicated to respondents prior to their responses and throughout the survey. The responses were provided based on the respondents’ existing clinical and research knowledge, and respondents were not expected to conduct a spine literature search to prepare for this study. After the repeated sequence of survey questions, a final question notified participants that the research ideas were generated using AI software and asked whether that changed their perceptions of the previous ideas. An example of the last page of questions, including this wrap-up question, is shown in Figure 1.

Statistical analysis

Data from this survey were coded into REDCap, and descriptive statistics including means and standard deviations were calculated. Kruskal-Wallis tests with post-hoc Dunn’s tests using Holm-adjusted P values and Fisher’s exact test were used to assess the differences among the types of studies. Analyses were completed in RStudio version 2023.12.1+402 using a two-sided level of significance of 0.05 (8).
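As an illustration of the rank-based comparison described above, the following Python sketch computes a tie-corrected Kruskal-Wallis statistic for three groups and applies a Holm adjustment to a set of P values. It is not the code used for this study, which was run in RStudio. The function names and the ratings are hypothetical, the closed-form P value assumes exactly three groups (two degrees of freedom), and the Dunn’s post-hoc step is omitted.

```python
import math
from itertools import chain


def kruskal_wallis(groups):
    """Return (H, p) for a tie-corrected Kruskal-Wallis test on three groups.

    The p-value uses the chi-square approximation; the closed form below
    is only valid for df = 2, so exactly three groups are required.
    """
    if len(groups) != 3:
        raise ValueError("this sketch only handles exactly three groups")
    pooled = sorted(chain.from_iterable(groups))
    n = len(pooled)

    # Assign mid-ranks so tied ratings share the average of their positions.
    rank_of = {}
    tie_term = 0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        t = j - i
        tie_term += t**3 - t
        i = j

    h = 12 / (n * (n + 1)) * sum(
        sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups
    ) - 3 * (n + 1)
    h /= 1 - tie_term / (n**3 - n)  # tie correction
    p = math.exp(-h / 2)  # chi-square survival function for df = 2
    return h, p


def holm_adjust(p_values):
    """Return Holm step-down adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda idx: p_values[idx])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        candidate = min(1.0, (m - rank) * p_values[idx])
        running_max = max(running_max, candidate)  # keep adjusted values monotone
        adjusted[idx] = running_max
    return adjusted


# Hypothetical 1-5 feasibility ratings, NOT the survey data.
prospective = [3, 2, 4, 3, 2, 3]
retrospective = [3, 3, 4, 2, 3, 4]
review = [4, 4, 5, 3, 4, 4]

h_stat, p_value = kruskal_wallis([prospective, retrospective, review])
print(f"H = {h_stat:.2f}, p = {p_value:.3f}")

# Hypothetical unadjusted pairwise P values, for illustration only.
print(holm_adjust([0.01, 0.04, 0.03]))
```

Mid-ranks and the tie correction matter here because ratings on a 5-point scale produce many tied values.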

Results

ChatGPT generated 15 research ideas (Figure 2), including 5 prospective studies, 5 retrospective studies, and 5 review articles. The research ideas spanned different topics such as telemedicine, biopsychosocial models to approach back pain, yoga, bracing, epidural steroid injections, and physical therapy interventions on populations ranging from pediatric patients to adults.

Surveys were sent over a span of 6 months from April 2024 to October 2024 to 12 academic spine physiatrists at six different institutions as the initial contact points. The six institutions were selected for their research output as leaders in spine medicine research. Recipients were encouraged to forward the survey to colleagues at other academic institutions. Given this “word of mouth” approach, it is unclear exactly how many physicians received an invitation to participate in our study. There were 17 respondents to the survey, with 13 respondents completing the entire survey. The data from partial responses were included in the analysis to retain as much data and representation as possible. Unanswered items were omitted from each analysis. The scores for relevance, novelty, feasibility, and overall interest were averaged across respondents.

For the entire group of studies, the median overall interest score was 3/5 [interquartile range (IQR) =2–4], indicating that on average, studies were graded as Average. The median overall interest score was 3/5 (IQR =2–4) for prospective studies, retrospective studies, and review article ideas, with no significant difference between the study types (P=0.58). Additionally, there were no differences in the number of studies that scored ≥3 in the overall interest score amongst the three categories of studies (Table 1).

Table 1

| Study type | Interest ≥3/5, n (%) | Interest <3/5, n (%) | P value |
|---|---|---|---|
| Prospective | 3 (60%) | 2 (40%) | 0.80 |
| Retrospective | 2 (40%) | 3 (60%) | |
| Review articles | 1 (20%) | 4 (80%) | |

For the interest score, a threshold of ≥3/5 signified that the respondent would in fact pursue the study if they had the requisite support. Of the 15 studies, there were six studies with an average interest score ≥3/5. Of these six studies, three were prospective, two were retrospective, and one was a review article (P=0.80). The highest interest score was 3.57/5 for a prospective study titled “Long-Term Outcomes of Non-Operative Management in Elderly Patients with Spinal Stenosis”. The lowest score was 2.07/5 for a retrospective study titled “Comparison of Outcomes in Non-Operative Management of Spondylolisthesis: Bracing vs. Physical Therapy”.
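The comparison in Table 1 can be checked by hand. The Python sketch below is a minimal illustration, assuming the reported P value comes from a two-sided Fisher’s exact test (Fisher-Freeman-Halton extension) applied to the 3×2 counts in Table 1. The function name is hypothetical and this is not the code used for the study.

```python
from math import comb


def fisher_exact_rx2(table):
    """Two-sided exact test for an r x 2 table (Fisher-Freeman-Halton).

    Sums the probabilities of every table with the same margins that is
    no more likely than the observed one.
    """
    row_totals = [a + b for a, b in table]
    first_col_total = sum(a for a, _ in table)

    def weight(first_col):
        # Proportional to the multivariate hypergeometric probability.
        w = 1
        for total, a in zip(row_totals, first_col):
            w *= comb(total, a)
        return w

    def enumerate_first_cols(idx, remaining):
        # Yield every first column that preserves the row and column totals.
        if idx == len(row_totals):
            if remaining == 0:
                yield ()
            return
        for a in range(min(row_totals[idx], remaining) + 1):
            for rest in enumerate_first_cols(idx + 1, remaining - a):
                yield (a,) + rest

    observed = weight([a for a, _ in table])
    total = extreme = 0
    for cols in enumerate_first_cols(0, first_col_total):
        w = weight(cols)
        total += w
        if w <= observed:
            extreme += w
    return extreme / total


# Counts from Table 1: (interest >= 3, interest < 3) per study type.
table_1 = [(3, 2), (2, 3), (1, 4)]
p = fisher_exact_rx2(table_1)
print(f"P = {p:.2f}")  # prints P = 0.80
```

With only five ideas per study type, every possible table except the perfectly balanced one (two ideas per type) is at least as extreme as the observed one, which is why the P value is so large.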

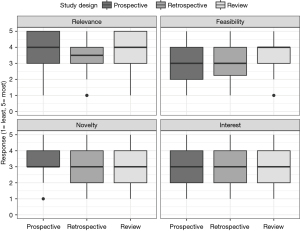

The scores for each study and for each category of studies are shown in Table 2 and Figure 3, respectively. There were no differences between the study categories in achieving a score of ≥3, and there were no differences in the clinical relevance and interest scores (Table 3). There were, however, differences in the feasibility and novelty scores with review studies having a distribution with significantly higher-ranked feasibility scores than prospective and retrospective studies (P=0.01; P=0.049, respectively), and prospective studies having a distribution with significantly higher-ranked novelty scores than review studies (P=0.02) (Table 3).

Table 2

| Study title | Average relevance | Average feasibility | Average novelty | Average overall interest |
|---|---|---|---|---|
| “Comparison of Outcomes in Non-Operative Management of Spondylolisthesis: Bracing vs. Physical Therapy” | 2.86 | 2.43 | 2.86 | 2.07 |
| “Impact of Biopsychosocial Interventions on Work-related Outcomes in Workers with Non-specific Back Pain” | 3.77 | 2.69 | 3.15 | 2.38 |
| “Comparative Effectiveness of Yoga and Mindfulness Meditation for Lumbar Disc Degeneration” | 2.92 | 2.92 | 3.15 | 2.46 |
| “Retrospective Analysis of Non-Operative Interventions in Pediatric Scoliosis Patients” | 3.23 | 3.08 | 2.85 | 2.46 |
| “Psychosocial Factors in Non-Operative Spine Care: A Critical Review” | 3.79 | 3.21 | 3.00 | 2.64 |
| “Current Trends in Non-Operative Management of Degenerative Disc Disease: A Systematic Review” | 3.59 | 3.41 | 2.41 | 2.65 |
| “Impact of Psychosocial Factors on Non-Operative Management in Patients with Failed Back Surgery Syndrome” | 3.59 | 3.35 | 2.88 | 2.76 |
| “Evaluating the Efficacy of Interventional Pain Procedures in Non-Operative Lumbar Spine Care: A Comprehensive Review” | 3.88 | 3.41 | 2.41 | 2.82 |
| “Non-Operative Management of Spinal Stenosis: A Comprehensive Literature Review” | 4.18 | 3.71 | 2.71 | 2.94 |
| “Utilization and Efficacy of Epidural Steroid Injections in Chronic Neck Pain Patients” | 3.59 | 3.41 | 2.53 | 3.00 |
| “Effectiveness of Multidisciplinary Pain Management in Chronic Low Back Pain Patients” | 4.08 | 3.00 | 3.08 | 3.08 |
| “Advancements in Telemedicine for Non-Operative Spine Care: A Systematic Review” | 3.71 | 3.64 | 3.79 | 3.21 |
| “Efficacy of Telehealth Rehabilitation in Cervical Radiculopathy” | 3.69 | 3.23 | 3.69 | 3.23 |
| “Long-Term Follow-up of Physical Therapy in Lumbar Disc Herniation Patients” | 4.31 | 3.31 | 3.46 | 3.46 |
| “Long-Term Outcomes of Non-Operative Management in Elderly Patients with Spinal Stenosis” | 4.29 | 3.43 | 3.43 | 3.57 |

Table 3

| Measure | Study type | N | Median | IQR | P value | Significant pairwise comparison | Holm-adjusted P value |
|---|---|---|---|---|---|---|---|
| Relevance | Prospective | 66 | 4.0 | 3.0–5.0 | 0.14 | | |
| | Retrospective | 74 | 3.5 | 3.0–4.0 | | | |
| | Review | 79 | 4.0 | 3.0–5.0 | | | |
| Feasibility | Prospective | 66 | 3.0 | 2.0–4.0 | 0.008* | Review vs. prospective | 0.01* |
| | Retrospective | 74 | 3.0 | 2.3–4.0 | | Review vs. retrospective | 0.049* |
| | Review | 79 | 4.0 | 3.0–4.0 | | | |
| Novelty | Prospective | 66 | 3.0 | 3.0–4.0 | 0.02* | Review vs. prospective | 0.02* |
| | Retrospective | 74 | 3.0 | 2.0–4.0 | | | |
| | Review | 79 | 3.0 | 2.0–4.0 | | | |
| Interest | Prospective | 66 | 3.0 | 2.0–4.0 | 0.58 | | |
| | Retrospective | 74 | 3.0 | 2.0–4.0 | | | |
| | Review | 79 | 3.0 | 2.0–4.0 | | | |

*, significance, alpha =0.05. IQR, interquartile range.

Fifteen of the 17 participants added comments on the studies. The 13 who completed the entire survey were asked “If you were told that these ideas had been generated by artificial intelligence, does your perception of these ideas change?”. Nine of the 13 stated that their perceptions did not change or thought that it was believable that the research ideas were generated by AI. Three of the remaining four commented that AI may have some utility in research idea generation. One respondent thought that the research ideas had been generated by a medical student, and others stated that the topics were too broad, too general, or had already been studied. A common theme in the comments was concern about the terms “degenerative disc disease” and “lumbar disc degeneration”, nonspecific terms that are often used colloquially but typically not in the scientific literature.

Discussion

The objective of this study was to investigate the ability of ChatGPT to generate quality research ideas in the field of non-operative spine medicine, with a secondary aim of assessing the type of study that ChatGPT was most adept at creating. The ideas that ChatGPT created spanned a range of topics including telehealth, conservative treatments, and spinal injections, in both pediatric and adult patients. This is consistent with previous work demonstrating that an “AI Scientist” is capable of developing scientific research ideas (5).

Our findings suggest that ChatGPT was successful in suggesting a broad range of topics, and that 40% of the suggested topics were viewed by the academic spine physiatrists as ideas potentially worth pursuing. Prospective studies had the highest interest scores; however, the differences were not statistically significant.

The majority of respondents stated that their perceptions about the research ideas did not change after being informed that the ideas were generated by AI. However, several respondents felt that many of the research ideas were simplistic and used colloquial terminology instead of more accepted scientific language. This may be due to the algorithm that ChatGPT uses to generate responses, possibly basing its results on data from popular search engines instead of academic search engines. As a result, while ChatGPT may generate some insightful research ideas, it also appears to generate many rudimentary ones.

Additionally, while 40% of the study ideas were considered potentially worth pursuing (average overall interest score ≥3), none reached an average of 4 (the score defined as “Above Average”). Given the lack of robust overall interest scores, it is unclear whether any of the respondents would actually have chosen to pursue research projects based on these ideas.
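These proportions follow directly from the average overall interest column of Table 2. The short Python sketch below recomputes them from the transcribed values; it is only an arithmetic check and not part of the original analysis.

```python
# Average overall interest scores transcribed from Table 2.
interest = [2.07, 2.38, 2.46, 2.46, 2.64, 2.65, 2.76, 2.82,
            2.94, 3.00, 3.08, 3.21, 3.23, 3.46, 3.57]

worth_pursuing = [s for s in interest if s >= 3]   # "Average" or better
above_average = [s for s in interest if s >= 4]    # "Above Average" or better
share = len(worth_pursuing) / len(interest)

print(f"{len(worth_pursuing)} of {len(interest)} ideas ({share:.0%}) scored >= 3")
print(f"ideas scoring >= 4: {len(above_average)}; highest score: {max(interest)}")
```

Run as written, this reports 6 of 15 ideas (40%) at or above 3, none at or above 4, and a highest score of 3.57.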

This study sampled different research types including prospective studies, retrospective studies, and review articles. While other papers have shown that ChatGPT is adept at creating each of these types of studies, we are unaware of previous work that sought to compare these different research types (2-4). In our study, there was no statistically significant difference in the overall interest scores based on study type, but review articles had higher feasibility scores and prospective studies had higher novelty scores. These differences are likely intrinsic to the types of research, with review articles being easier to accomplish and less resource intensive than prospective studies, and prospective studies being better suited to exploring novel ideas.

While the use of AI in research is increasing and has gained attention over recent years, our investigation shows that much work remains before non-operative spine physicians will rely on AI to develop research ideas. Although some AI-generated ideas have shown promise, clinical expertise remains the most common method for research development at the current time.

Limitations of our study include the use of non-validated metrics to grade research ideas, the “word of mouth” recruitment, and the small sample size of each study type. With only five studies per study type, it is difficult to generalize the findings to prospective, retrospective, and review articles as a whole. This approach was employed to minimize the length of the survey to ensure higher completion rates. Lastly, as an ever-evolving entity, AI will likely continue to improve over time. As a result, future studies may produce better results.

In this study, ChatGPT was not given any guidance or context prior to generating the 15 research ideas. This study was performed using a zero-shot prompting strategy to focus purely on the prior knowledge of ChatGPT 3.5 and to provide a snapshot of ChatGPT’s capabilities at that time. While this strategy may not be realistic, given that researchers and medical professionals alike often ask pointed questions, this study does suggest an overall positive finding: even without proper context, ChatGPT was able to generate studies that professionals may consider. Given that ChatGPT and other LLMs are currently only as strong as their training data, it stands to reason that with proper context or an understanding of recent studies that have answered important questions, the content ChatGPT can provide may improve. Future studies could examine whether providing keywords, prompts, context, landmark papers, or other user-approved research ideas to ChatGPT ahead of time improves the research ideas it can produce. This “few-shot prompting approach” could be considered in future studies as it has previously shown benefit (9). Future studies could also consider direct comparison between user-generated and AI-generated research ideas.
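To make the contrast concrete, the Python sketch below builds a few-shot style prompt by placing example study titles ahead of one of the zero-shot prompts used in this study. It is a hypothetical illustration only: the helper name and the introductory sentence are assumptions, the two exemplars are simply the highest-rated titles in Table 2 standing in for “user-approved research ideas”, and no such prompt was tested here.

```python
# One of the zero-shot prompts used in this study, quoted from the Methods.
ZERO_SHOT_PROMPT = (
    "Please design 5 prospective non-operative spine medicine "
    "clinical research studies"
)


def build_few_shot_prompt(task, exemplars):
    """Prefix the task with example study titles the user already endorses."""
    # The header wording is an assumption made for this illustration.
    lines = ["Here are examples of study ideas we consider strong:"]
    lines += [f"{i}. {title}" for i, title in enumerate(exemplars, start=1)]
    lines += ["", task]
    return "\n".join(lines)


# Illustrative exemplars: the two highest-rated titles in Table 2.
exemplars = [
    "Long-Term Outcomes of Non-Operative Management in Elderly Patients "
    "with Spinal Stenosis",
    "Long-Term Follow-up of Physical Therapy in Lumbar Disc Herniation Patients",
]

few_shot_prompt = build_few_shot_prompt(ZERO_SHOT_PROMPT, exemplars)
print(few_shot_prompt)
```

Keeping the task sentence identical in both conditions would let a future study attribute any change in ratings to the added examples rather than to a reworded request.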

Conclusions

ChatGPT appears to be able to generate some research ideas with clinical relevance in non-operative spine medicine. However, at the current time, its utility under a zero-shot prompting strategy appears to be limited. Future studies could evaluate the addition of context to prompts and the use of updated versions of ChatGPT to attempt to improve outcomes. Additionally, future work could compare human-generated and AI-generated research ideas.

Acknowledgments

None.

Footnote

Data Sharing Statement: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-480/dss

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-480/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-480/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki and its subsequent amendments. This study was approved by Stanford University Institutional Review Board (IRB-73931). Informed consent was taken from all individual participants.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Gibbs D, McGahan BG, Ropper AE, et al. Back Pain: Differential Diagnosis and Management. Neurol Clin 2023;41:61-76.

2. Nachalon Y, Broer M, Nativ-Zeltzer N. Using ChatGPT to Generate Research Ideas in Dysphagia: A Pilot Study. Dysphagia 2024;39:407-11.

3. Gupta R, Park JB, Bisht C, et al. Expanding Cosmetic Plastic Surgery Research With ChatGPT. Aesthet Surg J 2023;43:930-7.

4. Sallam M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare (Basel) 2023;11:887.

5. Castelvecchi D. Researchers built an ‘AI Scientist’ - what can it do? Nature 2024;633:266.

6. Sivarajkumar S, Kelley M, Samolyk-Mazzanti A, et al. An Empirical Evaluation of Prompting Strategies for Large Language Models in Zero-Shot Clinical Natural Language Processing: Algorithm Development and Validation Study. JMIR Med Inform 2024;12:e55318.

7. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009;42:377-81.

8. RStudio Team (2020). RStudio: Integrated Development for R. RStudio, PBC, Boston, MA [Internet]. Available online: http://www.rstudio.com/

9. Brown TB, Mann B, Ryder N, et al. Language Models are Few-Shot Learners [Internet]. arXiv; 2020 [cited 2025 Mar 19]. Available online: https://arxiv.org/abs/2005.14165

Cite this article as: Kaufman M, Pham N, Levin J. Evaluating the utility of using ChatGPT 3.5 to generate research ideas for non-operative spine medicine. J Med Artif Intell 2025;8:30.