The future of anatomic pathology: deus ex machina?

This editorial is in response to the article on digital pathology published by Van Es (1), which is in turn a response to the articles published by Eric F. Glassy (2) and Thomas James Flotte (3). Our goal is to add what we feel are pertinent historical details and offer our perspective concerning the emerging role of digital pathology in anatomic pathology.

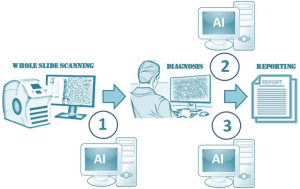

Digital pathology is the study and practice of pathology based primarily on whole slide images (WSI), but also on static and dynamic (live-feed) images. WSI (sometimes referred to as virtual microscopy or eSlides) are acquired by stitching together hundreds to thousands of individual pixel-based microscopic images generated by a tile- or line-based whole slide scanner (4). These images are organized into a pyramidal structure, with low-magnification images at the tip of the pyramid and high-magnification images at the base. WSI can be viewed either directly or with the enhancement of computer software. When software is used to enhance the pathologist's viewing of WSI, including image manipulation, this is referred to as computer-assisted diagnosis (CAD). CAD involves various image processing techniques (e.g., segmentation, feature extraction, dimension reduction) and, when coupled with artificial intelligence (AI), can reduce the time needed to arrive at a diagnosis, improve accuracy, and decrease intra- and interobserver variability (5). As Van Es noted, digital pathology has been accepted as equal (or at least non-inferior) to traditional light microscopy in diagnostic accuracy, and may even be better than traditional microscopy for some microscopic measurements (1). However, with the introduction of disruptive tools such as deep learning algorithms, AI is anticipated in the near future to do much more with WSI than merely provide precise measurements.
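To illustrate the pyramidal organization described above, the following Python sketch computes the dimensions of each pyramid level from a full-resolution base image. It assumes, hypothetically, that each level is downsampled by a factor of 2 from the level below and cut into 256 × 256 pixel tiles; real scanners and file formats define their own level dimensions, downsampling factors, and tile sizes, and this sketch does not read any actual file format. The names `PyramidLevel` and `build_pyramid` are illustrative only.

```python
import math
from dataclasses import dataclass


@dataclass
class PyramidLevel:
    level: int        # 0 = base of the pyramid (highest magnification)
    width: int        # level width in pixels
    height: int       # level height in pixels
    downsample: int   # reduction factor relative to the base level
    tiles: int        # number of tiles needed to cover this level


def build_pyramid(base_width, base_height, tile_size=256, factor=2):
    """Return pyramid levels from the full-resolution base up to a one-tile apex.

    Hypothetical assumptions: a fixed downsampling factor between levels and
    square tiles of `tile_size` pixels.
    """
    levels = []
    width, height, downsample, level = base_width, base_height, 1, 0
    while True:
        tiles = math.ceil(width / tile_size) * math.ceil(height / tile_size)
        levels.append(PyramidLevel(level, width, height, downsample, tiles))
        if width <= tile_size and height <= tile_size:
            break  # apex reached: the whole slide fits in a single tile
        width = max(1, math.ceil(width / factor))
        height = max(1, math.ceil(height / factor))
        downsample *= factor
        level += 1
    return levels


if __name__ == "__main__":
    # Hypothetical 100,000 x 80,000 pixel base image
    for lvl in build_pyramid(100_000, 80_000):
        print(lvl)
```

For this hypothetical slide, the base level needs more than 100,000 tiles while the apex fits in one, which is why a viewer can show a low-magnification overview instantly and fetch high-magnification tiles only for the region being examined.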

CAD can use several AI techniques, depending on the type of problem. Broadly, AI uses computer systems to mimic natural human intelligence (6). Machine learning is a branch of AI that builds mathematical models from training data and applies these models to newly observed data in order to interpret them and make predictions. Deep learning, a subfield of machine learning, uses multilayered neural networks to learn feature extraction strategies directly from the data, rather than relying on features chosen by user intuition (7). Researchers have already applied both techniques to WSI with considerable success (8,9). The mass generation of large WSI datasets has been essential for applying AI in digital pathology, and the use of AI will undoubtedly increase as more laboratories around the world adopt WSI for primary diagnosis and generate more imaging data.
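To make the distinction between building a model on training data and applying it to new data concrete, here is a minimal Python sketch of one of the simplest machine learning models, a nearest-centroid classifier. The two features (a nuclear area measurement and a stain intensity) and the benign/malignant labels are hypothetical toy values, and this hand-coded classifier is not a method from any of the cited studies. The features are also left unscaled, so the area dominates the distance; real pipelines typically normalize features. Deep learning, by contrast, would learn the features themselves instead of receiving them precomputed.

```python
from math import dist
from statistics import fmean


def train_nearest_centroid(features, labels):
    """Build the model from training data: the mean feature vector of each class."""
    grouped = {}
    for x, y in zip(features, labels):
        grouped.setdefault(y, []).append(x)
    # zip(*rows) transposes the rows so that each feature column can be averaged
    return {
        label: tuple(fmean(column) for column in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(model, x):
    """Apply the model to new data: return the class whose centroid is closest."""
    return min(model, key=lambda label: dist(model[label], x))


# Hypothetical toy training data: (nuclear area, stain intensity) per cell
train_x = [(30.0, 0.20), (32.0, 0.25), (28.0, 0.22),
           (70.0, 0.80), (75.0, 0.75), (68.0, 0.85)]
train_y = ["benign", "benign", "benign",
           "malignant", "malignant", "malignant"]

model = train_nearest_centroid(train_x, train_y)
print(predict(model, (72.0, 0.70)))  # closest to the "malignant" centroid
print(predict(model, (31.0, 0.30)))  # closest to the "benign" centroid
```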

The concept of applying AI to solve problems in pathology is not new. Indeed, almost two decades ago the cytopathology community adopted computer-assisted technology to automate screening of Pap tests. Although such an automated cytopathology workflow had been desired since the early 1950s, it was not until 1995 that the Papnet and AutoPap automated Pap test screening systems, which employed digital imaging technology, were approved by the U.S. Food and Drug Administration (FDA) (10). A key lesson from these early automated Pap test screening systems is the importance of standardizing pre-imaging steps (e.g., uniform specimen fixation and staining, and creating a flat monolayer from liquid-based cytology samples) by using equipment and reagents from the same vendor that performs image acquisition and analysis. A lack of uniformity in the workflow, with too much heterogeneity, introduces variability that can compromise digital pathology image analysis results (11). Recently, the College of American Pathologists (CAP) and the National Society for Histotechnology (NSH) started a Whole Slide Quality Improvement Program, in which participating laboratories submit paired glass and digital slides for quality grading (12). While this is a step in the right direction for ensuring the quality of digital images in surgical pathology, there is currently no standardized approach worldwide for preprocessing (e.g., tissue fixation time) or postprocessing (e.g., AI algorithm analysis) steps in anatomic pathology.

Several pathology laboratories have adopted WSI technology and already gone “fully digital” for primary diagnosis (13). However, the transition to WSI is not yet economically feasible for all laboratories. Newer commercial systems are also attempting to improve the throughput, scalability, interoperability, and accuracy of digital pathology platforms, including plug-in image algorithms that facilitate laboratory automation. It is unclear how many laboratories actually use AI for routine case sign-out, apart from some that have adopted AI for cost-efficiency (e.g., Lumea) and for quantitative image analysis of immunohistochemical stains (14). If pathologists do not embrace innovative ways of improving their methods, such as those offered by digital pathology, in a manner that is palatable to all pathology groups, we will likely be left behind and, at worst, marginalized by our clinical colleagues in the emerging era of AI.

AI is a tool and, like most tools, works best in certain situations and in the hands of trained users. AI works best for identifying patterns in large, high-dimensional datasets that meet defined criterion standards. Like any other laboratory test, AI should be clinically validated against current quality standards to ensure clinical effectiveness and safety in practice (15). Expert clinical decision support systems have been in development since at least the 1970s, including MYCIN, CASNET, CADUCEUS, and INTERNIST-1 (16,17). These expert systems were designed to encode the diagnostic expertise of physicians and rapidly produce diagnoses or treatment options from input data. The diagnostic performance of INTERNIST-1 was found to be qualitatively similar to that of clinicians at an academic teaching hospital. Yet despite proven performance and a head start of more than 30 years in automated diagnosis, few “robot” clinicians can be found rounding on the wards today (16). Even after the clinical effectiveness of an AI system is proven, the system still faces the challenge of integration into clinical care. This may prove difficult, given that most AI algorithms process data in a “black box,” where neither developers nor users know how the computer arrives at its conclusions (15). Several other challenges to implementing AI solutions in digital pathology have been described; these must be weighed against the benefits when considering potential applications in clinical practice (Table 1) (18).

Table 1

| Challenge or opportunity | Explanation |
|---|---|
| Challenges | |
| Lack of labeled data | High-quality labeled images are essential for training AI algorithms |
| Pervasive variability | Histologic patterns of basic tissue types are variable and thus difficult for AI to learn |
| Non-Boolean nature of diagnostic tasks | Pathology diagnoses are complex, requiring contextual knowledge and experience; AI algorithms perform better with binary (yes/no) decisions |
| Dimensionality obstacle | Large pathology images need to be broken down into small “patches” or image tiles, which can cause a loss of crucial information |
| Turing test dilemma | Human pathologists should have the final word, even with AI assistance |
| Uni-task orientation of weak AI | Current “weak AI” can only work on single, highly specific tasks and is not able to multitask or function at the level of human intelligence |
| Affordability of required computational expenses | Expensive graphics processing units required to train deep learning algorithms could be a limiting factor for many laboratories |
| Adversarial attacks | Deep artificial neural networks can be fooled by targeted manipulation of a very small number of pixels (an adversarial attack), misleading the AI |
| Lack of transparency and interpretability | Neural networks are considered to be a “black box” |
| Realism of AI | AI is difficult to implement in pathology; successful AI tools should be easy to use, financially feasible, and performance-proven |
| Opportunities | |
| Deep features | Transfer learning from other domains can provide features for medical images |
| Handcrafted features | Conventional computer vision can still be helpful |
| Generative frameworks | Naïve Bayes, restricted Boltzmann machines, and generative adversarial networks are generative methods that focus on learning to produce data without making any decisions |
| Unsupervised learning | It may be possible to extract information from images that are not annotated |
| Virtual peer review | Systems can find similar cases in an archive for pathologists to compare |
| Automation | Laborious and complex tasks can be simplified |
| Re-birth of the hematoxylin and eosin (H&E) image | The ability to extract complex information from scanned H&E-stained slides, coupled with other laboratory tests, could lead to new diagnostic, theranostic, and prognostic information |
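The “dimensionality obstacle” in Table 1 can be made concrete with a short Python sketch. A gigapixel WSI is generally too large to feed to a neural network at once, so it is cut into fixed-size patches whose individual predictions must later be recombined into a slide-level result. The 256-pixel patch size, the stride, and the max-pooling aggregation rule below are illustrative assumptions rather than a standard, and `patch_grid` and `slide_call` are hypothetical helper names.

```python
from typing import Iterator, List, Tuple


def patch_grid(width: int, height: int, patch: int = 256,
               stride: int = 256) -> Iterator[Tuple[int, int]]:
    """Yield the (x, y) top-left corner of every full patch inside the image.

    stride == patch gives non-overlapping tiles; a smaller stride gives
    overlapping patches. Right and bottom margins narrower than one patch
    are skipped, one simple way in which information can be lost.
    """
    for y in range(0, height - patch + 1, stride):
        for x in range(0, width - patch + 1, stride):
            yield x, y


def slide_call(patch_scores: List[float], threshold: float = 0.5) -> Tuple[float, bool]:
    """Combine patch-level probabilities into one slide-level call.

    Max pooling (flag the slide if any patch reaches the threshold) is only
    one of many possible aggregation rules, and it ignores the spatial
    context between patches that a pathologist would use.
    """
    top = max(patch_scores)
    return top, top >= threshold


if __name__ == "__main__":
    # Hypothetical 1,000 x 600 pixel region
    tiles = list(patch_grid(1000, 600))
    print(len(tiles), tiles[:3])
    print(len(list(patch_grid(1000, 600, stride=128))))  # overlapping patches
    print(slide_call([0.10, 0.70, 0.30]))
```

In this hypothetical region, non-overlapping 256-pixel patches cover only 768 × 512 of the 1,000 × 600 pixels; overlapping patches or padding reduce such losses at the cost of more computation.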

Although laboratories have long sought automated methods for anatomic pathology, excitement was renewed only relatively recently, with the 2017 FDA approval of the Philips IntelliSite Pathology Solution (PIPS) for primary review and interpretation of formalin-fixed surgical pathology specimens (19). This long-awaited approval spurred a global increase in whole slide scanning operations and in AI start-up companies seeking to process the massive amount of data generated by slide digitization. Pathology AI start-ups have focused not only on making diagnoses (20), but also on screening, quality assurance, prognostication, and even discovery. For example, patterns of lymphocyte infiltration have been shown to provide prognostic and therapeutic information for patients. Applications that are easy to use, financially sustainable, perform well, and make a positive impact are more likely to be adopted successfully by pathologists (18). A suite of “killer” AI applications with proven clinical utility is needed to promote the adoption of digital pathology and associated AI in anatomic pathology (Figure 1).

We agree with Thomas James Flotte that surgical pathologists will need to play a critical role in developing successful AI applications (3). In addition to curating data and providing annotations, pathologists need to take responsibility for validating that these applications are necessary and that they work, verifying their accuracy and safety, and encouraging their integration into routine workflow. The vast majority of practicing anatomic pathologists will not need to be experts in computer science or informatics, but those who use AI tools clinically should understand their limitations and the implementation process required before they can be used in daily practice. Implementing an AI program that is later found to have harmed patients could drastically set back the field of AI (15), which is why we must proceed cautiously during this dawn of AI in digital pathology.

The title of this editorial follows the theme of Latin phrases used in the titles of previous articles. “Deus ex machina” is a plot device in which the author introduces a contrived solution that resolves the main conflict and abruptly ends the story. We do not think that digital pathology and AI are the deus ex machina for anatomic pathology. In the next decade we will probably see only augmented or assisted computer diagnosis, with restrained use of independent, so-called strong AI that could completely replace pathologists. We anticipate that regulatory bodies will in the near future approve deep learning techniques that arrive at a diagnosis through a “black box.” Although plausible, it would be difficult and somewhat strange to reduce the whole of anatomic pathology to a point-of-care test in which any type of specimen is placed into a single machine that instantly yields the best possible diagnosis. We will have to wait and see and, in the interim, learn from our colleagues in other clinical fields who are also struggling to deploy AI for clinical work.

Acknowledgments

Funding: None.

Footnote

Provenance and Peer Review: This article was commissioned by the editorial office, Journal of Medical Artificial Intelligence. The article did not undergo external peer review.

Conflicts of Interest: Both authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/jmai.2019.02.03). LP reports medical advisory board for Ibex, past medical advisory board for Leica and consultant for Hamamatsu. RLD has no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Van Es SL. Digital pathology: semper ad meliora. Pathology 2019;51:1-10. [Crossref] [PubMed]

- Glassy EF. Digital pathology: quo vadis? Pathology 2018;50:375-6. [Crossref] [PubMed]

- Flotte TJ, Bell DA. Anatomical pathology is at a crossroads. Pathology 2018;50:373-4. [Crossref] [PubMed]

- Farahani N, Parwani A, Pantanowitz L. Whole slide imaging in pathology: advantages, limitations, and emerging perspectives. Pathology and Laboratory Medicine International 2015;7:23-33.

- He L, Long LR, Antani SK, et al. Computer-assisted diagnosis in cervical histopathology. SPIE Newsroom 2010; [Crossref]

- Stead WW. Clinical implications and challenges of artificial intelligence and deep learning. JAMA 2018;320:1107-8. [Crossref] [PubMed]

- VanderPlas J. Python data science handbook: essential tools for working with data. 1st ed. Sebastopol, CA: O’Reilly, 2017:513.

- Gurcan MN, Boucheron LE, Can A, et al. Histopathological image analysis: a review. IEEE Rev Biomed Eng 2009;2:147-71. [Crossref] [PubMed]

- Janowczyk A, Madabhushi A. Deep learning for digital pathology image analysis: a comprehensive tutorial with selected use cases. J Pathol Inform 2016;7:29. [Crossref] [PubMed]

- Icho N. The automation trend in cytology. Lab Med 2000;31:4. [Crossref]

- Pantanowitz L, Liu C, Huang Y, et al. Impact of altering various image parameters on human epidermal growth factor receptor 2 image analysis data quality. J Pathol Inform 2017;8:39. [Crossref] [PubMed]

- Newitt VN. Program zeroes in on histology digital scan connection. CAP Today 2018;4.

- Fraggetta F, Garozzo S, Zannoni GF, et al. Routine digital pathology workflow: the Catania experience. J Pathol Inform 2017;8:51. [Crossref] [PubMed]

- MSN. LUMEA Technology Overview by Dr. Matthew O. Leavitt, Founder. Available online: https://www.msn.com/en-us/health/medical/lumea-technologyoverview-by-dr-matthew-o-leavitt-founder/vi-BBRUSen

- Maddox TM, Rumsfeld JS, Payne PRO. Questions for artificial intelligence in health care. JAMA 2019;321:31-2. [Crossref] [PubMed]

- Miller RA, McNeil MA, Challinor SM, et al. The INTERNIST-1/QUICK MEDICAL REFERENCE project--status report. West J Med 1986;145:816-22. [PubMed]

- Shortliffe EH, Davis R, Axline SG, et al. Computer-based consultations in clinical therapeutics: explanation and rule acquisition capabilities of the MYCIN system. Comput Biomed Res 1975;8:303-20. [Crossref] [PubMed]

- Tizhoosh HR, Pantanowitz L. Artificial intelligence and digital pathology: challenges and opportunities. J Pathol Inform 2018;9:38. [Crossref] [PubMed]

- FDA allows marketing of first whole slide imaging system for digital pathology. FDA news release. 2017 April 12.

- Komura D, Ishikawa S. Machine learning methods for histopathological image analysis. Comput Struct Biotechnol J 2018;16:34-42. [Crossref] [PubMed]

Cite this article as: Dietz RL, Pantanowitz L. The future of anatomic pathology: deus ex machina? J Med Artif Intell 2019;2:4.