Maintaining scientific integrity and high research standards against the backdrop of rising artificial intelligence use across fields

Introduction

OpenAI’s Chatbot Generative Pre-Trained Transformer (ChatGPT) is a type of artificial intelligence (AI) software that simulates conversations with human users. This chatbot uses algorithms designed to recognize natural language inputs and to respond with suitable replies that are either pre-written or generated by the AI itself (1). AI has already played a revolutionary role in science. Early software systems in imaging diagnostics were among the first steps in the use of AI in clinical practice. Today, several platforms have been reported to match or exceed the diagnostic performance of human specialists. For example, one study reported that an optimized deep convolutional neural network (CNN) architecture with custom mini-batch logic and loss function outperformed every participating dermatologist in dermoscopic melanoma diagnosis (2). Another aspect to consider is scientific writing with the help of AI, for which text-generating AI is increasingly becoming the tool of choice.

ChatGPT has gained substantial worldwide interest. According to a UBS study, ChatGPT reached an estimated 100 million monthly active users, making it the fastest-growing consumer internet application in history (3). AI language models, and ChatGPT in particular, have already been listed as authors of scientific articles (4). There are many instances of such co-authorship in medical and healthcare research: an article by Tech Insider found ChatGPT listed as a co-author on research articles published in journals such as Nurse Education in Practice and Oncoscience, and on the medical preprint repository medRxiv. These articles discuss topics ranging from AI-assisted medical education to an AI perspective on the use of the drug rapamycin. Scholars are quoted as saying that co-authorship was given because the AI contributed intellectually to the content of the writing (4). ChatGPT may make authoring papers much easier, which might make scientific writing and research more accessible. However, a further challenge for reviewers and scientists is detecting the use of AI when it is not declared. As research becomes increasingly complex and multidisciplinary, reviewing articles becomes more demanding, and traditional tools for detecting plagiarism and inaccuracies have proven largely ineffective when text is produced by a generative language model.

The use of AI in scientific writing clearly needs transparent regulation. Chatbot AI can also be used for scientific reading: ChatGPT can summarise long articles or locate interesting and relevant sections of text. However, the resulting summaries may be quite broad and cannot critically analyse differences between studies (Figure 1). Nevertheless, clinicians and scientists can use it to quickly grasp the current state of knowledge on a particular topic and to identify gaps that need to be addressed (1).

The debate over whether ChatGPT should be given co-authorship when it has written a significant portion of a paper will likely persist in the coming years. At the very least, its use should be disclosed and explained (5,6). The international press has also reported that ChatGPT was named as a co-author on several papers and that scientific journals swiftly moved to prohibit listing it as a co-author (7). ChatGPT is also listed as a co-author of one of the sources cited in this article (8).

An increasing number of journals have formulated policies on the use of generative AI in scientific writing. For example, numerous Elsevier journals allow such tools to be used only to improve readability and language; authors must disclose the use of AI in a statement included in the manuscript, and the policy emphasizes that the authors remain fully responsible and accountable for the content of the work (9). PNAS, in turn, requires authors to describe in the Materials and Methods section any use of AI to prepare part of the manuscript, and states that such software cannot be listed as a co-author, as it does not meet the criteria for authorship and cannot share responsibility for the paper or be held accountable for the integrity of the reported data (10).

Currently, ChatGPT’s level of understanding and interpretation is insufficient for medical students to rely on, particularly in medical school examinations. High-stakes examinations, such as medical licensing examinations, may present similar hurdles (11).

In this paper, we discuss the pros and cons of using AI in scientific and academic writing and place them in the wider context of scientific applications. The aim is to examine the ways in which AI has revolutionized, and will continue to revolutionize, the scientific field, and which precautions may be needed to counter any negative effects. What does the scientific community need in order to work with AI in an optimized and beneficial way? This paper, written by science students from around the world [with partial use of an AI large language model (LLM), specifically ChatGPT, to illustrate the arguments with clear examples], addresses this question: is this the new future, and how should we approach it?

AI-assisted manuscript writing and preparation

History of AI-assisted manuscript writing and preparation

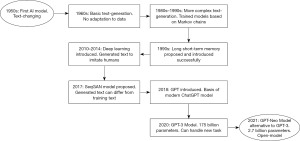

The history of writing and preparing manuscripts with the help of AI begins with the development of computer technology and applied machine learning (Figure 2).

Key milestones in the history of AI in writing include:

- 1950s: the first AI models were developed to generate text based on a given pattern. However, these models were limited in that they could not create new text; they could only modify existing text (12);

- 1960s: this period saw the development of the first systems that used grammar and rules to generate text. These systems were limited in that they required significant expertise and could not adapt to new data (13);

- 1980s–1990s: a number of systems using statistical models for text generation were developed. These models were based on Markov chains and were trained on large text corpora. However, these systems were also limited in that they could not generate text that was significantly different from what they were trained on (14);

- 1990s: the long short-term memory (LSTM) model was proposed (1997); it can model dependencies across long sequences and has been used successfully for text generation (15);

- 2010–2014: deep learning approaches to text generation, such as recurrent neural network language models and sequence-to-sequence models, emerged. These approaches allow a model to be trained on large text corpora and to produce new text that reads as if it had been written by a human;

- 2017: the Sequence Generative Adversarial Nets (SeqGAN) model, which combines generative adversarial networks with reinforcement learning for text generation, was proposed. This model was designed to generate text that differs from the text it was trained on (16);

- 2018: the Generative Pre-Trained Transformer (GPT) model was proposed, which is the basis for the modern ChatGPT model. The GPT model uses the Transformer architecture and pre-training on large text corpora (17);

- 2020: the GPT-3 model was proposed; with 175 billion parameters, it was one of the largest and most complex language models at the time of its release. GPT-3 can handle a variety of tasks, including writing articles, answering questions, and generating code (18);

- 2021: EleutherAI released the GPT-Neo models, open alternatives to GPT-3 with up to 2.7 billion parameters. Because GPT-Neo is openly available, researchers can use it for their own projects and experiments (19).

In recent years, many methods and algorithms have also been developed to quickly and efficiently create and prepare handwritten documents. One example is the program “My Text in Your Handwriting”, which uses AI to analyze a user’s handwriting and generate text in that handwriting; it relies on machine learning algorithms that learn from the user’s handwritten samples (20). Another example is the program “DeepWriter”, developed in 2016 by Chinese scientists, which used deep learning to generate handwritten texts: the user entered text on a computer, and the program automatically generated a handwritten version of it (21). The MyScript Nebo service allows users to create handwritten notes and documents on tablets and smartphones using handwriting recognition and real-time text generation (22). AI can thus be useful in writing and preparing many types of documents (23), including notes, letters, scientific articles, papers, resumes, and other reports.

The main functions of AI in this area include:

- Handwriting recognition: converting notes or documents written with an ordinary pencil or pen into printed text without unnecessary complications. This may be particularly helpful in healthcare, where quick notes can be turned into professional reports and documents, saving healthcare workers the time spent typing up their notes;

- Handwriting text generation: generating handwriting-style text, which allows users to create documents that look as if they were written by hand;

- Translation: translating texts from one language to another, which can be useful for creating international documents or for communicating with colleagues from other countries. This could also help improve health science and medical collaboration across language barriers, reducing the need for professional translators as intermediaries and thereby speeding up international research and medical processes;

- Text correction: checking the spelling and grammar of the text and providing suggestions for its linguistic improvement. This can be particularly useful in a range of contexts, such as ensuring medical reports are error-free, or improving the readability and therefore accessibility of research manuscripts and documents, for example in the health sciences. This may also be helpful for manuscripts where an international team is working together, such as this one, to ensure the text is linguistically sound, understandable, and coherent;

- Document organization: helping to organize documents and adding tags or labels to make them easier to find in the future. This is particularly useful in research: for example, it can simplify the organisation of the hundreds of documents generated when medical trials are conducted and analysed. The same applies to organising applications for drug patents, patient records, and research material for manuscripts.

Today, several AI models on the market can be used for writing and preparing manuscripts, namely: BERT (Bidirectional Encoder Representations from Transformers), developed by Google; RoBERTa, developed by Facebook; GPT-2, developed by OpenAI as the successor to the original GPT and the predecessor of GPT-3; XLNet, developed by Carnegie Mellon University and Google; Microsoft Turing, developed by Microsoft; and ChatGPT, one of the most popular applied machine learning models today. All of these technologies can contribute to improving manuscripts in the health sciences domain.

Currently employed methods and algorithms

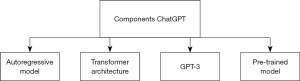

The main methods and algorithms on which ChatGPT is based (24) are:

- Autoregressive model: ChatGPT is an autoregressive model, which means it generates text from left to right, one token (a word or part of a word) at a time. Each subsequent token is predicted on the basis of the preceding ones;

- Transformer models: a transformer is an architecture that processes sequences using a self-attention mechanism. Transformer technology allows ChatGPT to take the surrounding context into account when generating text;

- Masking: during training, a causal (look-ahead) mask is applied in the self-attention layers so that each position can only attend to earlier positions. This prevents the model from seeing the word it is supposed to predict and keeps training consistent with left-to-right generation;

- Loss function: a loss function such as cross-entropy is used to train the model; it assesses how accurately the model predicts each subsequent token in the text by measuring the difference between the predicted probability distribution for the next token and the token that actually follows. The goal of training is to minimize this loss so that the model makes correct predictions for the next token in the text;

- Embedding layer: ChatGPT has an embedding layer that converts each token into a vector of fixed length. These vectors help the model capture the semantics of the words, and the subsequent transformer layers turn them into context-dependent representations of each word.
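The autoregressive generation and cross-entropy loss described above can be sketched in a few lines of Python. This is a minimal illustration, not ChatGPT’s implementation: it assumes a hypothetical five-token vocabulary and a fixed table of next-token scores (logits) that stands in for the transformer, whereas a real GPT model computes these scores from the entire preceding context rather than only the last token.

```python
import math

VOCAB = ["<s>", "the", "patient", "recovered", "."]

# Hypothetical next-token scores (logits): one row per current token, one
# column per candidate next token, in VOCAB order. A real GPT model computes
# such scores with a transformer over the entire preceding context.
LOGITS = {
    "<s>":       [0.0, 3.0, 0.5, 0.1, 0.0],
    "the":       [0.0, 0.1, 2.5, 0.3, 0.0],
    "patient":   [0.0, 0.2, 0.1, 2.0, 0.5],
    "recovered": [0.0, 0.1, 0.1, 0.1, 3.0],
    ".":         [0.0, 0.0, 0.0, 0.0, 0.0],
}

def softmax(scores):
    """Turn raw scores into a probability distribution."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability assigned to the token that actually came next."""
    return -math.log(softmax(logits)[target_index])

def sequence_loss(tokens):
    """Mean next-token cross-entropy over a training sequence."""
    pairs = list(zip(tokens, tokens[1:]))
    return sum(cross_entropy(LOGITS[prev], VOCAB.index(nxt)) for prev, nxt in pairs) / len(pairs)

def generate_greedy(max_tokens=10):
    """Autoregressive generation: append the most probable next token, one at a time."""
    tokens = ["<s>"]
    for _ in range(max_tokens):
        probs = softmax(LOGITS[tokens[-1]])
        tokens.append(VOCAB[probs.index(max(probs))])
        if tokens[-1] == ".":
            break
    return tokens

if __name__ == "__main__":
    print(generate_greedy())
    print(round(sequence_loss(["<s>", "the", "patient", "recovered", "."]), 3))
```

Training adjusts the model’s parameters so that sequences seen in the training data receive a lower loss; a plausible sentence therefore scores a lower loss than an implausible one under a well-trained model.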

The main components of ChatGPT are shown in the overview in Figure 3. The transformer is a deep learning architecture designed to process sequential data, such as text, with high speed and efficiency. It was first proposed in 2017 in the article “Attention Is All You Need” by Vaswani and colleagues at Google, and it has become the basis for many state-of-the-art machine learning models.

The main idea of the transformer is to replace recurrence, the traditional approach to sequential data processing, with self-attention. The transformer uses multiple layers of self-attention, each of which attends to all elements of the input sequence. Each self-attention layer computes, for every element of the input sequence, weights that express how much attention it pays to the other elements in the sequence, using three components:

- Query: a vector derived from each element that is compared with the keys of all elements to calculate the attention weights;

- Key: a vector derived from each element against which the queries are compared;

- Value: a vector derived from each element that carries the information passed on to the output.

The attention weights are then used to form a weighted sum of the values, which is the output representation of each element of the input sequence.
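The query, key and value steps can be illustrated with scaled dot-product self-attention, the form used in the transformer. The sketch below is written in plain Python for readability; the projection matrices in the usage example are hypothetical identity matrices, and real models use learned weights, multiple attention heads and tensor libraries. The optional causal mask corresponds to the masking used in GPT-style models.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x m) by matrix b (m x p), both given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v, causal=False):
    """Scaled dot-product self-attention; x holds one vector per token."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))
    outputs, weights = [], []
    for i, q_i in enumerate(q):
        # Compare this token's query with every token's key.
        scores = [sum(a * b for a, b in zip(q_i, k_j)) / scale for k_j in k]
        if causal:
            # Hide later tokens, as in GPT-style (decoder-only) models.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        w_i = softmax(scores)
        weights.append(w_i)
        # Output = attention-weighted sum of the value vectors.
        outputs.append([sum(w * v_j[c] for w, v_j in zip(w_i, v)) for c in range(len(v[0]))])
    return outputs, weights

if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
    eye = [[1.0, 0.0], [0.0, 1.0]]           # hypothetical identity projections
    _, w = self_attention(x, eye, eye, eye, causal=True)
    for row in w:
        print([round(p, 3) for p in row])
```

With the causal mask, the first token can only attend to itself, so its attention weights are [1.0, 0.0, 0.0]; without the mask, every token distributes some attention over all three tokens.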

With the help of self-attention, it is possible to detect relationships between different elements of the input sequence, including semantic and contextual dependencies. This allows more accurate results when processing sequential data such as texts. Of particular interest from a health science perspective is the new possibility of fine-tuning AI models on one’s own data and texts: GPT-3.5 Turbo, the model that powers the free version of ChatGPT, can be fine-tuned on custom data, which adapts it for specific uses (25). This could be valuable for manuscripts from large research projects, such as drug trials, or other health science projects, as the model used to help write the manuscript would be more familiar with the specific context of the data and science underlying the work.
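Fine-tuning requires training examples in a specific format. The sketch below assumes the JSON Lines chat format that OpenAI documents for fine-tuning GPT-3.5 Turbo (one JSON object per line, each with a `messages` list of `system`, `user` and `assistant` turns); the current OpenAI documentation should be checked before use, as formats can change. The system prompt and example texts are hypothetical, and the upload and fine-tuning steps themselves are not shown.

```python
import json

# Hypothetical system prompt for a research team's assistant.
SYSTEM_PROMPT = "You are an assistant that drafts text for a clinical research team."

# Hypothetical prompt/response pairs; real training data would be curated,
# de-identified texts from the project itself.
EXAMPLES = [
    ("Rewrite for a Methods section: we gave drug X to 40 adults for 12 weeks.",
     "Forty adult participants received drug X for 12 weeks."),
    ("State the primary endpoint in one sentence.",
     "The primary endpoint was the change in symptom score at week 12."),
]

def to_chat_record(prompt, response, system=SYSTEM_PROMPT):
    """One training example in the chat 'messages' format."""
    return {"messages": [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": response},
    ]}

def to_jsonl(pairs):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(to_chat_record(p, r)) for p, r in pairs)

if __name__ == "__main__":
    print(to_jsonl(EXAMPLES))
```

Keeping the system prompt identical across examples, and identical to the one used later when querying the fine-tuned model, is a common design choice that keeps training and use consistent.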

Attention mechanisms for sequential data processing began to develop in the mid-2010s. One of the most important works in this direction was the 2014 article “Neural Machine Translation by Jointly Learning to Align and Translate” by Dzmitry Bahdanau, Kyunghyun Cho and Yoshua Bengio. In this paper, the authors proposed a new approach to machine translation in which an attention mechanism allowed the model to focus on the most informative parts of the input sequence when generating each word of the translation. Self-attention, in which a sequence attends to its own elements, built on this idea and became the core of the transformer.
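The attention mechanism of Bahdanau, Cho and Bengio scores each encoder state h_j against the previous decoder state s with an additive function, e_j = v_a · tanh(W_a s + U_a h_j), normalizes the scores with a softmax and uses the resulting weights to build a context vector. The minimal Python sketch below shows only this scoring step; the matrices W_a and U_a and the vector v_a are hypothetical stand-ins for parameters that the original model learns jointly with its recurrent encoder and decoder, which are omitted here.

```python
import math

def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def additive_attention(s_prev, encoder_states, w_a, u_a, v_a):
    """Bahdanau-style attention: e_j = v_a . tanh(W_a s + U_a h_j)."""
    ws = matvec(w_a, s_prev)  # decoder-state term, shared by all positions j
    scores = []
    for h_j in encoder_states:
        uh = matvec(u_a, h_j)
        scores.append(sum(v * math.tanh(a + b) for v, a, b in zip(v_a, ws, uh)))
    alphas = softmax(scores)  # alignment weights over source positions
    dim = len(encoder_states[0])
    # Context vector: alignment-weighted sum of the encoder states.
    context = [sum(a * h[d] for a, h in zip(alphas, encoder_states)) for d in range(dim)]
    return alphas, context

if __name__ == "__main__":
    eye = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical parameters
    alphas, context = additive_attention([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]],
                                         eye, eye, [1.0, 1.0])
    print([round(a, 3) for a in alphas], [round(c, 3) for c in context])
```

Unlike the transformer’s self-attention, here the queries come from the decoder and the keys and values from the encoder, so the model attends from the output sequence to the input sequence.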

The original transformer uses an encoder-decoder architecture, which allows it to be applied to various machine learning tasks. The encoder takes a sequence of input data and converts it into internal representations, which are then passed to the decoder. The decoder uses these representations, together with the outputs generated so far, to produce the output sequence. GPT models use only the decoder part of this architecture. The transformer also uses residual connections and layer normalization to stabilize training, a ReLU activation function in its feed-forward sublayers (GPT models use the related GELU activation), and dropout to reduce overfitting (24).
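In the original transformer, each sublayer is wrapped in a residual connection followed by layer normalization, the feed-forward sublayer uses a ReLU activation, and dropout is applied to the sublayer output during training. The Python sketch below assembles these pieces for the feed-forward sublayer of a single token vector. It is a simplified illustration under stated assumptions: the weight matrices are hypothetical, the learned gain and bias of layer normalization are omitted, and the "post-norm" arrangement of the original paper is used (many later GPT models normalize before the sublayer instead).

```python
import math
import random

def matvec(m, x):
    """Multiply matrix m (list of rows) by vector x."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def layer_norm(x, eps=1e-5):
    """Normalize one token vector to zero mean and unit variance (no learned gain/bias)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def relu(x):
    return [max(0.0, v) for v in x]

def feed_forward(x, w1, w2):
    """Position-wise feed-forward sublayer: expand, apply ReLU, project back."""
    return matvec(w2, relu(matvec(w1, x)))

def dropout(x, p, training, rng):
    """Inverted dropout: zero each value with probability p, during training only."""
    if not training or p == 0.0:
        return list(x)
    return [0.0 if rng.random() < p else v / (1.0 - p) for v in x]

def ffn_sublayer(x, w1, w2, p=0.1, training=False, rng=None):
    """Post-norm residual sublayer: LayerNorm(x + Dropout(FFN(x)))."""
    rng = rng or random.Random(0)
    y = dropout(feed_forward(x, w1, w2), p, training, rng)
    return layer_norm([a + b for a, b in zip(x, y)])

if __name__ == "__main__":
    w1 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 1.0]]  # hypothetical 2 -> 4 expansion
    w2 = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]        # hypothetical 4 -> 2 projection
    print(ffn_sublayer([1.0, 2.0], w1, w2))
    print(ffn_sublayer([1.0, 2.0], w1, w2, p=0.5, training=True))
```

At inference time dropout is switched off, which is why `training` defaults to `False`; the residual connection lets the original input pass through unchanged even when the sublayer output is partly dropped.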

The purpose of the ChatGPT model (26) is to accomplish various tasks and to provide a convenient and efficient tool for creating texts of various types, such as messages, emails, articles and other content, including manuscripts and research papers. Because the model is trained on a large number of different texts, it can generate texts with a high level of naturalness and grammatical correctness. The underlying GPT models were developed by OpenAI in several versions, including GPT, GPT-2, and GPT-3 (Figure 4). These models can make manuscript writing far easier, especially for large, intricate research projects with correspondingly large data sets, as is common in the health sciences. In this domain, huge data sets must be translated into a manuscript; GPT models may take some of this strain off researchers, simplify the writing process and therefore speed up the sharing of new and important medical findings.

GPT has 12 self-attention layers, GPT-2 has 12 to 48, and GPT-3 has 12 to 96, depending on model size; the largest versions of GPT-2 and GPT-3 have 48 and 96 layers, respectively.
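The number of layers alone does not determine model size; the width of each layer (the model dimension, d_model) matters as much. A common rule of thumb estimates the parameters in the transformer layers as roughly 12 × n_layers × d_model², ignoring embeddings and bias terms. The sketch below applies it using the layer counts and widths published for GPT (12 layers, 768), the largest GPT-2 (48 layers, 1,600) and the largest GPT-3 (96 layers, 12,288).

```python
def approx_params(n_layers, d_model):
    """Approximate transformer parameters, excluding embeddings and biases.

    Per layer: 4 * d_model**2 for the query, key, value and output projections
    of self-attention, plus 8 * d_model**2 for a feed-forward sublayer whose
    inner width is 4 * d_model.
    """
    return 12 * n_layers * d_model ** 2

MODELS = {
    "GPT": (12, 768),
    "GPT-2 (largest)": (48, 1600),
    "GPT-3 (largest)": (96, 12288),
}

if __name__ == "__main__":
    for name, (layers, width) in MODELS.items():
        print(f"{name}: {approx_params(layers, width):,} parameters (approx.)")
```

The estimates (about 85 million, 1.47 billion and 174 billion) are close to the reported sizes of 1.5 billion for GPT-2 and 175 billion for GPT-3; for the much smaller original GPT (reported at about 117 million), the token embeddings excluded by this formula make up a larger share of the total.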

ChatGPT, which is built on models from the GPT-3 family (GPT-3.5), generates highly fluent natural language and often accurate answers to user questions. In the future, this could be used to provide quick answers to questions in medical contexts or to help prepare health science manuscripts, for example by having the AI respond to a research question to produce a preliminary overview and structure for researchers to build on.

Challenges of AI-assisted manuscript writing and preparation

Misinformation and “Science Pollution”

Manuscript writing is an important skill that researchers need in order to communicate their findings, and each manuscript is supposed to be the product of one or more researchers. Authorship entails an original intellectual contribution, responsibility, and accountability for the published work (27), none of which can meaningfully be attributed to AI models. The currency of scientific findings is their originality, a feature that is always assessed in critical decisions such as peer review, research grant funding, hiring, tenure evaluation, and scientific awards (28). This originality is not guaranteed in AI-assisted manuscript writing performed by LLMs such as ChatGPT, which are essentially products of human coding and training (29) and hence lack originality. The scope of a model may also be limited to the knowledge domain or expertise of those who trained it, so AI models may lack the domain-specific knowledge required to write or prepare an effective manuscript in a particular subject area. A related issue is that AI models can perpetuate and amplify biases already present in their training data sets, which can lead to misinformation. The risk of misinformation is even higher because AI models can “hallucinate”, that is, produce fabricated information that appears legitimate at first glance (30). This is especially dangerous in the health sciences, where information must be evidence-based and well-founded: hallucinated information may make its way into manuscripts, reports, and research and harm the wider community.

Another challenge of AI-assisted manuscript writing is the lack of transparency and of access to the raw data that AI models use for data analyses, which makes it impossible to verify or interpret the output they generate. This is especially worrying given the hallucinations described above. This lack of verifiability can also contribute to “science pollution” by disseminating pseudo-scientific or unverified claims that spread quickly and negatively affect public health and policy decisions, as during the coronavirus disease 2019 (COVID-19) pandemic, when there was high pressure to generate novel data pivotal for coordinating public health responses (31-33). The threat is particularly serious given the increasing use of preprint servers to publish scientific results swiftly before peer review, as well as the existence of predatory journals that fail to meet high standards of scientific publishing. If hallucinated information makes its way into research, preprint servers in particular may become far less trustworthy and potentially dangerous sources.

Scientific integrity and ethics

In January 2023, a professor from the University of the Philippines Diliman (UP Diliman) posted on Facebook that a student’s paper may have been written with an AI tool, after two AI detection systems flagged it as possibly AI-generated (34). The post prompted numerous debates and negative reactions from educators and non-educators alike (35), leading the faculty members of the UP Diliman AI program to issue a statement (36). A columnist even published a conversation with a chatbot that touched on education and research concerns such as cheating and plagiarism (37).

Given this, it is worth asking whether AI is detrimental to research and education. We entered the prompt “Is AI detrimental to student education and research? Answer in 300 words.” into ChatGPT, and it generated the following result:

“AI has the potential to revolutionize student education and research by providing new ways of learning and new methods of analyzing data. However, there are also concerns that AI may be detrimental to student education and research if not implemented properly.

One concern is that AI may replace human teachers and researchers, leading to a loss of personalization and human interaction in education and research. This could result in a more impersonal and less engaging learning experience for students. Additionally, AI-based systems may not be able to fully understand the nuances and complexity of certain subjects, leading to a lack of understanding or inaccurate conclusions in research.

Another concern is that AI may perpetuate biases and inequalities in education and research. AI systems are only as unbiased as the data they are trained on, and if the data is biased, the AI will also be biased. This could lead to unequal student opportunities and a lack of diversity in research findings.

Additionally, AI may lead to a lack of critical thinking and creativity in students, as they may rely too heavily on AI-based systems to solve problems and make decisions. This could lead to a lack of innovation and creativity in the workforce and in research. However, it is important to note that AI can also be used to improve student education and research by providing personalized learning experiences, analyzing large amounts of data, and identifying patterns and trends in research. It can also help to reduce bias and increase diversity in research findings by providing unbiased data analysis.

Overall, AI has the potential to revolutionize student education and research, but it is important to approach its implementation with caution and to consider the potential negative impacts it may have. It is essential to ensure that AI is used in a way that enhances student education and research, rather than replacing human teachers and researchers” (38).

As this “response” shows, the answer is concise and coherent, although it lacks specificity and depth. It could pass for a high school essay, as it is difficult to distinguish from the writing of a typical high school student. At the university level, however, it may fall short of what professors expect from students, especially when a question demands in-depth thinking and reflective insight; passing off such text as one’s own would threaten the integrity of scientific writing at this level.

Interestingly, the above “answer” echoes some of the sentiments of the UP Diliman AI program faculty (36). There are recognizable disadvantages to the presence of AI in research and education. But what do these disadvantages and ethical challenges include?

For example, if students and researchers use AI to write their manuscripts for them, or to plagiarize, fabricate, or falsify data, it can undermine their education and the scientific integrity of their research domain. Moreover, continued AI use may suppress intuitive knowledge, increase dependency on AI, and reduce human involvement in writing, research and learning.

However, AI should not be condemned outright. Rather, its use in education and research should be regulated so that its advantages are maximized. These advantages can be realized if AI is used to complement research and studies and to supplement what students learn in the classroom, in the laboratory, or in the field. This can create a more holistic and meaningful learning, research and scientific experience, something earlier generations did not have the opportunity to capitalise on (39). AI can also help make manuscripts more concise and understandable. Such uses are not only ethically acceptable but desirable.

In other words, the balance can be found by viewing AI as a tool. Like a hammer, it can be used to create or to destroy; as with many ethical questions, it cannot be judged as inherently good or bad. The manner in which it is used determines whether the result is beneficial or detrimental. Following ethical guidelines when using AI tools to prepare and write manuscripts can greatly help to ensure academic integrity and to prevent plagiarism, fabrication, and falsification (40). As educators and experienced researchers, we are responsible for guiding our students and younger colleagues in the ethical use of these AI technologies. Past ethical failures in AI use that have threatened academic and scientific integrity should not prevent us from recognizing the positive aspects of AI in research and education.

The responsibility for upholding scientific integrity and ethics should not rest on the shoulders of educators and senior researchers alone. Leaders and policymakers must also share the burden, since they determine the priorities, principles, and policies regarding the use of AI in education, research, scientific standards, and academia at institutional, national, and even global levels. The challenge may be encouraging these leaders and policymakers to take steps that lead to positive change. Without their concerted efforts, the benefits of AI in education, academia, scientific writing and research will not be fully realized, and AI might grow into a more serious threat through ignorance, abuse, and misuse, as well as through a widening technological gap between those who have access and those who do not, deepening inequalities across many domains. A notable example of AI policy is the set of principles adopted by Nature and other Springer Nature journals (41), which do not accept LLMs such as ChatGPT as authors of research papers (42). This proactive approach by a publisher discourages researchers from using LLMs unethically and promotes accountability. Similarly, Elsevier and Cambridge University Press allow the use of LLMs and related apps for academic writing, but such tools cannot be listed as authors or co-authors, because they cannot take responsibility for the content of a manuscript in the way humans can (43). Such differences in policy can lead to inconsistent integrity standards across journals and show that the ethics of AI use are viewed differently across the publishing landscape. More overarching policies from leaders would help to unify standards for AI use.

According to the United Nations Educational, Scientific and Cultural Organization (UNESCO) (44), “Artificial Intelligence (AI) has the potential to address some of the biggest challenges in education today, innovate teaching and learning practices, and accelerate progress (…). The promise of “AI for all” must be that everyone can take advantage of the technological revolution underway and access its fruits, notably in terms of innovation and knowledge.”

Ultimately, AI in education, research and scientific writing will continue to grow and evolve. We must learn to live with its presence in our education institutions and modern research. Integrating AI into these sectors effectively and ethically, while maintaining scientific integrity, will be difficult, but it is a challenge worth undertaking.

Outlook and recommendations

Strategies for better AI-use

These strategies are intended to help researchers and the wider scientific community familiarize themselves with AI and use it in a non-harmful and ethical way.

- Familiarize oneself with the use of GPT language models for manuscript writing: as GPT is a relatively new technology, it is important for authors to understand how it works and how it can benefit their writing process. Researchers can start by reading relevant articles and tutorials on GPT-based writing. As with any technology, a user has to provide input for the tool to generate output, so the output depends heavily on what is put in; if little information is provided, the result may also be limited (45);

- Use GPT as a tool for generating initial drafts: GPT language models can be used to generate initial drafts that can be refined and edited by authors. This can help authors to save time and reduce the burden of writing from scratch. Modifying an already drafted manuscript would streamline the process for any author (46);

- Use GPT for brainstorming and generating new ideas: GPT can help authors generate new ideas and perspectives that they may not have considered before, which can lead to more creative and innovative manuscripts. It has been shown that OpenAI’s GPT can produce different outputs from the same input data (47);

- Use GPT to help identify potential errors and inconsistencies in the manuscript: by inputting the manuscript into GPT, the program can identify grammatical errors, spelling mistakes, and other issues that may have been overlooked by the human author (48). This can help to improve the accuracy and clarity of the manuscript, as well as ensure that it adheres to established standards and guidelines;

- Collaborate with GPT developers: researchers can collaborate with GPT developers to improve the quality of language models and to tailor them to specific research domains.
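One standard mechanism that explains why the same input can yield different outputs is temperature sampling: instead of always choosing the most probable next token, the model draws a token at random from its predicted distribution, with a temperature parameter controlling how spread out that distribution is. ChatGPT’s exact decoding settings are not fully public, so the Python sketch below only illustrates the general idea; the three scores are hypothetical.

```python
import math
import random

def sample_next(logits, temperature, rng):
    """Pick a next-token index; temperature <= 0 means greedy (deterministic)."""
    if temperature <= 0:
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [value / temperature for value in logits]
    top = max(scaled)
    weights = [math.exp(value - top) for value in scaled]  # unnormalized softmax
    return rng.choices(range(len(logits)), weights=weights, k=1)[0]

if __name__ == "__main__":
    logits = [2.0, 1.5, 1.0]  # hypothetical scores for three candidate words
    rng = random.Random(42)
    print([sample_next(logits, 1.0, rng) for _ in range(10)])  # varies between draws
    print([sample_next(logits, 0.0, rng) for _ in range(10)])  # always index 0
```

Higher temperatures flatten the distribution and make less likely words more frequent, which can help when brainstorming; lower temperatures make the output more predictable, which may be preferable when checking a manuscript for errors.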

Recommended regulations for AI-use

These recommendations have been drawn up to support the regulation of AI use, with the aim of ensuring that AI is used ethically and transparently.

- Follow ethical guidelines for using GPT: as with any technology, it is important to use GPT responsibly and ethically. Researchers should be aware of the potential risks and limitations of GPT, such as the possibility of bias, invasion of privacy, and harmful language, which is why human oversight is needed (45,46). Recommendations such as those proposed for researchers and users by Zhou et al. (49), for ‘smart leaders’ by Weinstein in an article for Forbes (50), or for campus use by the Hackworth Fellows at the Markkula Center for Applied Ethics (51) can serve as ethical guidelines. Policymakers, however, should strive to create overarching and comprehensive ethical guidelines for GPT that cover researchers, users, academics, and relevant professions. This would create a unified set of guidelines that can be applied more widely and consistently;

- Acknowledge the use of GPT in manuscripts: when using GPT for manuscript writing, authors should disclose the use of the technology and provide appropriate citations. This can be done in the Materials and Methods section, in the Acknowledgments, or in a separate disclosure statement. Some researchers, as well as the policies of various journals, hold that AI cannot be listed as an author because it is not recognized as an author under copyright law (52). This does not, however, settle whether AI should never be credited as an author of an academic paper. Shahriar and Hayawi reported that some publishers, including Taylor & Francis, are reviewing their policies on listing ChatGPT as a co-author (46), and, as previously mentioned, many journals already have a concrete policy;

- Address privacy concerns when using ChatGPT: safety measures should always be in place, and both users and developers of ChatGPT should ensure that data are handled responsibly and that private information is protected;

- Follow journal guidelines for manuscript submission: authors should ensure that their manuscripts meet the specific guidelines of the journal they are submitting to, including any requirements related to the use of technology or language models.

Furthermore, GPT is still a relatively new technology in manuscript writing, and its full potential has yet to be explored. Authors are therefore advised to stay informed about new advancements in the field and to continuously evaluate how useful GPT is in their writing process.

Additionally, it is crucial to maintain a balance between the use of GPT and human input in manuscript writing (53). GPT can help generate text quickly, but authors must preserve their own voice and style throughout the manuscript. Shahriar and Hayawi note that although ChatGPT may speed up research and the writing of scientific papers, human involvement is still required because ChatGPT can also generate misleading information. Authors should also consider the potential legal implications of using GPT in manuscript writing. For instance, copyright infringement issues may arise if the generated text is not original or is not properly attributed.

Lastly, GPT is not a one-size-fits-all solution for manuscript writing. Because GPT can produce different results for the same topic and data (47), authors should evaluate their specific needs and circumstances before deciding to use it in their writing process. A summary of our strategies and recommendations can be found in Table 1.

Table 1

| Type | Item |
|---|---|
| Strategy | Become familiar with AI before implementing it |
| Strategy | Use GPT to create first drafts of manuscripts |
| Strategy | Use GPT for brainstorming and idea generation |
| Strategy | Use GPT to check manuscripts for errors |
| Strategy | Work with GPT developers to improve GPT |
| Recommendation | Create new and follow existing ethical guidelines |
| Recommendation | Cite and acknowledge AI use correctly |
| Recommendation | Implement safety measures for privacy |
| Recommendation | Adhere closely to journal guidelines |

AI, artificial intelligence; GPT, Generative Pre-Trained Transformer.

Conclusions

As with any technology, AI can be used both for good and for bad. This paper has explored aspects of AI that represent positive developments for the scientific community, as well as aspects that challenge and threaten scientific integrity. The most important point to remember is that innovation in itself is neutral: it is neither inherently helpful nor inherently harmful. How a technology is used, and by whom, determines whether its impact is positive or negative. The power lies with the scientific community to wield AI as a tool for good and to prevent it from harming academic honesty or quality.

Therefore, the scientific community should exercise caution as AI technology becomes increasingly commonplace. In both diagnostics and scientific writing, it is vital that ethics are constantly re-assessed, goals are adjusted, and checks and balances are maintained. AI has the power to revolutionize the scientific community and is already doing so. It offers the opportunity to vastly improve scientific research, methods, technologies, diagnostics, and tools. It is therefore imperative that, while remaining cautious, we continue to develop and improve AI technologies and the ways we work with them. Fear of negative consequences should not prevent the scientific community from taking advantage of the benefits of AI, because those benefits can reach everyone. The recommendations in the previous section offer first steps toward working with AI beneficially.

Acknowledgments

The recommendations in this paper were developed by some members of USERN, a group of authors from different parts of the world who are experiencing in real time the AI revolution that is affecting our fields. As UJAs (USERN Junior Ambassadors), our priority is to improve our field. We hope that this paper helps other scientists find the balance at which AI use can benefit all while preserving what is sacred to our field: integrity and honesty.

Funding: None.

Footnote

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-23-63/prf

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-23-63/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Salvagno M, Taccone FS, Gerli AG. Can artificial intelligence help for scientific writing? Crit Care 2023;27:75. [Crossref] [PubMed]

- Pham TC, Luong CM, Hoang VD, et al. AI outperformed every dermatologist in dermoscopic melanoma diagnosis, using an optimized deep-CNN architecture with custom mini-batch logic and loss function. Sci Rep 2021;11:17485. [Crossref] [PubMed]

- Wodecki B. UBS: ChatGPT is the Fastest Growing App of All Time [Internet]. AI Business. 2023. Available online: https://aibusiness.com/nlp/ubs-chatgpt-is-the-fastest-growing-app-of-all-time

- The Tech Insider. ChatGPT on a Scientific Paper as a Co-author? Does It Even Make Sense? [Internet]. Medium. 2023 [cited 2023 Aug 27]. Available online: https://pub.towardsai.net/chatgpt-on-a-scientific-paper-as-a-co-author-does-it-even-make-sense-a60de41bbaf6

- World Association of Medical Editors. Chatbots, ChatGPT, and Scholarly Manuscripts || WAME [Internet]. 2023. Available online: https://wame.org/page3.php?id=106

- Marušić A. JoGH policy on the use of artificial intelligence in scholarly manuscripts. J Glob Health 2023;13:01002. [Crossref] [PubMed]

- Sample I. Science journals ban listing of ChatGPT as co-author on papers [Internet]. The Guardian. 2023. Available online: https://www.theguardian.com/science/2023/jan/26/science-journals-ban-listing-of-chatgpt-as-co-author-on-papers

- O'Connor S. Open artificial intelligence platforms in nursing education: Tools for academic progress or abuse? Nurse Educ Pract 2023;66:103537. [PubMed]

- Elsevier. Guide for authors - Antiviral Research - ISSN 0166-3542 [Internet]. www.elsevier.com. 2023 [cited 2023 May 23]. Available online: https://www.elsevier.com/journals/antiviral-research/0166-3542/guide-for-authors

- PNAS Updates. The PNAS Journals Outline Their Policies for ChatGPT and Generative AI [Internet]. 2023. Available online: https://www.pnas.org/post/update/pnas-policy-for-chatgpt-generative-ai

- Huh S. Are ChatGPT’s knowledge and interpretation ability comparable to those of medical students in Korea for taking a parasitology examination?: a descriptive study. J Educ Eval Health Prof 2023;20:1. [Crossref] [PubMed]

- Byrd LJ, Smith C, Kunz R, et al. Big Data, Machine Learning, Artificial Intelligence [PowerPoint]. U.S. Department of Energy Office of Scientific and Technical Information. May 5, 2020. doi: 10.2172/1617329.

- Winograd T. Understanding natural language. Cognitive Psychology 1972;3:1-191.

- Oh AH, Rudnicky AI. Stochastic language generation for spoken dialogue systems. Association for Computing Machinery. 2000 Jan 1. Available online: https://aclanthology.org/W00-0306/

- Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput 1997;9:1735-80. [Crossref] [PubMed]

- Yu L, Zhang W, Wang J, et al. SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient. Proceedings of the AAAI Conference on Artificial Intelligence. 2017. doi: 10.1609/aaai.v31i1.10804.

- Radford A, Narasimhan K, Salimans T, et al. Improving Language Understanding by Generative Pre-Training. OpenAI [Internet]. 2018; Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf

- Brown TB, Mann B, Ryder N, et al. Language Models are Few-Shot Learners. arXiv:2005.14165v4 [Preprint]. 2020. Available online: https://doi.org/10.48550/arXiv.2005.14165

- Kashyap R, Kashyap V, Narendra CP. GPT-Neo for commonsense reasoning-a theoretical and practical lens. arXiv:2211.15593v2 [Preprint]. 2022. Available online: https://doi.org/10.48550/arXiv.2211.15593

- Haines TSF, Mac Aodha O, Brostow GJ. My Text in Your Handwriting. ACM Trans Graph 2016; [Crossref]

- Yuen MC, Ng KF, Lau KM, et al. Design an Intelligence System for Early Identification on Developmental Dyslexia of Chinese Language. Proceedings of the 19th International Conference on Wireless Networks and Mobile Systems. 2022 [cited 2023 Jun 18]. Available online: https://www.scitepress.org/Papers/2022/112815/112815.pdf

- Ghosh T, Sen S, Obaidullah SM, et al. Advances in online handwritten recognition in the last decades. Computer Science Review 2022;46:100515.

- Al-Dmour A, A, Abuhelaleh M. Arabic handwritten word category classification using bag of features. Journal of Theoretical and Applied Information Technology 2016;89:320-8.

- ChatGPT. Most important terms related to ChatGPT [Internet]. ChatGPT. 2023 [cited 2023 Sep 13]. Available online: https://chatgpt.ch/en/technical-terms/

- Edwards B. You can now train ChatGPT on your own documents via API [Internet]. Ars Technica. 2023 [cited 2023 Aug 28]. Available online: https://arstechnica.com/information-technology/2023/08/you-can-now-train-chatgpt-on-your-own-documents-via-api/#:~:text=Developers%20can%20now%20bring%20their

- Hilčenko SJ. CHATGPT ABOUT CHATGPT. HORIZONTI / HORIZONS 2023 [Internet]. 2023 Jan 1 [cited 2023 Sep 13]; Available online: https://www.academia.edu/104717961/CHATGPT_ABOUT_CHATGPT

- da Silva JA, Dobránszki J. How Authorship is Defined by Multiple Publishing Organizations and STM Publishers. Account Res 2016;23:97-122. [Crossref] [PubMed]

- Shibayama S, Wang J. Measuring originality in science. Scientometrics. 2019;122:409-27.

- Sejnowski TJ. Large Language Models and the Reverse Turing Test. Neural Comput 2023;35:309-42. [Crossref] [PubMed]

- TELUS international. Generative AI Hallucinations: Explanation and Prevention [Internet]. 2023. Available online: https://www.telusinternational.com/insights/ai-data/article/generative-ai-hallucinations

- Naeem SB, Bhatti R, Khan A. An exploration of how fake news is taking over social media and putting public health at risk. Health Info Libr J 2021;38:143-9. [Crossref] [PubMed]

- Chavda VP, Sonak SS, Munshi NK, et al. Pseudoscience and fraudulent products for COVID-19 management. Environ Sci Pollut Res Int 2022;29:62887-912. [Crossref] [PubMed]

- Rzymski P, Nowicki M, Mullin GE, et al. Quantity does not equal quality: Scientific principles cannot be sacrificed. Int Immunopharmacol 2020;86:106711. [Crossref] [PubMed]

- Subingsubing K. Student’s rambling essay triggers AI question in UP [Internet]. INQUIRER.net. 2023. Available online: https://newsinfo.inquirer.net/1718304/students-rambling-essay-triggers-ai-question-in-up

- Manila C. Alleged use of AI to complete student’s final essay sparks debate at University of the Philippines [Internet]. Yahoo News. 2023 [cited 2023 May 16]. Available online: https://sg.news.yahoo.com/alleged-ai-complete-student-final-062143228.html

- Diliman Information Office. Statement by the Faculty of UP Diliman Artificial Intelligence (AI) Program on the Use of AI Tools in the Academic Environment - University of the Philippines Diliman [Internet]. University of the Philippines Diliman. 2023. Available online: https://upd.edu.ph/statement-by-the-faculty-of-up-diliman-artificial-intelligence-ai-program-on-the-use-of-ai-tools-in-the-academic-environment/

- Lasco G. A conversation with ChatGPT [Internet]. INQUIRER.net. 2023 [cited 2023 May 16]. Available online: https://opinion.inquirer.net/160619/a-conversation-with-chatgpt?

- OpenAI. ChatGPT - Is AI detrimental to student education and research? Answer in 300 words [Internet]. OpenAI; 2022. Available online: https://chat.openai.com/chat

- Gocen A, Aydemir F. Artificial Intelligence in Education and Schools. Research on Education and Media 2021;12:13-21.

- Sy PA. Academic Integrity Guidelines [Internet]. Google Docs. 2023 [cited 2023 May 16]. Available online: https://upsilab.org/acadai

- Nature. Initial submission | Nature [Internet]. Nature; 2014. Available online: https://www.nature.com/nature/for-authors/initial-submission

- Nature. Tools such as ChatGPT threaten transparent science; here are our ground rules for their use. Nature 2023;613:612. [Crossref] [PubMed]

- Bilal M. https://twitter.com/MushtaqBilalPhD/status/1637051831695167493 [Internet]. Twitter. 2023 [cited 2023 May 16]. Available online: https://twitter.com/MushtaqBilalPhD/status/1637051831695167493

- UNESCO. Artificial intelligence in education | UNESCO [Internet]. www.unesco.org. 2019. Available online: https://www.unesco.org/en/digital-education/artificial-intelligence

- Hariri W. Unlocking the Potential of ChatGPT: A Comprehensive Exploration of its Applications, Advantages, Limitations, and Future Directions in Natural Language Processing. arXiv:2304.02017v5 [Preprint]. 2023. Available online: https://doi.org/10.48550/arXiv.2304.02017

- Shahriar S, Hayawi K. Let’s have a chat! A Conversation with ChatGPT: Technology, Applications, and Limitations. arXiv:2302.13817v4 [Preprint]. 2023. Available online: https://doi.org/10.48550/arXiv.2302.13817

- OpenAI. OpenAI Platform [Internet]. 2023. Available online: https://platform.openai.com/docs/guides/gpt/faq

- Kim SG. Using ChatGPT for language editing in scientific articles. Maxillofac Plast Reconstr Surg 2023;45:13. [Crossref] [PubMed]

- Zhou J, Müller H, Holzinger A, et al. Ethical ChatGPT: Concerns, Challenges, and Commandments. arXiv:2305.10646v1 [Preprint]. 2023. Available online: https://doi.org/10.48550/arXiv.2305.10646

- Weinstein B. Why Smart Leaders Use ChatGPT Ethically And How They Do It [Internet]. Forbes. 2023 [cited 2023 Aug 26]. Available online: https://www.forbes.com/sites/bruceweinstein/2023/02/24/why-smart-leaders-use-chatgpt-ethically-and-how-they-do-it/

- Santa Clara University. Guidelines for the Ethical Use of Generative AI (i.e. ChatGPT) on Campus [Internet]. 2023. Available online: https://www.scu.edu/ethics/focus-areas/campus-ethics/guidelines-for-the-ethical-use-of-generative-ai-ie-chatgpt-on-campus/

- Lee JY. Can an artificial intelligence chatbot be the author of a scholarly article? J Educ Eval Health Prof 2023;20:6. [Crossref] [PubMed]

- Castillo-González W. The importance of human supervision in the use of ChatGPT as a support tool in scientific writing. Metaverse Basic and Applied Research 2023;2:29.

Cite this article as: Morrison FMM, Rezaei N, Arero AG, Graklanov V, Iritsyan S, Ivanovska M, Makuku R, Marquez LP, Minakova K, Mmema LP, Rzymski P, Zavolodko G. Maintaining scientific integrity and high research standards against the backdrop of rising artificial intelligence use across fields. J Med Artif Intell 2023;6:24.