Exploring machine learning diagnostic decisions based on wideband immittance measurements for otosclerosis and disarticulation

Highlight box

Key findings

• Machine learning (ML) classifiers trained with artificial data can be used to interpret wideband acoustic immittance (WAI) data and achieve good results for differentiating normal, otosclerotic and disarticulated ears. They can also reveal the key features involved in the classification process.

What is known and what is new?

• ML classifiers trained with real measurement data hold the potential for improved diagnostic accuracy for middle ear disorders using WAI/wideband tympanometry data.

• Artificial data can be used to train classifiers (convolutional neural networks or extreme gradient boosting) and achieve classification results comparable to those obtained with real measurement data. The extracted key features used in classification correspond to visually verifiable criteria.

What is the implication, and what should change now?

• Analyzing key features not only makes the automatic classification of the ML algorithm more reliable, but also gives clinicians an indication of where the characteristics of WAI measurements can be found in certain pathologies. This supports clinical diagnosis in a meaningful way, not just as a black box method.

Introduction

Wideband acoustic immittance (WAI) and wideband tympanometry (WBT) hold great potential for improving noninvasive middle-ear diagnostics since they provide comprehensive and detailed information about the middle-ear system. Although traditional approaches, such as otoscopy and tympanometry, provide valuable insights into middle-ear function, they often lack the ability to accurately differentiate between various middle-ear pathologies. Previous studies have shown that WAI data can aid in diagnosing middle-ear pathologies such as superior canal dehiscence, ossicular fixation (including otosclerosis), and disarticulation (1), as well as otitis media based on WBT data. However, despite their potential benefits in middle-ear diagnostics, the clinical application of WAI and WBT remains limited, as they provide an extensive amount of information, and their analysis and interpretation remain challenging. Due to significant interindividual differences in middle-ear measurements, it is difficult to establish clear thresholds or criteria for differentiating between normal and pathological middle-ear conditions. Therefore, diagnosing and classifying middle-ear pathologies with sufficient accuracy based solely on visual inspection of WAI and WBT data is not yet possible.

To improve WAI and WBT analysis, recent papers have shown promising approaches in using classifiers based on machine learning (ML) and deep learning (DL), using measured WBT data as training datasets. These approaches have already excelled in image recognition, and in some instances even surpassed human clinicians in diagnosis accuracy. For example, in (2), a neural network algorithm using tympanic membrane images achieved higher accuracies than some clinicians in detecting acute otitis media (AOM) and otitis media with effusion (OME).

Besides the analysis quality, i.e., the classification performance, another main aspect to consider for prospective clinical application is classifier interpretability. To gain clinical acceptance for ML as an aid in differential middle-ear (ME) diagnosis, it will be necessary to comprehend the decisions made by the classifier and to allow clinicians to understand which regions of the data are responsible for the classifier’s decision.

Grais et al. (3) used several ML classifiers, namely k-nearest neighbor, support vector machines, random forest (RF), as well as DL-based classifiers like feedforward neural networks and convolutional neural networks (CNNs), to analyze 675 sets of measured WBT data in normal ears and ears with OME of patients aged 1–73 years. Furthermore, Grais et al. (3) used a Wilcoxon rank sum test to indicate where the WBT data show the most significant differences between normal and OME ears. They also identified important regions of the WBT data that the RF classifier used for its classification decisions. A different approach to differentiating normal ears from ears with OME was taken in (4), where a CNN was employed to analyze 1,014 sets of measured WBT data as well as WAI data at ambient pressure of children aged between 2 months and 12 years. Averaged saliency maps were generated to evaluate the most important features for the diagnostic groups. Nie et al. (5,6) classified otosclerotic and normal ears using measured WBT data with a total of 135 datasets, 80 patients with otosclerosis and 55 controls. To improve the classifier’s performance, synthetic WBT data were generated from the existing, limited number of measured WBT data using a data augmentation algorithm. In addition, (6) improved upon (5) by implementing Domain Adaptation with Gaussian Processes to guide parameter optimization during the classifier’s training process.

The present paper builds on (7) and aims to improve the implementation of ML for differential diagnostics as well as to contribute to a better understanding of how such classifiers work. In contrast to the papers mentioned above, (7) classified normal, otosclerotic and disarticulated ears using a CNN trained entirely with artificial, simulated WAI data. Besides energy reflectance (ER) (or absorbance) amplitude, which is the only quantity used in most papers, the WAI data used by (7) also contain pressure reflectance phase (R phase) as well as ear canal impedance amplitude (ZEC) and phase (ZEC phase). The artificial WAI data are simulated with a finite-element (FE) ear model and the Monte-Carlo method. We consider this unique approach a valuable tool that holds great promise for improving the non-invasive diagnosis of middle ear disease, for several reasons. First, proper ML training, especially for DL approaches, requires a substantial amount of realistic data. Especially for rare middle ear pathologies, it can be difficult, costly, and time-consuming to obtain enough real measurement data. Second, the FE model can also be used to obtain data for other middle ear pathologies, such as otitis media or superior canal dehiscence, or for hearing implants, such as stapes prostheses. In 2002, (8) published an FE model of the middle ear (ME); their results suggested that the FE model is potentially useful for studying the biomechanics of the ear and that it could be extended to the study of tympanic membrane perforation, otosclerosis or implantable hearing devices. In their later publication (9), the inner ear was added to their FE model, upon which (10) based their FE model and predicted postoperative hearing outcomes for two types of stapes prostheses (11). Third, simulated data can be obtained for different degrees of severity of pathologies. In particular, data on early stages of pathologies are difficult to obtain in real measurements, as patients often seek medical attention only when their condition has reached an advanced stage. Fourth, the simulation helps to understand cause-and-effect relationships between model parameters and how they affect the WAI data. For example, stiffening of the annular ligament results in increased reflectance, particularly in the low to mid-frequency range up to about 1–2 kHz. The study by (12) showed that simulated audiograms generated using an FE model were consistent with preoperative audiograms in cases of otosclerosis and incudostapedial joint discontinuity and concluded that their FE model-based findings provide insight into the pathogenesis of CHL due to otosclerosis, middle ear malformations, and traumatic injury.

Therefore, successfully using simulation-based WAI data for classifier training is desirable.

The paper emphasizes three main points:

- The potential success of using simulation-based WAI data for classifier training to classify normal, otosclerotic, and disarticulated ears;

- The applicability of XGB to WAI data;

- And the usefulness of ML interpretability algorithms in identifying relevant key features for differential diagnosis and increasing confidence in classifier decisions.

To the best of our knowledge, the ML algorithm extreme gradient boosting (XGB) has not yet been used to classify normal, otosclerotic, and disarticulated ears. We present this article in accordance with the STARD reporting checklist (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-10/rc).

Methods

WAI data

Ethics approval was not applicable for this study, as we did not perform any measurements. The test data used comprised real measurements on patients and are shown in Figure 1 along with their corresponding medians. They originate from the freely accessible WAI database described in (13), where measurement data are obtained at ambient pressure in the frequency range 226–6,000 Hz. Within the WAI database, the study (1) was the only study with data for ears with otosclerosis (14 samples) and disarticulation (6 samples) and the subjects ranged from age 22 to 72 years. The inclusion criteria for their study were an Air-Bone Gap (ABG) of more than 10 dB on pure-tone audiometry, an intact and healthy tympanic membrane consistent with middle ear on otoscopic examination and surgically confirmed diagnosis of the pathologies. For the otosclerotic and disarticulated ears, the mean ABG was larger than 30 dB for frequencies up to 1,000 Hz. The pathologic test data set therefore consists of patients with significant disease severity, making it well suited for our study as we want to validate our method with clear cases before including more diverse pathologic scenarios. Differential middle ear diagnosis using WAI data and ML is a relatively new and challenging field. After filtering data for adults and using different criteria for plausibility, such as consistency in the reported ZEC and ER data, the study (7) provided a total of 452 datasets: 440 normal ears, 7 otosclerosis ears and 5 disarticulation ears.

For training and validation data, we did not use the experimental data from the WAI database. Especially for the pathological cases, the WAI database contains data from few individuals, but ML and especially DL approaches require large data sets for proper model training and good performance on new data. Therefore, the virtual WAI data presented and defined in (7) are used for training and validation, including the WAI measurements ZEC, ZEC phase, ER and R phase.

The virtual data were obtained through the use of Monte Carlo simulations and the FE model from (14). To limit the simulated data to plausible data, literature-based cutoff limits for the simulated stapes transfer function, umbo transfer function, and ER were applied (15,16). In addition, limits for relative changes due to otosclerosis and disarticulation were used (1). Out of the original 400,000 virtual subjects, 79 remained within all defined limits and were therefore plausible in both normal and pathological manifestations. Pathological manifestation is characterized for otosclerotic ears by the fact that in the simulation, the stiffness parameters of the annular ligament are increased by a factor of 10,000 compared to the value in the normal ear. In simulated disarticulated ears, the stiffness, and damping parameters of the incudostapedial joint are zero. To augment the data and account for variations due to probe insertion for each of the 79 remaining virtual subjects, 40 variations were simulated by varying the ear canal length and ear canal area, resulting in 3,160 datasets for each manifested case. Therefore, the training data consist of 3,160 datasets per class, resulting in a total of 9,480 datasets, which are displayed in Figure 1 with the median and 10th and 90th percentiles.

The measured quantities are distributed at 96 logarithmically spaced datapoints between 226 and 6,000 Hz. Since simulated and measured values for ER magnitude and R phase differ too greatly above 3 kHz, which was attributed to large interindividual variability in the tympanic cavity in (7), these quantities are only displayed up to 3 kHz. Finally, min-max scaling was applied based on training and test data to normalize the used data to values between 0 and 1 with normalization for ER, R phase and ZEC phase as well as logarithmic ZEC magnitude. Normalization is performed based on all data for training. The formulas to recalculate the original values are provided in Table 1.

Table 1

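| Criteria | Offset | Scale | Normalized value |
|---|---|---|---|
| ER | 1.3557e−04 | 0.9776 | $V_{n,\mathrm{ER}} = (V_{o,\mathrm{ER}} - \mathrm{Offset}_{\mathrm{ER}}) \times \mathrm{Scale}_{\mathrm{ER}}$ |
| ZEC | 88.0527 | 0.0113 | $V_{n,\mathrm{ZEC}} = [20 \times \log_{10}(V_{o,\mathrm{ZEC}}) - \mathrm{Offset}_{\mathrm{ZEC}}] \times \mathrm{Scale}_{\mathrm{ZEC}}$ |
| R phase | −16.9489 | 0.0547 | $V_{n,\mathrm{R\,phase}} = (V_{o,\mathrm{R\,phase}} - \mathrm{Offset}_{\mathrm{R\,phase}}) \times \mathrm{Scale}_{\mathrm{R\,phase}}$ |
| ZEC phase | −2.3286 | 0.2275 | $V_{n,\mathrm{ZEC\,phase}} = (V_{o,\mathrm{ZEC\,phase}} - \mathrm{Offset}_{\mathrm{ZEC\,phase}}) \times \mathrm{Scale}_{\mathrm{ZEC\,phase}}$ |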

The offset is set to the minimum value of the corresponding criteria, and the scale factor is computed as 1 divided by the range (max–min) of the corresponding criteria. R phase and ZEC phase in radians. ZEC, ear canal impedance; WAI, wideband acoustic immittance; ER, energy reflectance.
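The normalization in Table 1 and its inverse can be sketched as follows. This is a minimal illustration rather than the code used in the study: the function names and the dictionary layout are assumptions, and only the offsets, scales and formulas are taken from Table 1.

```python
import math

# Offsets and scales copied from Table 1 (phases in radians).
TABLE_1 = {
    "ER": {"offset": 1.3557e-04, "scale": 0.9776, "log": False},
    "ZEC": {"offset": 88.0527, "scale": 0.0113, "log": True},
    "R phase": {"offset": -16.9489, "scale": 0.0547, "log": False},
    "ZEC phase": {"offset": -2.3286, "scale": 0.2275, "log": False},
}


def normalize(quantity, value):
    """Map an original value to its normalized value using Table 1."""
    p = TABLE_1[quantity]
    # The ZEC magnitude is converted to 20*log10 before offset and scaling.
    x = 20.0 * math.log10(value) if p["log"] else value
    return (x - p["offset"]) * p["scale"]


def denormalize(quantity, normalized):
    """Invert normalize() to recover the original value."""
    p = TABLE_1[quantity]
    x = normalized / p["scale"] + p["offset"]
    return 10.0 ** (x / 20.0) if p["log"] else x


# Round trip for a hypothetical ER value of 0.5.
print(denormalize("ER", normalize("ER", 0.5)))
```

Because the scale is the reciprocal of the data range, the smallest value of each quantity maps to 0 and the largest to approximately 1, up to the rounding of the tabulated constants.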

Classifiers

The first classifier used, XGB, is a scalable ML algorithm based on tree boosting (17). Decision trees use certain features for splitting and therefore classifying the data. During the boosting process, more decision trees are created during every boost round, decreasing the loss function along its gradient and hence improving on the residuals of the trees created in the previous boosting round. XGB can be used for different tasks, such as classification, regression, and ranking. It has already been used in various data science competitions and achieves state-of-the-art results (18). XGB stands out due to fast computation and applicability for small to large datasets and is available as an open-source library for the gradient boosting framework. For this paper, XGB version 1.7.3 is used.

The second classifier used is a CNN. CNNs have been developed for image recognition and are currently used for a variety of visual or multidimensional tasks. By using convolutional operations, CNNs are capable of learning complex or abstract concepts by automatically extracting relevant features from the input data. CNNs are available via open source libraries and have been implemented for this paper through the TensorFlow keras library version 2.9.0.

Evaluation metrics

Common metrics for evaluating classifier performance include accuracy, precision, recall, specificity, F1 score, and AUC. All of these except AUC, which requires the predicted class probabilities, can be calculated from the results displayed in the confusion matrix. For better transparency, the confusion matrices are displayed, since published studies do not always report every evaluation metric of interest.

For the overall scores, the averaging can be done with all classes weighted equally (macro-score) or weighted by class size (micro-score). Using the micro-score can result in misleading overall scores when testing on data containing heavily underrepresented groups, because classifiers can then achieve high overall scores even when they perform badly at detecting pathological cases. Transparency about the actual results per class and how the overall score is composed becomes more important as more classes are classified. Again, for multiclass classification, the confusion matrices provide more information about wrongly predicted classes.

For this study, the resulting confusion matrices and all metrics are displayed except for AUC, which is more common for binary classification. Macro-recall and macro-F1-score are preferred to the overall score due to the low prevalence of pathologies in the test data (7 otosclerotic and 5 disarticulation datasets out of a total of 452 sets). Macro-recall shows screening performance, and the macro-F1-score also takes precision into account.
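How the per-class and macro-averaged metrics follow from a confusion matrix can be sketched as below. The confusion matrix is hypothetical and chosen only to show the effect of class imbalance; it is not taken from this study, and the function names are illustrative.

```python
def per_class_metrics(cm):
    """Return precision, recall, specificity and F1 for every class.

    cm[i][j] is the number of samples of true class i predicted as class j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp
        fp = sum(cm[i][k] for i in range(n)) - tp
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        metrics.append({"precision": precision, "recall": recall,
                        "specificity": specificity, "f1": f1})
    return metrics


def macro_average(metrics, name):
    """Average one metric over classes, each class weighted equally."""
    return sum(m[name] for m in metrics) / len(metrics)


def accuracy(cm):
    """Overall accuracy: correctly predicted samples over all samples."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


# Hypothetical, imbalanced confusion matrix (not from the study).
# Rows and columns: normal, otosclerosis, disarticulation.
cm = [[80, 12, 8],
      [1, 4, 0],
      [0, 0, 5]]
metrics = per_class_metrics(cm)
print(round(accuracy(cm), 3))
print(round(macro_average(metrics, "recall"), 3))
print(round(macro_average(metrics, "f1"), 3))
```

In this example the overall accuracy is dominated by the large normal class, while the macro-F1-score is pulled down by the low precision of the two small pathological classes.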

Classifier architecture

Input values for both classifiers are the values for ER, ZEC magnitude, R phase and ZEC phase. Thus, each individual dataset consists of 384 (4×96) data points.
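The layout of the 384 input features can be sketched as follows. Two points are assumptions made for illustration: that the four quantities are concatenated in the order listed above, and that the 96 frequencies form an exact geometric grid that includes both 226 and 6,000 Hz.

```python
N_FREQ = 96
F_MIN, F_MAX = 226.0, 6000.0
# Assumed concatenation order of the four quantities.
QUANTITIES = ("ER", "ZEC magnitude", "R phase", "ZEC phase")

# Logarithmically spaced grid that includes both end points.
FREQUENCIES = [F_MIN * (F_MAX / F_MIN) ** (i / (N_FREQ - 1))
               for i in range(N_FREQ)]


def describe_feature(index):
    """Return (quantity, frequency in Hz) for a flat feature index 0..383."""
    if not 0 <= index < len(QUANTITIES) * N_FREQ:
        raise IndexError("feature index out of range")
    block, position = divmod(index, N_FREQ)
    return QUANTITIES[block], FREQUENCIES[position]


print(describe_feature(0))
print(describe_feature(383))
```

A mapping of this kind is what allows a feature importance value at a given index to be read as an importance at a given frequency of a given quantity.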

XGB-structure

The XGB model can be set up using only a few parameters and achieves good results even with mostly default parameters. For this study, the following parameters were used: num_parallel_tree (number of trees created independently in parallel per boosting round), subsample (fraction of the data used to create a tree), learning_rate (contribution of a tree to the final model), alpha (regularization parameter), max_depth (maximum depth of the trees; deeper trees can capture more complex patterns), and num_boost_round (number of sequential rounds in which trees are created). The parameters used are shown in Table 2.

Table 2

| Parameter | Value |
|---|---|
| num_parallel_tree | 1 |
| subsample | 0.1 |
| learning_rate | 0.3 |
| alpha | 6 |
| max_depth | 5 |
| num_boost_round | 50 |
| num_class | 3 |

XGB, extreme gradient boosting.

The XGB model is trained using all simulated data, and no split into training and validation sets is applied. It is trained in 50 boosting rounds, and in every round, 3 trees (1 parallel tree for each class) are created, resulting in a total of 150 decision trees. The evaluation metrics used for the training are the multiclass error (merror) and the multiclass log loss (mlogloss). With 50 boosting rounds, we observed convergence of the target function (accuracy of 99.8% and logloss of 0.05).
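The settings from Table 2 can be collected in a parameter dictionary as sketched below. The objective `multi:softprob` is an assumption, since the text does not state which multiclass objective was used; the call to `xgboost.train` is shown only as a comment so that the snippet runs without the library, and `X_train` and `y_train` are placeholder names.

```python
# Values from Table 2; keys follow the xgboost parameter names.
params = {
    "num_parallel_tree": 1,
    "subsample": 0.1,
    "learning_rate": 0.3,
    "alpha": 6,
    "max_depth": 5,
    "num_class": 3,
    "eval_metric": ["merror", "mlogloss"],
    # Assumption: the multiclass objective is not stated in the text.
    "objective": "multi:softprob",
}
num_boost_round = 50

# One tree per class and per parallel tree is built in each boosting round.
total_trees = (num_boost_round * params["num_class"]
               * params["num_parallel_tree"])
print(total_trees)  # 150

# With xgboost installed, training could then look like this:
#   import xgboost as xgb
#   dtrain = xgb.DMatrix(X_train, label=y_train)  # X_train: 9,480 x 384
#   booster = xgb.train(params, dtrain, num_boost_round=num_boost_round,
#                       evals=[(dtrain, "train")])
```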

Neural network architecture

The CNN is designed as a so-called lightweight CNN, a comparatively small model with a reduced number of layers and model parameters, which reduces computation time. The lower complexity helps to prevent overfitting and reduces the need for large datasets for proper model training. The input data are shaped as a 1D array with a height of 384, a width of 1 and 1 channel. The CNN structure is based on the CNN described in (7), which consists of only two sequential convolutional layers, each followed by a batch normalization layer and an activation layer using ‘ReLU’ activation. The convolutional layers each have 20 channels, a kernel of size [1,20], stride 1 and padding = “same” to keep the format the same. After the second convolutional layer, a flattening layer is used, followed by a fully connected dense layer limiting the outputs to 3 and a SoftMax layer for the final prediction of the class. This architecture results in a total of 31,643 trainable parameters. The entire structure of the CNN is described in Table 3. The implementation was performed in Python so that the iNNvestigate library (19) could subsequently be used to analyze the importance of the input features.

Table 3

| Layer | Channel | Kernel size [w, h] | Stride | Padding | Output size [ch, w, h] |
|---|---|---|---|---|---|
| Input layer | – | – | – | – | 1×1×384 |
| Convolution layer Conv2D | 20 | 1×20 | 1 | Same | 20×1×384 |
| Batch normalization layer | – | – | – | – | 20×1×384 |
| ReLU layer | – | – | – | – | 20×1×384 |
| Convolution layer Conv2D | 20 | 1×20 | 1 | Same | 20×1×384 |
| Batch normalization layer | – | – | – | – | 20×1×384 |
| ReLU layer | – | – | – | – | 20×1×384 |
| Flatten layer | – | – | – | – | [7,680] |
| Fully connected layer | – | – | – | – | [3] |
| SoftMax layer | – | – | – | – | [3] |

CNN, convolutional neural network; w, width; h, height; ch, channels.
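The layer sizes in Table 3 can be checked with a short parameter count. The sketch assumes the usual conventions: one bias per convolution filter and per dense output, and four values per channel in each batch normalization layer, of which the scale and shift are trainable and the moving mean and variance are not.

```python
import math


def conv_params(kernel_len, in_channels, filters):
    """Weights for every (input channel, filter) pair plus one bias each."""
    return kernel_len * in_channels * filters + filters


def same_padding_length(input_len, stride):
    """Output length of a convolution with 'same' padding."""
    return math.ceil(input_len / stride)


input_len, filters, kernel_len, n_classes = 384, 20, 20, 3

length = same_padding_length(input_len, 1)  # after convolution 1
length = same_padding_length(length, 1)     # after convolution 2
flat = filters * length                     # flatten layer output

conv1 = conv_params(kernel_len, 1, filters)
conv2 = conv_params(kernel_len, filters, filters)
bn_trainable = 2 * filters  # scale and shift per channel
bn_moving = 2 * filters     # moving mean and variance per channel
dense = flat * n_classes + n_classes

trainable = conv1 + conv2 + 2 * bn_trainable + dense
total = trainable + 2 * bn_moving
print(flat, trainable, total)
```

Under these assumptions, the count reproduces the 7,680 flattened outputs of Table 3, and the figure of 31,643 is obtained when the moving statistics of the two batch normalization layers are included; without them, the count is 31,563.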

For the training process, all available data samples are randomly divided into an 80% training set and a 20% validation set, without considering the virtual subjects to which each data sample belongs. The network was trained using stochastic gradient descent with momentum (SGDM) with a learning rate of 0.01, categorical cross-entropy as the loss function and accuracy as the evaluation metric. Training ran for 20 epochs with batches of size 30, and the training and validation loss decreased substantially in the first five epochs. After every batch, validation was performed, and batches were shuffled after every epoch. Early stopping and saving of the best weights were enabled but did not take effect, since both accuracy and loss for training and validation improved steadily toward convergence until training ended at 20 epochs. For both classifiers, an NVIDIA Quadro P2200 GPU was used, and the training duration was 1.3 s for XGB and 73 s for the CNN.
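The sample-wise 80/20 split and the resulting number of batches per epoch can be sketched as follows. The fixed random seed and the variable names are assumptions; only the dataset size, the split ratio and the batch size come from the text.

```python
import math
import random

n_samples = 3 * 79 * 40  # 9,480 simulated datasets
indices = list(range(n_samples))

rng = random.Random(0)   # fixed seed, assumed here for reproducibility
rng.shuffle(indices)

# Split by sample, without regard to the virtual subject of each sample.
n_train = n_samples * 80 // 100
train_idx, val_idx = indices[:n_train], indices[n_train:]

batch_size = 30
batches_per_epoch = math.ceil(len(train_idx) / batch_size)
print(len(train_idx), len(val_idx), batches_per_epoch)
```

Because the split ignores subject membership, variations of the same virtual subject can appear in both the training and the validation set, which is one reason the subject-wise cross-validation described under "Data scenarios" is informative.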

Interpretability

To better understand and verify the plausibility of the classifier, its decision-making process must be analyzed, and the importance of the used data features must be determined.

XGB uses multiple decision trees in which different split features can be found. Even though all decision trees are included in the classification, their weight in the classification depends on their contribution to the model’s accuracy. Such information, i.e., how often a split feature is used or how well it contributes to split data, is used in different already built-in feature importance measures for XGB. The available importance measures for features are ‘gain’, ‘total_gain’, ‘total_cover’, ‘cover’ and ‘weight’.

- ‘weight’: the number of times a feature is used to split the data across all trees.

- ‘gain’: the average gain across all splits in which the feature is used.

- ‘cover’: the average coverage across all splits in which the feature is used.

- ‘total_gain’: the total gain across all splits in which the feature is used.

- ‘total_cover’: the total coverage across all splits in which the feature is used.

We will concentrate on the feature importance ‘gain’, as this measure was observed to provide the clearest visual interpretation. The feature importances derived from the decision trees are fixed for all testing data, as the trees are constructed solely based on the training data and do not change during testing.
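The five importance measures listed above differ only in how the per-split statistics are aggregated. A minimal sketch with hypothetical split records is shown below; in the xgboost library itself, the corresponding values are returned by `Booster.get_score(importance_type=...)`.

```python
from collections import defaultdict


def importance_measures(splits):
    """Aggregate split statistics into the five importance types.

    splits: iterable of (feature, gain, cover) tuples, one per split node
    across all trees.
    """
    count = defaultdict(int)
    total_gain = defaultdict(float)
    total_cover = defaultdict(float)
    for feature, gain, cover in splits:
        count[feature] += 1
        total_gain[feature] += gain
        total_cover[feature] += cover
    return {
        "weight": dict(count),
        "total_gain": dict(total_gain),
        "total_cover": dict(total_cover),
        "gain": {f: total_gain[f] / count[f] for f in count},
        "cover": {f: total_cover[f] / count[f] for f in count},
    }


# Hypothetical split records: (feature name, gain, cover).
splits = [("f10", 4.0, 100.0), ("f10", 2.0, 50.0), ("f200", 9.0, 30.0)]
measures = importance_measures(splits)
print(measures["weight"]["f10"], measures["gain"]["f10"])
```

In this toy example, feature `f10` is used more often (higher 'weight'), whereas `f200` has the higher average 'gain', which illustrates why the choice of measure changes the ranking of features.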

CNNs often consist of multiple different layers, each with different filters, channels, neurons, and many trainable parameters, resulting in a highly complex network. Because of this complexity and the variety of CNN implementations, it is difficult for the user to understand the mechanisms and workings of a CNN in their entirety, which is why CNNs are often regarded as black boxes. Due to the need to understand the CNN decision-making process, different methods for analyzing CNNs have been developed. The authors of iNNvestigate (19) have created an open-source library containing a variety of methods for analyzing neural networks. The analyzers can be used to interpret activity in the neural network for individual test data. This can lead to different feature importances for different sets of test data.

The iNNvestigate analyzer returns an array containing different importance values for each input feature. In this paper, each set of test data is analyzed individually, and the importance values are then averaged for each class. For both XGB and CNN, the obtained importance values are normalized to sum up to 100%. This normalization enables a relative assessment of each feature’s importance compared to others, irrespective of the scale or units of the original values, ensuring comparability with importances obtained from other methods.

Here, deep Taylor decomposition (DTD) is used as the analyzer method. It is designed for, but not limited to, image classification [grayscale or red green blue (RGB)]. DTD recursively selects input value P and determines one root point close to it in the input layer with value 0, which is passed forward to obtain an output. The output neuron is assigned a relevance score, and with the use of backpropagation (relevance propagation), pixelwise relevance scores are estimated for the given input P; see (20). The individually obtained feature importance values can be used to point out key features for that specific set of data and may be of great use in clinical practice.
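The per-class averaging and the normalization to 100% described in this section can be sketched as follows. The relevance vectors are hypothetical, the function names are illustrative, and averaging before normalizing is an assumed order of operations.

```python
def to_percent(values):
    """Scale non-negative importance values so that they sum to 100."""
    total = sum(values)
    if total == 0:
        return [0.0 for _ in values]
    return [100.0 * v / total for v in values]


def class_average(importances, labels, target):
    """Average per-sample importance vectors over the samples of one class."""
    rows = [imp for imp, lab in zip(importances, labels) if lab == target]
    n_features = len(rows[0])
    return [sum(r[j] for r in rows) / len(rows) for j in range(n_features)]


# Hypothetical relevance vectors for three samples with four features each.
importances = [[1.0, 3.0, 0.0, 0.0],
               [3.0, 1.0, 0.0, 0.0],
               [0.0, 0.0, 2.0, 2.0]]
labels = ["otosclerosis", "otosclerosis", "normal"]

avg = class_average(importances, labels, "otosclerosis")
print(to_percent(avg))
```

After this step, importance values from XGB and from the CNN are on the same relative scale and can be compared feature by feature.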

Data scenarios

Simulated data are being used for the classifier’s training due to the rarity of pathological data. It is expected that for the classifier to achieve good results, the same key features must emerge for both the training and test data. Implausible qualitative deviations in either the training or measurement data can lead to the classifier not learning a key feature or learning implausible key features, both worsening the classifier’s performance. In the simulated and measurement data used, some implausible deviations are present that are expected to influence the classifier’s performance on the real measurement test data. Therefore, two scenarios will be examined:

- Cross-validation using the simulated data will be investigated to determine how well the classifiers perform on simulated data, which mostly show good qualitative compliance. This is being used to validate whether the expected key features (expected based on visual interpretation of training data) are being found by the classifiers.

The simulated data originate from 79 virtual subjects, where data from the same virtual subject exhibit similarities. The variations observed within data from the same virtual subject are attributed to differences in probe insertion depth, reflecting repeated measurements on the same patient. The simulated data include some virtual subjects that exhibit significant deviations or extreme values compared to most other data samples. Such extreme cases are also expected to occur in clinical practice, making it particularly relevant to understand how the classifiers respond to and classify these extreme samples. If all or most data from the same virtual subject are classified falsely, this would indicate that in clinical practice, even despite multiple measurements, some cases might be falsely classified.

Therefore, a leave-one-out cross-validation over the 79 virtual subjects is performed in a loop of 79 rounds, where in each round, a different virtual subject is selected as test data. For each round, the confusion matrix containing the results for that subject is saved, and in the end, the overall results are shown for all rounds.

- Testing the classifiers on the real measurement data, representing how the testing is to be used in real-world applications. Simulated data are only used for training (and validation).

Since some substantial overlap between data of different classes can be found, i.e., “mild otosclerosis” can lie in the range of normal ears in terms of reflectance, it is expected that neither training data nor test data can be predicted with 100% accuracy.
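The leave-one-out procedure over virtual subjects from the first scenario can be sketched as below. The classifier is a placeholder that always predicts "normal" and serves only to show the fold structure; the `fit` and `predict` callables are hypothetical stand-ins for XGB or the CNN.

```python
CLASSES = ("normal", "otosclerosis", "disarticulation")
N_SUBJECTS, N_VARIATIONS = 79, 40

# One record per simulated dataset: (subject, class index, variation).
samples = [(s, c, v)
           for s in range(N_SUBJECTS)
           for c in range(len(CLASSES))
           for v in range(N_VARIATIONS)]


def leave_one_subject_out(samples, fit, predict):
    """Accumulate a confusion matrix over one fold per virtual subject."""
    n = len(CLASSES)
    cm = [[0] * n for _ in range(n)]
    fold_sizes = []
    for held_out in range(N_SUBJECTS):
        # All variations and classes of the held-out subject form the test set.
        train = [x for x in samples if x[0] != held_out]
        test = [x for x in samples if x[0] == held_out]
        model = fit(train)
        for sample in test:
            cm[sample[1]][predict(model, sample)] += 1
        fold_sizes.append((len(train), len(test)))
    return cm, fold_sizes


# Placeholder classifier that always predicts class 0 (illustration only).
cm, fold_sizes = leave_one_subject_out(
    samples, fit=lambda train: None, predict=lambda model, sample: 0)
print(fold_sizes[0], sum(map(sum, cm)))
```

Each fold holds out the 120 datasets of one subject (40 variations in each of the 3 classes) and trains on the remaining 9,360, so that no variation of a test subject is seen during training.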

Results

Validation of simulated data

The leave-one-out validation was carried out on 79 virtual subjects and took 97 s for XGB and 97 min for the CNN. The achieved overall accuracy is 91.2% (XGB) and 94.5% (CNN) and is identical to macro-recall due to equal class-sizes (see Table 4). Macro-F1-scores are almost identical to macro-recall, 91.2% (XGB) and 94.5% (CNN) due to macro-precisions of 91.3% (XGB) and 94.5% (CNN) being almost identical to macro-recall.

Table 4

| Study design | Case | Accuracy (%) | Precision (%) | Recall/sensitivity (%) | Specificity (%) | F1-score (%) |
|---|---|---|---|---|---|---|
| XGB (validation) | All (macro average) | 91.2 | 91.3 | 91.2 | 95.6 | 91.2 |
| CNN (validation) | All (macro average) | 94.5 | 94.5 | 94.5 | 97.3 | 94.5 |
| XGB (test) | All (macro average) | 74.1 | 38.6 | 81.8 | 85.8 | 38.2 |
| CNN (test) | All (macro average) | 77.4 | 54.1 | 81.0 | 87.0 | 54.8 |
| XGB (validation) | Normal | 91.2 | 86.9 | 88.2 | 93.3 | 87.5 |
| | Otosclerosis | | 91.3 | 94.4 | 95.5 | 92.8 |
| | Disarticulation | | 95.7 | 91.0 | 98.0 | 93.3 |
| CNN (validation) | Normal | 94.5 | 92.2 | 91.3 | 96.2 | 91.8 |
| | Otosclerosis | | 97.3 | 96.5 | 98.3 | 96.9 |
| | Disarticulation | | 94.2 | 95.8 | 97.0 | 95.0 |
| XGB (test) | Normal | 74.1 | 99.4 | 73.9 | 83.3 | 84.7 |
| | Otosclerosis | | 7.0 | 71.4 | 85.2 | 12.8 |
| | Disarticulation | | 9.3 | 100 | 89.0 | 16.9 |
| CNN (test) | Normal | 77.4 | 99.4 | 77.3 | 83.3 | 87.0 |
| | Otosclerosis | | 5.8 | 85.7 | 78.2 | 10.9 |
| | Disarticulation | | 57.1 | 80.0 | 99.3 | 66.7 |
| (7): WAI-measurements CNN classification, diagnose otosclerosis (n=7) and disarticulation (n=5) with separation from normal ears (n=440) | Otosclerosis | 78.3 | 8.3 | 85.7 | 85.2 | 15.2 |
| | Disarticulation | | 13.9 | 100 | 93.1 | 24.4 |
| (7): WAI-measurements CNN classification + ASRT confirmation, diagnose otosclerosis (n=7) and disarticulation (n=5) with separation from normal ears (n=440), optimistic scenario | Otosclerosis | 73.7 | 60.0 | 85.7 | 99.1 | 70.6 |
| | Disarticulation | | 71.4 | 100 | 99.6 | 83.3 |
| (5): CNN trained with measured WBT data, separate normal ears (n=55) from otosclerosis (n=80) without data augmentation and transfer learning | Otosclerosis | 89.7 | 89.4 | 89.2 | – | 89.3 |

The performance parameters are shown as accuracy, precision, recall or sensitivity, specificity and F1-score. XGB, extreme gradient boosting; CNN, convolutional neural network; WAI, wideband acoustic immittance; ASRT, acoustic stapes reflex threshold.

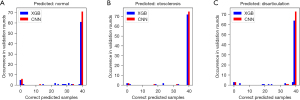

Each virtual subject is composed of 3 distinct classes (normal, otosclerosis, disarticulation), resulting in a total of 237 subject classes. Of these subject classes, each consisting of 40 datasets, 197 were predicted entirely correctly by XGB and 219 by the CNN. However, full or partial misclassification occurred in 18 normal, 7 otosclerosis, and 15 disarticulation subject classes for XGB and in 8 normal, 4 otosclerosis, and 6 disarticulation subject classes for the CNN. The histogram in Figure 2 provides insights into how often certain counts of correct predictions occur across all validation rounds.

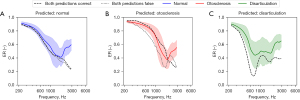

Figure 2 illustrates that in most validation rounds, the classes are predicted entirely correctly. However, when a class is wrongly predicted, the most frequent number of correctly predicted samples is 0. This observation strongly indicates that certain datasets with extreme deviations are prone to misclassification in clinical applications, which can be attributed to the significant overlaps between the datasets of different classes. These data overlaps pose a challenge in the classification process. Figure 3 visually supports this finding, as it displays all the wrongly predicted datasets during validation for each classifier and shows their mean as well as the mean and standard deviation of the class they were inaccurately predicted as.

Additionally, attempts were made to identify indicators of classifier misclassifications by investigating the probability with which the classifiers made predictions. However, it was observed that even when a class was predicted with 100% probability, it could still be incorrect, leading to the absence of any correlation between prediction probability and prediction accuracy.

Classification of measurement data

For the classification of real measurement data, the computation time was 1.3 s for XGB and 73 s for the CNN. The confusion matrices are displayed in Figure 4, showing correct predictions for the pathological cases in 5 of 7 otosclerotic and 5 of 5 disarticulated ears for XGB (Figure 4A) and in 6 of 7 otosclerotic and 4 of 5 disarticulated ears for the CNN (Figure 4B).

Out of the total of 452 sets of test data, 298 sets were correctly classified by both classifiers, while 65 sets were misclassified by both into the same class. These cases are visualized in Figure 5, where their mean values are aligned with their predicted class, along with the corresponding training data averages for reference. In the remaining 89 sets, one classifier made a false prediction, while the other classifier made the correct prediction.
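The agreement analysis between the two classifiers can be sketched as follows. The labels and predictions are hypothetical; only the reported counts of 298, 65 and 89 are taken from the text.

```python
from collections import Counter


def agreement_counts(y_true, pred_a, pred_b):
    """Count how two classifiers agree with the truth and with each other."""
    counts = Counter()
    for t, a, b in zip(y_true, pred_a, pred_b):
        if a == t and b == t:
            counts["both_correct"] += 1
        elif a != t and b != t:
            key = "both_wrong_same" if a == b else "both_wrong_different"
            counts[key] += 1
        else:
            counts["one_correct"] += 1
    return counts


# Hypothetical labels: 0 = normal, 1 = otosclerosis, 2 = disarticulation.
y_true = [0, 0, 0, 1, 2]
pred_xgb = [0, 1, 1, 1, 2]
pred_cnn = [0, 1, 0, 0, 2]
counts = agreement_counts(y_true, pred_xgb, pred_cnn)
print(dict(counts))

# The three reported groups cover all 452 test sets.
assert 298 + 65 + 89 == 452
```

Since the three reported groups already sum to 452, the counts suggest that no test set was misclassified by both classifiers into different classes.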

The achieved overall accuracies were 74.1% (XGB) and 77.4% (CNN), macro-recalls 81.8% (XGB) and 81.0% (CNN) and macro-F1-scores 38.2% (XGB) and 54.8% (CNN), see Table 4. It is noticeable that the largest differences between validation and test are in the macro-precision scores, which are 91.3% (Val-XGB) and 94.5% (Val-CNN) versus 38.6% (Test-XGB) and 54.1% (Test-CNN), whereas macro-recall scores are 91.2% (Val-XGB) and 94.5% (Val-CNN) compared to 81.8% (Test-XGB) and 81.0% (Test-CNN). XGB achieved a macro-recall of 81.8% with 71.4% for otosclerosis and 100% for disarticulation. The F1-score (macro) was 38.2%, 12.8% for otosclerosis and 16.9% for disarticulation. For the CNN, the macro-recall was 81.0%, 85.7% for otosclerosis and 80% for disarticulation and the achieved F1-score (macro) was 54.8%, with 10.9% for otosclerosis and 66.7% for disarticulation.
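The macro scores quoted above can be cross-checked against the per-class test values in Table 4, as sketched below. Because the tabulated per-class values are already rounded, the recomputed averages can differ from the reported macro scores by about 0.1 percentage points.

```python
# Per-class test values in percent, copied from Table 4
# (order: normal, otosclerosis, disarticulation).
table_4 = {
    "XGB": {"precision": (99.4, 7.0, 9.3),
            "recall": (73.9, 71.4, 100.0),
            "f1": (84.7, 12.8, 16.9)},
    "CNN": {"precision": (99.4, 5.8, 57.1),
            "recall": (77.3, 85.7, 80.0),
            "f1": (87.0, 10.9, 66.7)},
}


def macro(values):
    """Unweighted mean over the three classes."""
    return sum(values) / len(values)


for name, metrics in table_4.items():
    summary = {k: round(macro(v), 1) for k, v in metrics.items()}
    print(name, summary)

# Recall of the otosclerosis class follows from the counts in Figure 4:
# 5 of 7 ears for XGB and 6 of 7 ears for the CNN.
print(round(100 * 5 / 7, 1), round(100 * 6 / 7, 1))
```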

For comparison, Table 4 also shows the results from (7), whose CNN performed slightly worse than that of (5). In (7), the authors trained their CNN using the same simulated WAI data and achieved a recall of 85.7% for otosclerosis and 100% for disarticulation and an F1-score of 15.2% for otosclerosis and 24.4% for disarticulation. Nie et al. (5) used real measurement WBT data for training their CNN and classified between normal and otosclerotic ears. Without transfer learning and data augmentation, (5) predicted 80 otosclerotic ears with a recall of 89.2% and an F1-score of 89.3%, whereas an F1-score of 94% was achieved with transfer learning and data augmentation. In a later paper, the same authors achieved an F1-score of nearly 96% (6) on the same dataset, improving the classification performance of their lightweight CNN using Gaussian processes-guided domain adaptation.

Classification key features

It is expected that XGB and the CNN classify based on key features that are visually apparent in the simulated and real measurement data. These key features should be consistent with clinical and literature findings. As the derived feature importance values for XGB are based solely on the training data and do not change during testing, they are the same for all test data. Therefore, only one set of importance values exists for XGB, which is shown in Figure 6. It shows large importance peaks for ER (Figure 6A), especially between 600 and 1,000 Hz, as well as minor peaks at 300 Hz and between 2 and 3 kHz. Striking peaks for the ZEC phase (Figure 6D) were found between 600 and 1,000 Hz. For the R phase (Figure 6C) and ZEC amplitude (Figure 6B), the importance values are mostly 0 or very small. This correlates well with the differences between the median curves of the different classes, as seen in Figure 6: large importance values are found where the median curves differ most.
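
To make the reading of such an importance vector concrete, the following sketch sums a flat importance vector per measurand and locates its largest peak. It assumes the input layout of four measurands with 96 frequency points each (384 values in total); the order of the measurands in the vector and all names are assumptions for illustration, and the importance values are made up.

```python
MEASURANDS = ["ER", "ZEC amplitude", "R phase", "ZEC phase"]  # assumed order
POINTS_PER_MEASURAND = 96

def importance_per_measurand(importances):
    """Sum a flat importance vector over each block of 96 frequency points."""
    expected = len(MEASURANDS) * POINTS_PER_MEASURAND
    if len(importances) != expected:
        raise ValueError(f"expected {expected} values, got {len(importances)}")
    totals = {}
    for i, name in enumerate(MEASURANDS):
        start = i * POINTS_PER_MEASURAND
        totals[name] = sum(importances[start:start + POINTS_PER_MEASURAND])
    return totals

def peak_index(importances):
    """Return (measurand, frequency index) of the largest importance value."""
    flat = max(range(len(importances)), key=importances.__getitem__)
    return MEASURANDS[flat // POINTS_PER_MEASURAND], flat % POINTS_PER_MEASURAND

# Made-up importance vector: one peak in ER and a smaller one in the ZEC phase.
toy = [0.0] * 384
toy[40] = 0.6            # ER block, frequency index 40
toy[3 * 96 + 42] = 0.4   # ZEC phase block, frequency index 42
totals = importance_per_measurand(toy)
peak = peak_index(toy)
```

Mapping a frequency index back to a frequency in Hz requires the frequency grid of the measurement, which is not reproduced here.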

Recall that the importance for XGB can only be evaluated on training data, whereas for the CNN, the importance values can be analyzed for individual datasets, allowing for evaluation on both training and test data. Due to the diverse characteristics of individual curves, the importance values obtained by DTD for each dataset can vary significantly. Therefore, the CNN datasets are individually analyzed, and their importance values are then averaged based on their actual class. The feature importance values were analyzed on training data (Figure 7) and test data (Figure 8).
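
The class-wise averaging described above can be sketched in a few lines. This is an illustrative sketch only: it assumes that each data set already has a per-feature importance vector (for example, from DTD) and a known actual class; the function name and the toy values are hypothetical.

```python
from collections import defaultdict

def mean_importance_by_class(importance_maps, labels):
    """Average per-data-set importance vectors element-wise within each actual class."""
    sums = {}
    counts = defaultdict(int)
    for importance, label in zip(importance_maps, labels):
        if label not in sums:
            sums[label] = [0.0] * len(importance)
        sums[label] = [s + v for s, v in zip(sums[label], importance)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}

# Toy example with two features per data set (real vectors would be much longer).
importance_maps = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0]]
labels = ["normal", "normal", "otosclerosis"]
averaged = mean_importance_by_class(importance_maps, labels)
```

Averaging by actual rather than predicted class means that misclassified data sets also contribute to the mean of their true class.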

For the averaged feature importances (Figure 7), large values were found for ER (Figure 7A), especially between 300 and 1,500 Hz as well as between 2 and 3 kHz, with the curves being less peaky and more evenly distributed than for XGB. This provides only a rough correlation with the dissimilarities between the median curves of the various classes. For ER, the importance values up to 1,500 Hz do not precisely align with the visual representation of the mean curves in the training data; for disarticulation, the importance drops at 800 Hz, which is the point where the mean differences are most evident. Above 2 kHz, the importance values appear reasonable. Smaller importance values were found for the R phase (Figure 7C) near 3 kHz and the ZEC phase (Figure 7D) between 5 and 6 kHz, which seem implausible compared to the training data. For the ZEC phase, larger importance values between 600 and 1,000 Hz were expected but not found. Attempts were made to make the CNN more sensitive to the ZEC phase as a key feature by pretraining a CNN with only the ZEC phase as a measurand and then using the resulting weights as initial weights for the final CNN. However, this led to the pretrained weights prevailing in the final CNN and the CNN not finding other key features.

Figure 8 presents the CNN importance values obtained from analyzing the test data, which are similar to those in Figure 7. However, there is a slight variation in the importance values for ER (Figure 8A) between 400 and 1,500 Hz. This could indicate that the feature importances are heavily influenced or even largely determined by the training process, making the interpretation of the test data more dependent on the training data than initially expected.

Discussion

CNN configuration

As good results were already achieved in (7), it was intended to implement a similar CNN in Python and add the analysis of key features by using the iNNvestigate library. However, using the same model in Python, we could not obtain the same results and simultaneously achieve plausible values for feature importance. Therefore, some modifications were made, and the data format was changed from (1 channel, height 4, width 96) to (1 channel, height 384, length 1). Using a 1D format was not possible because the iNNvestigate analyzer requires data in the format (samples, channels, height, length). Additionally, the scalar quantities (age-related ear-canal area and first ear-canal antiresonance frequency) were removed because they were not important or displayed high interindividual variability, limiting their discriminating power (5).

The training data were split randomly into training and validation data, as splitting the data based on the virtual subject from which they originate led to difficulties in the convergence of validation loss and accuracy.
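
The difference between the two splitting strategies can be illustrated with a short sketch. It is not the authors' implementation: the data structure, the function names, the numbers of subjects and variants, and the validation fraction are all assumptions chosen for the example.

```python
import random

def random_split(samples, val_fraction, seed=0):
    """Shuffle all data sets and cut off a validation part, ignoring subjects."""
    rng = random.Random(seed)
    shuffled = samples[:]
    rng.shuffle(shuffled)
    n_val = int(round(len(shuffled) * val_fraction))
    return shuffled[n_val:], shuffled[:n_val]

def subject_split(samples, val_fraction, seed=0):
    """Hold out whole subjects so that no subject appears in both parts."""
    rng = random.Random(seed)
    subjects = sorted({s["subject"] for s in samples})
    rng.shuffle(subjects)
    n_val = int(round(len(subjects) * val_fraction))
    val_subjects = set(subjects[:n_val])
    train = [s for s in samples if s["subject"] not in val_subjects]
    val = [s for s in samples if s["subject"] in val_subjects]
    return train, val

# Hypothetical data: 10 virtual subjects with 5 simulated data sets each.
samples = [{"subject": subj, "variant": k} for subj in range(10) for k in range(5)]
train_r, val_r = random_split(samples, 0.2)
train_s, val_s = subject_split(samples, 0.2)
shared_after_subject_split = {s["subject"] for s in train_s} & {s["subject"] for s in val_s}
```

With a random split, data sets from the same virtual subject will usually end up in both parts, which may make validation scores more optimistic than a subject-wise split would.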

It should be noted that the CNN achieved good results using entirely different parameters for kernel size, channels, padding, stride, and data format. Some parameter settings led to good results but implausible key importance values and vice versa. It remains unclear whether there is a single best CNN structure and set of parameters or if multiple plausible structures can yield good results for performance and key features.

Common to all different architectures was that randomness seemed to produce noticeable deviations in the results, whereas the results of XGB were very robust. We hypothesize that these variations originate from the initial weights that are given to neurons at the beginning of training. For the final model, deterministic behavior was enforced, and the model was saved.

Training data volume and classifier speed

XGB showed an enormous advantage in terms of computing speed. To put this into numbers, the training duration for classification was 1.3 s for XGB and 73 s for the CNN. The duration for validation was 97 s for XGB and 97 min for the CNN. This presents an advantage for XGB, as CNNs demand substantial amounts of training data, which directly translates into long training durations. Even in screening applications, where the prevalence of pathological cases is evident, considerable amounts of training data are needed for the pathological classes. Attempts to address this by reducing the training data to reflect prevalence and compensating with higher weights for the less represented pathological classes resulted in a significant decline in performance. This underscores the significance of artificial data and computational efficiency in the classification process.
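
How such compensating weights might be derived is sketched below. The source does not state which weighting scheme was used; the sketch assumes the common inverse-frequency ("balanced") heuristic, and the class counts simply mirror the prevalence of the test data for illustration.

```python
def balanced_class_weights(class_counts):
    """Inverse-frequency weights: total / (number of classes * class count)."""
    total = sum(class_counts.values())
    n_classes = len(class_counts)
    return {name: total / (n_classes * count) for name, count in class_counts.items()}

# Illustrative class sizes reflecting prevalence (assumed, not the training set).
class_counts = {"normal": 440, "otosclerosis": 7, "disarticulation": 5}
weights = balanced_class_weights(class_counts)
```

With these counts, each pathological ear is weighted roughly 60 to 90 times more strongly than a normal ear, so a handful of data sets dominates the loss for the pathological classes. This may be one reason why weighting cannot replace a larger number of pathological training data sets.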

Validation performance

Both classifiers achieved validation scores of over 90% for macro-recall and macro-F1-score, with the CNN performing slightly better than XGB. The validation results showed that in most cases, a set of data is predicted either entirely correctly or entirely incorrectly. This is plausible, as datasets that originate from the same virtual subject are very similar and are therefore expected to be assigned to the same class. When looking at the averaged simulation data, significant differences between classes can be observed (Figure 1). On the other hand, when looking at the curve distributions, high variability and overlap between classes can be seen; e.g., ER below the median of otosclerosis and above the median of normal seem largely congruent up to 1,500 Hz (Figure 7A). Taking the considerable overlaps into account, correctly classifying all datasets based solely on WAI data seems nearly impossible, and it is impressive that the classifiers achieve such high results on the simulated WAI data. This observation is supported by Figure 3, which clearly illustrates that data misclassified by both classifiers strongly resemble the mean data of the class they have been predicted as.

Classification performance

The results of this paper show that XGB and CNNs trained with artificial WAI data can help in the differential diagnosis of normal, otosclerotic and disarticulated ears. The classifiers performed classification on real measurement data and were able to achieve sensitivities (recall) for otosclerosis of 71.4% (XGB) and 85.7% (CNN) and for disarticulation of 100% (XGB) and 80% (CNN). The achieved macro-F1-scores were comparatively low at 38.2% and 54.8%, which is solely the result of the prevalence in the test data since precision is calculated by

$$\text{Precision} = \frac{TP}{TP + FP},$$

where TP is the number of true positives and FP the number of false positives for the respective class.

Since 440 out of 452 test datasets are normal ears, it is highly likely that some normal ears are predicted as either disarticulation or otosclerosis. In combination with the low number of pathological ears, this results in low precision and consequently leads to low F1-scores for the pathological classes.
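
The effect of prevalence on precision can be illustrated with a small worked example. The confusion matrix below is hypothetical: only the class sizes (440 normal, 7 otosclerotic, 5 disarticulated ears) follow the test data, whereas the individual counts are invented for illustration and are not taken from Figure 4. Precision is computed as TP / (TP + FP), recall as TP / (TP + FN), and the F1-score as their harmonic mean.

```python
def per_class_metrics(confusion, class_names):
    """confusion[i][j] = number of ears with true class i predicted as class j."""
    metrics = {}
    n = len(class_names)
    for k, name in enumerate(class_names):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                        # missed ears of class k
        fp = sum(confusion[i][k] for i in range(n)) - tp   # other ears predicted as k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        metrics[name] = {"precision": precision, "recall": recall, "f1": f1}
    return metrics

classes = ["normal", "otosclerosis", "disarticulation"]
# Hypothetical counts: 90% of normal ears correct, 5% sent to each pathology.
confusion = [
    [396, 22, 22],
    [1, 6, 0],
    [1, 0, 4],
]
metrics = per_class_metrics(confusion, classes)
```

In this invented example, otosclerosis reaches a recall of 6/7 (about 86%), yet its precision is only 6/28 (about 21%) and its F1-score 12/35 (about 34%), because the 22 misclassified normal ears outnumber the 6 correctly detected otosclerotic ears.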

The overall results are comparable to those of (7) without using the acoustic stapes reflex threshold (ASRT) as a second diagnostic criterion. With ASRT added, their precision and F1-scores improve because fewer normal ears are falsely classified as pathological. In comparison, Nie et al. (5,6) used five-fold cross-validation for binary classification of 55 normal and 80 otosclerotic ears. They achieved significantly higher F1-scores, namely 94% (5) and up to 97% (6). It must be considered, though, that our study performed classification on three classes, which is more difficult than binary classification. Also, the test data of Nie et al. (5,6) contained more pathological ears than normal ears, whereas our test datasets contain only a few pathological cases, according to prevalence. As mentioned, the low number of pathological ears easily results in low precision and F1-scores when only a few normal ears are misclassified.

We expect better performance from further investigation of the inclusion criteria for normal ears, from using WBT data, and from adding a second diagnostic criterion such as the stapes reflex. When capturing normal ears, certain individuals, such as older adults, may fall within the normal range but exhibit characteristics that are closer to those associated with otosclerosis than those of the normal ear of a younger person. Consequently, as evident in Figure 5, when both classifiers mispredict, the data tend to resemble the mean of the training data for their predicted class. This observation underscores the significance of well-defined inclusion criteria for normal ears to ensure accurate classification and differentiation between classes. Large improvements are expected from using WBT data, as they provide more data to be analyzed and hence have the potential to be more distinguishable. Additionally, adding a second diagnostic criterion such as ASRT can help reduce the number of normal ears falsely classified as pathological, thereby improving classifier precision.

Key features

Both classifiers underwent feature importance analysis to identify key features used in their classification process. As concluded in (1), impedance and therefore ER are observed to change for conductive disorders and can help in differentiating conductive pathologies. ER is more suitable for differentiation because it is independent of measurement location, and relative changes in ER are more distinguishable. This is visually apparent in the simulated and measured data and detected by both XGB and CNN as key features.

XGB demonstrated that the most crucial key feature for classification is ER between 600 and 1,000 Hz, followed by the impedance phase between 600 and 1,000 Hz as the second key feature.

ER between 600 and 1,000 Hz in particular is consistent with indicators reported in the literature, e.g., (21), who reported an increase in ER at low-to-mid frequencies for otosclerosis as well as a decrease in ER for disarticulation between 500 and 800 Hz. These results align well with the data utilized in this study, providing additional support for the classifiers’ decision-making process.

The CNN feature importance analysis revealed mixed results. While some correlations with the mean curves of the training data were observed for ER up to 1,500 Hz, which is also consistent with values reported in literature (21), and between 2 and 3 kHz, the identified feature importances did not precisely align. Moreover, a certain smaller and implausible importance was found for the R phase at 3 kHz and the ZEC phase between 5 and 6 kHz, while the expected feature importance for the ZEC phase between 600 and 1,000 Hz was not detected. Further investigation is required to fully understand the value of analyzing CNNs’ key features. Future research may explore the potential improvement in feature importance detection when analyzing 3D-WBT data and employing different CNN structures.

In summary, XGB shows strengths in robustness and computation speed, being approximately 60 times faster than the implemented CNN. It achieved good results, although further investigation is needed to clarify whether performance could be further improved using different XGB parameters. XGB efficiently picks up on the differences between the training data and determines key features with distinctive peaks for classification that remain constant throughout evaluation on test data; thus, the criteria by which data will be predicted are clear. The CNN shows strengths in classification performance and the possibility of individual interpretability. As it is possible to show which features were involved in individual classification results, this could be a great aid in diagnosis, as it can indicate to the doctor or hearing professional which areas of the measurement data are worth looking at more closely. To obtain sufficient training data for the CNN, artificial data can be used.

Conclusions

This study successfully demonstrates the classification of normal, otosclerotic, and disarticulated ears using a CNN and XGB to classify real measurement WAI data.

This demonstrates the potential success of using simulation-based WAI data for classifier training to classify normal, otosclerotic, and disarticulated ears and that XGB is applicable to WAI data. Furthermore, ML interpretability algorithms are useful to identify relevant key features for differential diagnosis and to increase confidence in classifier decisions.

To fully evaluate the clinical applicability of both classifiers used in this preliminary study, further evaluation with more real measurement data, especially for the pathological cases, is essential. In addition, the inclusion of more pathologies in the analysis of the key features used by the classifiers is recommended.

Acknowledgments

Funding: This work was supported by

Footnote

Reporting Checklist: The authors have completed the STARD reporting checklist. Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-10/rc

Data Sharing Statement: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-10/dss

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-10/prf

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-10/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Ethics approval was not applicable for this study, as the authors did not perform any measurements.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Merchant GR, Merchant SN, Rosowski JJ, et al. Controlled exploration of the effects of conductive hearing loss on wideband acoustic immittance in human cadaveric preparations. Hear Res 2016;341:19-30.

- Crowson MG, Bates DW, Suresh K, et al. “Human vs Machine” Validation of a Deep Learning Algorithm for Pediatric Middle Ear Infection Diagnosis. Otolaryngol Head Neck Surg 2023;169:41-6.

- Grais EM, Nie L, Zou B, et al. An advanced machine learning approach for high accuracy automated diagnosis of otitis media with effusion in different age groups using 3D wideband acoustic immittance. Biomedical Signal Processing and Control 2023;87:105525.

- Sundgaard JV, Bray P, Laugesen S, et al. A Deep Learning Approach for Detecting Otitis Media From Wideband Tympanometry Measurements. IEEE J Biomed Health Inform 2022;26:2974-82.

- Nie L, Li C, Marzani F, et al. Classification of Wideband Tympanometry by Deep Transfer Learning With Data Augmentation for Automatic Diagnosis of Otosclerosis. IEEE J Biomed Health Inform 2022;26:888-97.

- Nie L, Li C, Bozorg Grayeli A, et al. Few-Shot Wideband Tympanometry Classification in Otosclerosis via Domain Adaptation with Gaussian Processes. Applied Sciences 2021;11:11839.

- Lauxmann M, Viehl F, Priwitzer B, et al. Classifying Otosclerosis and Disarticulation Using a Convolutional Neural Network Trained With Simulated Wideband Acoustic Immittance Data. Heliyon 2024;

- Gan RZ, Sun Q, Dyer RK Jr, et al. Three-dimensional modeling of middle ear biomechanics and its applications. Otol Neurotol 2002;23:271-80.

- Gan RZ, Reeves BP, Wang X. Modeling of sound transmission from ear canal to cochlea. Ann Biomed Eng 2007;35:2180-95.

- Kwacz M, Marek P, Borkowski P, et al. A three-dimensional finite element model of round window membrane vibration before and after stapedotomy surgery. Biomech Model Mechanobiol 2013;12:1243-61.

- Kwacz M, Marek P, Borkowski P, et al. Effect of different stapes prostheses on the passive vibration of the basilar membrane. Hear Res 2014;310:13-26.

- Hirabayashi M, Kurihara S, Ito R, et al. Combined analysis of finite element model and audiometry provides insights into the pathogenesis of conductive hearing loss. Front Bioeng Biotechnol 2022;10:967475.

- Voss SE. An online wideband acoustic immittance (WAI) database and corresponding website. Ear Hear 2019;40:1481.

- Sackmann B, Eberhard P, Lauxmann M. Parameter Identification From Normal and Pathological Middle Ears Using a Tailored Parameter Identification Algorithm. J Biomech Eng 2022;144:031006.

- Rosowski JJ, Chien W, Ravicz ME, et al. Testing a method for quantifying the output of implantable middle ear hearing devices. Audiol Neurootol 2007;12:265-76.

- Rosowski JJ, Nakajima HH, Hamade MA, et al. Ear-canal reflectance, umbo velocity, and tympanometry in normal-hearing adults. Ear Hear 2012;33:19-34.

- Friedman JH. Greedy function approximation: A gradient boosting machine. Ann Statist 2001;29:1189-232.

- Chen T, Guestrin C. XGBoost: A Scalable Tree Boosting System. 2016. doi: 10.1145/2939672.2939785.

- Alber M, Lapuschkin S, Seegerer P, et al. iNNvestigate Neural Networks! Journal of Machine Learning Research 2019;20:1-8.

- Montavon G, Bach S, Binder A, et al. Explaining nonlinear classification decisions with deep Taylor decomposition. Pattern Recognition 2017;65:211-22.

- Nakajima HH, Pisano DV, Roosli C, et al. Comparison of ear-canal reflectance and umbo velocity in patients with conductive hearing loss: a preliminary study. Ear Hear 2012;33:35-43.

Cite this article as: Winkler ST, Sackmann B, Priwitzer B, Lauxmann M. Exploring machine learning diagnostic decisions based on wideband immittance measurements for otosclerosis and disarticulation. J Med Artif Intell 2024;7:9.