GPT-4o vs. residency exams in ophthalmology—a performance analysis

Highlight box

Key findings

• GPT-4o achieved the highest accuracy (74.2%), outperforming GPT-4 (61.9%) and Gemini Advanced (65.8%), while approaching human residents’ performance with similar trends across different subspecialties.

• GPT-4o excelled in text-only questions, achieving 82.3% accuracy compared with GPT-4’s 73.8% and Gemini Advanced’s 72.3%, demonstrating strength in understanding and answering text-based questions.

• GPT-4o also showed superior image-processing capabilities in every image category tested, with 60.5% accuracy compared with GPT-4’s 41.8% and Gemini Advanced’s 39.3%. It excelled in fundus, pathology, and labeled images, yet still struggled with complex visuals such as drawings, graphs, and multi-image prompts.

What is known and what is new?

• General-purpose Large Language Models (LLMs) have shown notable performance in clinical decision-making. However, their efficacy in specialties like ophthalmology has been limited by challenges in visual interpretation and scarce subspecialty-specific training data.

• Our findings demonstrate significant advancements in both text and image processing. GPT-4o’s improved performance marks a meaningful leap in artificial intelligence (AI) capabilities within the subspecialty of ophthalmology, suggesting increased potential for integration in clinical settings.

What is the implication, and what should change now?

• GPT-4o’s progress in text and image analysis shows promise for ophthalmology training and diagnostics. To assess LLMs’ performance for clinical implementation, further studies should focus on accuracy and visual data interpretation while ensuring bias-free implementation.

• Ongoing collaboration between AI developers and medical professionals is vital for effective clinical AI integration.

Introduction

Since its beginnings in the mid-20th century, artificial intelligence (AI) has advanced dramatically, and in recent years the landscape has evolved rapidly to include neural network-driven chatbots that automate complex tasks, understand natural language, and produce human-like responses, showcasing remarkable progress in AI development. Prominent examples are Large Language Models (LLMs) such as OpenAI’s Generative Pre-trained Transformer (GPT) and Google’s AI, now branded as Gemini (1,2).

Trained on vast amounts of text and code, these models efficiently learn statistical dependencies between words and code, enabling task-oriented text generation, language translation, and informative answers (3,4).

OpenAI’s new model, GPT-4o, released in mid-May 2024 (4), promises a deeper understanding of concepts, more up-to-date knowledge, more efficient server-side resource management, and significantly faster response times (5).

Comparing LLMs is difficult because they are designed and trained differently and differ in how they use information and handle input types. Their varied architectures and access to different information sources affect the quality of their responses (6).

Since their rise in popularity, LLMs have been studied across many areas of medicine, including extensive testing of possible AI uses in ophthalmology (7-14). Unlike major fields of medicine (15), ophthalmology is a specialized area with scarce resources and less data available for training, which makes it more challenging for chatbots. Nevertheless, we expect clinical reasoning to improve in newer models even with limited resources.

We aim to track the recent progress of chatbots and measure their reliability (16,17) by having them solve the same exams taken by human ophthalmology residents: the national board exams (18).

This study benchmarks three widely used AI models, OpenAI’s GPT-4o and GPT-4 and Google’s Gemini Advanced, against Israeli ophthalmology residency board certification exams, comparing them with each other and with the performance of residents. Beyond comparing competencies, the study identifies where AI models excel and where they face challenges, with an emphasis on image-processing capabilities; such knowledge is crucial for integrating AI into education and practice and could reshape how knowledge is acquired and applied.

Methods

Dataset collection and curation

The 600 questions in this study’s dataset were obtained from state certification examinations administered to Israeli ophthalmology residents (18). They span 12 ophthalmic subspecialties: 379 rely on text only, and 221 incorporate additional materials such as clinical photographs, diagnostic imaging, and anatomical diagrams, enabling a comprehensive evaluation of AI chatbots’ capabilities. The exams are based on the Basic and Clinical Science Course (BCSC) textbooks. To capture recent content, we used the 2020–2023 exam editions. Because language affects comprehension and accuracy, the questions were manually translated from Hebrew to English with attention to both linguistic and medical accuracy. We arrived at 594 questions after excluding 1 question with a video supplement, which is not a feasible input for chatbots, and 5 appealed questions that residents and the exam committee deemed unfair. Importantly, although the BCSC was used to evaluate the relevance of the questions and the accuracy of the chatbots’ answers, the only content entered into the chatbots came from the Israeli board certification exams; no BCSC content was used directly in any chatbot.

AI chatbots evaluated

We evaluated three chatbots: GPT-4o and GPT-4, developed by OpenAI, and Gemini Advanced, developed by Google DeepMind. All models were trained on vast amounts of data and exhibit proficiency in comprehending, generating, and augmenting natural-language text (19-22). All tested models were accessed through paid subscriptions and are regarded as more capable variants of their free-tier counterparts, having performed effectively in prior assessments (23). Together, these chatbots span a broad spectrum of AI capabilities, from robust text generation to sophisticated comprehension of concepts, enabling an extensive evaluation of AI’s potential in medical knowledge assessment.

Comparative analysis framework

This study also compared the chatbots with Israeli ophthalmology residents, who undergo a five-year intensive training period that includes written exams of 150 multiple-choice questions covering material presented in the American Academy of Ophthalmology (AAO) BCSC textbooks. Using the results of these written tests, we compared the performance of the chatbots with that of residents to assess how well AI replicates the knowledge and decision-making skills of human physicians. Data from past exams held by the Israeli Medical Association, such as pass rates and correct answers, were used for reference (18).

Evaluation methods and outcome measures

For each multiple-choice question, the chatbots were asked to select the most appropriate answer from the listed options. In the rare cases when a chatbot provided no answer or more than one, we prompted it again to choose a single answer, and all complied. Each response was scored as right or wrong against the answer key of the corresponding Israeli examination. The primary outcome measure was the proportion of questions each AI chatbot answered correctly. Secondary outcomes were year-to-year changes from 2020 to 2023, accuracy in each of the 12 ophthalmic subspecialties, and a comparison of accuracy between text-only and image-integrated questions. Further testing categorized questions by reliance on mathematical equations and divided image questions into sub-categories by format, namely those with labels (arrows, asterisks, etc.) and those with multiple images, and by content: faces, eye (close-ups, slit-lamp), fundoscopy, pathology/histology, ocular imaging [optical coherence tomography (OCT) and ultrasound (US)], head imaging [computed tomography (CT)/magnetic resonance imaging (MRI)], drawings (including graphs), visual field tests, mixtures of several categories, and others.
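The primary and secondary outcomes all reduce to a proportion correct per group (overall, per exam year, per subspecialty, or per question type). A minimal Python sketch of that tally follows, assuming each scored response is stored as a hypothetical `(category, given_answer, key_answer)` tuple; the record layout and the function name `accuracy_by_category` are illustrative, not the authors’ tooling.

```python
from collections import Counter


def accuracy_by_category(scored):
    """Return {category: proportion correct} for scored chatbot responses.

    `scored` is an iterable of (category, given_answer, key_answer) tuples,
    one per answered question. This record layout is a hypothetical choice
    made for illustration.
    """
    totals = Counter()
    correct = Counter()
    for category, given, key in scored:
        totals[category] += 1
        if given == key:  # right/wrong against the official answer key
            correct[category] += 1
    return {category: correct[category] / totals[category] for category in totals}


# Hypothetical toy records, not study data.
records = [
    ("Uveitis", "B", "B"),
    ("Uveitis", "C", "A"),
    ("Retina", "D", "D"),
]
print(accuracy_by_category(records))  # {'Uveitis': 0.5, 'Retina': 1.0}
```

The same function serves every breakdown; only the category field changes (exam year, subspecialty, or text-only versus image-based).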

Statistical analysis

The primary statistical method was the chi-squared test, used to assess whether observed differences in accuracy between models were statistically significant. For each comparison, a contingency table of the numbers of correct and incorrect answers was constructed for each model, with GPT-4o serving as the baseline reference; for comparisons by exam year, each model’s results on the 2020 exam served as the baseline against the subsequent exam years. Odds ratios were calculated to express the odds of each model providing a correct answer relative to GPT-4o, clarifying performance differences between models. 95% confidence intervals (95% CI) were calculated, and statistical significance was defined as P<0.05. Additionally, to evaluate performance by question type without skewing results because of blocked content, a bias-elimination approach treated all questions blocked by Gemini Advanced as unanswered by all models.
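For a single comparison, the 2x2 table, odds ratio, 95% CI, and chi-squared P value can be computed with the Python standard library alone. This is a sketch under stated assumptions: the text does not say whether a continuity correction was applied or how the CI was derived, so the code uses the uncorrected Pearson chi-squared statistic and the Woolf (log-scale) interval, and its results may differ slightly from those of the software the authors used. The function name `compare_to_baseline` and the example counts are hypothetical.

```python
import math


def compare_to_baseline(model_correct, model_total, base_correct, base_total, z=1.96):
    """Compare one model's accuracy with the baseline model (GPT-4o).

    Returns (odds_ratio, (ci_low, ci_high), chi2, p_value). All four cells of
    the 2x2 table must be nonzero. Assumptions: Pearson chi-squared without
    Yates' continuity correction and a Woolf (log-scale) 95% CI; the paper
    does not state which variants were used.
    """
    a, b = model_correct, model_total - model_correct  # model: correct, incorrect
    c, d = base_correct, base_total - base_correct     # baseline: correct, incorrect
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    log_or = math.log(odds_ratio)
    ci = (math.exp(log_or - z * se_log_or), math.exp(log_or + z * se_log_or))
    n = a + b + c + d
    # Shortcut formula for the Pearson chi-squared statistic of a 2x2 table.
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    # Upper tail of a chi-squared distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return odds_ratio, ci, chi2, p_value


# Approximate counts back-calculated from the reported 61.9% (GPT-4) and
# 74.2% (GPT-4o) of 594 questions; the paper does not list raw counts.
odds_ratio, ci, chi2, p = compare_to_baseline(368, 594, 441, 594)
print(f"OR={odds_ratio:.2f} (95% CI {ci[0]:.2f}-{ci[1]:.2f}), P={p:.2g}")
```

With these approximate counts, the sketch gives an odds ratio of about 0.56 with an interval of roughly 0.44 to 0.72, consistent with the reported 0.56 (95% CI: 0.4–0.7).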

Results

Overall performance

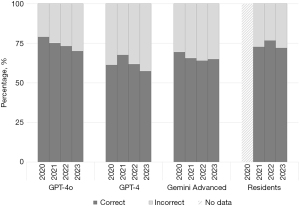

In this analysis, the accuracy of the three AI models was compared (Figure 1). The latest model, GPT-4o, processed and answered all 594 questions with an accuracy rate of 74.2% and served as the baseline for subsequent comparisons. GPT-4 also answered all 594 questions but had a lower accuracy rate of 61.9%. Its odds ratio was 0.56 (95% CI: 0.4–0.7), indicating 44% lower odds of answering correctly (P<0.001). Gemini Advanced answered 457 questions, achieving a 65.8% accuracy rate; unanswered questions were omitted from this and subsequent calculations and figures so that only its correct and incorrect answers were assessed. Its odds ratio was 0.67 (95% CI: 0.5–0.85), indicating 33% lower odds of providing a correct answer than GPT-4o (P=0.003). These results indicate that GPT-4o significantly outperforms GPT-4 and Gemini Advanced in accuracy.
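Because an odds ratio compares odds, p/(1 - p), rather than probabilities, it can be recovered from two accuracy rates alone, and it differs from the ratio of the probabilities themselves. The short Python check below, with hypothetical helper names, reproduces the reported odds ratios from the reported accuracies and shows the corresponding probability ratio.

```python
def odds(p):
    """Convert a proportion correct into the odds of a correct answer."""
    return p / (1 - p)


def odds_ratio(p_model, p_baseline):
    """Odds of a correct answer for a model relative to the baseline (GPT-4o)."""
    return odds(p_model) / odds(p_baseline)


def probability_ratio(p_model, p_baseline):
    """Ratio of the accuracy rates themselves (a relative risk)."""
    return p_model / p_baseline


# Reported accuracies: GPT-4o 74.2% (baseline), GPT-4 61.9%, Gemini Advanced 65.8%.
for name, acc in [("GPT-4", 0.619), ("Gemini Advanced", 0.658)]:
    print(f"{name}: OR={odds_ratio(acc, 0.742):.2f}, "
          f"probability ratio={probability_ratio(acc, 0.742):.2f}")
```

The odds ratios of 0.56 and 0.67 match the reported values, whereas the probability ratios (about 0.83 and 0.89) correspond to roughly 17% and 11% lower accuracy. The same conversion also reproduces the odds ratios reported for text-only and image-based questions (for example, about 0.61 for GPT-4 on text-only questions, reported as 0.6).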

Evaluation of variance by years

Chi-squared tests were used to compare performance on the 2021–2023 exams with the 2020 baseline (Figure 2), separately for each model. GPT-4o’s accuracy rates on the 2020, 2021, 2022, and 2023 exams were 78.9%, 75.0%, 73.1%, and 70.0%, respectively; GPT-4’s were 61%, 67%, 62%, and 57%; and Gemini Advanced’s were 69%, 65%, 64%, and 65%. Despite the downward trend in GPT-4o’s accuracy, there was no statistically significant difference between the 2020 exam and the other exams for any model (P values ranging 0.08–0.92). For comparison, residents’ average grades in 2021, 2022, and 2023 were 72.7%, 74.3%, and 71.8%, respectively; results for the 2020 exam were not publicly released.

Evaluation by individual topics

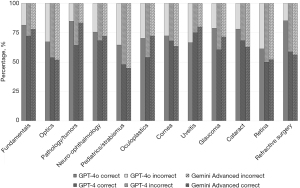

Comparing the models by subspecialty (Figure 3), with GPT-4o as the baseline, GPT-4o outperformed the other models in 10 of 12 topics. The most notable was “Refractive Surgery”, where GPT-4o achieved an accuracy rate of 85.2%, significantly outperforming GPT-4 at 58.8% (95% CI: 0.1–0.8, P=0.01) and Gemini Advanced at 56.2% (95% CI: 0.1–0.7, P=0.009). Another significant result was GPT-4o’s accuracy of 84.7% in “Pathology/Tumors” against GPT-4’s 64.4% (95% CI: 0.1–0.8, P=0.01); Gemini Advanced achieved 83.3% in this topic, which did not differ significantly from the baseline (95% CI: 0.3–2.8, P=0.85). GPT-4 achieved a higher accuracy rate than the baseline in “Uveitis” (75% vs. GPT-4o’s 66.6%), but the difference was not significant (95% CI: 0.4–5.2, P=0.52). Gemini Advanced scored above the baseline in “Uveitis” and “Oculoplastics”, with accuracy rates of 80% (vs. 66.6%; 95% CI: 0.5–8.0, P=0.32) and 72.2% (vs. 70.2%; 95% CI: 0.3–3.8, P=0.88), respectively, but neither difference was statistically significant.

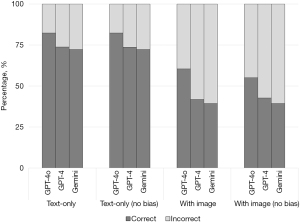

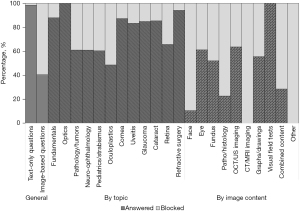

Evaluating performance by question type

Comparing each model with itself across question types showed significantly better performance on text-only than on image-based questions. When the models were compared with each other (Figure 4), with GPT-4o as the baseline (accuracy 82.3%), GPT-4o performed significantly better than the other models on text-only questions: GPT-4 had a 73.8% accuracy rate and an odds ratio of 0.6 (95% CI: 0.4–0.8, P=0.004), indicating 40% lower odds of giving a correct answer, and Gemini Advanced had a 72.3% accuracy rate and an odds ratio of 0.56 (95% CI: 0.4–0.8, P=0.001), indicating 44% lower odds. Likewise, GPT-4o’s 60.5% accuracy on image-based questions was significantly better than that of the others: GPT-4 had a 41.8% accuracy rate and an odds ratio of 0.47 (95% CI: 0.3–0.7, P<0.001), indicating 53% lower odds of giving a correct answer, and Gemini Advanced had a 39.3% accuracy rate and an odds ratio of 0.42 (95% CI: 0.2–0.7, P<0.001), indicating 58% lower odds.

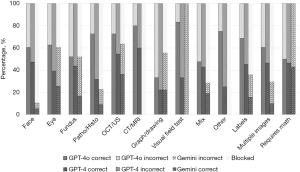

Further comparisons by different categories

Comparing GPT-4o with the other models across image sub-categories, we found superior performance for every type of image tested. Using chi-squared tests, GPT-4o was significantly superior to GPT-4 for images with labels, questions with multiple images, and the “Eye” and “Pathology/Histology” types. In this analysis only, Gemini Advanced’s blocked questions were treated as incorrect, and GPT-4o was significantly better than Gemini Advanced in all sub-categories. The only sub-category not involving images was questions requiring mathematical equations, in which all models performed similarly.

Discussion

We examined OpenAI’s newest AI model and compared it with its predecessor and with a direct competitor from Google by testing their ability to answer the multiple-choice questions of the Israeli ophthalmology residency exams, then analyzed the differences in performance, as in studies of older GPT variants, to gauge the leap between models (7,8,15). All models achieved impressive results. As expected, the newest model outperformed the older ones; notably, the older models were themselves released only recently at the time of writing, which demonstrates how rapidly this technology is improving. Israeli ophthalmology residents, who dedicate substantial time to studying for these exams, achieved an average of 73% correct answers across the years we examined (18).

Another positive finding was the absence of a significant yearly trend in the chatbots’ performance. This suggests that the models’ knowledge base is resilient and adapts to year-to-year changes in exam content.

Comparing GPT-4o’s performance with that of the residents suggests major progress within only a few months: GPT-4o outperformed the residents on the 2021 exam and scored very close to them in 2022 and 2023, with no significant difference between its results and the residents’ in any year (P values ranging 0.16–0.64). Neither of the other models scored as well as the residents in 2021–2023, although on the 2021 exam the difference was not significant for either GPT-4 or Gemini Advanced (P values ranging 0.21–0.33), possibly because of the small sample size of individual years.

These results highlight the leap in performance of general-purpose LLMs that were not specifically trained for medical expertise, which at times outperform humans on highly specific, clinically oriented exams, both in general medicine (15) and in specialized areas such as ophthalmology (8).

The findings of this study add to a growing body of evidence on the usability of AI chatbots in clinical settings. Recent studies have exploited the multimodal nature of these models, using them directly as tools for clinical analysis and diagnostics, including multimodal cases that require image processing (14,24). Other studies were designed similarly to ours. Antaki et al. compared GPT-4 with the older GPT-3.5 on BCSC-based questions and found the newer model superior, outperforming their human group, albeit not significantly, in line with our findings (7). Mihalache et al. gave GPT-4 ophthalmology-oriented multiple-choice questions and observed trends similar to ours for text-only and image-based questions (25). In another study, Mihalache et al. evaluated the earlier versions of Gemini Advanced (Gemini and Bard) on board certification examinations, although their evaluation methods and conditions differed and focused on geographical location, response length, response time, and quality of explanations (12).

Panthier & Gatinel challenged GPT-4 to solve the French-language version of the European Board of Ophthalmology examination, in which it performed well; unlike in our experience, however, the model struggled significantly with image-based questions, which were excluded from the final calculations. These differences may be attributable to the different language used, which can affect the models’ understanding (11). Other studies have assessed performance similarly (8-10), while still others have focused on producing accessible novel tools for clinical settings by automating tasks that would normally pose challenging technical obstacles. For example, Choi & Yoo (13) developed a predictive score-based risk calculator for glaucoma using a novel “no code” approach within GPT-4. This approach, now enabled by AI chatbots, works without relying on dedicated deep learning algorithms (26-29), which are more expensive to develop and operate, and it demonstrates the broad potential applications of chatbots in the hands of dedicated professionals.

Across specific topics and categories, GPT-4o tended to perform better, though not always significantly, as in the per-subspecialty comparison (Figure 3). Apart from two topics in which GPT-4o performed significantly better than GPT-4 and one in which it performed significantly better than Gemini Advanced, most differences in individual topics were not significant. These include one topic in which GPT-4o was outperformed by GPT-4 and two in which Gemini Advanced outperformed it, none of them statistically significant. This is presumably a common drawback of statistical tests on small samples: statistical power decreases with fewer data points, making it harder to distinguish genuine differences from random variation. The number of questions per topic ranges from 108 in the largest (“fundamentals”) to just 24 in the smallest (“uveitis”), which is also the topic in which GPT-4o was surpassed by both other models. Even so, in refractive surgery, with only 34 questions, GPT-4o significantly outperformed its competitors. Differences observed in smaller subsets of questions therefore carry less weight.
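The role of sample size can be made concrete with an approximate power calculation for comparing two accuracy rates. The Python sketch below uses the standard normal approximation for a two-sided, two-proportion test (equivalent to an uncorrected 2x2 chi-squared test) and, like the chi-squared comparisons in this study, treats the two models’ answers as independent samples. The function name `approx_power` and the accuracy values in the example are hypothetical illustrations, not study results.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(p1, p2, n, alpha=0.05):
    """Approximate power to detect accuracy p1 vs. p2 with n questions per model.

    Normal approximation to a two-sided two-proportion test; the
    negligible opposite tail is ignored.
    """
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    p_bar = (p1 + p2) / 2
    se_null = sqrt(2 * p_bar * (1 - p_bar) / n)         # SE if accuracies were equal
    se_alt = sqrt((p1 * (1 - p1) + p2 * (1 - p2)) / n)  # SE under the true difference
    return NormalDist().cdf((abs(p1 - p2) - z_crit * se_null) / se_alt)


# Same hypothetical 10-point gap at the smallest (24) and largest (108) topic sizes.
for n in (24, 108):
    print(n, round(approx_power(0.75, 0.65, n), 2))
```

Under these assumptions, a 10-point gap would be detected only about 11% of the time with 24 questions and about 36% of the time with 108, which is why non-significant reversals in small topics such as uveitis carry little weight. A larger gap, such as the one observed in refractive surgery, raises power even with few questions.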

Further categorization allowed for informative comparisons. For text-only versus image-based questions, each model performed significantly better on text than on images, and when the models were compared with each other (Figure 4), GPT-4o performed significantly better than the other two on both text-only and image-based questions. These results also highlight one of the greater challenges of AI: image processing. Figure 5 reveals the leap in image-processing capabilities with GPT-4o, which handled every type of image better than the other models, particularly images showing pathology and visual field tests, which the other models handled very poorly in comparison. For drawings and graphs, GPT-4o performed better than the other models but still answered fewer than 40% correctly; we presume this reflects the abstract nature of such content, which consists of colorless lines and is difficult to train for because the same data can be represented in vastly different ways. The “other” category contains very few photographs, mostly of medically related objects, and the data are too sparse to discuss. Questions involving mathematical equations (Figure 5) are text-only and number 28 in total; the models performed similarly, with no statistically significant differences between them (P values ranging 0.59–0.78), suggesting that all three understand the text similarly and know which equations to apply to reach an answer.

Limitations in image processing range from the model’s practical ability to interpret data freely to ethical and safety constraints set by the developers, which remain a subject of discussion in the AI and medical communities. The safety measures in Gemini Advanced prevent it from analyzing many medical images: it was ultimately unable to process 137 of the 594 questions because of technical, ethical, or security considerations, 131 of which included images (about 60% of all image-based questions). Analysis of the questions blocked by Gemini Advanced (Figure 6) shows that only 1.6% of text-only questions were blocked, compared with 59.5% of image-based ones, indicating that asking the chatbot to analyze an image is much more likely to trigger what appear to be exception rules set by the developers. The chatbot does not state the reason for blocking, and the conditions that cause it are unknown. By question type, 89.4% of questions with images of faces were blocked, possibly hinting that individual privacy is a major reason. Questions with several images were blocked 71% of the time, possibly because most of them contain at least one image of a face. However, blocking rates were also high for questions on pathology, neuro-ophthalmology, pediatrics, oculoplastics, and retina, at 39%, 39%, 39.5%, 51.3%, and 34%, respectively. Apart from pediatrics, which contains many images of patients’ faces, the images in most of these topics are mainly histology samples, CT/MRI scans, and fundoscopy examinations, which would not risk breaching an individual’s privacy. The conditions for blocking in Gemini Advanced’s current model therefore remain unclear.

In addition, Gemini Advanced at times refused to acknowledge that an image had been uploaded with the question, at other times refused to analyze an image once it recognized it as medical, apparently for fear of giving incorrect medical advice, and sometimes blocked images for no apparent reason. This limitation is particularly significant given ophthalmology’s reliance on visual examination and imaging for diagnosis, treatment, and patient monitoring. Blocked questions were excluded from Gemini Advanced’s calculations; however, Figure 4 also includes bars for a bias-eliminated analysis, in which all questions blocked by Gemini Advanced were treated as blocked by all models. This did not change the statistical significance of the performance differences, except that the difference in image-based performance between GPT-4o and GPT-4 became insignificant. Thus, Gemini Advanced lags behind even when its many blocked questions are ignored, and even more so when they are taken into account. Figure 5, which specifically analyzes image-related content, does not exclude Gemini Advanced’s blocked questions, because when considering models for actual clinical use, the absolute number of questions answered matters most, regardless of this bias.
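The three ways of handling Gemini Advanced’s blocked questions described in this study (excluding them from Gemini Advanced’s own accuracy, masking them for every model in the bias-eliminated analysis, and counting them as incorrect as in the image sub-category comparison) differ only in which questions are kept and in the denominator. A minimal Python sketch follows, assuming hypothetical dictionaries of answers in which `None` marks a blocked question; the function name `accuracy_under_blocking` and the toy data are illustrative only.

```python
def accuracy_under_blocking(answers, key, reference):
    """Accuracy per model under three policies for blocked questions.

    answers:   {model: {question_id: chosen letter, or None if blocked}}
    key:       {question_id: correct letter}
    reference: model whose blocked questions define the masked subset
               (Gemini Advanced in this study)
    Assumes every model, including the reference, answered at least one question.
    """
    blocked = {q for q, a in answers[reference].items() if a is None}
    kept = [q for q in key if q not in blocked]
    results = {"excluded": {}, "masked": {}, "as_incorrect": {}}
    for model, given in answers.items():
        answered = [q for q in key if given.get(q) is not None]
        # Policy 1: score each model only on the questions it answered.
        results["excluded"][model] = sum(given[q] == key[q] for q in answered) / len(answered)
        # Policy 2 (bias elimination): drop the reference model's blocked questions for all models.
        results["masked"][model] = sum(given.get(q) == key[q] for q in kept) / len(kept)
        # Policy 3: keep every question; a blocked answer (None) never matches the key.
        results["as_incorrect"][model] = sum(given.get(q) == key[q] for q in key) / len(key)
    return results


# Hypothetical four-question example; Gemini Advanced blocks question 2.
key = {1: "A", 2: "B", 3: "C", 4: "D"}
answers = {
    "GPT-4o": {1: "A", 2: "B", 3: "C", 4: "A"},
    "Gemini Advanced": {1: "A", 2: None, 3: "B", 4: "D"},
}
print(accuracy_under_blocking(answers, key, reference="Gemini Advanced"))
```

In this toy example, the blocking model’s accuracy falls from 2/3 to 1/2 when its blocked question counts as wrong, which illustrates why the choice of policy matters when models differ in how many questions they block.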

Regarding possible repeatability issues, it is important to acknowledge that each question was prompted once for each chatbot. This approach mirrors real-world examination conditions but introduces a limitation: we cannot fully assess how much the results might vary if the testing were repeated. Testing the models multiple times on the same set of questions could reveal variations in performance that a single attempt cannot capture, and understanding these variations is crucial for assessing the stability and consistency of AI models in clinical applications. Despite this limitation, we believe the observed trends provide a strong indication of the models’ relative performance. As demonstrated in Figure 5, GPT-4o consistently outperformed the other models across image types, suggesting that these differences are unlikely to be due to chance alone and instead reflect genuine differences in capability. Moreover, several aspects of the study help minimize the impact of potential variability. We used paid subscriptions for all models, including those also available for free, to ensure optimal server-side resource allocation, reduce downtime, and mitigate fluctuations in response quality. In addition, the large size of our dataset, which we tested over multiple days and times, can act as a “buffer” against inconsistencies arising from one-time testing. Although these steps cannot entirely eliminate variability, they reduce its impact and allow us to draw meaningful conclusions about each model’s performance. We recognize that repeatability remains important and suggest that future research focus on repeated testing to further validate the consistency of AI models under varied conditions.

Discussing repeatability also raises the question of the reasoning behind the chatbots’ responses, which our study design did not capture. Although the models often provided rationales for their answers, our study focused on the accuracy of the responses rather than an in-depth evaluation of their reasoning. This decision aligns with our goal of simulating real-world exam conditions, where the emphasis is on whether the answers are correct rather than on the detailed reasoning behind them. Future research could benefit from a dedicated qualitative study that examines both the correctness of the answers and the soundness of the underlying reasoning, particularly in contexts where reliable clinical decision-making is essential.

The possibility that the models had access to the exact exam questions as part of their training data cannot be excluded. It cannot be verified, because the exact training materials have not been disclosed, and it is therefore a potential limitation of any study with a similar design, including ours. However, we consider it unlikely, because the models attempted to provide rationales for their answers, suggesting a general understanding of the tasks. Additionally, the original exam files are in Hebrew and, had they been used in training, would presumably have been translated automatically; the version used in this study was translated manually by the authors and is therefore likely phrased differently, reducing the odds that a model could retrieve existing answers from its training data.

Conclusions

Comparison of all questions in the dataset (Figure 1), regardless of year, topic, and type, shows GPT-4o outperforming both GPT-4 and Gemini Advanced, which achieved similar accuracy rates. In comparisons by sub-category, GPT-4o also outperforms its competitors in the majority of cases. Because GPT-4o was newly released at the time of testing, further refinement may improve its performance in the near future. This research invites further study of how AI handles different languages and topics and suggests possible use in ophthalmologic diagnosis. Subsequent studies should address confidence levels and repeatability in answering questions and focus further on image-interpretation capabilities. The study thus contributes to the ongoing debate on how AI can be further integrated into medicine and its subspecialties. Despite the challenging conditions, there is a clear demonstration of what AI can do for clinical decision-making and for improving patients’ health. The long-term goal, as we work to align and optimize AI and human labor, is a future in which healthcare is enhanced for medical professionals and their patients.

Acknowledgments

We extend our sincere gratitude to Dr. Haneen Jabaly-Habib, Head of the Ophthalmology Department at Tzafon Medical Center, for her exceptional mentorship and steadfast support. The American Academy of Ophthalmology reviewed and approved the methodology of this paper. We thank the Israeli Medical Association for the open sharing of their content, facilitating research and the advancement of different topics, including AI, in the medical field.

Footnote

Data Sharing Statement: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-269/dss

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-269/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-269/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. IRB approval is not required for this study in accordance with local or national guidelines.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Waisberg E, Ong J, Masalkhi M, et al. GPT-4: a new era of artificial intelligence in medicine. Ir J Med Sci 2023;192:3197-200. [Crossref] [PubMed]

- Masalkhi M, Ong J, Waisberg E, et al. Google DeepMind's gemini AI versus ChatGPT: a comparative analysis in ophthalmology. Eye (Lond) 2024;38:1412-7. [Crossref] [PubMed]

- Bansal G, Chamola V, Hussain A, et al. Transforming Conversations with AI—A Comprehensive Study of ChatGPT. Cogn Comput 2024;16:2487-510. [Crossref]

- Hello GPT-4o | OpenAI [Internet]. [cited 2024 May 23]. Available online: https://openai.com/index/hello-gpt-4o/

- Models - OpenAI API [Internet]. [cited 2024 May 23]. Available online: https://platform.openai.com/docs/models/continuous-model-upgrades

- Tian S, Jin Q, Yeganova L, et al. Opportunities and challenges for ChatGPT and large language models in biomedicine and health. Brief Bioinform 2023;25:bbad493. [Crossref] [PubMed]

- Antaki F, Milad D, Chia MA, et al. Capabilities of GPT-4 in ophthalmology: an analysis of model entropy and progress towards human-level medical question answering. Br J Ophthalmol 2024;108:1371-8. [Crossref] [PubMed]

- Antaki F, Touma S, Milad D, et al. Evaluating the Performance of ChatGPT in Ophthalmology: An Analysis of Its Successes and Shortcomings. Ophthalmol Sci 2023;3:100324. [Crossref] [PubMed]

- Mihalache A, Popovic MM, Muni RH. Performance of an Artificial Intelligence Chatbot in Ophthalmic Knowledge Assessment. JAMA Ophthalmol 2023;141:589-97. [Crossref] [PubMed]

- Cai LZ, Shaheen A, Jin A, et al. Performance of Generative Large Language Models on Ophthalmology Board-Style Questions. Am J Ophthalmol 2023;254:141-9. [Crossref] [PubMed]

- Panthier C, Gatinel D. Success of ChatGPT, an AI language model, in taking the French language version of the European Board of Ophthalmology examination: A novel approach to medical knowledge assessment. J Fr Ophtalmol 2023;46:706-11. [Crossref] [PubMed]

- Mihalache A, Grad J, Patil NS, et al. Google Gemini and Bard artificial intelligence chatbot performance in ophthalmology knowledge assessment. Eye (Lond) 2024;38:2530-5. [Crossref] [PubMed]

- Choi JY, Yoo TK. Development of a novel scoring system for glaucoma risk based on demographic and laboratory factors using ChatGPT-4. Med Biol Eng Comput 2025;63:75-87. [Crossref] [PubMed]

- Carlà MM, Gambini G, Baldascino A, et al. Exploring AI-chatbots' capability to suggest surgical planning in ophthalmology: ChatGPT versus Google Gemini analysis of retinal detachment cases. Br J Ophthalmol 2024;108:1457-69. [Crossref] [PubMed]

- Katz U, Cohen E, Shachar E, et al. GPT versus Resident Physicians — A Benchmark Based on Official Board Scores. NEJM AI 2024. doi: 10.1056/AIdbp2300192.

- Lee P, Bubeck S, Petro J. Benefits, Limits, and Risks of GPT-4 as an AI Chatbot for Medicine. N Engl J Med 2023;388:1233-9. [Crossref] [PubMed]

- Preiksaitis C, Rose C. Opportunities, Challenges, and Future Directions of Generative Artificial Intelligence in Medical Education: Scoping Review. JMIR Med Educ 2023;9:e48785. [Crossref] [PubMed]

- Israeli Medical Association | Residency Website | Ophthalmology Exams [Internet]. [cited 2024 Sep 12]. Available online: https://www.ima.org.il/InternesNew/ViewCategory.aspx?CategoryId=14188

- Hassani H, Silva ES. The Role of ChatGPT in Data Science: How AI-Assisted Conversational Interfaces Are Revolutionizing the Field. Big Data Cogn Comput 2023;7:62. [Crossref]

- Bayer M, Kaufhold MA, Buchhold B, et al. Data augmentation in natural language processing: a novel text generation approach for long and short text classifiers. Int J Mach Learn Cybern 2023;14:135-50. [Crossref] [PubMed]

- Zhou S, Zhang Y. DATLMedQA: A Data Augmentation and Transfer Learning Based Solution for Medical Question Answering. Appl Sci 2021;11:11251. [Crossref]

- Shukla M, Goyal I, Gupta B, et al. A Comparative Study of ChatGPT, Gemini, and Perplexity. International Journal of Innovative Research in Computer Science and Technology (IJIRCST) 2024;12:10-5. [Crossref]

- Bahir D, Zur O, Attal L, et al. Gemini AI vs. ChatGPT: A comprehensive examination alongside ophthalmology residents in medical knowledge. Graefes Arch Clin Exp Ophthalmol 2024. [Crossref] [PubMed]

- Mihalache A, Huang RS, Cruz-Pimentel M, et al. Artificial intelligence chatbot interpretation of ophthalmic multimodal imaging cases. Eye (Lond) 2024;38:2491-3. [Crossref] [PubMed]

- Mihalache A, Huang RS, Popovic MM, et al. Accuracy of an Artificial Intelligence Chatbot's Interpretation of Clinical Ophthalmic Images. JAMA Ophthalmol 2024;142:321-6. [Crossref] [PubMed]

- Li F, Su Y, Lin F, et al. A deep-learning system predicts glaucoma incidence and progression using retinal photographs. J Clin Invest 2022;132:e157968. [Crossref] [PubMed]

- Poplin R, Varadarajan AV, Blumer K, et al. Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat Biomed Eng 2018;2:158-64. [Crossref] [PubMed]

- Li Z, He Y, Keel S, et al. Efficacy of a Deep Learning System for Detecting Glaucomatous Optic Neuropathy Based on Color Fundus Photographs. Ophthalmology 2018;125:1199-206. [Crossref] [PubMed]

- De Fauw J, Ledsam JR, Romera-Paredes B, et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat Med 2018;24:1342-50. [Crossref] [PubMed]

Cite this article as: Shvartz E, Zur O, Attal L, Nujeidat Z, Plopsky G, Bahir D. GPT-4o vs. residency exams in ophthalmology—a performance analysis. J Med Artif Intell 2025;8:48.