Use of artificial intelligence to transcribe and summarise general practice consultations

Highlight box

Key findings

• In this study, the cloud-based GPT-4 model was successful in consistently producing reasonably complete and accurate summaries of N=47 simulated primary care consultations, based on two independent reviewers using a validated tool to evaluate the quality of electronic health care records.

What is known and what is new?

• It has been speculated that large language models (LLMs) like ChatGPT could be used routinely in day-to-day work and could potentially improve efficiency in healthcare.

• Our study is an important first step towards the safe implementation of artificial intelligence/LLMs in summarising doctor-patient consultations, and a proof-of-concept demonstration that this technology is now capable of supporting the daily workings of the medical profession.

What is the implication, and what should change now?

• Further research testing this technology in real-life clinical scenarios is needed before routine implementation in practice is considered.

Introduction

Since the announcement of OpenAI’s ChatGPT (1), it has been speculated that large language models (LLMs) like ChatGPT could be used routinely in day-to-day work and could potentially improve efficiency in healthcare. The place of such artificial intelligence (AI) programs is of particular interest to healthcare professionals as a prospective administrative, educational and decision-making aid, but concerns have been raised about the spread of misinformation, perpetuation of biases, and loss of accountability (2-4).

LLMs are a type of AI algorithm trained on large volumes of text data collected from the internet to generate outputs that mimic human-written text in response to prompts or instructions (2,3,5). These outputs can be conversations, texts, summaries, poems, essays, or translations (2,3,5). The three core capabilities of LLMs that could be used in a healthcare setting are natural language processing, the ability to extract information and context from text, and the ability to generate understandable, human-like outputs. These capabilities can be used to handle clinical administrative tasks, aiding in documentation and summarising large patient health records.

Voice recognition technology is another field of AI that is becoming commonplace. Popularised by ‘smart speaker’ devices and transcription software, AI-powered real-time transcription of interviews, lectures or conversations, with background interference filtered out by the program, is now readily available (6).

Several concerns have been identified regarding the use of AI models in general. Because training data are required to tailor a model to its use case, there is a risk of training bias depending on the variety and quality of the training data (6-8). There are also concerns about ‘hallucination’, a phenomenon where LLMs generate output that is nonsensical, false or misleading with respect to the provided input. As a result, there is a risk that an LLM such as ChatGPT can add information to fit previously seen patterns or note structures, creating a significant risk to patient safety if implemented without detailed evaluation (7,9). Hallucinations or falsifications commonly occur due to limitations in training data, inherent biases in the model or a lack of domain-specific knowledge.

Other drawbacks have also been identified, such as the ‘black box’ nature of these applications, referring to a lack of transparency in how AI models use, interpret and retain data inputs. Finally, there are concerns around the privacy of patients’ medical information when it is shared with the host computers of cloud-based models such as OpenAI’s ChatGPT (1,5,7).

General practitioners (GPs) are often the first and main point of contact for general healthcare in the UK National Health Service (NHS). On average, there are 30 million GP consultations taking place every year across the UK. GPs spend a significant portion of their working time documenting notes from patient consultations in electronic records. A study involving N=61 GPs in the UK revealed that GPs spend 14% of their working time documenting consultation notes and updating electronic health care records (10). Furthermore, 8.6% of all interruptions during a GP’s workday stem from issues related to computers, technology and electronic health records (EHRs) (11). This highlights the need for an efficient and reliable way to reduce the administrative burden on primary care clinicians. An assistive tool that reduces this burden could also help to address the high levels of GP burnout, which has worsened significantly over the past 15 years and is known to be associated with increasing administrative burden (12). In addition, manual transcription of findings from consultations may introduce errors and inconsistency. In recent years, it has been suggested that recording consultations between patients and doctors could reduce the administrative burden on doctors and improve the quality of healthcare (13).

Our study objective was to explore the use of the most advanced LLMs to develop a tool for auto-transcribing and auto-summarising general practice consultations.

Methods

Study design

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). Because the study used publicly available data in the form of videos of mock consultations and no identifiable information was used, informed consent and ethical board review were not deemed necessary. This proof-of-concept study was designed to evaluate the feasibility of developing a fine-tuned LLM capable of transcribing primary care consultations in real time and summarising them into accurate and clinically relevant consultation notes. It was conducted using publicly accessible recordings of simulated (mock) primary care consultations, to evaluate whether there is scope for further development and testing in real-life settings involving clinical patients.

Test sample

A bank of recorded mock primary care consultations was collected. All the recordings used in the study were obtained from publicly available sources that required no login information or paywall. All recordings were mock GP consultations performed by an actor and an experienced GP to help with the teaching and training of GPs. The topics covered a variety of common first presentations, health enquiries about quitting smoking or alcohol, and other common topics such as diabetic reviews and patients making complaints or raising concerns about their care. All recorded mock consultations took place in a primary care setting and involved mock patients of different demographic backgrounds.

Evaluation matrix

We wanted to perform an objective evaluation of the AI-generated medical summaries and used the ‘Q-note’ score for this purpose. The ‘Q-note’ (14) questionnaire examined 12 areas covering different elements of the consultation summary, and an additional final question on ‘overall impression’ was also included. Each area was assessed for completeness and categorised as fully complete [4], partially complete [3], unacceptable [2], missing [1], or not applicable (N/A) [4] (Figure 1). To allow for a fair comparison across different summaries, N/A was scored as 4. Thus, each AI-generated summary was given a score out of a total of 52 (Figure 1).
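The scoring scheme described above can be sketched in a few lines of Python. This is a minimal illustration rather than the tooling used in the study: the names `RATING_POINTS`, `QNOTE_FIELDS` and `total_score` are hypothetical, the field labels are those listed in Table 1, and only the point values come from the text.

```python
# Illustrative sketch of the modified Q-note scoring described above.
# All names are hypothetical; only the point values come from the text.
RATING_POINTS = {
    "fully complete": 4,
    "partially complete": 3,
    "unacceptable": 2,
    "missing": 1,
    "n/a": 4,  # N/A is scored as 4 so that summaries remain comparable
}

# The 12 Q-note areas plus the additional 'overall impression' question.
QNOTE_FIELDS = [
    "Chief complaint(s)", "History of present illness", "Problem list",
    "Past medical history", "Medications",
    "Adverse drug reactions and allergies", "Social and family history",
    "Review of systems", "Physical findings", "Assessment",
    "Plan of care", "Follow-up information", "Overall impression",
]

MAX_SCORE = 4 * len(QNOTE_FIELDS)  # 13 fields x 4 points = 52


def total_score(ratings):
    """Sum the points for one reviewer's ratings of one summary."""
    unrated = [f for f in QNOTE_FIELDS if f not in ratings]
    if unrated:
        raise ValueError(f"unrated fields: {unrated}")
    return sum(RATING_POINTS[ratings[f].lower()] for f in QNOTE_FIELDS)


# Hypothetical ratings for a single summary.
example = {f: "fully complete" for f in QNOTE_FIELDS}
example["Physical findings"] = "N/A"
example["Medications"] = "partially complete"
print(total_score(example), "out of", MAX_SCORE)  # 51 out of 52
```

Scoring N/A as 4 means that a consultation with no physical examination is not penalised relative to one in which an examination was performed and fully documented.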

When assessing the completeness of a generated summary, assessors were told not to focus on the quality and conciseness of the writing but to consider as ‘complete’ an understandable piece of writing free of mistakes, inaccuracies, or any ‘hallucinations’ by the model. The inclusion of hallucinations was an area of particular emphasis, as it has been identified that LLMs may be at risk of ‘hallucinating’ information to make a statement sound more human or fit an expected structure, a particular concern when handling medical information (9,15).

Model selection

To evaluate performance, we considered four LLMs as candidates, three of which were fine-tuned on the MTS-Dialog Dataset, a bank of 1,700 patient-doctor conversations with corresponding clinical notes (16). Three models were selected as the most promising candidates that could be run locally on the user’s machine, whereas the fourth, GPT-4, was a more powerful cloud-based LLM run by OpenAI (17). The three local models were BART (18), BioBART (19), and Flan-T5 (20). These three models were fine-tuned on the same data set and programmed to generate an acceptable output based on the same reference data. For the GPT-4 model, we opted for a one-shot in-context learning approach, which uses one random example from the MTS-Dialog Dataset as the demonstration in the prompt context (Box 1, which shows the prompt used) to generate the final summary, and which has empirically shown good performance (21). All four models then generated a set of clinical summaries for N=10 recorded mock consultations. Each recorded consultation and the corresponding summary were reviewed by one of the clinician assessors and evaluated using the study questionnaire, with awkwardly worded sentences, internal inconsistencies and language unsuitable for medical documentation also noted. The results from the four models were then compared, and the single model with the highest level of completeness was selected for further evaluation.

Table 1

| Section of GP patient consultation summary | Mean | Median | 95.0% lower CI for mean | 95.0% upper CI for mean |
|---|---|---|---|---|
| Chief complaint(s) | 3.90 | 4.00 | 3.83 | 3.98 |
| History of present illness | 3.76 | 4.00 | 3.67 | 3.84 |
| Problem list | 3.89 | 4.00 | 3.83 | 3.96 |
| Past medical history | 3.77 | 4.00 | 3.59 | 3.94 |
| Medications | 3.62 | 4.00 | 3.42 | 3.81 |
| Adverse drug reactions and allergies | 3.82 | 4.00 | 3.69 | 3.95 |
| Social and family history | 3.48 | 3.50 | 3.31 | 3.65 |
| Review of systems | 3.69 | 4.00 | 3.53 | 3.85 |
| Physical findings | 3.39 | 3.50 | 3.19 | 3.59 |
| Assessment | 3.95 | 4.00 | 3.90 | 3.99 |
| Plan of care | 3.87 | 4.00 | 3.78 | 3.96 |
| Follow-up information | 3.80 | 4.00 | 3.72 | 3.89 |
| Overall impression | 3.65 | 3.50 | 3.53 | 3.77 |

LLM, large language model; GP, general practitioner; CI, confidence interval.

Box 1 The prompt

Could you summarize the dialogue without any hallucinations? Here are some examples of them:
Dialogue: {MTS dialogue example}
Summary: {MTS summary example}
Input: {input dialogue}
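For illustration, the one-shot prompt in Box 1 could be assembled as shown below. This is a sketch under stated assumptions: the name `build_prompt` and the example strings are hypothetical, the exact line breaks of the study's prompt are not given in the text, and the call that sends the prompt to GPT-4 is deliberately omitted.

```python
# Sketch of assembling the one-shot prompt shown in Box 1.
# The wording follows Box 1; the line breaks between parts are assumed.
PROMPT_TEMPLATE = (
    "Could you summarize the dialogue without any hallucinations? "
    "Here are some examples of them:\n"
    "Dialogue: {example_dialogue}\n"
    "Summary: {example_summary}\n"
    "Input: {input_dialogue}"
)


def build_prompt(example_dialogue, example_summary, input_dialogue):
    """Fill the template with one demonstration pair and the new transcript."""
    return PROMPT_TEMPLATE.format(
        example_dialogue=example_dialogue.strip(),
        example_summary=example_summary.strip(),
        input_dialogue=input_dialogue.strip(),
    )


# Hypothetical strings standing in for an MTS-Dialog pair and a transcript.
prompt = build_prompt(
    "Doctor: What brings you in today? Patient: I have had a cough for a week.",
    "Patient presents with a one-week history of cough.",
    "Doctor: How can I help? Patient: My knee has been sore since Sunday.",
)
print(prompt)
```

Keeping the demonstration pair and the new transcript as separate arguments makes it straightforward to draw a different random example from the dataset for each run.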

Outcome evaluation

The selected model was run on a bank of N=47 audio and video-recorded example primary care consultations. Each consultation was viewed by the primary reviewer and a second reviewer, who evaluated the generated summary independently and recorded any comments on the note generated. The collated data from all the evaluation forms were reviewed, and any summaries with significant differences between the two reviews were checked for mistakes during data collection. A significant difference was defined as any pair of reviews in which one reviewer rated a section fully complete and the other rated it either missing or incorrect. In cases where the two reviewers scored differently, an average was taken.
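The reconciliation of the two reviews can be sketched as follows. This is an illustrative reading of the procedure, not the study's own code: the function names are hypothetical, scores are assumed to be on the 1 to 4 scale of the evaluation form, and a rating of 'incorrect' is assumed to correspond to 'unacceptable' (2).

```python
# Sketch of combining two reviewers' scores for one summary.
# Names are hypothetical; scores use the 1-4 scale of the evaluation form.
FULLY_COMPLETE = 4
UNACCEPTABLE = 2  # assumption: 2 (unacceptable) or 1 (missing) counts as low


def significant_differences(scores_a, scores_b):
    """Return fields where one reviewer gave 4 and the other gave 2 or less.

    Simplification: N/A is also stored as 4, so an N/A-versus-missing
    pair would be flagged for checking as well.
    """
    flagged = []
    for field in scores_a:
        high = max(scores_a[field], scores_b[field])
        low = min(scores_a[field], scores_b[field])
        if high == FULLY_COMPLETE and low <= UNACCEPTABLE:
            flagged.append(field)
    return flagged


def averaged_scores(scores_a, scores_b):
    """Average the two reviewers' scores field by field."""
    return {f: (scores_a[f] + scores_b[f]) / 2 for f in scores_a}


# Hypothetical scores for three fields of one summary.
reviewer_1 = {"Medications": 4, "Assessment": 4, "Physical findings": 3}
reviewer_2 = {"Medications": 1, "Assessment": 4, "Physical findings": 4}
print(significant_differences(reviewer_1, reviewer_2))  # ['Medications']
print(averaged_scores(reviewer_1, reviewer_2))
```

Flagged fields would be rechecked for data-collection mistakes before the averaged scores are used.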

Statistical analysis

The total and individual component Q-note scores were presented with mean and median values. A Kruskal-Wallis test was performed to detect any significant difference in total modified Q-note score between the different clinical presentations.
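As a reminder of what the Kruskal-Wallis test computes, the sketch below derives the tie-corrected H statistic from the pooled ranks of the groups. It is a pure-Python illustration, not the software used in the study (which is not stated); the function name and the example scores are hypothetical. A P value would be obtained by comparing H with a chi-square distribution with k-1 degrees of freedom for k groups; in practice a library routine such as `scipy.stats.kruskal` returns both.

```python
# Pure-Python sketch of the Kruskal-Wallis H statistic with tie correction.
from collections import Counter


def kruskal_wallis_h(groups):
    """Return the tie-corrected H statistic for a list of score lists.

    Undefined (division by zero) if every pooled value is identical.
    """
    pooled = [x for g in groups for x in g]
    n = len(pooled)
    # Give each distinct value the average of the ranks it occupies.
    counts = Counter(pooled)
    rank_of = {}
    position = 0
    for value in sorted(counts):
        t = counts[value]
        rank_of[value] = position + (t + 1) / 2
        position += t
    # Uncorrected H from the rank sum of each group.
    h = 12 / (n * (n + 1)) * sum(
        sum(rank_of[x] for x in g) ** 2 / len(g) for g in groups
    ) - 3 * (n + 1)
    # Correction factor for tied values.
    correction = 1 - sum(t**3 - t for t in counts.values()) / (n**3 - n)
    return h / correction


# Hypothetical total scores for three clinical presentation groups.
hypothetical_groups = [[48, 50, 47.5], [49, 51, 52], [46, 45, 50.5]]
print(kruskal_wallis_h(hypothetical_groups))
```

Because the test works on ranks rather than raw scores, it does not assume that total scores are normally distributed, which suits bounded scores that cluster near the maximum.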

Qualitative evaluation

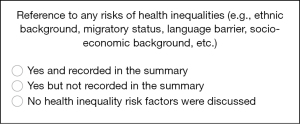

A full list of any free text ‘qualitative’ comments included in the reviews was then collected and analysed for any common trends in keywords, or description of errors. The secondary outcome was looking at whether the summarizing model would pick up on context or references to personal situations that would put the patient at increased risk of health inequality. These could include references to socio-economic situation, immigration status, language barriers or any other concern the reviewer considered may put the patient at risk of not receiving the best possible care. We were looking for any scenarios where factors related to health inequalities were discussed but not included in the summary (Figure 2).

Results

The initial review of the four models revealed that the Flan-T5, BART and BioBART models generated summaries with incomplete sentences or incomplete information, and the evaluation of these models was therefore stopped early. The model built on the GPT-4 API produced full summaries covering the whole duration of each consultation and was selected for the full evaluation. The GPT-4-based model produced summaries of 47 mock general practice consultations, each of which was scored independently by two reviewers.

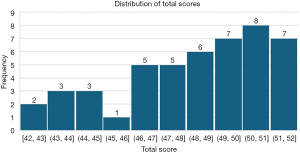

Primary outcome-overall performance

Of the 47 consultations summarized by the final GPT-4 model, 38 (80.9%) scored above 46 out of a total possible score of 52 (Figure 3) on the modified Q-note score. Across all the summaries, six instances of a summary element were rated as ‘unacceptable’, with both reviewers agreeing only once. Only one instance of the reviewer’s “overall impression” was rated as ‘unacceptable’. For each of the 13 evaluation fields, the mean score ranged from 3.39 to 3.95 (Table 1). On average, the summaries scored worst on the physical findings section (mean score, 3.39) and scored best on the assessment (mean score, 3.95). No statistically significant difference was detected between any of the topic groups (P value =0.48). The results from an independent sample Kruskal-Wallis test can be viewed in Figure 4.
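The descriptive statistics of the kind reported here and in Table 1 can be sketched as below. The text does not state how the confidence intervals were calculated, so the normal approximation used in this sketch is an assumption (a t-based interval would be slightly wider for small samples); the function names and example scores are hypothetical.

```python
# Sketch of the descriptive statistics for one evaluation field.
# Assumption: a normal-approximation confidence interval for the mean.
from statistics import NormalDist, mean, median, stdev


def describe(scores, confidence=0.95):
    """Mean, median and an approximate confidence interval for the mean."""
    n = len(scores)
    centre = mean(scores)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(scores) / n ** 0.5
    return {
        "mean": centre,
        "median": median(scores),
        "ci_lower": centre - half_width,
        "ci_upper": centre + half_width,
    }


def share_above(totals, threshold):
    """Fraction of total scores strictly above the threshold."""
    return sum(t > threshold for t in totals) / len(totals)


# Hypothetical averaged scores for one field and hypothetical totals.
hypothetical_field_scores = [4, 4, 3.5, 4, 3, 4, 3.5, 4]
hypothetical_totals = [50, 47, 46, 52, 44]
print(describe(hypothetical_field_scores))
print(share_above(hypothetical_totals, 46))  # 0.6
print(round(100 * 38 / 47, 1))  # 80.9, the percentage reported above
```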

Analysis across different clinical presentations

Each consultation was retrospectively classified into 12 different categories, based on symptom presentation. Mean performance Q-note scores across different clinical presentations are presented in Table 2.

Table 2

| Clinical presentation based on different body systems and themes | Frequency | Percent | Mean total score |
|---|---|---|---|
| Cardiovascular | 3 | 6.4 | 48.50 |
| Communication skills | 3 | 6.4 | 49.67 |
| Dermatology | 5 | 10.6 | 48.70 |
| Endocrinology | 2 | 4.3 | 50.75 |
| Gastrointestinal | 3 | 6.4 | 45.75 |
| Geriatric health | 1 | 2.1 | 45.17 |
| Mental health | 2 | 4.3 | 52.00 |
| Musculoskeletal | 5 | 10.6 | 49.75 |
| Neurology | 6 | 12.8 | 49.10 |
| Paediatrics | 3 | 6.4 | 47.67 |
| Respiratory | 4 | 8.5 | 48.00 |
| Sexual health | 10 | 21.3 | 48.80 |
| Total | 47 | 100.0 | 48.51 |

LLM, large language model.

Qualitative findings

From the comments section of the evaluation form, the reviewers left their reasoning behind any markdowns from “fully complete” in the review process. From these comments, themes were identified as common or notable issues with the AI-produced summaries. The most commonly recurring reason for a summary section not to be marked “fully complete” was the absence of pertinent negative findings or negative history in the summary. A comment along this theme was included in 38% (18 of 47) of the consultation summaries. This often referred to the initial history of presenting complaints where important symptoms to be ruled out were not recorded or in response to allergies or adverse reactions. In two consultation summaries, the patient mentioned an allergy or reference to a historic reaction to penicillin, but it was not recorded in the AI-generated summary. In 14 (30%) summaries, it was noted that during the physical examination, while the performance of a physical exam was recorded, any numerical findings, such as weight, blood pressure or peak flow, were not recorded or only described as usual in the summary. In four of the AI-generated summaries, it was commented that the note did not record the patient’s use of over-the-counter medications such as the contraceptive pill.

Detection of health inequality factors

From the review process, none of the consultations included in the study featured any discussion relevant to health inequalities.

Discussion

Key findings

The primary objective of this research was to evaluate the feasibility of using new AI algorithms such as LLMs for the purpose of summarising GP consultations in real time. The study utilised four base models, of which only the cloud-based GPT-4 model produced satisfactory outputs. Of the summaries generated by the chosen GPT-4-based model in this initial study, 80.9% scored above 88% of the maximum modified Q-note score, indicating reasonable accuracy and completeness. The sample of mock consultations covered a wide spectrum of primary care presentations, and the model performed comparably across all topics tested. A small portion of the summaries were rated poorly, although only one case was given an overall impression score of unacceptable, for getting the overall focus of the consultation note incorrect. This, however, was a more complex case in which the patient’s primary aim was to obtain a referral to a specialist rather than a diagnosis, highlighting a need for further investigation into the model’s performance with complex consultations.

Comparison with existing literature

Research on the potential implementation of AI/LLMs in clinical practice is still minimal. Previous studies have demonstrated methods for AI scribing tools to support students with disabilities through multiple inputs (22), and another study proposed a method of developing an AI-powered medical scribe for clinical encounters in the hospital setting, where a member of the team would normally document the encounter (23). Wang et al. focussed their evaluation on the time saved by their tool ‘AI digital scribe’ and found that the digital scribe was 2.7 times faster than typing and dictation (24). Clough et al. and Tung et al. evaluated discharge summaries generated by GPT-4 and compared them with clinicians’ summaries (25,26). However, no previous study has systematically explored the accuracy and completeness of LLM-summarised clinical notes generated from transcribed conversations, an essential step towards implementation in real-world clinical encounters.

Strengths and limitations

The nature of the Q-note scoring matrix means that the model will score higher for longer notes. In practice, concise notes containing pertinent information are most often useful for quick reference. In the future, it may be useful to specify in the prompt which areas of the consultation are of greatest importance, helping the model to prioritise what is recorded. In addition, due to the small sample size for some topics, differences in performance in endocrine or geriatrics-focused consultations could not be excluded.

The testing of the models on videos or recordings of mock GP consultations designed for exam practice also limits the generalisability of the findings of the study. Physical examination was often not performed during the video; instead, the findings appeared on the screen or were given on a note for the physician to discuss with the patient. The model may perform better when transcribing a full consultation in which the physical examination is performed while the model is transcribing, and further testing is required to see how accurately it follows the consultation. The use of primarily educational videos for testing also meant that there were few instances of complex, multimorbidity presentations with more than one presenting complaint, which make up an increasing share of the demand on primary care practitioners (28); the majority of the consultations in the sample focused on a single presenting issue.

Health inequalities are of important significance at both a practice and a policy level (27). In researching the place for AI tools in healthcare, we wanted to ensure that implementing such tools would not worsen health inequalities by further overlooking risk factors for patients being missed or otherwise underserved by the healthcare system. However, from the evaluation of our sample, we found a gap in education resources, as none of the OSCE-style mock educational consultations available contained discussion of such factors.

Implications and actions needed

To the best of our knowledge, the primary factors contributing to the performance differences between the local models (BART, BioBART, and T5) and GPT-4 are the number of parameters and the token size limitations for input and output. While the exact number of parameters for GPT-4 has not been disclosed, its predecessor, GPT-3, is known to contain approximately 175 billion parameters, suggesting that GPT-4 likely exceeds this figure. In contrast, local models such as BART, BioBART, and T5 typically have significantly fewer parameters. For instance, BART’s large variant has around 406 million parameters, while T5 ranges from 60 million to 11 billion parameters depending on the specific version. These figures are substantially lower than those of the GPT models, which may account for some of the observed performance disparities.

Moreover, the token size limitations in smaller models like BART and BioBART hinder their ability to effectively process and summarise large-scale transcripts from video or audio files. These constraints restrict their capacity to generate comprehensive summaries from extensive raw data. Identifying or developing more robust local models capable of handling larger token sizes and producing longer outputs could offer a more reliable solution for processing and summarising substantial volumes of data. Future research should focus on optimising these models to achieve a better balance between model parameter size and performance.

The findings from this study have shown that currently available LLM technologies have the potential to generate accurate and complete summaries of primary care consultations with a good degree of consistency. Further work is still required, however, before this technology is ready for implementation with real patient encounters in clinical practice. Several limitations and challenges have been identified for the implementation of AI and LLMs in a healthcare setting. Firstly, the high cost of LLM application programming interface (API) requests has hindered the application of LLMs in healthcare. This is now improving, with many LLM APIs becoming available, many of which are much more affordable than before. Secondly, the use of a cloud-based AI solution still poses serious challenges in maintaining the privacy and protection of sensitive patient data. The storage of patient data also raises a significant ethical question about the use of patient data in the training and further development of AI tools, which by nature are designed to be self-improving through the continuous collection of usage data. Importantly, it is unknown how this technology is viewed by patients and physicians, which will be crucial to understand before considering wider implementation.

The evaluation of the videos has identified a noticeable gap in education resources, with very few references to health inequalities in the general practice mock consultations often used for educational purposes. This is an increasingly discussed topic within the profession, and it is important that educational resources continue to be produced to reflect these developments.

Conclusions

This study is a promising first step in evaluating the use of LLM-based AI assistants to reduce the significant administrative burden that accurate note-keeping for electronic health care records places on clinicians. Further research is required in this area, and the ethical and data protection issues need to be discussed by the wider medical and computer science community. However, an effective method of evaluating and comparing such tools has been demonstrated, along with a proof of concept that this technology is now capable of supporting the daily workings of the medical profession. Further research testing this technology in real-life clinical scenarios is needed before routine implementation in practice is considered.

Acknowledgments

None.

Footnote

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-257/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-257/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). Because the study used publicly available data in the form of videos of mock consultations and no identifiable information was used, informed consent and ethical board review were not deemed necessary.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Floridi L, Chiriatti M. GPT-3: Its nature, scope, limits, and consequences. Minds and Machines 2020;30:681-94.

- Clusmann J, Kolbinger FR, Muti HS, et al. The future landscape of large language models in medicine. Commun Med (Lond) 2023;3:141.

- Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. EBioMedicine 2023;90:104512.

- Cascella M, Montomoli J, Bellini V, et al. Evaluating the Feasibility of ChatGPT in Healthcare: An Analysis of Multiple Clinical and Research Scenarios. J Med Syst 2023;47:33.

- Briganti G. A clinician’s guide to large language models. Future Med AI 2023. doi: 10.2217/fmai-2023-0003.

- Chen H, Gomez C, Huang CM, et al. Explainable medical imaging AI needs human-centered design: guidelines and evidence from a systematic review. NPJ Digit Med 2022;5:156.

- Sallam M. ChatGPT Utility in Healthcare Education, Research, and Practice: Systematic Review on the Promising Perspectives and Valid Concerns. Healthcare (Basel) 2023;11:887.

- Leslie D, Mazumder A, Peppin A, et al. Does "AI" stand for augmenting inequality in the era of covid-19 healthcare? BMJ 2021;372:n304.

- Xiao Y, Wang WY. On Hallucination and Predictive Uncertainty in Conditional Language Generation. In: Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume. 2021:2734-44.

- Edwards PJ. GPs spend 14% of their session time documenting consultation notes and updating electronic health records. Br J Gen Pract 2024;74:202.

- Sinnott C, Moxey JM, Marjanovic S, et al. Identifying how GPs spend their time and the obstacles they face: a mixed-methods study. Br J Gen Pract 2022;72:e148-60.

- Hall LH, Johnson J, Watt I, et al. Association of GP wellbeing and burnout with patient safety in UK primary care: a cross-sectional survey. Br J Gen Pract 2019;69:e507-14.

- Elwyn G. Recording consultations—an inevitable future which could improve healthcare. BMJ Opinion 2020. Available online: https://blogs.bmj.com/bmj/2020/03/12/recording-consultations-an-inevitable-future-which-could-improve-healthcare/

- Burke HB, Hoang A, Becher D, et al. QNOTE: an instrument for measuring the quality of EHR clinical notes. J Am Med Inform Assoc 2014;21:910-6.

- Shen Y, Heacock L, Elias J, et al. ChatGPT and Other Large Language Models Are Double-edged Swords. Radiology 2023;307:e230163.

- Abacha AB, Yim W, Fan Y, et al. An empirical study of clinical note generation from doctor-patient encounters. In: Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics. 2023:2291-302.

- Brin D, Sorin V, Vaid A, et al. Comparing ChatGPT and GPT-4 performance in USMLE soft skill assessments. Sci Rep 2023;13:16492.

- Lewis M, Liu Y, Goyal M, et al. BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. 2020:7871-80.

- Yuan H, Yuan Z, Gan R, et al. BioBART: Pretraining and Evaluation of A Biomedical Generative Language Model. In: Proceedings of the 21st Workshop on Biomedical Language Processing. 2022:97-109.

- Longpre S, Hou L, Vu T, et al. The flan collection: Designing data and methods for effective instruction tuning. In: Proceedings of the 40th International Conference on Machine Learning. 2023:22631-48.

- Mathur Y, Rangreji S, Kapoor R, et al. SummQA at MEDIQA-Chat 2023: In-Context Learning with GPT-4 for Medical Summarization. In: Proceedings of the 5th Clinical Natural Language Processing Workshop. 2023:490-502.

- Ganesh Bharathwaj VG, Gokul PS, Yaswanth MV, et al. Artificial Intelligence-Based Scribe. In: Innovative Data Communication Technologies and Application: Proceedings of ICIDCA 2020. Singapore: Springer; 2021:819-29.

- Finley G, Edwards E, Robinson A, et al. An automated medical scribe for documenting clinical encounters. In: Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations. 2018:11-5.

- Wang J, Lavender M, Hoque E, et al. A patient-centered digital scribe for automatic medical documentation. JAMIA Open 2021;4:ooab003.

- Clough RAJ, Sparkes WA, Clough OT, et al. Transforming healthcare documentation: harnessing the potential of AI to generate discharge summaries. BJGP Open 2024;8:BJGPO.2023.0116.

- Tung JYM, Gill SR, Sng GGR, et al. Comparison of the Quality of Discharge Letters Written by Large Language Models and Junior Clinicians: Single-Blinded Study. J Med Internet Res 2024;26:e57721.

- Marmot M. Health equity in England: the Marmot review 10 years on. BMJ 2020;368:m693.

- Head A, Fleming K, Kypridemos C, et al. OP29 Dynamics of multimorbidity in England between 2004 and 2019: a descriptive epidemiology study using the clinical practice research datalink. J Epidemiol Community Health 2020;74:A14.

Cite this article as: Haniff Q, Meng Z, Pongkemmanun T, Sia ZC, Newport H, Ooi Y, Jani BD. Use of artificial intelligence to transcribe and summarise general practice consultations. J Med Artif Intell 2025;8:43.