Multimodal deep learning breast cancer prognosis models: narrative review on multimodal architectures and concatenation approaches

Introduction

A prognosis is the predicted course and outcome of a disease, including the likelihood of successful treatment and patient recovery (1). Breast cancer is the most common cancer in women worldwide. Improvements in survival have been linked to advances in treatment, early detection through screening programs, and increased disease awareness. Prognosis is estimated from the outcomes of large groups of patients who were diagnosed with similar cancers and followed over many years. It can be described qualitatively (e.g., good or poor) or quantitatively, and it is frequently expressed as a five- or ten-year survival rate.

Machine learning (ML) is a powerful tool for breast cancer prognosis prediction, enabling the analysis and interpretation of complex biological data to identify patterns and relationships. Various techniques, such as neural networks, support vector machines, random forests, and ensemble methods, have been shown to enhance the accuracy of predictive models (2-5). Deep learning, a subset of ML, has transformed breast cancer prognosis prediction by automatically extracting meaningful features from complex, high-dimensional data. Deep learning algorithms, such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can process diverse data types and have been used to integrate genetic, histopathological, and radiological information to predict survival and treatment response. This automatic feature extraction reduces reliance on manual feature engineering, allowing models to discover subtle patterns and interactions. ML and deep learning have improved breast cancer prognosis prediction on both unimodal and multimodal datasets. Unimodal approaches predict patient outcomes from a single data source, such as gene expression profiles or histopathological images, whereas multimodal approaches combine diverse data types such as genomics, imaging, and clinical records. Deep learning is particularly well suited to integrating these sources and capturing complex relationships between modalities. Several studies (6-8) have demonstrated that moving from unimodal to multimodal models improves accuracy and enables more personalized predictions in breast cancer care. The rapid growth of public multi-omics datasets, such as The Cancer Genome Atlas (TCGA), the Molecular Taxonomy of Breast Cancer International Consortium (METABRIC), OMICS, and others, has greatly accelerated cancer research.

Numerous reviews address cancer prognosis, but most focus on the application of ML and deep learning predictive models in unimodal or multimodal settings, leaving gaps in the analysis of multimodal deep learning techniques, which are increasingly vital for handling the complexity of breast cancer data. Existing reviews have not specifically examined how multimodal architectures and concatenation approaches can enhance predictive accuracy and address the integration challenges posed by diverse data types, such as clinical, genomic, and imaging data. This review aims to fill this gap by focusing on the potential of multimodal architectures, particularly advanced concatenation techniques, to improve predictive power and support precision medicine in breast cancer treatment. It contributes to the literature by evaluating state-of-the-art multimodal approaches in breast cancer prognosis and by identifying gaps in model interpretability, robustness, and the handling of tumor heterogeneity that warrant further research.

For each paper in the survey, we discuss the multimodal datasets, preprocessing techniques, multimodal architectures, concatenation approaches, results, and limitations. This survey provides an up-to-date analysis of multimodal breast cancer prognosis using deep learning methods, summarizing and comparing the related work. We present this article in accordance with the Narrative Review reporting checklist (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-146/rc).

Objective of the study

- To critically review studies published under deep multimodal learning for breast cancer prognosis.

- To identify the proposed multimodal architectures and concatenation approaches used in the literature and their contributions to precision medicine for breast cancer patients.

- To identify the models’ limitations and future research gaps.

Methods

A literature review was conducted to identify multimodal learning models based solely on deep learning techniques. Table 1 provides the detailed search strategy. Candidate papers were retrieved from the Google Scholar, Scopus, and Web of Science databases using search terms that included “Breast cancer prognosis prediction”, “Multimodal deep learning in breast cancer prognosis”, and “Deep learning in breast cancer prognosis prediction”. We found 90 potential papers, which were reviewed and filtered; 71 articles were excluded because they did not demonstrate multimodal breast cancer prognosis using deep learning methods. The publication date was not restricted. Backward and forward searches were conducted from the matched results by assessing the references of matched articles and the sources that had cited them, and this process was repeated until no further relevant publications were found. Finally, 19 publications on multimodal deep learning breast cancer prognosis were selected, comprising 18 journal articles and one conference paper.

Table 1

| Items | Specification |
|---|---|
| Date of search | October 1, 2023, and September 28, 2024 |
| Databases | Google Scholar, Scopus, and Web of Science |
| Search terms | “Breast cancer prognosis prediction”, “multimodal deep learning in breast cancer prognosis”, and “deep learning in breast cancer prognosis prediction” were among the terms used in the search |
| Timeframe | 2023–2024 |
| Inclusion/exclusion criteria | We found 90 articles during the search; after reviewing and filtering, 19 articles were selected, and those that did not demonstrate multimodal breast cancer prognosis using deep learning methods were removed |
| Selection process | Aminu evaluated and filtered the retrieved titles separately for content suitability, which C.X. later verified. In case of discrepancies, Z.Z. cross-checked the titles and made the final selection |

ML review

ML approaches have been increasingly integrated into breast cancer prognosis prediction, using both unimodal and multimodal data to improve the accuracy and personalization of outcome predictions (9). Unimodal models, which focus on a single type of data such as gene expression or clinical features, simplify analysis and interpretation while minimizing computational resources (10-12). Models based solely on gene expression profiles can effectively predict survival outcomes, as evidenced by the performance of the 70-gene signature in stratifying patients by risk level (13). Conversely, multimodal approaches, which integrate various data types such as imaging, genomic, and clinical data, have been shown to support more comprehensive predictive models (14-16).

Unimodal predictive models

In recent years, various approaches have been explored for unimodal breast cancer survival prediction, with significant advances in gene expression profiling and ML techniques. One notable study (17) pioneered the use of a 70-gene signature to predict breast cancer prognosis, demonstrating its superior predictive capability compared with traditional clinical factors. The study used Kaplan-Meier survival curves and multivariate proportional hazards regression to validate the signature, marking a milestone in the development of genetics-based prognostic tools. Similarly, a study (18) introduced a pathway-based deregulation scoring matrix to enhance gene-based models, achieving an area under the curve (AUC) of 0.83 on training data and 0.79 on test data, which underscores the value of biological pathways for improving breast cancer prognosis. This approach extended gene-expression models by capturing gene deregulation at the level of cancer pathways.
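The Kaplan-Meier curves used to validate the 70-gene signature (17) are produced by the product-limit estimator. The sketch below is a minimal, plain-Python illustration of that estimator for right-censored data; the function name and the toy cohort are hypothetical, and this is not the code used in the cited study.

```python
from typing import List, Tuple


def kaplan_meier(times: List[float], events: List[int]) -> List[Tuple[float, float]]:
    """Product-limit (Kaplan-Meier) estimate of the survival function S(t).

    times:  follow-up time of each patient
    events: 1 if the event (e.g., death) was observed, 0 if the patient was censored
    Returns (event_time, S(event_time)) pairs at each distinct observed event time.
    """
    data = sorted(zip(times, events))
    n_at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # Group every patient whose follow-up ends at time t
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            removed += 1
            i += 1
        if deaths:
            # S(t) = S(t-) * (1 - d_t / n_t)
            survival *= 1.0 - deaths / n_at_risk
            curve.append((t, survival))
        # Both deaths and censored patients leave the risk set after t
        n_at_risk -= removed
    return curve


# Toy cohort (made-up numbers): follow-up in years, 1 = death, 0 = censored
print(kaplan_meier([2, 3, 3, 5, 8], [1, 1, 0, 1, 0]))
```

In a prognostic study, one curve would typically be estimated per risk group (for example, patients a signature labels as good versus poor prognosis), and the curves compared with a log-rank test.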

Recent studies have shown that ML-based models produce promising results in breast cancer survival prediction. Researchers (19) combined neural networks with extra-trees classifiers to develop an architecture that achieved 99.41% accuracy on the Wisconsin Breast Cancer dataset. Although the model showed near-perfect sensitivity and specificity, its generalizability to larger datasets remains a concern. Similarly, a support vector machine-recursive feature elimination (SVM-RFE) method identified a 50-gene signature that outperformed the earlier 70-gene model, with improved accuracy (96.95%) and an AUC of 0.99 (20). Lastly, researchers integrated genomic and transcriptomic profiles to stratify patients into high- and low-risk categories, revealing significant survival differences between the groups (21).

Collectively, these studies trace the evolution of unimodal breast cancer prediction from gene-expression-based signatures to more advanced ML methods. They also show that models built on a single data modality can still deliver precise and clinically valuable breast cancer prognosis predictions.

Multimodal predictive approaches

The reviewed studies illustrate diverse ways of using ML models for multimodal breast cancer survival prediction, with particular emphasis on tree-based models, Bayesian networks (BN), and feature selection algorithms. A study (22) used statistical classification trees to integrate clinical factors such as lymph node status and estrogen receptor levels, achieving high accuracy and sensitivity (up to 90%); however, the method adapted poorly because of its threshold limitations. Another study (23) extended this line of work by applying BN in a multimodal setup that incorporated gene expression and clinical data, achieving an AUC of 0.793. Although this integration improved predictive performance, small sample sizes remained a limitation. Together, these findings highlight the potential of integrating clinical and genetic data to improve breast cancer prognosis, particularly with flexible models like BN that offer better adaptability and predictive power than traditional methods.

Furthermore, feature selection methods such as the I-RELIEF and MEMBAS algorithms have shown promise in improving the prognostic capabilities of breast cancer models. A study (24) used I-RELIEF to identify key genetic and clinical markers, achieving 90% sensitivity but limited specificity (67%), highlighting the need for larger datasets to validate the model further. To address such limitations, researchers (25) combined fuzzy feature selection with a multimodal data approach, achieving over 70% prediction accuracy using only 15 selected markers. Logistic regression and SVM-based hybrid models have also contributed to advances in multimodal breast cancer prediction. For instance, one study (26) applied binomial boosting with logistic regression, achieving an AUC of 0.82, while another (27) demonstrated the efficacy of a genetic algorithm-based SVM (GASVM), reporting 94.73% accuracy and emphasizing the role of hybrid approaches.
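I-RELIEF (24) is an iterative, probabilistic refinement of the classic Relief feature-weighting idea. As a simplified sketch, assuming binary labels and features scaled to [0, 1], the code below implements the original deterministic Relief update rather than I-RELIEF itself: a feature gains weight when it separates a sample from its nearest neighbour of the other class (nearest miss) and loses weight when it differs from its nearest neighbour of the same class (nearest hit). The function name and data are illustrative.

```python
from typing import List


def relief(X: List[List[float]], y: List[int]) -> List[float]:
    """Basic Relief feature weights (binary labels, features scaled to [0, 1]).

    Every sample is used once as the reference instance (no random sampling).
    """
    n, d = len(X), len(X[0])
    w = [0.0] * d

    def dist(a: List[float], b: List[float]) -> float:
        return sum(abs(p - q) for p, q in zip(a, b))  # Manhattan distance

    for i in range(n):
        hits = [j for j in range(n) if j != i and y[j] == y[i]]
        misses = [j for j in range(n) if y[j] != y[i]]
        hit = min(hits, key=lambda j: dist(X[i], X[j]))
        miss = min(misses, key=lambda j: dist(X[i], X[j]))
        for f in range(d):
            # Reward separation from the other class, penalize spread within the class
            w[f] += (abs(X[i][f] - X[miss][f]) - abs(X[i][f] - X[hit][f])) / n
    return w


# Toy data: feature 0 tracks the label, feature 1 is noise
X = [[0.0, 0.3], [0.1, 0.9], [0.9, 0.2], [1.0, 0.8]]
y = [0, 0, 1, 1]
print(relief(X, y))
```

On this toy data, the first feature separates the classes and receives a positive weight, while the noisy second feature receives a negative one. I-RELIEF replaces the single nearest hit and miss with probability-weighted averages over all hits and misses, recomputed iteratively using the current feature weights.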

Moreover, advanced multimodal and ensemble learning techniques have gained prominence, especially for integrating multiple data types to improve survival prediction. For example, a multi-omics approach using stacked ensemble learning (28) used deep neural networks (DNN), gradient boosting machines (GBM), and distributed random forests (DRF) as base models and achieved high AUC scores across diverse datasets, showing the value of combining different base classifiers for robust prediction. Similarly, another study (29) demonstrated the effectiveness of a multimodal ML framework incorporating SVM and random forest (RF) classifiers, combined with dimensionality reduction techniques such as principal component analysis (PCA) and log-cosh variational autoencoders (VAEs). This method consistently exceeded 90% across multiple evaluation metrics, underscoring the benefits of pairing ML classifiers with advanced feature reduction methods to optimize breast cancer survival prediction.
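The stacked ensembles described above (28) train a meta-learner on the predictions of several base models. The sketch below shows the general stacking recipe in plain Python under simplifying assumptions: two toy base learners (nearest centroid and k-nearest neighbours) stand in for the DNN, GBM, and DRF base models, a logistic regression acts as the meta-learner, and all function names are hypothetical. The key step is that the meta-learner is fit on out-of-fold predictions, so it never sees scores that a base model produced for its own training samples.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]
# Hypothetical model interface: (X_train, y_train, X_eval) -> P(y = 1) for each row of X_eval
Model = Callable[[Matrix, List[int], Matrix], List[float]]


def centroid_model(X_tr: Matrix, y_tr: List[int], X_ev: Matrix) -> List[float]:
    """Toy base learner: relative distance to the class-0 vs class-1 centroids."""
    def centroid(label: int) -> List[float]:
        rows = [x for x, t in zip(X_tr, y_tr) if t == label]
        return [sum(col) / len(rows) for col in zip(*rows)]
    c0, c1 = centroid(0), centroid(1)
    scores = []
    for x in X_ev:
        d0, d1 = math.dist(x, c0), math.dist(x, c1)
        scores.append(d0 / (d0 + d1 + 1e-12))
    return scores


def knn_model(X_tr: Matrix, y_tr: List[int], X_ev: Matrix, k: int = 3) -> List[float]:
    """Toy base learner: share of class-1 samples among the k nearest neighbours."""
    scores = []
    for x in X_ev:
        nearest = sorted(range(len(X_tr)), key=lambda i: math.dist(x, X_tr[i]))[:k]
        scores.append(sum(y_tr[i] for i in nearest) / k)
    return scores


def out_of_fold(model: Model, X: Matrix, y: List[int], folds: int = 3) -> List[float]:
    """Score every sample with a model that was trained without that sample."""
    preds = [0.0] * len(X)
    for f in range(folds):
        test = [i for i in range(len(X)) if i % folds == f]
        train = [i for i in range(len(X)) if i % folds != f]
        scores = model([X[i] for i in train], [y[i] for i in train], [X[i] for i in test])
        for i, s in zip(test, scores):
            preds[i] = s
    return preds


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def fit_meta(Z: Matrix, y: List[int], lr: float = 0.5, epochs: int = 2000):
    """Meta-learner: logistic regression on base-model scores (batch gradient descent)."""
    w, b = [0.0] * len(Z[0]), 0.0
    for _ in range(epochs):
        errors = [sigmoid(sum(wj * zj for wj, zj in zip(w, z)) + b) - t for z, t in zip(Z, y)]
        w = [wj - lr * sum(e * z[j] for e, z in zip(errors, Z)) / len(Z) for j, wj in enumerate(w)]
        b -= lr * sum(errors) / len(Z)
    return w, b


def stacked_predict(models: List[Model], X: Matrix, y: List[int], X_new: Matrix) -> List[float]:
    # Level 1: out-of-fold scores of each base model become the meta-features
    Z = [list(row) for row in zip(*(out_of_fold(m, X, y) for m in models))]
    w, b = fit_meta(Z, y)
    # Refit each base model on all training data, then let the meta-learner combine them
    Z_new = [list(row) for row in zip(*(m(X, y, X_new) for m in models))]
    return [sigmoid(sum(wj * zj for wj, zj in zip(w, z)) + b) for z in Z_new]


X = [[0, 0], [5, 5], [0, 1], [5, 6], [1, 0], [6, 5], [1, 1], [6, 6], [0.5, 0.5]]
y = [0, 1, 0, 1, 0, 1, 0, 1, 0]
print(stacked_predict([centroid_model, knn_model], X, y, [[0.2, 0.3], [5.5, 5.2]]))
```

Fitting the meta-learner on in-sample base-model predictions instead would reward base models that overfit, which is why out-of-fold predictions are the usual choice.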

These multimodal ML approaches to breast cancer survival prediction highlight the growing importance of integrating clinical, genomic, and imaging data for more accurate and robust predictive models. Tree-based models, BN, and logistic regression have proven effective in combining diverse data types, although small datasets and limited model flexibility often constrain their performance. In contrast, SVM-based hybrid models and multi-kernel learning approaches have demonstrated superior performance, particularly when using genetic algorithms, multi-omics data, and novel kernel functions. Models such as GPMKL and SVM utility kernels consistently outperform traditional models, achieving high AUC values and precision. Despite the success of hybrid models, challenges remain in handling data imbalance and expanding sample sizes. As the field advances, multimodal integration through more sophisticated algorithms such as SVMs and ensemble models holds promise for improving the precision of breast cancer survival prediction and, in turn, supporting personalized medicine.

Deep learning study review

Breast cancer is one of the world’s deadliest diseases and has garnered significant attention because of its high mortality rate (30). It arises from abnormal cell growth; these cells can accumulate, detach from surrounding tissue, and spread to other parts of the body through the blood vessels. Genes in the cell nucleus regulate cell behavior, including cell motility, and genomic abnormalities can switch these genes on or off, potentially leading to breast cancer.

In 2022, there were 2.3 million new breast cancer cases among women worldwide and 670,000 deaths. According to the World Health Organization Report 2024, breast cancer is the most frequently detected cancer worldwide, with approximately 7.8 million women diagnosed within the past five years, making it the world’s most prevalent cancer. Breast cancer occurs in women of all ages after puberty, with incidence increasing later in life. Every 14 seconds, a woman somewhere in the world is diagnosed with breast cancer. According to the Breast Cancer Research Foundation (BCRF), breast cancer is the most common cancer in women and the most frequently diagnosed cancer in 140 of 184 countries. Breast cancer mortality changed little from the 1930s to the 1970s; improvements in survival began only in the 1980s, in countries that had established early detection programs combined with different treatment options for invasive disease.

Estimating the survival expectations of cancer patients is a central problem in prognosis prediction. Cancer survival prediction is a survival analysis problem with censored data: it estimates whether, or when, an event (e.g., death) occurs within a given period. In cancer prognosis, long-term and short-term survival rates are two commonly used indicators; for breast cancer, the 5-year index is frequently used. Patients who survive for more than five years are considered long-term survivors, while those who survive for less than five years are classified as short-term survivors (31). Cancer survival analysis is commonly framed either as binary classification or as risk regression. In a binary classification task, patients are divided into short-survival and long-survival groups based on a predetermined threshold (e.g., five years). Risk regression studies commonly apply Cox proportional hazards models and their extensions to generate risk scores for patients; for example, a prior study (32) used such models to estimate patient risk scores effectively.
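The two framings above can be made concrete with a short sketch. The first function assigns the binary 5-year label, assuming follow-up time is recorded in months; patients censored before five years cannot be placed in either class and are returned as None (such cases are usually excluded from the binary task). The second computes the negative log partial likelihood that Cox-based risk regression minimizes, assuming no tied event times. Both functions are illustrative and not taken from the cited studies.

```python
import math
from typing import List, Optional

FIVE_YEARS = 60  # threshold in months (assumed unit)


def five_year_label(time_months: float, event: int) -> Optional[int]:
    """1 = long-term survivor, 0 = short-term survivor, None = undetermined."""
    if time_months > FIVE_YEARS:
        return 1
    # Died within five years -> short-term; censored within five years -> unknown
    return 0 if event == 1 else None


def cox_neg_log_partial_likelihood(risk: List[float], time: List[float], event: List[int]) -> float:
    """-sum over observed events i of [r_i - log(sum_{j: t_j >= t_i} exp(r_j))].

    risk[i] is the model's linear predictor (log hazard ratio) for patient i.
    Assumes no tied event times (no Breslow/Efron correction).
    """
    total = 0.0
    for i in range(len(time)):
        if event[i] == 1:
            # Risk set: every patient still under observation at time[i]
            at_risk = [risk[j] for j in range(len(time)) if time[j] >= time[i]]
            total -= risk[i] - math.log(sum(math.exp(r) for r in at_risk))
    return total


print(five_year_label(72, 1), five_year_label(30, 1), five_year_label(30, 0))
print(cox_neg_log_partial_likelihood([0.5, 0.1, -0.2], [12, 40, 80], [1, 1, 0]))
```

A deep survival model typically uses the second quantity as its training loss, with `risk` produced by the network's final layer.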

Multimodal datasets

This section presents the multimodal datasets used in the surveyed work. The aim is to provide details on each dataset, the preprocessing techniques applied, and the number of features used in each study.

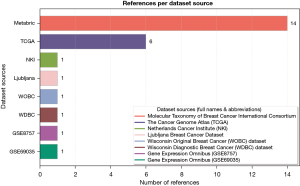

The datasets used in the surveyed studies span diverse data types, including genomics, imaging, clinical records, and other molecular profiling data. Genomic data, imaging modalities, and clinical datasets all play a crucial role in understanding breast cancer prognosis. A study (33) highlights how DNA sequencing can identify gene mutations associated with survival and subtypes. Imaging techniques provide insights into tumor size, shape, and distribution, while clinical datasets contain essential patient-related parameters, including age, gender, hormone receptor status, tumor grade, and treatment history. Integrating these data types into deep learning models can enhance prediction accuracy and support personalized strategies for effective breast cancer management. The survey also identifies the key data sources used in multimodal breast cancer prognosis research. Figure 1 illustrates the distribution of these datasets across the surveyed studies.

Figure 1 shows that METABRIC is the most frequently used dataset in breast cancer prognosis prediction studies, with 14 references, followed by TCGA with six; each remaining data source appears only once in the surveyed literature. Researchers have used three datasets derived from METABRIC (clinical profiles, copy number alterations (CNA), and gene expression) containing 25, 200, and 400 features, respectively, after preprocessing. The original dataset comprised 27 clinical features, 26,298 CNA features, and 24,368 gene expression features. However, one study (34) used all the original features with a graph neural network (GNN).

From the TCGA/TCGA-BRCA data sources, researchers have used clinical, gene expression, methylation, protein, pathological, and whole-slide image data. One study (35) used gene expression data and whole-slide images from 345 records, with 32 selected features per modality after preprocessing (31). Another study (36) incorporated the largest number of TCGA datasets in this domain, evaluating its model on five datasets (gene expression, CNA, methylation, protein, and pathological images) with 578 samples and 350 features extracted from each dataset. In contrast, a different study (37) focused on gene expression, CNA, and whole-slide images, selecting 80, 80, and 2,343 features, respectively. Meanwhile, study (38) used only two datasets, genomic data and whole-slide images, with 32 selected features each, to assess model performance.

Although study (39) is among the earlier works in this domain, it used multiple data sources to address the small-data challenge that still affects more recent research. Specifically, the proposed model incorporated METABRIC, the Netherlands Cancer Institute (NKI) dataset, Ljubljana, the Wisconsin Original Breast Cancer (WOBC) dataset, and the Wisconsin Diagnostic Breast Cancer (WDBC) dataset. Among these, METABRIC and NKI were used to evaluate both clinical and gene expression data, with the clinical profiles containing 23 and 19 selected features, respectively, and the gene expression datasets containing 24,496 features. Similarly, another study (31) evaluated its model on multiple data sources, including METABRIC, TCGA, GSE8757, and GSE69035, providing comprehensive comparative results.

Preprocessing techniques

Preprocessing is crucial for training deep learning models, improving their performance, accuracy, and generalization to new data. All reviewed studies used preprocessed data. The main preprocessing techniques used in the literature are summarized below.

Data cleaning

Data cleaning is a crucial step in preparing gene expression and CNA data for deep learning tasks. It involves handling missing values, deleting and filtering data, normalization, and discretization, which together ensure that the data are accurate, complete, and suitable for model training and analysis. Techniques such as K-nearest neighbor (KNN) and weighted KNN imputation fill in missing values, while deletion and filtering remove irrelevant or noisy data points. Normalization scales features to a uniform range, and discretization converts continuous variables into categorical ones; both can improve model performance.
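A minimal sketch of these cleaning steps in plain Python, assuming a small numeric matrix in which None marks a missing value: unweighted KNN imputation (weighted KNN would instead weight each neighbour, for example, by its inverse distance), min-max normalization, and equal-width discretization. The function names and the distance used between partially observed rows are illustrative assumptions.

```python
import math
from typing import List, Optional

Row = List[Optional[float]]  # None marks a missing value


def knn_impute(rows: List[Row], k: int = 2) -> List[List[float]]:
    """Fill each missing value with the mean of that feature over the k nearest rows.

    Distance uses only features observed in both rows; assumes every feature is
    observed in at least one other row.
    """
    def dist(a: Row, b: Row) -> float:
        shared = [(x - y) ** 2 for x, y in zip(a, b) if x is not None and y is not None]
        return math.sqrt(sum(shared) / len(shared)) if shared else math.inf

    out = []
    for i, row in enumerate(rows):
        filled = list(row)
        for f, v in enumerate(row):
            if v is None:
                donors = [r for j, r in enumerate(rows) if j != i and r[f] is not None]
                nearest = sorted(donors, key=lambda r: dist(row, r))[:k]
                filled[f] = sum(r[f] for r in nearest) / len(nearest)
        out.append(filled)
    return out


def min_max(col: List[float]) -> List[float]:
    """Rescale a feature column to [0, 1]."""
    lo, hi = min(col), max(col)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in col]


def discretize(col: List[float], bins: int = 3) -> List[int]:
    """Equal-width binning of values already scaled to [0, 1]."""
    return [min(int(v * bins), bins - 1) for v in col]


rows = [[1.0, 2.0], [1.1, None], [5.0, 9.0], [0.9, 2.2]]
print(knn_impute(rows))
print(discretize(min_max([2.0, 4.0, 6.0])))
```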

Data reduction

Various techniques, such as isometric feature mapping (Isomap), minimum redundancy maximum relevance (mRMR), FSelector, the artificial bee colony (ABC) algorithm, randomForestSRC, and CellProfiler, are used to reduce dimensionality or select the most relevant features. Isometric feature mapping reduces data dimensionality while preserving essential information. mRMR selects features that are highly relevant to the target variable while minimally redundant with one another, improving model performance. FSelector identifies the features most important for accurate predictions. The ABC algorithm helps identify discriminative features. RandomForestSRC, based on random forests, ranks features by their predictive power. CellProfiler, a software tool for image analysis in biological research, is also used to obtain image features in multimodal breast cancer datasets.
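The mRMR criterion can be sketched as a greedy loop that, at each step, picks the feature with the highest relevance to the target minus its average redundancy with the features already selected. The original mRMR formulation measures both terms with mutual information; for brevity, this plain-Python sketch substitutes absolute Pearson correlation, so it approximates the method rather than implementing it faithfully. Function names and data are illustrative.

```python
import math
from typing import List


def abs_pearson(a: List[float], b: List[float]) -> float:
    """Absolute Pearson correlation (0 if either vector is constant)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else abs(cov / (sa * sb))


def mrmr(features: List[List[float]], target: List[float], k: int) -> List[int]:
    """Greedy mRMR (difference form) returning indices of k selected features."""
    selected: List[int] = []
    remaining = list(range(len(features)))
    while remaining and len(selected) < k:
        def score(f: int) -> float:
            relevance = abs_pearson(features[f], target)
            if not selected:
                return relevance
            redundancy = sum(abs_pearson(features[f], features[s]) for s in selected) / len(selected)
            return relevance - redundancy
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


a = [1.0, 1.0, -1.0, -1.0]
b = [0.5, -0.5, 0.5, -0.5]
target = [x + y for x, y in zip(a, b)]
print(mrmr([a, [2 * x for x in a], b], target, k=2))
```

Here the second feature is an exact rescaled copy of the first, so after the first pick its redundancy penalty outweighs its relevance and the uncorrelated third feature is chosen instead.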

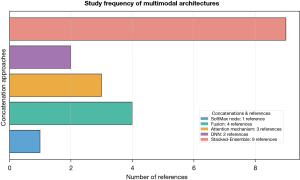

Concatenation approaches

This section analyzes the concatenation approaches used in multimodal deep learning architectures for breast cancer prognosis prediction, organized into SoftMax node, fusion, attention mechanism, DNN, and stacked-ensemble approaches. For each surveyed work, we describe the concatenation approach, the multimodal architecture, the results, and future research gaps. Figure 2 shows the distribution of concatenation approaches across the surveyed works.

The DNN approach

This section includes three studies whose proposed architectures use a DNN to concatenate the outputs of individual modalities. The first, MAFN (31), used a shallow self-attention network to embed features from the various modalities, integrate attention weights, and apply sigmoid normalization. It generated weighted multimodal features, constructed a patient-gene bipartite graph, and extracted modality-specific features from gene expression, CNA, and clinical data, which were then passed to multiple fully connected DNN layers for survival prediction. The GPDBN architecture (35) consists of two types of modules: intra-BFEMs, which extract bilinear features within the genomic and pathological image modalities, and an inter-BFEM, which models the relationships between these modalities. The resulting bilinear features are combined and fed into a multi-layer neural network that uses dropout layers to prevent overfitting, and a SoftMax layer produces predictive scores for the short-term and long-term survival classes, leveraging complementary intra- and inter-modality information. A stacked ensemble approach, BSense (36), was developed to address limitations of existing methods such as high dimensionality, heterogeneity, and dataset imbalance, while also reducing noise and irrelevant features. BSense uses DNNs, gradient boosting machines, and DRFs as base learners, with the artificial bee colony algorithm selecting features from the genomic datasets and parallel Bayesian optimization tuning hyperparameters. A DNN meta-learner combines the base learners’ predictions into the final ensemble prediction.
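GPDBN (35) is built around bilinear interactions within and between modalities. As a rough sketch, assuming that a bilinear feature is the flattened outer product of two embedding vectors (a common way to model pairwise feature interactions, not necessarily GPDBN’s exact formulation), the NumPy code below forms intra-genomic, intra-image, and genomic-image bilinear features, concatenates them, and passes them through an untrained two-layer network with a softmax over short-term and long-term survival. Embedding sizes, weights, and function names are hypothetical.

```python
import numpy as np


def bilinear(u: np.ndarray, v: np.ndarray) -> np.ndarray:
    """All pairwise products u_i * v_j, flattened (outer product)."""
    return np.outer(u, v).ravel()


def bilinear_fusion(gene: np.ndarray, image: np.ndarray) -> np.ndarray:
    intra_gene = bilinear(gene, gene)     # interactions within the genomic embedding
    intra_image = bilinear(image, image)  # interactions within the image embedding
    inter = bilinear(gene, image)         # interactions across the two modalities
    return np.concatenate([intra_gene, intra_image, inter])


def predict(fused: np.ndarray, w1: np.ndarray, w2: np.ndarray) -> np.ndarray:
    """Two-layer MLP with ReLU and a softmax over {short-term, long-term} survival."""
    hidden = np.maximum(0.0, fused @ w1)
    logits = hidden @ w2
    exp = np.exp(logits - logits.max())
    return exp / exp.sum()


rng = np.random.default_rng(0)
gene = rng.normal(size=8)    # hypothetical 8-d genomic embedding
image = rng.normal(size=8)   # hypothetical 8-d pathology embedding
fused = bilinear_fusion(gene, image)  # 3 * 8 * 8 = 192 values
probs = predict(fused, rng.normal(scale=0.1, size=(fused.size, 16)),
                rng.normal(scale=0.1, size=(16, 2)))
print(fused.shape, probs)
```

The outer product grows quadratically with embedding size (two 8-dimensional inputs already give 64 interaction terms), so bilinear models typically operate on compact embeddings.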

Result analysis

As shown in Table 2, MAFN achieved an AUC of 0.938, accuracy of 0.890, precision of 0.905, F1-score of 0.924, and recall of 0.943 on the METABRIC dataset. On the TCGA-BRCA dataset, MAFN obtained an AUC of 0.932, accuracy of 0.915, precision of 0.921, F1-score of 0.948, and recall of 0.976 (31). Experiments on four breast cancer datasets showed that multimodal data significantly improved performance over single modalities, and MAFN outperformed existing deep learning methods and traditional ML algorithms in terms of AUC, accuracy, precision, and recall, demonstrating its effectiveness and robustness for multimodal survival prediction. Study (35) found that the intra-BFEMs outperformed models using only genomic or only image data, demonstrating their ability to capture intra-modality relationships. In addition, combining modalities in BaselineGP yielded better performance than either single modality, highlighting the complementarity of the two.

Table 2

| References | Acc | Pre | Sn/Recall | MCC | AUC | F1-score | C-index |
|---|---|---|---|---|---|---|---|
| (31) | 0.890 | 0.905 | 0.943 | – | 0.938 | 0.924 | – |
| (35) | – | – | – | – | 0.817 | – | 0.723 |
| (36) | 0.842 | 0.889 | 0.802 | 0.688 | 0.911 | – | – |

Acc, accuracy; AUC, area under the curve; C-index, concordance index; MCC, Matthews correlation coefficient; Pre, precision; Sn, sensitivity.

GPDBN achieved the best performance among the models compared in its study, with a C-index of 0.723. It also outperformed existing approaches, with a 2.0% improvement over MDNNMD, and was further evaluated using Kaplan-Meier curves. By extracting more discriminative representations than raw features or intra-BFEMs alone, GPDBN demonstrated its effectiveness. The BSense model (36) showed consistent and statistically significant improvements in predictive performance across multiple breast cancer datasets. On the TCGA-BRCA dataset, it achieved 83.9% AUC, 85.2% accuracy, and 92% sensitivity, outperforming the best individual model. On the METABRIC dataset, it achieved 87.3% AUC, 84.5% accuracy, and 88.4% sensitivity, and on the metabolomics dataset, 91.1% AUC, 84.2% accuracy, and 80.1% sensitivity, supporting the effectiveness of the multimodal stacked ensemble approach.
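Tables 2 and 3 report the Matthews correlation coefficient (MCC) and the concordance index (C-index). A plain-Python sketch of both is shown below, assuming binary confusion-matrix counts for MCC and Harrell’s definition of the C-index (a pair is comparable when the patient with the shorter follow-up had an observed event, and tied risk scores count as half-concordant). It is illustrative and not the evaluation code of the surveyed studies.

```python
import math
from typing import List


def mcc(tp: int, tn: int, fp: int, fn: int) -> float:
    """Matthews correlation coefficient from binary confusion-matrix counts."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom


def harrell_c_index(time: List[float], event: List[int], risk: List[float]) -> float:
    """Share of comparable pairs whose predicted risks are correctly ordered.

    Pair (i, j) is comparable if time[i] < time[j] and patient i had the event;
    it is concordant if the model assigns patient i the higher risk.
    """
    concordant, comparable = 0.0, 0
    for i in range(len(time)):
        if not event[i]:
            continue  # a censored patient cannot anchor a comparable pair
        for j in range(len(time)):
            if time[i] < time[j]:
                comparable += 1
                if risk[i] > risk[j]:
                    concordant += 1.0
                elif risk[i] == risk[j]:
                    concordant += 0.5
    if comparable == 0:
        raise ValueError("no comparable pairs")
    return concordant / comparable


print(harrell_c_index([1, 2, 3, 4], [1, 1, 0, 1], [0.9, 0.4, 0.6, 0.1]))
print(mcc(tp=40, tn=45, fp=5, fn=10))
```

A C-index of 0.5 corresponds to random ordering and 1.0 to perfect ordering, which gives context for the values of 0.723 and 0.742 reported for GPDBN and study (38).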

In conclusion, these studies have demonstrated the effectiveness of various models in predicting survival outcomes in breast cancer patients. However, they also highlight the need for further improvement, particularly in incorporating temporal relationships and modeling dynamic disease progression (31), and in improving the interpretability of deep learning-based predictions (35). Additionally, study (36) introduced approaches to address these challenges, including feature selection techniques to mitigate high dimensionality (especially in image data) and hyperparameter optimization to achieve optimal model performance.

SoftMax node approach

This section includes only one study (39), which uses SoftMax nodes. In this study, a probabilistic graphical model (PGM) is used to extract structure from clinical data, while manifold learning techniques reduce the dimensionality of high-dimensional microarray data. A deep belief network (DBN) is then trained on the reduced data to extract hierarchical representations. This yields two separate models: the PGM for clinical data and the DBN for microarray data. The models are integrated by representing their output probabilities as continuous nodes in another PGM, in which SoftMax nodes combine the probabilities from both models. Mixing the two models’ probabilities in this way enables informed predictions across data modalities.
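A SoftMax node is a discrete node with continuous parents whose conditional distribution is P(Y = k | x) = exp(w_k·x + b_k) / Σ_j exp(w_j·x + b_j). The sketch below assumes the continuous parents are the two models’ probabilities of a good prognosis (one from the clinical PGM and one from the genomic DBN); the weights and input probabilities are made-up placeholders that, in practice, would be learned or produced by the models.

```python
import math
from typing import List, Sequence


def softmax_node(parents: Sequence[float], weights: List[List[float]], biases: List[float]) -> List[float]:
    """P(Y = k | x) = exp(w_k . x + b_k) / sum_j exp(w_j . x + b_j)."""
    scores = [sum(w * x for w, x in zip(wk, parents)) + bk for wk, bk in zip(weights, biases)]
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Continuous parents: P(good prognosis) from the clinical PGM and from the genomic DBN (toy values)
p_clinical, p_genomic = 0.7, 0.9
# Hypothetical weights for the classes [poor, good]
W = [[-2.0, -3.0], [2.0, 3.0]]
b = [0.0, 0.0]
print(softmax_node([p_clinical, p_genomic], W, b))
```

With these placeholder weights, the genomic model’s output influences the combined prediction more strongly than the clinical one; learning the weights lets the network decide how much to trust each modality.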

Result analysis

The proposed BN + DBN method outperformed baseline SVM and KNN methods in predicting regional recurrence, local recurrence, distant metastasis, and death on the NKI dataset. On the METABRIC dataset, it also outperformed the baselines, achieving 92% accuracy for 2-year survival prediction, 89% for 3-year, 85% for 4-year, and 82% for 5-year survival. The BN alone achieved 74% accuracy on the Ljubljana dataset, 97% on the WOBC dataset, and 97% on the WDBC dataset. The method supports decision-making under uncertainty with noisy data, integrates discrete clinical and continuous genomic features, and addresses the high dimensionality of microarray data.

Fusion approach

This section includes four studies (34,38,40,41) that employed fusion concatenation approaches to combine multimodal architectures.

The architecture proposed in (38) comprises two intra-modality self-attention (intra-SA) modules, one inter-modality cross-attention (inter-CA) module, an adaptive fusion block (AFB), and a prediction block. Each intra-SA module generates query, key, and value features, calculates an intra-modality channel affinity matrix, and extracts features from the genomic or pathological image modality. The inter-CA module takes these features and computes two inter-modality channel affinity matrices. The AFB then integrates the intra-modality features and concatenates them with the inter-modality features, and the combined representation is fed into a DNN in the prediction block.
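The channel affinity computations described above can be sketched in NumPy. This is a simplified illustration under several assumptions not stated in the source: both modalities have already been projected to feature maps of the same shape (L positions by C channels), the affinity is a scaled dot product between query and key channels followed by a row-wise softmax, one set of projection weights is shared across modules for brevity, and the adaptive fusion is reduced to a fixed weight `alpha` that a real AFB would learn.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def channel_attention(x_q, x_kv, wq, wk, wv):
    """Channel-wise attention between two (L, C) feature maps.

    Returns the attended features (L, C) and the (C, C) channel affinity matrix.
    Self-attention when x_q is x_kv; cross-attention otherwise.
    """
    q, k, v = x_q @ wq, x_kv @ wk, x_kv @ wv
    affinity = softmax(q.T @ k / np.sqrt(x_q.shape[0]), axis=-1)  # (C, C), rows sum to 1
    return v @ affinity.T, affinity  # output channel c mixes value channels by affinity[c]


rng = np.random.default_rng(0)
L, C = 4, 8
gene = rng.normal(size=(L, C))  # hypothetical genomic feature map
path = rng.normal(size=(L, C))  # hypothetical pathology feature map
wq, wk, wv = (rng.normal(scale=0.1, size=(C, C)) for _ in range(3))

intra_g, _ = channel_attention(gene, gene, wq, wk, wv)       # intra-SA, genomic
intra_p, _ = channel_attention(path, path, wq, wk, wv)       # intra-SA, pathology
cross_gp, A_gp = channel_attention(gene, path, wq, wk, wv)   # inter-CA, genomic -> pathology
cross_pg, A_pg = channel_attention(path, gene, wq, wk, wv)   # inter-CA, pathology -> genomic

alpha = 0.5  # stand-in for a learned adaptive fusion weight
fused = np.concatenate([alpha * intra_g + (1 - alpha) * intra_p, cross_gp, cross_pg], axis=-1)
print(fused.shape)
```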

Study (40) introduced MDNNMD, a score-level fusion approach that uses three independent DNNs, one for each data modality: gene expression, CNA profiles, and clinical data. Each DNN consists of an input layer, four hidden layers with 1,000, 500, 500, and 100 units, respectively, and an output layer. Batch normalization is applied to accelerate training, and dropout is used to prevent overfitting. The outputs are combined through score-level fusion to generate the final prediction: the weights for the gene expression, CNA, and clinical DNNs are optimized on the validation set, and test-set predictions are made by forward-passing each modality’s data through its DNN and combining the weighted scores.
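Score-level fusion of this kind can be sketched as a weighted sum of the three DNNs’ output probabilities, with the weights chosen on the validation set. The plain-Python sketch below assumes non-negative weights that sum to 1, a 0.1 grid, and validation accuracy as the selection criterion; the search procedure, criterion, function names, and toy scores are assumptions for illustration, not details reported for MDNNMD.

```python
from itertools import product
from typing import List, Sequence, Tuple


def fuse(scores: List[List[float]], weights: Sequence[float]) -> List[float]:
    """Weighted sum of per-modality probabilities for each patient."""
    return [sum(w * s[i] for w, s in zip(weights, scores)) for i in range(len(scores[0]))]


def accuracy(probs: List[float], labels: List[int], threshold: float = 0.5) -> float:
    return sum((p >= threshold) == bool(y) for p, y in zip(probs, labels)) / len(labels)


def search_weights(val_scores: List[List[float]], val_labels: List[int],
                   step: float = 0.1) -> Tuple[Tuple[float, float, float], float]:
    """Grid-search non-negative weights summing to 1 that maximize validation accuracy."""
    grid = [round(i * step, 10) for i in range(int(round(1 / step)) + 1)]
    best_w, best_acc = (1 / 3, 1 / 3, 1 / 3), -1.0
    for a, b in product(grid, repeat=2):
        c = round(1.0 - a - b, 10)  # rounding removes floating-point residue
        if c < 0:
            continue
        acc = accuracy(fuse(val_scores, (a, b, c)), val_labels)
        if acc > best_acc:
            best_w, best_acc = (a, b, c), acc
    return best_w, best_acc


val_scores = [
    [0.9, 0.8, 0.2, 0.1],  # gene expression DNN
    [0.5, 0.5, 0.5, 0.5],  # CNA DNN (uninformative in this toy example)
    [0.1, 0.2, 0.8, 0.9],  # clinical DNN (misleading in this toy example)
]
val_labels = [1, 1, 0, 0]
weights, acc = search_weights(val_scores, val_labels)
print(weights, acc)
```

Once selected, the same weights would be applied unchanged to the test-set scores with `fuse`.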

The multimodal fusion neural network (MFNN) (34) consists of four main components. The first is the multimodal input data: gene expression profiles, CNA profiles, and clinical data. The second constructs bipartite graphs between patients and the gene expression and CNA data, linking each patient to its abnormally expressed genes for gene expression data and directly to genes for CNA data. The third applies GNNs to the patient-gene and patient-CNA bipartite graphs, aggregating neighbor information to obtain an embedding of each patient from each modality. Finally, the multimodal fusion layer concatenates the patient embeddings from each modality with the clinical data embeddings to form the final multimodal embedding, which is passed through multiple fully connected layers for survival prediction.
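The neighbor aggregation and fusion steps can be sketched in NumPy as one round of mean aggregation over a patient-gene bipartite graph, followed by concatenation with a clinical embedding. The adjacency matrices, feature sizes, function name, and single-layer design are hypothetical; MFNN’s actual GNN layers may differ.

```python
import numpy as np


def patient_embeddings(adj: np.ndarray, gene_emb: np.ndarray, w: np.ndarray) -> np.ndarray:
    """One round of mean-neighbor aggregation on a patient-gene bipartite graph.

    adj:      (n_patients, n_genes) 0/1 matrix; 1 links a patient to a gene
    gene_emb: (n_genes, d) gene node features
    w:        (d, h) weight matrix
    """
    degree = adj.sum(axis=1, keepdims=True)
    mean_neigh = (adj @ gene_emb) / np.maximum(degree, 1)  # avoid division by zero
    return np.maximum(0.0, mean_neigh @ w)                  # ReLU


rng = np.random.default_rng(1)
n_genes, d, h = 5, 4, 2
adj_expr = np.array([[1, 0, 1, 0, 0], [0, 1, 0, 0, 1], [1, 1, 1, 1, 1]], dtype=float)
adj_cna = np.array([[0, 0, 0, 1, 0], [1, 0, 0, 0, 0], [0, 0, 1, 0, 0]], dtype=float)
gene_feat = rng.normal(size=(n_genes, d))
clinical = rng.normal(size=(3, 6))  # 3 patients, 6 clinical variables

emb_expr = patient_embeddings(adj_expr, gene_feat, rng.normal(size=(d, h)))
emb_cna = patient_embeddings(adj_cna, gene_feat, rng.normal(size=(d, h)))
emb_clin = np.maximum(0.0, clinical @ rng.normal(size=(6, h)))
multimodal = np.concatenate([emb_expr, emb_cna, emb_clin], axis=1)  # input to the dense layers
print(multimodal.shape)
```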

A gated multimodal unit (GMU) was used in (41) as a fusion layer to integrate information from multiple modalities. Before feature extraction, SMOTE-NC oversampling was applied to address class imbalance. A two-layer 1D CNN then extracted features from the clinical data, while a bidirectional long short-term memory (BiLSTM) network extracted features from the concatenated CNA and gene expression data. The GMU fused these features by dynamically adjusting the weight of each modality, and a MaxoutMLP classification layer predicted patient survival, trained with cross-entropy loss and L2 regularization.
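In its common bimodal form, a GMU projects each modality with a tanh layer and mixes the two projections with a sigmoid gate computed from both inputs: h = z·h_a + (1 − z)·h_b. The NumPy sketch below assumes `x_a` holds the clinical features from the 1D CNN and `x_b` the genomic features from the BiLSTM; the weights are random placeholders rather than trained parameters, and the function name is hypothetical.

```python
import numpy as np


def gmu(x_a: np.ndarray, x_b: np.ndarray, w_a: np.ndarray, w_b: np.ndarray, w_z: np.ndarray):
    """Bimodal gated multimodal unit.

    x_a: (n, da) features of modality A; x_b: (n, db) features of modality B.
    Returns the fused (n, h) representation and the (n, h) gate values.
    """
    h_a = np.tanh(x_a @ w_a)
    h_b = np.tanh(x_b @ w_b)
    both = np.concatenate([x_a, x_b], axis=1)
    z = 1.0 / (1.0 + np.exp(-(both @ w_z)))  # gate in (0, 1), one value per hidden unit
    return z * h_a + (1.0 - z) * h_b, z


rng = np.random.default_rng(0)
x_clin, x_gen = rng.normal(size=(4, 3)), rng.normal(size=(4, 5))  # 4 patients
w_a, w_b, w_z = rng.normal(size=(3, 2)), rng.normal(size=(5, 2)), rng.normal(size=(8, 2))
fused, gate = gmu(x_clin, x_gen, w_a, w_b, w_z)
print(fused.shape, gate.round(2))
```

Because the gate is computed per patient, the unit can rely more on clinical features for some patients and more on genomic features for others; the fused output would then feed the MaxoutMLP classifier.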

Result analysis

All the studies surveyed in this section outperformed their respective benchmarks. Table 3 presents the performance of each study that uses the fusion concatenation approach. Notably, study (34) achieved the highest performance among these works. Various validation techniques were used to assess the robustness of the proposed methods.

Table 3

| References | Acc | Pre | Sn | MCC | AUC | F1-score | C-index |
|---|---|---|---|---|---|---|---|
| (38) | – | – | – | – | 0.841 | – | 0.742 |
| (40) | 0.826 | 0.749 | 0.450 | 0.486 | 0.845 | – | – |
| (34)† | 0.954 | 0.964 | 0.976 | 0.875 | 0.983 | – | – |
| (41) | 0.937 | 0.891 | 0.845 | – | 0.964 | 0.845 | – |

†, highest performance. Acc, accuracy; AUC, area under the curve; C-index, concordance index; MCC, Matthews correlation coefficient; Pre, precision; Sn, sensitivity.

All studies compared their models with existing state-of-the-art approaches and conducted validation tests. For instance, one study (38) performed an ablation test, using feature visualization with the t-SNE algorithm to analyze the abstract features learned from genomic data and pathological images. The results showed that the fused features separated long-term from short-term survivors, supporting accurate prognosis prediction. Similarly, the authors of (40) evaluated their model on an independent TCGA breast cancer dataset, showing that MDNNMD consistently outperformed both single-modality models and existing methods. In addition, one study (34) tested its model on five independent datasets covering breast cancer, metastatic colorectal cancer, pediatric acute lymphoid leukemia, and pan-lung cancer. The results emphasized the importance of fusing clinical, copy number, and gene expression data to improve predictions.

In summary, these studies highlight the novelty of their multimodal architectures, which extract complementary features through modality-specific models, and of their fusion approaches, which improve predictive performance despite the limitations imposed by small sample sizes and high dimensionality.

Attention-mechanism approach

Three studies (37,42,43) proposed architectures that use attention mechanisms to combine the outputs of individual modalities.

One study (37) mapped pathological image, CNA, and gene expression features into a shared feature space. These inputs were processed by a modality-specific attentional factorized bilinear module (MAFB) to generate modality-specific representations, which were then passed through a cross-modality attentional factorized bilinear module (CAFB) to create cross-modality representations. The resulting representations were concatenated into a final multimodal representation and passed through prediction modules for survival prediction. Both MAFB and CAFB use factorized bilinear models to reduce the number of parameters.

Similarly, another study (42) used five nonnegative matrix factorization (NMF) algorithms to extract 200-dimensional feature matrices from pre-processed gene expression data. Weights for these features were computed by an attention mechanism and normalized with SoftMax, and the result was combined with a 25-dimensional clinical feature vector to form a 225-dimensional multimodal representation per sample. These features were then fed into a DNN for breast cancer prognosis classification.

Furthermore, a multimodal classification architecture was proposed in (43) in which each modality (CNA and gene expression) is passed through its own CNN. The CNNs use kernel sizes of 3 and 2, Glorot normal weight initialization, a stride of 1, and ReLU activations. Probability distribution scores are calculated to obtain modality-specific attention features, and bi-modal attention is formed between the CNA-clinical and gene-clinical pairs. An attention layer produces attention-weighted matrices over the feature maps, and cross-modality matrices are computed using SoftMax for the final prediction.
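The attention-weighted fusion described for (42) can be sketched in NumPy using the dimensions given in the text (five 200-dimensional NMF views plus 25 clinical features, giving 225 features per sample). The scoring function, a dot product of each view with a single vector, is a hypothetical stand-in for the learned attention scorer, and the data are random.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable SoftMax."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def attention_fuse_nmf(nmf_feats, clinical, score_vector):
    """nmf_feats: (n_samples, n_nmf, 200); clinical: (n_samples, 25).

    Each NMF view gets a scalar score (dot product with a hypothetical learned
    vector), scores are SoftMax-normalized across views, and the weighted sum of
    the views is concatenated with the clinical vector.
    """
    scores = nmf_feats @ score_vector                        # (n_samples, n_nmf)
    weights = softmax(scores, axis=1)                        # sum to 1 per sample
    genomic = np.einsum("sk,skd->sd", weights, nmf_feats)    # (n_samples, 200)
    return np.concatenate([genomic, clinical], axis=1), weights

rng = np.random.default_rng(0)
n_samples = 6
nmf_feats = rng.random((n_samples, 5, 200))  # outputs of five NMF variants (toy data)
clinical = rng.random((n_samples, 25))       # 25 clinical features (toy data)
score_vector = rng.normal(scale=0.05, size=200)
features, attn = attention_fuse_nmf(nmf_feats, clinical, score_vector)
print(features.shape)  # (6, 225)
```

The resulting 225-dimensional vectors would then be the input to the prognosis DNN.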

Result analysis

As Table 4 shows, HFBSurv (37) outperforms single-fusion baselines in C-index and AUC, with gains from both the low-level MAFB fusion and the hierarchical fusion with CAFB. It also outperforms other deep learning and traditional methods, including on ten additional TCGA cancer datasets, and its risk score was shown to be an independent prognostic factor.

Meanwhile, another study (42) conducted experiments on the METABRIC breast cancer dataset, whose 1,980 samples were used for training, validation, and testing. The attention-based multimodal neural decoder (AMND) outperformed single-NMF models by 1.1–2.7%, reaching the best AUC of 87.04%, and also achieved the highest accuracy (84.88%), precision (85.76%), F1-score (90.84%), and recall (97.23%) among them. Experiments with AMND variants showed that both the attention mechanism and multimodal fusion improved prognostic prediction by better representing the relationships between omics and clinical data. AMND also outperformed other methods such as SVM (80.13%), linear regression (LR) (76.39%), and RF (72.8%), and its AUC exceeded those of previous methods by 2.54 percentage points for MDNNMD (84.5%), 5.04 points for PGM (82%), and 2.54 points for BPIM (84.5%).

Another study (43) evaluated its multimodal DNN using receiver operating characteristic (ROC) curves. When individual modalities were used, the model performed best with clinical data (AUC 0.82), followed by gene expression (0.69) and CNA data (0.68). The model also showed high precision and recall with low false positive and false negative rates. Evaluation on an external dataset showed significant improvements in AUC, accuracy, precision, and sensitivity, and a comprehensive statistical analysis indicated that the model outperforms existing approaches.

Table 4

| References | Acc | Pre | Sn/Recall | MCC | AUC | F1-score | C-index |
|---|---|---|---|---|---|---|---|
| (37) | – | – | – | – | 0.806 | – | 0.766 |
| (42) | 0.849 | 0.858 | 0.972 | – | 0.870 | 0.908 | – |
| (43) | 0.912 | 0.841 | 0.798 | – | 0.950 | 0.845 | – |

Acc, accuracy; AUC, area under the curve; C-index, concordance index; MCC, Matthews correlation coefficient; Pre, precision; Sn, sensitivity.

Most studies in this section validated their models on independent test datasets. However, one study (42) did not report model validation. In contrast, another study (37) validated its model on ten independent datasets and showed superior performance across all of them. Similarly, a study (43) used the TCGA dataset and performed t-tests, which yielded statistically significant results.

Stacked-ensemble approach

This section covers the eight surveyed papers that implemented the stacked-ensemble concatenation approach, which dominates the multimodal deep learning breast cancer prognosis literature. One study (44) developed a multimodal architecture that uses a two-stage stacked ensemble model to extract features from multiple data sources, improving prediction performance by leveraging the strengths of individual classifiers. To address the limitations of previous research, a bi-phase stacked ensemble framework was then introduced (45): the first phase employs SiGaAtCNN models to extract hidden features, and the second uses an ensemble RF classifier to integrate the multimodal data into the final survival classification.
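The two-stage stacking pattern can be sketched without any deep learning library. In this hypothetical NumPy illustration, stage 1 trains one base model per modality and collects its out-of-fold predictions (so the meta-learner never sees scores from a model fitted on the same samples), and stage 2 trains a meta-classifier on the stacked scores. Plain logistic regression is swapped in for both the modality-specific networks and the RF meta-classifier used in the cited studies, and the data are synthetic.

```python
import numpy as np

def train_logreg(X, y, lr=0.1, epochs=500):
    """Gradient-descent logistic regression (stand-in for any base or meta model)."""
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w = np.zeros(Xb.shape[1])
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-Xb @ w))
        w -= lr * Xb.T @ (p - y) / len(y)
    return w

def predict_proba(w, X):
    Xb = np.hstack([X, np.ones((len(X), 1))])
    return 1.0 / (1.0 + np.exp(-Xb @ w))

def out_of_fold_scores(X, y, n_folds=5):
    """Stage 1: held-out predictions of a per-modality base model."""
    oof = np.zeros(len(y))
    all_idx = np.arange(len(y))
    for idx in np.array_split(all_idx, n_folds):
        train = np.setdiff1d(all_idx, idx)
        oof[idx] = predict_proba(train_logreg(X[train], y[train]), X[idx])
    return oof

rng = np.random.default_rng(0)
n = 200
y = rng.integers(0, 2, size=n).astype(float)
# Synthetic modalities, each carrying a noisy copy of the label
modalities = {
    "clinical": y[:, None] + rng.normal(scale=1.0, size=(n, 5)),
    "cna": y[:, None] + rng.normal(scale=2.0, size=(n, 20)),
    "expression": y[:, None] + rng.normal(scale=1.5, size=(n, 30)),
}
# Stage 1: stack one score column per modality -> shape (n, 3)
stacked = np.column_stack([out_of_fold_scores(X, y) for X in modalities.values()])
# Stage 2: meta-classifier on the stacked scores (the cited studies use RF here)
meta_w = train_logreg(stacked, y, lr=1.0, epochs=2000)
acc = np.mean((predict_proba(meta_w, stacked) >= 0.5) == y)
print(stacked.shape, round(float(acc), 2))
```

For brevity the meta-classifier is scored on its own training data; a real pipeline would report performance on a held-out test set.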

A novel ensemble method, the Deviation-Support Fuzzy Ensemble (DeSuFEn) (46), combines predictions from multiple base classifiers. The base classifiers are multimodal deep learning architectures that cover feature extraction, classification, and cross-modality attention: SiGaAtCNN STACKED RF (C1), SiGaAtCNN + Input STACKED RF (C2), BiAttention (C3), and BiAttention STACKED RF (C4). The approach aims to improve breast cancer prognosis prediction by leveraging structural information in multi-omics data. Similarly, a multimodal architecture that integrates graph convolutional networks (GCNs) with a Choquet fuzzy ensemble classifier was proposed (47). This model constructs separate graphs for gene expression and CNA data, trains a GCN on each graph, concatenates the clinical data features, and applies the Synthetic Minority Over-sampling Technique (SMOTE) to address class imbalance. The Choquet fuzzy ensemble classifier then combines the probabilistic outputs of RF, SVM, and logistic regression to improve prediction performance.
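The Choquet fuzzy integral used to combine classifier outputs in (47) can be sketched with a generic textbook formulation. It assumes a Sugeno λ-measure built from per-classifier "fuzzy densities" (importance values); the density values, the bisection solver for λ, and the rule of predicting the class with the larger integral are illustrative assumptions rather than the exact procedure of (47).

```python
import numpy as np

def sugeno_lambda(densities, tol=1e-12):
    """Solve prod(1 + lam * g_i) = 1 + lam for the Sugeno lambda-measure (lam > -1)."""
    g = np.asarray(densities, dtype=float)

    def f(lam):
        return np.prod(1.0 + lam * g) - (1.0 + lam)

    total = g.sum()
    if np.isclose(total, 1.0):
        return 0.0                      # densities are already additive
    if total < 1.0:                     # non-trivial root lies in (0, inf)
        lo, hi = 1e-9, 1.0
        while f(hi) < 0:
            hi *= 2.0
    else:                               # non-trivial root lies in (-1, 0)
        lo, hi = -1.0 + 1e-12, -1e-9
    for _ in range(200):                # bisection on the sign change of f
        mid = 0.5 * (lo + hi)
        if (f(mid) > 0) == (f(lo) > 0):
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

def choquet_integral(scores, densities):
    """Choquet integral of classifier scores with respect to a Sugeno lambda-measure."""
    scores = np.asarray(scores, dtype=float)
    g = np.asarray(densities, dtype=float)
    lam = sugeno_lambda(g)
    result, g_prev = 0.0, 0.0
    for i in np.argsort(scores)[::-1]:                 # classifiers by descending score
        g_curr = g_prev + g[i] + lam * g_prev * g[i]   # measure of the growing set
        result += scores[i] * (g_curr - g_prev)
        g_prev = g_curr
    return result

# Hypothetical class-1 probabilities from RF, SVM and logistic regression for one patient
probs_class1 = [0.82, 0.64, 0.71]
densities = [0.4, 0.3, 0.2]  # hypothetical per-classifier importance values
score1 = choquet_integral(probs_class1, densities)
score0 = choquet_integral([1 - p for p in probs_class1], densities)
prediction = int(score1 > score0)
print(round(score1, 3), round(score0, 3), prediction)
```

Unlike a fixed weighted average, the λ-measure lets a coalition of classifiers count for more (λ > 0) or less (λ < 0) than the sum of their individual densities.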

An EBCSP model was developed to predict breast cancer survival (48). It consists of three neural networks: a CNN for clinical data, a DNN for copy number variation (CNV) data, and a long short-term memory (LSTM) network for gene expression data. The extracted features were stacked and fed into an RF classifier for binary classification into long-term and short-term survival classes. Similarly, a study (49) employed distinct CNN architectures to extract features from the clinical, CNA, and gene expression data. The extracted features were concatenated into a “stacked feature set”, and LSTM and gated recurrent unit (GRU) recurrent networks were then used as classifiers to predict survival from these stacked features. A voting classifier generated the final prediction by majority voting. This hybrid model thus combined multimodal feature extraction with an LSTM/GRU ensemble and voting to improve prognosis.
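A majority-voting step like the one in (49) can be sketched as follows. With only two voters (for example, an LSTM and a GRU), exact ties are possible, so this sketch breaks ties by averaging the predicted probabilities (a soft vote); that tie-breaking rule, the 0.5 threshold, and the toy probabilities are assumptions, not details reported in (49).

```python
import numpy as np

def majority_vote(prob_matrix, threshold=0.5):
    """prob_matrix: (n_classifiers, n_samples) of P(long-term survival).

    Hard majority vote; exact ties are broken by the mean probability (soft vote).
    """
    votes = (prob_matrix >= threshold).astype(int)
    positive = votes.sum(axis=0)
    n_voters = prob_matrix.shape[0]
    decision = (2 * positive > n_voters).astype(int)
    tie = 2 * positive == n_voters
    decision[tie] = (prob_matrix[:, tie].mean(axis=0) >= threshold).astype(int)
    return decision

lstm_probs = [0.9, 0.4, 0.6, 0.2]   # hypothetical LSTM outputs for four patients
gru_probs = [0.8, 0.3, 0.45, 0.35]  # hypothetical GRU outputs
final = majority_vote(np.array([lstm_probs, gru_probs]))
print(final)  # [1 0 1 0]
```

For the third patient the two networks disagree, and the averaged probability (0.525) decides the outcome.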

Recent studies (50,51) have addressed the challenges posed by single-modality and heterogeneous deep learning architectures in breast cancer survival prediction. To bridge the modality gap in heterogeneous data, one study (50) proposed a multi-scale bilinear convolutional neural network (MS-B-CNN) module that extracts unimodal representations from the gene expression, CNA, and clinical data. It fuses features from different layers at multiple scales, learns modality-invariant embedding spaces, and reconstructs the original features. The resulting representations are stacked, and an extra-trees classifier makes the final prediction. In contrast, the heterogeneous multimodal ensemble method in (51) combines feature extraction, stacked feature set creation, and classification: CNNs extract features from the clinical and gene expression data, DNNs learn representations from CNV data, and an RF classifier predicts survival.
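The bilinear part of MS-B-CNN builds on bilinear pooling, which captures pairwise interactions between two sets of features. The NumPy sketch below shows standard bilinear pooling of features from two layers (or scales) over the same positions, followed by the signed square root and L2 normalization commonly used with bilinear CNNs. The layer sizes and random inputs are hypothetical, and the multi-scale design, adversarial training, and reconstruction objective of (50) are not reproduced here.

```python
import numpy as np

def bilinear_pool(feat_a, feat_b, eps=1e-12):
    """Bilinear pooling of two feature maps sharing the same positions.

    feat_a: (positions, c_a), feat_b: (positions, c_b). Returns a (c_a * c_b,) vector.
    """
    outer = feat_a.T @ feat_b / feat_a.shape[0]   # average outer product over positions
    vec = outer.reshape(-1)
    vec = np.sign(vec) * np.sqrt(np.abs(vec))     # signed square root
    return vec / (np.linalg.norm(vec) + eps)      # L2 normalization

rng = np.random.default_rng(0)
shallow = rng.normal(size=(50, 8))  # hypothetical features from an early conv layer
deep = rng.normal(size=(50, 4))     # hypothetical features from a later conv layer
rep = bilinear_pool(shallow, deep)
print(rep.shape)  # (32,)
```

The output size grows with the product of the channel counts, which is one reason factorized variants, such as those in HFBSurv (37), are used to reduce the number of parameters.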

Result analysis

As Table 5 shows, three studies (48,49,51) reported results that exceed all prior work in the literature, although they used different evaluation metrics. AUC is the primary metric in most studies, but one study (51) reports AUC only for its single-modality models, not for the full multimodal architecture; instead, the authors rely on secondary metrics such as accuracy, precision, recall, and F1-score. Multimodal breast cancer datasets are class-imbalanced, and these studies made notable progress in reducing classification bias: their models attained F1-scores of 0.99 (48) and 0.98 (51), and a Matthews correlation coefficient (MCC) of 0.936 was reported in (49). Although Du's study (50) shows only average results on the other metrics, its MCC ranks second, suggesting that the model handles class imbalance reasonably well.
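Why MCC is informative under class imbalance can be shown with a small worked example in plain Python. The cohort below is hypothetical (20 short-term versus 80 long-term survivors): a classifier that always predicts the majority class reaches 0.80 accuracy but an MCC of 0, whereas a classifier that actually separates the classes (15 TP, 5 FN, 5 FP, 75 TN) reaches 0.90 accuracy and an MCC of 1100/1600 = 0.6875.

```python
import math

def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels in {0, 1}."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, tn, fp, fn

def mcc(y_true, y_pred):
    """Matthews correlation coefficient; 0 by convention when undefined."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return 0.0 if denom == 0 else (tp * tn - fp * fn) / denom

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Hypothetical imbalanced cohort: 20 short-term (1) vs 80 long-term (0) survivors
y_true = [1] * 20 + [0] * 80
always_long_term = [0] * 100                           # ignores the minority class
informative = [1] * 15 + [0] * 5 + [1] * 5 + [0] * 75  # 15 TP, 5 FN, 5 FP, 75 TN

print(accuracy(y_true, always_long_term), mcc(y_true, always_long_term))  # 0.8 0.0
print(accuracy(y_true, informative), mcc(y_true, informative))            # 0.9 0.6875
```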

Table 5

| References | Acc | Pre | Sn/Recall | MCC | AUC | F1-score | C-index |
|---|---|---|---|---|---|---|---|
| (44) | 0.912 | 0.841 | 0.798 | 0.762 | 0.950 | – | – |
| (45) | 0.902 | 0.841 | 0.747 | 0.730 | 0.930 | – | – |
| (46) | 0.829 | – | 0.586 | – | – | 0.629 | – |
| (47) | 0.820 | 0.630 | 0.667 | – | – | 0.647 | – |
| (48)† | 0.980 | 0.980 | 1.000 | – | 0.970 | 0.990 | – |
| (49)† | 0.980 | 0.990 | 0.992 | 0.936 | 0.982 | – | – |
| (50) | 0.930 | 0.902 | 0.828 | 0.836 | – | – | – |
| (51)† | 0.970 | 0.980 | 0.970 | – | – | 0.98 | – |

†, studies with top performance. Acc, accuracy; AUC, area under the curve; C-index, concordance index; MCC, Matthews correlation coefficient; Pre, precision; Sn, sensitivity.

In addition, studies (44) and (45) achieved AUC values of 0.950 and 0.930, respectively, higher than any of their other reported metrics. Although these results are better than those of the more recent studies (46,47), which reported less competitive performance, the latter introduced novel fuzzy ensemble architectures to the domain, including a Choquet fuzzy ensemble.

In summary, this section has reviewed novel architectures based on stacked ensemble learning and traced their progressive development. Initially, distinct CNN models were trained to extract relevant features from multimodal datasets. These models were later enhanced with sigmoid-attention mechanisms to extract more relevant features dynamically (44,45). More recently, new architectures have been introduced to address variations in prediction score distributions in conventional ensemble models: one study (46) proposed a deviation-support-based fuzzy ensemble, and another (47) captured structural relationships between data samples by combining a GCN with a Choquet fuzzy ensemble (ChoqFuzGCN).

Discussion

This section summarizes the surveyed work, focusing on multimodal deep learning architectural design and concatenation approaches in breast cancer prognosis prediction models. Based on a critical analysis of each study, the following subsections present the main findings of the survey.

Statistics of deep learning models

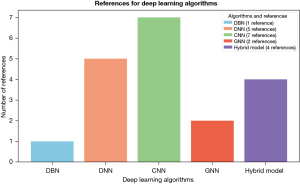

The survey examines how effectively deep learning algorithms classify breast cancer patients' prognoses in multimodal settings, highlighting their ability to learn complex patterns in high-dimensional, heterogeneous data and the novel architectures proposed to improve results.

Figure 3 shows that CNNs are both the most frequently used models and among the best performing, reflecting their effectiveness in this setting (49). In one study (34), researchers used a DNN model to combine three data types, the first work to do so in this domain; this approach has driven further advancements and made the area an active field of study. The survey also highlights the adaptability of DNNs, which have been successfully combined with techniques such as bilinear models and attention mechanisms to capture intricate inter-modality feature relationships and to identify structural information between patients and multimodal data (35,36). A DNN also serves as the concatenation component in (37).

Hybrid models incorporating heterogeneous base classifiers rank third in predictive performance (36,38,48,51), and experimental results emphasize the value of heterogeneous deep learning models for ensemble learning. GNNs rank fourth for multimodal breast cancer prognosis. One study (34) introduced a novel multimodal GNN (MGNN) architecture and demonstrated its effectiveness through ablation tests, parameter sensitivity analysis, and robustness verification across four datasets. In addition, a deep belief network (DBN) has been trained on microarray data, effectively reducing its high dimensionality and improving integration and predictive accuracy (39).

The survey results indicate that the field has advanced rapidly, with several studies published in 2023 (48-51) reporting very strong results; one study even reported a perfect sensitivity of 1.00. However, these results require further validation, because the studies lack validation on independent datasets to assess overfitting and generalization, as well as ablation and statistical tests to evaluate model reliability and robustness. Publications in this area peaked in 2022 and 2023, and the survey identifies several research gaps in precision oncology for breast cancer patients. Despite ongoing progress, further research is needed to improve survival rates, quality of life, prognosis, and predictive accuracy for these patients. The research gaps identified in the survey are as follows:

One study (40) suggested integrating more data to develop subtype-specific analyses of breast cancer, in order to further explore the impact of tumor heterogeneity on patient outcomes and treatment response. This would involve building more comprehensive and dynamic predictive models that reflect the diverse molecular and cellular profiles of breast cancer.

Future research needs more multimodal data: METABRIC and TCGA are currently the dominant data sources, and researchers should draw on additional datasets for more comprehensive analyses. CNNs are the most frequently used models in the domain, and researchers could use transfer learning to fine-tune pretrained CNNs on breast cancer datasets. Pretrained architectures such as ResNet50, ResNet101, VGG16, and VGG19 could be used in multimodal settings to extract features from histopathological images and combine them with genomic and clinical profile data. Data augmentation can mitigate small-sample problems and improve model robustness and generalizability, and hyperparameter optimization of the learning rate, batch size, and number of epochs can improve model performance. These directions remain largely unexplored in the surveyed literature.

Many studies have noted the lack of interpretability of the proposed deep learning models, highlighting the need for more explainable frameworks (44). Researchers also emphasize the importance of developing models that integrate dynamic data, use multi-task learning frameworks for cancer detection, subtype classification, and survival prediction, and leverage active learning for iterative data selection. The interpretability of breast cancer prognosis models is also discussed in several studies (35,37,38).

- Another research direction is adapting the ensemble technique to other medical tasks, such as disease detection and segmentation, and to non-medical problems. Sensitivity analyses should also be conducted to evaluate the effect of the different components of the proposed ensemble architectures.

- Future research should also aim to improve performance by fusing multimodal data with neural-network-based fusion methods and CNNs, and by modeling multimodal correlations during training rather than only at the feature extraction stage.

Conclusions

This survey provides a comprehensive analysis of multimodal breast cancer prognosis using deep learning models. The literature review identified 19 publications between 2016 and 2024, offering insights into the current landscape of multimodal architectures and concatenation approaches. A detailed search strategy was employed across multiple databases, including Google Scholar, Scopus, and Web of Science, to ensure a broad yet focused selection of relevant studies. Of the 90 initially identified papers, 71 were excluded for not addressing multimodal breast cancer prognosis using deep learning methods, resulting in a final selection of 19 publications that form the basis of this analysis. The discussion highlighted that deep learning models, particularly CNNs and DNNs, dominate this research area, with CNNs showing superior performance across many studies. The review also identified the increasing use of ensemble learning and hybrid models, such as those employing heterogeneous classifiers, to enhance multimodal breast cancer prognosis prediction. Notably, GNNs and novel architectures such as MGNN are emerging as powerful tools in this field, although they are less prevalent. Despite these advances, critical gaps remain, such as the need for more diverse datasets, improved model interpretability, and better multimodal fusion techniques. Addressing these gaps through enhanced data integration, development of explainable models, and application of transfer learning should further advance breast cancer prognosis prediction and precision oncology, ultimately improving patient outcomes.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the Narrative Review reporting checklist. Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-146/rc

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-146/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-146/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

- Hansebout RR, Cornacchi SD, Haines T, et al. How to use an article about prognosis. Can J Surg 2009;52:328-36. [PubMed]

- Gupta C, Gill NS. Machine Learning Techniques and Extreme Learning Machine for Early Breast Cancer Prediction. Int J Innov Technol Explor Eng 2020;9:163-7. [Crossref]

- Guo YY, Huang YH, Wang Y, et al. Breast MRI Tumor Automatic Segmentation and Triple-Negative Breast Cancer Discrimination Algorithm Based on Deep Learning. Comput Math Methods Med 2022;2022:2541358. [Crossref] [PubMed]

- Garg V, Maggu S, Kapoor B. Breast Cancer Classification Using Neural Networks. 2023 International Conference on Intelligent and Innovative Technologies in Computing, Electrical and Electronics (IITCEE), Bengaluru, India; 2023;900-5.

- Chakkouch M. A Comparative Study of Machine Learning Techniques to Predict Types of Breast Cancer Recurrence. Int J Adv Comput Sci Appl 2023; [Crossref]

- Zeng H, Qiu S, Zhuang S, et al. A deep learning-based predictive model for a pathological complete response to neoadjuvant chemotherapy in breast cancer from biopsy pathological images: a multicenter study. Front Physiol 2024;15:1279982. [Crossref] [PubMed]

- Azeroual S, Ben-Bouazza FE, Naqi A, et al. Predicting disease recurrence in breast cancer patients using machine learning models with clinical and radiometric characteristics: a retrospective study. J Egypt Natl Canc Inst 2024;36:20. [Crossref] [PubMed]

- Oyelade ON, Irunokhai EA, Wang H. A twin convolutional neural network with a hybrid binary optimizer for multimodal breast cancer digital image classification. Sci Rep 2024;14:692. [Crossref] [PubMed]

- Yue W, Wang Z, Chen H, et al. Machine Learning with Applications in Breast Cancer Diagnosis and Prognosis. Designs 2018; [Crossref]

- Lamb R, Ablett MP, Spence K, et al. Wnt pathway activity in breast cancer sub-types and stem-like cells. PLoS One 2013;8:e67811. [Crossref] [PubMed]

- Tsuchiya M, Giuliani A, Hashimoto M, et al. Emergent Self-Organized Criticality in Gene Expression Dynamics: Temporal Development of Global Phase Transition Revealed in a Cancer Cell Line. PLoS One 2015;10:e0128565. [Crossref] [PubMed]

- Zhang H, Xi Q, Zhang F, et al. Application of Deep Learning in Cancer Prognosis Prediction Model. Technol Cancer Res Treat 2023;22:15330338231199287. [Crossref] [PubMed]

- Mook S, Schmidt MK, Weigelt B, et al. The 70-gene prognosis signature predicts early metastasis in breast cancer patients between 55 and 70 years of age. Ann Oncol 2010;21:717-22. [Crossref] [PubMed]

- Ferroni P, Zanzotto FM, Riondino S, et al. Breast Cancer Prognosis Using a Machine Learning Approach. Cancers (Basel) 2019;11:328. [Crossref] [PubMed]

- Ganggayah MD, Taib NA, Har YC, et al. Predicting factors for survival of breast cancer patients using machine learning techniques. BMC Med Inform Decis Mak 2019;19:48. [Crossref] [PubMed]

- Sharma N, Kang SS. Detection of Breast Cancer Using Machine Learning Approach. 2023 10th IEEE Uttar Pradesh Section International Conference on Electrical, Electronics and Computer Engineering (UPCON); 2023. doi: 10.1109/UPCON59197.2023.10434924

- van de Vijver MJ, He YD, van't Veer LJ, et al. A gene-expression signature as a predictor of survival in breast cancer. N Engl J Med 2002;347:1999-2009. [Crossref] [PubMed]

- Huang S, Yee C, Ching T, et al. A novel model to combine clinical and pathway-based transcriptomic information for the prognosis prediction of breast cancer. PLoS Comput Biol 2014;10:e1003851. [Crossref] [PubMed]

- Sharma D, Kumar R, Jain A. Breast cancer prediction based on neural networks and extra tree classifier using feature ensemble learning. Measur Sens 2022;24:100560. [Crossref]

- Xu X, Zhang Y, Zou L, et al. A Gene Signature for Breast Cancer Prognosis Using Support Vector Machine. 2012 5th International Conference on BioMedical Engineering and Informatics, Chongqing, China; 2012. doi: 10.1109/BMEI.2012.6513032

- Yu C, Qin N, Pu Z, et al. Integrating of genomic and transcriptomic profiles for the prognostic assessment of breast cancer. Breast Cancer Res Treat 2019;175:691-9. [Crossref] [PubMed]

- Pittman J, Huang E, Dressman H, et al. Integrated modeling of clinical and gene expression information for personalized prediction of disease outcomes. Proc Natl Acad Sci U S A 2004;101:8431-6. [Crossref] [PubMed]

- Gevaert O, De Smet F, Timmerman D, et al. Predicting the prognosis of breast cancer by integrating clinical and microarray data with Bayesian networks. Bioinformatics 2006;22:e184-90. [Crossref] [PubMed]

- Sun Y, Goodison S, Li J, et al. Improved breast cancer prognosis through the combination of clinical and genetic markers. Bioinformatics 2007;23:30-7. [Crossref] [PubMed]

- Hedjazi L, Le Lann MV, Kempowsky-Hamon T, et al. Improved breast cancer prognosis based on a hybrid marker selection approach. In Proceedings of the International Conference on Bioinformatics Models, Methods and Algorithms (BIOINFORMATICS-2011); 2011. doi: 10.5220/0003152301590164

- Šilhavá J, Smrž P. Improved disease outcome prediction based on microarray and clinical data combination and pre-validation. In Proceedings of the First International Conference on Bioinformatics; 2010. doi: 10.5220/0002697601080113

- Chen AH, Yang C. The improvement of breast cancer prognosis accuracy from integrated gene expression and clinical data. Expert Syst Appl 2012;39:4785-95. [Crossref]

- Kaur P, Singh A, Chana I, et al. BSense: A parallel Bayesian hyperparameter optimized Stacked ensemble model for breast cancer survival prediction. J Comput Sci 2022;60:101570. [Crossref]

- Arya N, Saha S, Mathur A, et al. Improving the robustness and stability of a machine learning model for breast cancer prognosis through the use of multi-modal classifiers. Sci Rep 2023;13:4079. [Crossref] [PubMed]

- Dhillon A, Singh A. eBreCaP: extreme learning-based model for breast cancer survival prediction. IET Syst Biol 2020;14:160-9. [Crossref] [PubMed]

- Guo W, Liang W, Deng Q, et al. A Multimodal Affinity Fusion Network for Predicting the Survival of Breast Cancer Patients. Front Genet 2021;12:709027. [Crossref] [PubMed]

- Tong L, Mitchel J, Chatlin K, et al. Deep learning-based feature-level integration of multi-omics data for breast cancer patients’ survival analysis. BMC Med Inform Decis Mak 2020;20:225. [Crossref] [PubMed]

- Iranmakani S, Morteza Zadeh T, Sajadian F, et al. A review of various modalities in breast imaging: technical aspects and clinical outcomes. Egypt J Radiol Nucl Med 2020; [Crossref]

- Gao J, Lyu T, Xiong F, et al. Predicting the Survival of Cancer Patients with Multimodal Graph Neural Network. IEEE/ACM Trans Comput Biol Bioinform 2022;19:699-709. [Crossref] [PubMed]

- Wang Z, Li R, Wang M, et al. GPDBN: deep bilinear network integrating both genomic data and pathological images for breast cancer prognosis prediction. Bioinformatics 2021;37:2963-70. [Crossref] [PubMed]

- Kaur P, Singh A, Chana I. BSense: A parallel Bayesian hyperparameter optimized Stacked ensemble model for breast cancer survival prediction. J Comput Sci 2022;60:101570. [Crossref]

- Li R, Wu X, Li A, et al. HFBSurv: hierarchical multimodal fusion with factorized bilinear models for cancer survival prediction. Bioinformatics 2022;38:2587-94. [Crossref] [PubMed]

- Liu H, Shi Y, Li A, et al. Multi-modal fusion network with intra- and inter-modality attention for prognosis prediction in breast cancer. Comput Biol Med 2024;168:107796. [Crossref] [PubMed]

- Khademi M, Nedialkov NS. Probabilistic Graphical Models and Deep Belief Networks for Prognosis of Breast Cancer. 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA; 2015;727-32.

- Sun D, Wang M, Li A. A multimodal deep neural network for human breast cancer prognosis prediction by integrating multi-dimensional data. IEEE/ACM Trans Comput Biol Bioinform 2018; [Crossref] [PubMed]

- Yuan H, Xu H. Deep multi-modal fusion network with gated unit for breast cancer survival prediction. Comput Methods Biomech Biomed Engin 2024;27:883-96. [Crossref] [PubMed]

- Chen H, Gao M, Zhang Y, et al. Attention-Based Multi-NMF Deep Neural Network with Multimodality Data for Breast Cancer Prognosis Model. Biomed Res Int 2019;2019:9523719. [Crossref] [PubMed]

- Kayikci S, Khoshgoftaar TM. Breast cancer prediction using gated attentive multimodal deep learning. J Big Data 2023;10:62. [Crossref]

- Arya N, Saha S. Multi-modal advanced deep learning architectures for breast cancer survival prediction. Knowl Based Syst 2021;221:106965.

- Arya N, Saha S. Multi-Modal Classification for Human Breast Cancer Prognosis Prediction: Proposal of Deep-Learning Based Stacked Ensemble Model. IEEE/ACM Trans Comput Biol Bioinform 2022;19:1032-41. [Crossref] [PubMed]

- Arya N, Saha S. Deviation-support based fuzzy ensemble of multi-modal deep learning classifiers for breast cancer prognosis prediction. Sci Rep 2023;13:21326. [Crossref] [PubMed]

- Palmal S, Arya N, Saha S, et al. Breast cancer survival prognosis using the graph convolutional network with Choquet fuzzy integral. Sci Rep 2023;13:14757. [Crossref] [PubMed]

- Mustafa E, Jadoon EK, Khaliq-Uz-Zaman S, et al. An Ensemble Framework for Human Breast Cancer Survivability Prediction Using Deep Learning. Diagnostics (Basel) 2023;13:1688. [Crossref] [PubMed]

- Othman NA, Abdel-Fattah MA, Ali AT. A Hybrid Deep Learning Framework with Decision-Level Fusion for Breast Cancer Survival Prediction. Big Data Cogn Comput 2023;7:50. [Crossref]

- Du X, Zhao Y. Multimodal adversarial representation learning for breast cancer prognosis prediction. Comput Biol Med 2023;157:106765. [Crossref] [PubMed]

- Jadoon EK, Khan FG, Shah S, et al. Deep Learning-Based Multi-Modal Ensemble Classification Approach for Human Breast Cancer Prognosis. IEEE Access 2023;11:85760-9.

Cite this article as: Maigari A, XinYing C, Zainol Z. Multimodal deep learning breast cancer prognosis models: narrative review on multimodal architectures and concatenation approaches. J Med Artif Intell 2025;8:61.