Exploring the impacts of artificial intelligence interventions on providers’ practices: perspectives from a rural medical center

Highlight box

Key findings

• A high percentage (58%) of providers expressed a willingness to use artificial intelligence (AI) in their practice.

• The results suggest that concerns about potential errors and inaccuracies in AI continue to challenge many providers.

What is known and what is new?

• A lack of trust in AI, particularly regarding data privacy, diagnostic accuracy, and patient safety, could prevent providers and patients from utilizing such applications.

• This study highlights the importance of system compatibility, usability, ethical considerations, and comprehensive training in building trust for AI adoption in rural healthcare settings, offering valuable insights for future research and practice.

What is the implication, and what should change now?

• The study findings help providers, especially key decision-makers, to understand which organizational capabilities are needed to facilitate AI utilization.

• Organizations should prioritize user-centered design in AI projects, as well as develop training programs and provide enhanced technical support specifically targeted at users who encounter difficulties using AI-reliant products or services.

Introduction

Background

Artificial intelligence (AI) has emerged as a powerful technology and a significant lever in medicine for driving high-quality, affordable care (1), improving healthcare services, reducing the workload of healthcare professionals, and increasing efficiency (2). Areas in which AI can assist medical practice include clinical decision support (CDS) systems, diagnosis, predictive analysis, data visualization, natural language processing (NLP), patient monitoring, mobile technology, and telemedicine (3). In diagnosis, AI aims to facilitate clinical interpretation by identifying patterns that would be difficult for humans to detect (4-10).

Rationale and knowledge gap

Despite the benefits of AI technology in medicine, concerns have emerged regarding patient privacy and autonomy in AI applications (8-12). For example, a comprehensive literature review of 209 articles published between 2010 and 2020 identified patient privacy and patient autonomy rights as problems in AI applications (11). This is partly because AI systems depend heavily on patient data, shared across various stakeholders, including providers and public health officials, for training and operation. Failure to protect such data adequately poses a risk of breaches that could compromise patients’ privacy by exposing their sensitive health information to unauthorized parties (11). Another empirical study found that providers’ perceptions may influence actual usage and acceptance of AI technology in clinical settings (5). Therefore, understanding variations in perspectives among providers is essential for creating a supportive environment that maximizes the benefits of AI in healthcare (4,5,13-20).

Although AI has marked potential to revolutionize healthcare, especially in rural or remote areas with limited resources, there is a significant gap in research regarding AI’s impact on providers’ practices and patient outcomes (5). Accordingly, additional empirical research is needed to understand how AI interventions can be implemented effectively to improve healthcare delivery and patient outcomes. Such work could also help identify challenges or barriers that must be addressed to ensure access to AI-driven healthcare solutions across different regions and communities. This gap motivated the current study.

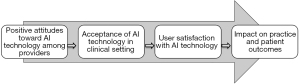

Framework for the study

The present study utilized a theoretical framework based on the work of Alanazi (3) (Figure 1). According to this framework, the impact of AI on practice and patient outcomes depends on users’ attitudes toward, acceptance of, and satisfaction with the technology. As applied in the literature, this framework makes a distinctive contribution to assessing the impact of AI on the quality of care, focusing on structure (i.e., resources and infrastructure), process (i.e., delivery of care), and outcomes (i.e., patient health status) (3). Hence, it is well suited to evaluating the impacts of AI interventions on clinical practice and patient outcomes, as well as their influence on healthcare providers, which are the foci of the current work.

Objectives and research questions

This study investigated healthcare providers’ perceptions of AI interventions’ influence on practice efficiency and patient outcomes, as well as the correlation between providers’ perceptions of AI and its effects on patient outcomes. The study sought to answer the following two research questions:

- What are providers’ perceptions of the impact of AI interventions on their practice and patient outcomes?

- Is there a significant correlation between providers’ perceptions of the efficiency of AI interventions and their impact on patient outcomes?

I present this article in accordance with the STROBE reporting checklist (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-422/rc).

Methods

Setting

We chose a small hospital in rural Texas for three main reasons. First, although existing research has shown the benefits of AI for patients and providers, there is a lack of literature addressing the impact of AI technology interventions on providers’ practices, particularly in small rural healthcare settings in Texas, where financial resources are scarce (5). Second, the limited access to capital and technology infrastructure in such settings may pose challenges to technology adoption and use. Third, providers in small rural healthcare facilities have experienced a rise in hospital-acquired infection cases and workflow disruptions during the pandemic’s resurgence (5), along with the psychological impact of the coronavirus disease 2019 (COVID-19) pandemic (6). AI technology could potentially mitigate some of these challenges. Therefore, a rural healthcare setting in Texas was deemed appropriate. Because small rural healthcare facilities are commonly believed to face similar challenges regardless of location (7,8), the sample in this study may represent comparable healthcare facilities both within Texas and nationally.

Background and demographics

The research was conducted in a rural medical center located in northeastern Texas. The facility has 52 beds and serves as a vital community-based healthcare institution for the area. It provides round-the-clock emergency, critical care, medical, surgical, and cardiac services, as well as specialties such as open-heart surgery, outpatient surgery, orthopedics, and imaging (5). Recently, the medical center implemented various AI-based applications, such as an AI-driven hand hygiene monitoring system, diagnostic AI, NLP, and CDS, with the goal of improving patient outcomes. However, healthcare providers at the medical center have differing opinions on the tangible effects of AI on clinical practice and patient outcomes.

After obtaining approval from the institutional review board, we acquired a roster of providers from the host medical center’s administration. Subsequently, on May 1, 2024, all 48 providers at the medical center were sent a self-administered questionnaire via email, along with an informed consent document explaining the study’s objectives. This specific time frame was selected because the medical center was facing several complex issues associated with AI usage, such as a crucial need for user-centered training to ensure that staff members could effectively utilize AI tools in their workflows. Respondents were guaranteed confidentiality and could decline participation in the study. Non-respondents were contacted via telephone between June 1 and July 31 of 2024 to prompt survey completion.

Questionnaire

The questionnaire items were adapted from Oh et al.’s online survey of physicians’ confidence in AI (21). The first part of the instrument comprised demographic and medical center practice characteristics (i.e., years of practice, job role, employment status, familiarity with AI, types of AI systems utilized, prior understanding of technology for AI, and proficiency with digital technology).

The second part included statements assessing providers’ perceptions of AI and the impact of AI on patient outcomes. For providers’ perceptions of AI, respondents were asked to rate (using a 5-point scale, where 1 = strongly disagree and 5 = strongly agree) the overall efficiency of their practice subsequent to AI’s implementation. The items also assessed their future intentions to use AI when making medical decisions, as well as their concerns about possible errors and inaccuracies in AI. In regard to the impact of AI on patient outcomes, respondents were asked to rate the impact of AI interventions on patient outcomes on a 5-point scale (1 = strongly disagree and 5 = strongly agree), as well as their confidence in AI-driven patient-care decision-making and patients’ overall trust in AI-assisted medical decisions.

A final question was open-ended: “Is there anything else you would like to add about your concerns or perceptions regarding AI in healthcare?” Thematic analysis was utilized to identify key themes from the open-ended responses, chosen for its flexibility and suitability for qualitative data. The researcher initially read and reread the responses to gain a comprehensive understanding of the content. Then, the responses were broken into smaller segments of meaning, with corresponding codes assigned to each segment. The researcher subsequently refined and reviewed the emerging themes to ensure they accurately reflected the data. Finally, the themes were organized and analyzed to form a coherent narrative that supported the identified patterns in the responses. See Appendix 1 for the survey instrument.

Reliability and validity

As mentioned earlier, we utilized established measures from Oh et al. (21). These measures have demonstrated sufficient internal consistency and validity (21). Additionally, the questionnaire was tested on a panel of five individuals from the healthcare field familiar with AI systems. The validity of the questionnaire was confirmed based on that test. The reliability of the questionnaire was examined through a pilot study: Cronbach’s alpha was 0.96.
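For readers unfamiliar with the reliability statistic, Cronbach’s alpha compares the variances of individual items with the variance of respondents’ total scores: α = k/(k − 1) × (1 − Σσ²ᵢ / σ²ₜₒₜₐₗ), where k is the number of items. The Python sketch below computes it from a respondents-by-items score matrix. The function name `cronbach_alpha` and the toy scores are illustrative assumptions; they do not reproduce the pilot data, which are not reported here.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores.

    Hypothetical helper for illustration; uses sample variances throughout.
    """
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*scores)]
    # Variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Toy 5-point Likert responses (3 respondents x 2 items), not study data.
toy = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(toy), 3))  # 0.667
```

Values close to 1, such as the 0.96 reported for the pilot, indicate that items vary together across respondents.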

Statistical analyses

In the analysis, we first determined the percentage of respondents reporting each perception regarding the impact of AI interventions on their practice and patient outcomes. Second, the correlation between providers’ perceived efficiency of AI interventions (i.e., the independent variable) and its impact on patient outcomes (i.e., the dependent variable) was evaluated using Spearman’s rho because the data were not normally distributed (22,23). Third, associations between respondents’ sociodemographic characteristics (i.e., age, gender, familiarity with AI, prior understanding of technology for AI, and proficiency with digital technology) and their perceptions and concerns about AI were analyzed using Pearson’s chi-square test.
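Spearman’s rho is Pearson’s correlation computed on ranks, which suits ordinal Likert data that are not normally distributed. Because 5-point items produce many tied scores, tied values receive the average of the ranks they span. The Python sketch below illustrates the calculation; the helper names and toy scores are assumptions for illustration, and the P value, which SPSS reports alongside rho, is not computed here.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of tied values starting at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation of the tie-averaged ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical efficiency and patient-outcome ratings, not study data.
efficiency = [1, 2, 2, 4, 5]
outcomes = [2, 1, 3, 4, 5]
print(round(spearman_rho(efficiency, outcomes), 3))  # 0.821
```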

As noted above, a 5-point Likert scale ranging from strongly disagree to strongly agree was used to assess respondents’ perceptions of the benefits and concerns of AI, with scores ranging from 1 to 5, respectively. For perceived benefits—such as the efficiency of AI interventions—scores above 3 on the scale indicated “good” perceived benefits. Similarly, concerns were indicated using scores that exceeded 3 on the scale, denoting high levels of concern. Finally, data analysis was performed using the Statistical Package for the Social Sciences (SPSS) software version 28.0 (IBM Corp., Armonk, NY, USA). A significance level of P value <0.05 was adopted for determining statistical significance. A two-tailed test was used to assess the difference in both directions.
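The dichotomization and chi-square test described above can be sketched in Python as follows. Scores above 3 are coded as “good” perception or “high” concern, and Pearson’s chi-square statistic is computed from the table of observed counts as Σ(O − E)²/E, where each expected count E equals (row total × column total)/n. The function names and toy records are hypothetical; the sketch applies no continuity correction and returns only the statistic and its degrees of freedom, leaving the P value to statistical software (for example, SPSS, or `scipy.stats.chi2.sf` in Python).

```python
def dichotomize(score, threshold=3):
    """Code a 5-point Likert score as 'high' (above threshold) or 'low'."""
    if not 1 <= score <= 5:
        raise ValueError("Likert score must be between 1 and 5")
    return "high" if score > threshold else "low"


def contingency_table(records, groups, levels=("low", "high")):
    """Count (group, Likert score) records into rows=groups, columns=levels."""
    table = [[0] * len(levels) for _ in groups]
    for group, score in records:
        table[groups.index(group)][levels.index(dichotomize(score))] += 1
    return table


def pearson_chi_square(table):
    """Return (chi-square statistic, degrees of freedom) for an r x c table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, df


# Hypothetical records: (familiar with AI?, concern score), not study data.
records = [("yes", 5), ("yes", 4), ("yes", 2), ("no", 1), ("no", 3), ("no", 4)]
table = contingency_table(records, groups=["yes", "no"])
print(table)  # [[1, 2], [2, 1]]
print(pearson_chi_square(table))
```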

Ethical consideration

The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). Written informed consent was obtained from the participants for the study. This article does not include any studies involving the identities of human participants conducted by any of the authors. IRB approval was waived.

Results

Sample demographics

Reported in Table 1 is the demographic information of the respondents. Ultimately, 31 questionnaires were returned, resulting in a response rate of 65%. Most respondents were male (58%). Seventy-one percent were 39 years old or younger; 29%, 40 years or older. In addition, 68% were employed full-time and 32% part-time. More than one-half had been employed 10 years or more in the study’s medical center. Furthermore, approximately 68% were physicians (21 invited, all responded, a 100% response rate); 19%, registered nurses (17 invited and 6 responded, a 35% response rate); and the remainder, other kinds of providers (10 invited and 4 responded, a 40% response rate). Eighty-one percent indicated that they were familiar with AI systems. In addition, 61% reported that they had utilized an AI-based hand hygiene system in practice, while 39% had used CDS, diagnostic AI, and NLP. Last, 84% reported having a prior understanding of AI technology, and 71% rated their proficiency with digital technology as “significant”.
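The response rates reported above follow directly from the invited and returned counts. The short Python check below recomputes them; the dictionary layout and the `response_rate` helper are simply illustrative choices.

```python
# Invited and returned questionnaires by provider role, as reported in the text.
counts = {
    "physician": (21, 21),
    "registered nurse": (17, 6),
    "other provider": (10, 4),
}


def response_rate(invited, returned):
    """Percentage of invited providers who returned the questionnaire."""
    return 100 * returned / invited


for role, (invited, returned) in counts.items():
    print(f"{role}: {response_rate(invited, returned):.0f}%")

total_invited = sum(invited for invited, _ in counts.values())
total_returned = sum(returned for _, returned in counts.values())
print(f"overall: {total_returned}/{total_invited} = "
      f"{response_rate(total_invited, total_returned):.0f}%")
# Expected output:
# physician: 100%
# registered nurse: 35%
# other provider: 40%
# overall: 31/48 = 65%
```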

Table 1

| Characteristics | No. of respondents (n=31) | Percentage |
|---|---|---|
| Gender | | |
| Male | 18 | 58% |
| Female | 13 | 42% |
| Age | | |
| 39 years or less | 22 | 71% |
| 40–49 years | 4 | 13% |
| 50–59 years | 3 | 10% |
| 60+ years | 2 | 6% |
| Employment status | | |
| Full time | 21 | 68% |
| Part time | 10 | 32% |
| Years of experience | | |
| Less than 4 years | 4 | 13% |
| 5–9 years | 9 | 29% |
| 10–14 years | 12 | 39% |
| 15 years or more | 6 | 19% |
| Provider status | | |
| Physician | 21 | 68% |
| Registered nurse | 6 | 19% |
| Other kind of provider | 4 | 13% |
| Familiarity with AI | | |
| Yes | 25 | 81% |
| No | 6 | 19% |
| Types of AI interventions | | |
| AI-based hand hygiene | 19 | 61% |
| Clinical decision support | 6 | 19% |
| Diagnostic AI | 3 | 10% |
| NLP | 3 | 10% |
| Prior understanding of technology for AI | | |
| Significant | 14 | 45% |
| Some | 12 | 39% |
| None | 5 | 16% |
| Proficiency with digital technology | | |
| Significant | 22 | 71% |
| Some | 6 | 19% |
| None | 3 | 10% |

AI, artificial intelligence; NLP, natural language processing.

Providers’ perceptions of the impact of AI interventions on providers’ practice and patient outcomes

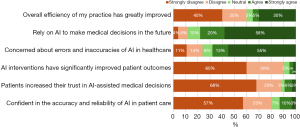

As portrayed in Figure 2, 40% of respondents strongly disagreed that AI interventions had significantly improved their practice’s efficiency, yet 58% expressed willingness to use AI to make medical decisions in the near future. Additionally, 55% showed concerns regarding errors and inaccuracies in AI. Moreover, 60% felt that AI interventions did not lead to improved patient outcomes, and 68% indicated that their patients did not feel that they could trust AI-assisted decision-making processes. Furthermore, 57% expressed a lack of confidence in the accuracy and reliability of AI in patient care.

Correlation between providers’ perceptions of the efficiency of AI interventions and their impact on patient outcomes

Respondents’ perceptions of the efficiency of AI interventions and the impact of AI on patient outcomes were statistically significantly correlated (Spearman’s rho =0.215, P<0.001).

Association between respondents’ sociodemographic data and their perceptions (awareness) and concerns about AI

As shown in Tables 2 and 3, age, gender, prior understanding of technology for AI, and proficiency with digital technology were not significantly associated with poor or good perceptions of AI or with high or low concerns about AI. However, familiarity with AI was significantly associated with concerns (Table 3): respondents who were familiar with AI were more likely to have high concerns about AI (76.0%) than those who were not familiar with AI (33.3%) (P=0.001).

Table 2

| Variables | Perception poor (n=20), n (%) | Perception good (n=11), n (%) | Chi-square | P |
|---|---|---|---|---|
| Age, years | | | 2.879 | 0.22 |
| ≤39 | 15 (68.2) | 7 (31.8) | | |
| 40–49 | 2 (50.0) | 2 (50.0) | | |
| 50–59 | 2 (66.7) | 1 (33.3) | | |
| 60+ | 1 (50.0) | 1 (50.0) | | |
| Gender | | | 0.878 | 0.15 |
| Male | 13 (72.2) | 5 (27.8) | | |
| Female | 7 (53.8) | 6 (46.2) | | |
| A prior understanding of technology for AI | | | 1.246 | 0.65 |
| Significant experience | 10 (71.4) | 4 (28.6) | | |
| Some | 7 (58.3) | 5 (41.7) | | |
| None | 3 (60.0) | 2 (40.0) | | |
| Proficiency with digital technology | | | 1.075 | 0.35 |
| Significant experience | 15 (68.2) | 7 (31.8) | | |
| Some | 3 (50.0) | 3 (50.0) | | |
| None | 2 (66.7) | 1 (33.3) | | |
| Familiar with AI | | | 0.321 | 0.33 |
| Yes | 15 (60.0) | 10 (40.0) | | |
| No/not sure | 3 (50.0) | 3 (50.0) | | |

Perception (awareness) poor: based on scores of 3 or below using a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree); perception (awareness) good: based on scores above 3 using a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree). AI, artificial intelligence.

Table 3

| Variables | Concerns low (n=10), n (%) | Concerns high (n=21), n (%) | Chi-square | P |
|---|---|---|---|---|
| Age, years | | | 1.21 | 0.45 |
| ≤39 | 7 (31.8) | 15 (68.2) | | |
| 40–49 | 1 (25.0) | 3 (75.0) | | |
| 50–59 | 1 (33.3) | 2 (66.7) | | |
| 60+ | 1 (50.0) | 1 (50.0) | | |
| Gender | | | 1.197 | 0.28 |
| Male | 7 (38.9) | 11 (61.1) | | |
| Female | 3 (23.1) | 10 (76.9) | | |
| A prior understanding of technology for AI | | | 2.08 | 0.38 |
| Significant experience | 5 (35.7) | 9 (64.3) | | |
| Some | 4 (33.3) | 8 (66.7) | | |
| None | 1 (20.0) | 4 (80.0) | | |
| Proficiency with digital technology | | | 1.896 | 0.88 |
| Significant experience | 7 (31.8) | 15 (68.2) | | |
| Some | 2 (33.3) | 4 (66.7) | | |
| None | 1 (33.3) | 2 (66.7) | | |
| Familiar with AI | | | 8.37 | 0.001 |
| Yes | 6 (24.0) | 19 (76.0) | | |
| No/not sure | 4 (66.7) | 2 (33.3) | | |

Concerns low: based on scores of 3 or below using a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree); concerns high: based on scores of 4 or above using a 5-point Likert scale (1 = strongly disagree to 5 = strongly agree). AI, artificial intelligence.

Analysis of open-ended question

A review of responses to the open-ended question revealed that 95% of providers indicated that a lack of reimbursement influenced their willingness to use new AI technology, especially in smaller hospitals and physician practices. Additionally, 87% expressed concerns about patient privacy and data confidentiality in AI applications within medical and legal contexts. They also believed that compatibility with existing technology and user-friendliness were crucial to avoid disruptions in their daily practice. Furthermore, 58% felt that ineffective and inflexible medical center administrators had not provided the necessary resources promptly, thus hindering use of the AI system.

Discussion

Key findings

This study explored providers’ perceptions of how AI interventions impact practice efficiency and patient outcomes, as well as their general views on AI and its effects. The findings revealed that, although 58% reported that they were willing to use AI systems, a lack of confidence in the technology’s ability to enhance their practice efficiency and patient care remains a major barrier to incorporating AI into providers’ practices. This result suggests that concerns about potential errors and inaccuracies in AI continue to challenge many providers. In addition, providers who viewed AI as an efficient tool significantly associated it with improved patient care, particularly in reducing hospital readmissions.

We also found that sociodemographic factors did not significantly influence respondents’ perceptions or concerns about AI. However, those familiar with AI expressed greater concern regarding the accuracy and feasibility of AI systems. In addition, responses to an open-ended question highlighted that a lack of user-centered design and inadequate governance of patient confidentiality and data privacy were significant barriers to providers’ trust in AI for routine clinical practice. Ethical issues—particularly concerning privacy, trust, transparency, and accountability—remain major obstacles to utilizing AI in healthcare settings. Finally, more than one-half of respondents indicated that lack of support from the top level of the medical center administration in overcoming resistance to change related to AI was another significant barrier to effective AI utilization.

Strengths and limitations

The study provides valuable insights into the use of AI in healthcare, particularly for small rural facilities that face challenges such as workflow disruptions, technical limitations, and financial constraints. Its findings offer essential guidance for healthcare providers, especially decision-makers, on the organizational capabilities needed to implement AI effectively.

This study has a few limitations. First, it used a small sample (n=31) from just one rural hospital in Texas. Doing so likely limited the applicability of its findings to other clinical settings and regions. Nonetheless, the insights gained remain valuable for other healthcare facilities. Future research should involve larger samples from diverse locations and types of healthcare facilities to enhance generalizability.

Second, because the data were self-reported, there were likely inherent biases. Future studies should combine objective measures with self-reports to mitigate potential bias. Third, subsequent empirical research should focus on assessing the impact of AI interventions on patient outcomes and financial metrics. Specifically, scholars should target a particular patient outcome—such as reduced hospital readmission rates or improved diagnostic accuracy—that has been enhanced by AI technologies. The analysis should examine how these interventions have improved the chosen outcome and then evaluate the financial implications—including cost savings, revenue impact, and return on investment. Moreover, the study did not address potential biases in the datasets used to generate AI algorithms. Future studies should place greater emphasis on addressing potential biases in datasets used to train AI algorithms, as this is crucial for ensuring fairness, accuracy, and inclusivity in AI systems. Additionally, a comparative analysis should be conducted between organizations with and without AI, including case studies for practical insights to provide a comprehensive understanding of the benefits and financial impact of AI in healthcare.

Comparison with similar research

This study’s findings align with existing research showing that a lack of trust in AI diminishes provider satisfaction and enthusiasm for the technology (3,5,12). In addition, the finding that familiarity with AI correlates with increased concern about its feasibility and accuracy is consistent with research showing that providers who were more aware of AI tended to have higher levels of concern than those who were less familiar with or had not heard of AI (24,25). Therefore, providing clear, accurate, and comprehensive information about AI and its potential applications is imperative for addressing such concerns and correcting misconceptions (25). Furthermore, the findings identify the absence of user-centered design and insufficient governance of patient confidentiality and data privacy as obstacles to providers’ trust in integrating AI into routine clinical practice. These findings corroborate previous research suggesting that strong data privacy policies and user-centered design are key to increasing provider confidence and trust in AI systems (25-27). Finally, the study findings indicate that ethical issues related to privacy, trust, transparency, and accountability remain significant obstacles to the use of AI, compounded by a lack of support from top-level medical center administration in overcoming resistance to AI-related change. This finding is compatible with previous research observing that leadership support is critical for driving digital transformation initiatives involving AI and for enhancing provider satisfaction, especially in rural healthcare, where resources are scarce and providers place particular weight on management’s role in supporting them and in helping healthcare systems streamline care pathways and provide timely, high-quality care for patients (25,28-30).

Explanations of findings

The study found that while 58% of healthcare providers were open to using AI, concerns about its accuracy, data privacy, and user-centered design hindered trust and adoption. Providers with more familiarity with AI expressed greater skepticism, and lack of leadership support from medical center administrations further impeded AI integration. These barriers highlight the need for better education, improved AI design, stronger data privacy policies, and leadership backing to increase trust and successful implementation in healthcare.

Implications and actions needed

The study has significant implications for AI use in healthcare, particularly for providers in small rural facilities facing challenges related to AI usage (e.g., workflow disruptions, technical issues, financial constraints) (5,7,8). Its findings help providers, especially key decision-makers, to understand which organizational capabilities are needed to facilitate AI utilization. Healthcare organizations and providers can use these insights as a starting point to plan their organizational resources accordingly and drive strategic decisions on AI use.

Based on our findings, several actionable recommendations may benefit organizations. First, organizations should prioritize user-centered design in AI projects because doing so improves user experience by making systems more intuitive and effective, which, in turn, boosts adoption and reduces errors. This approach helps ensure that AI solutions are practical, reliable, and well received. Second, organizations need to develop training programs specifically targeted at providers required to use AI systems and designed to address the multiple concerns resulting from unfamiliarity. By attending to the complex human factors involved, healthcare organizations can harness the power of AI while prioritizing the well-being and job satisfaction of the clinical workforce. Third, organizations should provide enhanced technical support to users who encounter difficulties using AI-reliant products or services. In this way, users will likely feel valued and appreciated, which may strengthen their commitment to the product or service. In addition, to increase providers’ trust in AI, organizations should provide comprehensive and transparent technology education that engages providers and attracts their interest in AI.

Finally, effective leadership should ensure that adequate financial resources, training, technical support, and change management strategies are in place. Leadership’s involvement not only aligns AI initiatives with organizational goals, but it also fosters a positive environment by addressing potential resistance and providing the necessary tools for success. When senior leaders actively engage in these areas, they help create a supportive environment for AI technologies to thrive, ultimately leading to improved patient care and health outcomes.

Conclusions

Although AI is becoming increasingly prevalent in healthcare, this study revealed a wide range of concerns among providers about AI’s role and implications. First, the use of AI in rural healthcare is hindered by a lack of trust among providers, concerns about the accuracy of AI tools, and the need for tailored solutions. Healthcare professionals are often skeptical about AI’s reliability and its potential to replace human judgment, especially in resource-limited rural settings. Additionally, providers show a noticeable gap in understanding the AI tools used in clinical practice, which can hinder their effective use of new AI technologies. These concerns underscore the importance of a user-centered design approach, which prioritizes the needs and perspectives of healthcare professionals when utilizing AI technologies.

To effectively implement and use AI in rural medical centers, future strategies must address key elements, such as system compatibility, usability, ethical considerations, and comprehensive training, all of which contribute to establishing providers’ trust in AI. Moreover, engaging healthcare providers in the development of AI systems for clinical decision-making and diagnostic support is essential. Their insights can help identify and integrate additional factors that promote trust, ensuring that AI technologies align with the practical realities of medical practice. By fostering collaboration between AI developers and healthcare providers, organizations can improve patient outcomes and strengthen support for the effective implementation of AI in healthcare.

Acknowledgments

None.

Footnote

Reporting Checklist: The author has completed the STROBE reporting checklist. Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-422/rc

Data Sharing Statement: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-422/dss

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-422/prf

Funding: None.

Conflicts of Interest: The author has completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-422/coif). The author has no conflicts of interest to declare.

Ethical Statement: The author is accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. The study was conducted in accordance with the Declaration of Helsinki (as revised in 2013). Written informed consent was obtained from the participants for the study. This article does not include any studies involving the identities of human participants conducted by any of the authors. IRB approval was waived.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Bizzo BC, Almeida RR, Michalski MH, et al. Artificial Intelligence and Clinical Decision Support for Radiologists and Referring Providers. J Am Coll Radiol 2019;16:1351-6.

2. Strohm L, Hehakaya C, Ranschaert ER, et al. Implementation of artificial intelligence (AI) applications in radiology: hindering and facilitating factors. Eur Radiol 2020;30:5525-32.

3. Alanazi A. Clinicians' Views on Using Artificial Intelligence in Healthcare: Opportunities, Challenges, and Beyond. Cureus 2023;15:e45255.

4. Chen Y, Wu Z, Wang P, et al. Radiology Residents' Perceptions of Artificial Intelligence: Nationwide Cross-Sectional Survey Study. J Med Internet Res 2023;25:e48249.

5. Lintz J. Provider Satisfaction With Artificial Intelligence-Based Hand Hygiene Monitoring System During the COVID-19 Pandemic: Study of a Rural Medical Center. J Chiropr Med 2023;22:197-203.

6. Lintz J. The psychological impact of the pandemic on primary health-care providers: Perspectives from a primary care clinic. J Public Health Prim Care 2024;5:48-54.

7. Vaughan L, Edwards N. The problems of smaller, rural and remote hospitals: Separating facts from fiction. Future Healthc J 2020;7:38-45.

8. Kacik A. Nearly a quarter of rural hospitals are on the brink of closure. Modern Healthcare 2019 [cited 2024 Nov 8].

9. McKinney SM, Sieniek M, Godbole V, et al. International evaluation of an AI system for breast cancer screening. Nature 2020;577:89-94.

10. Kim HE, Kim HH, Han BK, et al. Changes in cancer detection and false-positive recall in mammography using artificial intelligence: a retrospective, multireader study. Lancet Digit Health 2020;2:e138-48.

11. Sunarti S, Fadzlul Rahman F, Naufal M, et al. Artificial intelligence in healthcare: opportunities and risk for future. Gac Sanit 2021;35:S67-70.

12. Guan J. Artificial Intelligence in Healthcare and Medicine: Promises, Ethical Challenges and Governance. Chin Med Sci J 2019;34:76-83.

13. Reddy S, Allan S, Coghlan S, et al. A governance model for the application of AI in health care. J Am Med Inform Assoc 2020;27:491-7.

14. Sarwar S, Dent A, Faust K, et al. Physician perspectives on integration of artificial intelligence into diagnostic pathology. NPJ Digit Med 2019;2:28.

15. Santos JC, Wong JHD, Pallath V, et al. The perceptions of medical physicists towards relevance and impact of artificial intelligence. Phys Eng Sci Med 2021;44:833-41.

16. Ho M, Le NB, Mantello P, et al. Understanding the acceptance of emotional artificial intelligence in Japanese healthcare system: a cross-sectional survey of clinic visitors' attitude. Technol Soc 2023;72:102166.

17. Kansal R, Bawa A, Bansal A, et al. Differences in Knowledge and Perspectives on the Usage of Artificial Intelligence Among Doctors and Medical Students of a Developing Country: A Cross-Sectional Study. Cureus 2022;14:e21434.

18. Bauer C, Thamm A. Six areas of healthcare where AI is effectively saving lives today. In: Glauner P, Plugmann P, Lerzynski G, editors. Digitalization in Healthcare: Implementing Innovation and Artificial Intelligence. Cham: Springer; 2021:245-67.

19. Reardon S. Rise of Robot Radiologists. Nature 2019;576:S54-8.

20. Yin J, Ngiam KY, Teo HH. Role of Artificial Intelligence Applications in Real-Life Clinical Practice: Systematic Review. J Med Internet Res 2021;23:e25759.

21. Oh S, Kim JH, Choi SW, et al. Physician Confidence in Artificial Intelligence: An Online Mobile Survey. J Med Internet Res 2019;21:e12422.

22. Barreiro-Ares A, Morales-Santiago A, Sendra-Portero F, et al. Impact of the Rise of Artificial Intelligence in Radiology: What Do Students Think? Int J Environ Res Public Health 2023;20:1589.

23. Adams KA, Lawrence EK. Research methods, statistics, and applications. 2nd rev ed. Thousand Oaks, CA: SAGE Publications; 2019.

24. Abu Hammour K, Alhamad H, Al-Ashwal FY, et al. ChatGPT in pharmacy practice: a cross-sectional exploration of Jordanian pharmacists' perception, practice, and concerns. J Pharm Policy Pract 2023;16:115.

25. Ahmed MI, Spooner B, Isherwood J, et al. A Systematic Review of the Barriers to the Implementation of Artificial Intelligence in Healthcare. Cureus 2023;15:e46454.

26. Marcu LG, Boyd C, Bezak E. Current issues regarding artificial intelligence in cancer and health care. Implications for medical physicists and biomedical engineers. Health Technol 2019;9:375-81.

27. Romero-Brufau S, Wyatt KD, Boyum P, et al. A lesson in implementation: A pre-post study of providers' experience with artificial intelligence-based clinical decision support. Int J Med Inform 2020;137:104072.

28. Lintz J. Adoption of telemedicine during the COVID-19 pandemic: Perspectives of primary healthcare providers. Eur J Environ Public Health 2022;6:em0106.

29. Tursunbayeva A, Chalutz-Ben Gal H. Adoption of artificial intelligence: A TOP framework-based checklist for digital leaders. Bus Horiz 2024;67:357-68.

30. Rony MKK, Numan SM, Johra FT, et al. Perceptions and attitudes of nurse practitioners toward artificial intelligence adoption in health care. Health Sci Rep 2024;7:e70006.

Cite this article as: Lintz J. Exploring the impacts of artificial intelligence interventions on providers’ practices: perspectives from a rural medical center. J Med Artif Intell 2025;8:39.