X-rays in the AI age: revolutionizing pulmonary tuberculosis diagnosis—a systematic review & meta-analysis

Highlight box

Key findings

• Artificial intelligence (AI) models showed a pooled accuracy, sensitivity, and specificity (95% confidence interval) of 95.0% (94.85–95.15%), 96.28% (96.14–96.42%), and 87.89% (87.75–88.03%), respectively, in screening for tuberculosis (TB) using chest X-rays (CXRs). TBNet was the most accurate, sensitive, and specific of the AI models.

What is known and what is new?

• AI is a relatively recent technological innovation whose potential applications in medicine are being actively explored and developed. One promising application is rapid diagnostics, with evidence that AI models are useful aids in screening for chest pathologies on the CXR. Our meta-analysis shows AI models to be more sensitive, accurate, and specific than clinicians in screening for TB using a CXR. Convolutional neural networks, particularly TBNet, emerged as the most promising models; AI-based CXR screening also compares favorably with traditional methods such as interferon-gamma release assay and enzyme-linked immunosorbent assay, in part because of its lower cost and higher availability.

What is the implication and what should change now?

• While AI models have shown great promise in detecting TB on CXRs, further studies are needed, particularly in TB-endemic areas, with a focus on the practical application of AI in population screening. In particular, AI models should be trained not only on CXR images but also on patients’ clinical history, signs, and symptoms.

Introduction

Tuberculosis (TB) has left an indelible mark on human history, dating back millennia. Evidence of TB has been found in ancient Egyptian mummies, suggesting its occurrence as far back as 2400 BCE. Through millennia, TB has been referred to as “consumption” or “white plague”, reflecting the fear and devastating impact on individuals and communities. The 18th and 19th centuries witnessed TB epidemics across Europe and North America, fueled by overcrowded urban living conditions, poor sanitation, and inadequate healthcare. The disease ravaged populations, claiming countless lives and inspiring fear and stigma (1). Even in the industrial era, TB remained a prominent public health concern, exacerbated by industrialization and urbanization. It was not until the 20th century that scientific breakthroughs, such as the discovery of antibiotics and advancements in public health measures, led to significant progress in TB control (1). Despite these advancements, TB continues to pose challenges globally, particularly in regions with limited access to healthcare and resources.

The burden of TB has been significantly reduced in developed nations, although it has resurged among immunocompromised individuals, particularly those with acquired immunodeficiency syndrome (AIDS). It is in the developing world, however, that it continues to ravage the population. Caused by a highly infective airborne pathogen (2), it spreads widely in overcrowded settings with poor socioeconomic conditions, affecting the most vulnerable populations (3). TB remains a significant contributor to morbidity and mortality in lower-income countries; a World Health Organization (WHO) survey recorded 10.6 million new cases of TB in 2022 alone, including 5.8 million men, 3.5 million women and 1.3 million children (4). The clinical manifestations display large inter-individual variation, ranging from latent TB to full-blown pulmonary disease, presenting as isolated nodules, fibrosis, pleural effusion, increased nodularity or cavitation (5). These characteristic changes can be detected on chest X-rays (CXRs), rendering radiological investigation the mainstay of TB screening and diagnosis (5). In most countries, CXRs are interpreted by radiologists or the attending physician, which demands a high level of resources and is time-consuming, with accuracy heavily dependent on the skill of the interpreter as well as the quality of the CXR images (6). The advent of artificial intelligence (AI), particularly in the realm of radiology, has paved the way for faster, automated and probably more accurate diagnosis of a multitude of medical conditions (7). AI is being tested in various domains of medicine; one such application is the interpretation of radiological scans.

It has been proposed that AI may detect pulmonary TB early by recognizing the characteristic features and patterns on the CXR (8), and the WHO has recommended computer-assisted detection of TB (9). Several studies have explored various AI models for TB detection; however, we could find no comprehensive analysis or review that examined all of these studies and the different AI models used for this purpose. The aim of our study was to review these studies and, by conducting a meta-analysis, to summarize and critique the potential of currently available AI technology in TB detection. Furthermore, we aimed to compare TB detection using AI with traditional methods of TB screening and human CXR interpretation. We present this article in accordance with the PRISMA reporting checklist (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-363/rc).

Methods

Search strategy

We followed the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) guidelines (10). Using both MeSH terms and simple search terms combined with Boolean operators, we searched PubMed and Embase for papers published between January 2015 and January 2024. The MeSH terms used for the search were “Artificial Intelligence”[Mesh] AND (“Tuberculosis”[Mesh] OR “Tuberculosis, Pulmonary”[Mesh]), while the simple search terms were “Artificial Intelligence”, “Pulmonary Tuberculosis” and “Pulmonary Tuberculosis Screening”. The detailed search strategy with all the relevant search terms is provided in Table S1.

Selection of studies

Only published studies meeting the following inclusion criteria were selected for the analyses: (I) studies published in English; (II) studies performed using AI; (III) studies performed using CXR images; and (IV) studies that reported the performance of AI software in detecting TB.

Studies meeting any of the following criteria were excluded: (I) reviews and letters; (II) studies with overlapping, duplicate or insufficient data; and (III) studies with significant bias (per the PROBAST checklist).

Quality assessment

To ensure the quality of the included studies, we used the PROBAST checklist (11), a validated tool for assessing studies for systematic review or meta-analysis. The tool consists of 4 components, each further divided into subcomponents as follows:

- Specification of the study question: it should clearly define the target population, predictors, and outcome.

- Classification of the type of prediction model for each study, including its development and validation.

- Assessment of the risk of bias and applicability for each study in the following domains:

  - Participant: assesses whether the sample represents the target population and which techniques were used to eliminate bias, e.g., blinding.

  - Predictor: evaluates relevance, consistency, and measurement of variables.

  - Analysis: reviews statistical methods and handling of missing data.

  - Outcome: checks whether outcomes are clearly defined and measured without bias.

- Overall judgement of the risk of bias for each study: summarizes bias across domains to judge model reliability.

The PROBAST tool (Prediction model Risk of Bias Assessment Tool) is designed to assess the risk of bias and applicability of prediction model studies. Its relevance in evaluating AI tools, particularly in the context of using CXR for TB diagnosis, lies in several critical areas: bias in data selection and study populations; outcome measurement and labeling; development of the predictive model; validation and generalizability; and reporting bias and transparency. The evaluation was performed by one reviewer (A.R.) and then cross-checked by a second reviewer (N.U.A). We excluded studies identified as having a high risk of bias based on the PROBAST criteria. However, studies with a low or unclear risk of bias were retained in the analysis owing to the limited availability of data on the topic. Furthermore, a sensitivity analysis was performed on the pooled outcomes to assess the influence of outlying studies, and Egger’s and Begg’s tests were used to assess publication bias.

Data extraction

The data from each study were extracted by the first reviewer (Z.A.S.) and cross-checked by the second reviewer (N.U.A) to ensure accuracy and eliminate errors and discrepancies. The data extracted included the first author, the year of publication, the type of study, the country of publication, the sample size, the radiological modality used, the AI models used and the method of validation for each study. Outcomes included accuracy, sensitivity and specificity with corresponding 95% confidence intervals (CIs). Only studies reporting all three outcome measures were included in the analysis.
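As an illustration of the three extracted outcomes, the sketch below computes accuracy, sensitivity and specificity from a 2×2 table of counts. It is a minimal example under stated assumptions: the source does not say how the individual studies derived their CIs, so the Wilson score interval is used here purely as one common choice, and the counts and function names are hypothetical.

```python
from math import sqrt


def wilson_ci(successes, total, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = successes / total
    denom = 1 + z ** 2 / total
    centre = (p + z ** 2 / (2 * total)) / denom
    half = z * sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return centre - half, centre + half


def diagnostic_metrics(tp, fp, tn, fn):
    """Return {metric: (estimate, (ci_low, ci_high))} from 2x2 counts."""
    n = tp + fp + tn + fn
    return {
        # Accuracy: all correct calls over all CXRs read.
        "accuracy": ((tp + tn) / n, wilson_ci(tp + tn, n)),
        # Sensitivity: TB cases flagged over all TB cases.
        "sensitivity": (tp / (tp + fn), wilson_ci(tp, tp + fn)),
        # Specificity: non-TB cases cleared over all non-TB cases.
        "specificity": (tn / (tn + fp), wilson_ci(tn, tn + fp)),
    }


# Hypothetical counts, not taken from any included study.
m = diagnostic_metrics(tp=90, fp=20, tn=80, fn=10)
for name, (estimate, (low, high)) in m.items():
    print(f"{name}: {estimate:.1%} ({low:.1%} to {high:.1%})")
```

Accuracy depends on the mix of TB and non-TB images in the sample, whereas sensitivity and specificity are each computed within one of those groups.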

Statistical analyses

Pooled outcomes with 95% CIs were calculated by aggregating accuracy, sensitivity, and specificity data from the included studies. Depending on the heterogeneity, we used either a fixed-effect model (Cochran-Mantel-Haenszel method) or a random-effects model (DerSimonian-Laird method). Heterogeneity was assessed using the Higgins I² statistic, with the variability across studies classified as low (<25%), moderate (25–50%), or high (>50%). In cases of significant heterogeneity (P<0.01 or I²>50%), a random-effects model was used for analysis; otherwise, a fixed-effect model was employed. We chose the Higgins I² statistic over the Cochran Q, as the latter is inaccurate when the number of studies is small. Although the Higgins I² statistic is relatively independent of the number of studies, it can inflate heterogeneity estimates when the number of studies is small. To minimize the influence of heterogeneity, included studies with similar characteristics were further subjected to a subgroup analysis. A sensitivity analysis using a leave-one-out approach was also performed.
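The pooling rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the Stata workflow used in the study. It assumes each study contributes an effect estimate and a variance on a common scale (the transformation used is not stated in the text), it substitutes a generic inverse-variance estimate for the Cochran-Mantel-Haenszel fixed-effect method, and it applies only the I²>50% criterion when choosing the model. All function names are illustrative.

```python
from math import sqrt


def se_from_ci(lower, upper, z=1.96):
    """Approximate a standard error from a symmetric 95% CI."""
    return (upper - lower) / (2 * z)


def pool_dersimonian_laird(effects, variances):
    """Pool study estimates; returns pooled value, CI, Q, I2 (%) and tau2."""
    k = len(effects)
    if k < 2 or k != len(variances):
        raise ValueError("need at least two studies with matching variances")
    # Inverse-variance (fixed-effect) weights and estimate.
    w = [1.0 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q and Higgins' I2 = max(0, (Q - df) / Q).
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # DerSimonian-Laird between-study variance tau2.
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # Random-effects weights add tau2 to every study's variance.
    w_re = [1.0 / (v + tau2) for v in variances]
    random_est = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    # Model choice: only the I2 > 50% part of the stated rule is applied.
    use_random = i2 > 50.0
    pooled = random_est if use_random else fixed
    se = sqrt(1.0 / sum(w_re)) if use_random else sqrt(1.0 / sum_w)
    return {
        "model": "random" if use_random else "fixed",
        "pooled": pooled,
        "ci": (pooled - 1.96 * se, pooled + 1.96 * se),
        "q": q,
        "i2": i2,
        "tau2": tau2,
    }


# Hypothetical effect estimates and variances for three studies.
result = pool_dersimonian_laird([0.2, 0.9, 1.6], [0.01, 0.02, 0.015])
print(result["model"], round(result["pooled"], 3), round(result["i2"], 1))
```

When I² is at or below the threshold the function falls back to the fixed-effect estimate, mirroring the decision rule stated in the text.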

To estimate publication bias, Begg’s and Egger’s tests were performed, together with a funnel plot of the log of each outcome against its standard error. P values were calculated using two-tailed tests, with P<0.05 considered statistically significant. Statistical analysis was performed using Stata 16 (StataCorp LP, College Station, Texas, United States) and Microsoft Excel (V.2010, Microsoft Corporation, Redmond, Washington, United States).
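For readers unfamiliar with Egger’s test, the sketch below shows its regression core: each study’s standardized effect (effect divided by its standard error) is regressed on its precision (the reciprocal of the standard error), and an intercept far from zero suggests funnel-plot asymmetry. This is an illustrative sketch, not the Stata routine used in the study. It returns the intercept and its t statistic only; converting the t statistic to a P value requires the t distribution with k−2 degrees of freedom, which is omitted here. Begg’s rank-correlation test is not shown. The input values and function name are hypothetical.

```python
from math import sqrt


def egger_intercept(effects, std_errors):
    """Ordinary least squares of effect/SE on 1/SE (Egger's regression)."""
    k = len(effects)
    if k < 3 or k != len(std_errors):
        raise ValueError("need at least three studies with matching SEs")
    x = [1.0 / se for se in std_errors]                    # precision
    y = [e / se for e, se in zip(effects, std_errors)]     # standardized effect
    mean_x = sum(x) / k
    mean_y = sum(y) / k
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual variance with k - 2 degrees of freedom.
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in resid) / (k - 2)
    se_intercept = sqrt(s2 * (1.0 / k + mean_x ** 2 / sxx))
    t_stat = intercept / se_intercept if se_intercept > 0 else float("nan")
    return {"intercept": intercept, "slope": slope, "t": t_stat, "df": k - 2}


# Hypothetical log-scale effects and standard errors for five studies.
out = egger_intercept([0.10, 0.25, 0.05, 0.40, 0.30],
                      [0.05, 0.10, 0.08, 0.20, 0.15])
print(round(out["intercept"], 3), round(out["t"], 3), out["df"])
```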

Results

Study characteristics

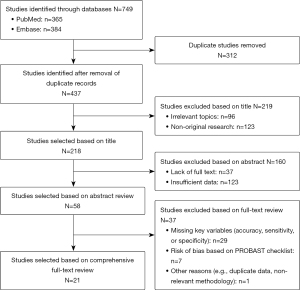

Our literature search identified 749 potentially relevant studies, of which 21 met the eligibility criteria and were selected for the analyses (Figure 1) (12-32); together they contained 98,997 CXR images subjected to AI for TB detection. Characteristics of the included studies are summarized in Table 1; the mean age of patients ranged from 42 to 65 years, and 33.2% to 77.9% were male. Nineteen of the twenty-one studies were conducted in Asian populations, with the remaining two conducted in non-Asian populations. Table 2 summarizes the accuracy, sensitivity, and specificity reported by each study for each AI model used. Table 3 shows the risk of bias assessed using the PROBAST checklist, categorizing the studies by risk of bias and applicability; only studies with a low or unclear risk of bias were incorporated in the analyses.

Table 1

| First author (ref.), year | Country | Study type | Mean age (years) | Gender male, % [n] | Sample size | Validation | AI model(s) |
|---|---|---|---|---|---|---|---|
| Mayidili Nijiati (12), 2022 | China | Retrospective study | 65 | 53.7 [5,170] | 9,628 | External validation | AlexNet, VGG, ResNet |
| Andrew J. Codlin (13), 2021 | Vietnam | Prospective study | 57 | 69 [712] | 1,032 | External validation | Qure.AI, DeepTek, Delft imaging, OXIPIT, Lunit, CAD |
| Chenggong Yan (14), 2022 | China | Prospective study | 48.5 | 60.8 [320] | 526 | Internal validation | CNN |
| Zhi Zhen Qin (15), 2021 | Bangladesh | Prospective study | 42 | 67.1 [16,073] | 23,954 | Internal validation | CAD, InferRead DR, Lunit, qXR |
| Zhi Zhen Qin (16), 2019 | Nepal | Retrospective study | 46 | 77 [397] | 515 | External validation | CAD, Lunit, qXR |
| Kosuke Okada (17), 2024 | Cambodia | Retrospective study | 55 | 50 [4,193] | 8,386 | Internal validation | CAD |
| C Geric (18), 2023 | Cameroon | Prospective study | – | – | 1,196 | External validation | CAD |
| Ye Ra Choi (20), 2023 | South Korea | Prospective study | 58 | 33.2 [185] | 558 | External validation | ResNet |
| Mayidili Nijiati (19), 2022 | China | Retrospective study | 65 | 42.5 [974] | 2,291 | Internal validation | VGG, EfficientNet, ResNet |
| Vasundhara Acharya (21), 2022 | India | Prospective study | – | – | 3,800 | External validation | ResNet, DenseNet, Inception, EfficientNet |
| Seowoo Lee (22), 2021 | Korea | Retrospective study | 55 | 56.6 [1,883] | 3,327 | Internal validation | EfficientNet |
| Yi Gao (23), 2023 | China | Retrospective study | 50 | 77.9 [721] | 925 | External validation | TBNet, ResNet |
| G Simi Margarat (24), 2022 | India | Retrospective study | – | – | 662 | External validation | DBN-AMBO |
| Yilin Xie (25), 2020 | China | Retrospective study | – | – | 247 | Internal validation | CAD |
| Anh L. Innes (26), 2023 | Vietnam | Prospective study | – | – | 5,826 | Internal validation | qXR |
| Muhammad Ayaz (27), 2021 | Pakistan | Retrospective study | – | – | 138 | Internal validation | Ensemble |
| Jong Hyuk Lee (28), 2021 | South Korea | Retrospective study | 55 | 56.6 [11,396] | 20,135 | Internal validation | Deep learning |
| So Yeon Choi (29), 2023 | South Korea | Retrospective study | – | – | 8,374 | Internal validation | Deep learning |
| Jaime Melendez (30), 2016 | South Africa | Prospective study | – | – | 392 | Internal validation | CAD |
| Eui Jin Hwang (31), 2019 | South Korea | Retrospective study | 57 | 56.5 [3,824] | 6,768 | External validation | Deep learning |
| Madlen Nash (32), 2020 | India | Retrospective study | 47 | 74.8 [237] | 317 | Internal validation | qXR |

AI, artificial intelligence; CAD, computer aided detection; CNN, convolutional neural network; DBN-AMBO, Deep Belief Network with Adaptive Monarch butterfly optimization; TB, tuberculosis.

Table 2

| First author | Year | AI model | Accuracy (%) (95% CI) | Sensitivity (%) (95% CI) | Specificity (%) (95% CI) |
|---|---|---|---|---|---|
| Mayidili Nijiati (12) | 2022 | AlexNet | 95.06 (93–97) | 93.2 (91–95.5) | 97.08 (96–98) |
| | | VGG | 94.96 (91–98) | 94.2 (92–96.2) | 95.78 (93.8–98) |
| | | ResNet | 96.73 (96.3–97) | 95.5 (94–97) | 98.05 (97–99) |
| Andrew J Codlin (13) | 2021 | Qure.AI | 54.7 (51.7–57.8) | 95.5 (90.4–98.3) | 48.7 (45.4–52) |
| | | DeepTek | 52.6 (49.5–55.7) | 95.5 (90.4–98.3) | 46.3 (43–49.6) |
| | | Delft imaging | 51.7 (48.7–54.8) | 95.5 (90.4–98.3) | 45.3 (42–48.6) |
| | | OXIPIT | 47.9 (44.8–51) | 95.5 (90.4–98.3) | 40.8 (37.6–44.1) |
| | | Lunit | 46 (43–49.1) | 95.5 (90.4–98.3) | 38.7 (35.5–42) |
| | | CAD | 22.9 (20.3–25.6) | 95.5 (90.4–98.3) | 12.1 (10.1–14.4) |
| Chenggong Yan (14) | 2022 | CNN | 73.32 (72–75) | 95.2 (94.6–96) | 68 (67–69) |
| Zhi Zhen Qin (15) | 2021 | CAD | 91.5 (90.5–92.4) | 90 (89–91) | 75.8 (75.2–76.4) |
| | | InferRead DR | 84 (82.8–85.2) | 90.3 (89.3–91.3) | 64.5 (63.8–65.1) |
| | | Lunit | 88.8 (87.8–89.8) | 90.1 (89–91) | 70.3 (69.7–71) |
| | | qXR | 92.6 (91.7–93.4) | 90.2 (89.2–91.1) | 76.7 (76.1–77.2) |
| Zhi Zhen Qin (16) | 2019 | CAD | 81 (79–83) | 95 (90–98) | 80 (77–82) |
| | | Lunit | 77 (75–79) | 95 (90–98) | 76 (73–78) |
| | | qXR | 83 (81–85) | 95 (90–98) | 82 (79–84) |
| Kosuke Okada (17) | 2024 | CAD | 82.1 (79.1–85.1) | 95.1 (93.1–96.7) | 74.7 (73.7–75.7) |
| C Geric (18) | 2023 | CAD | 82 (80–84) | 96.85 (89.1–98) | 78.6 (75.4–81.2) |
| Ye Ra Choi (19) | 2023 | ResNet | 97 (95–99) | 99 (98–100) | 95 (94–96) |
| Mayidili Nijiati (20) | 2022 | VGG | 82 (79–85) | 94 (92–96) | 79 (78–80) |
| | | EfficientNet | 84 (81–88) | 97 (96–98) | 75 (73–77) |
| | | ResNet | 83 (80–86) | 95 (94–96) | 77 (75.5–78.5) |
| Vasundhara A. (21) | 2022 | ResNet | 94.85 (92–97) | 87.02 (84–90) | 96.99 (96–98) |
| | | DenseNet | 92.64 (90–95) | 79.91 (78–81) | 97.18 (96.5–98) |
| | | Inception | 89.58 (87–91) | 69.4 (67–72) | 97.51 (97–98) |
| | | EfficientNet | 84.07 (81–87) | 51.78 (49.78–53.78) | 95.86 (93–97) |
| Seowoo Lee (22) | 2021 | EfficientNet | 32 (29.12–34.99) | 95 (88.72–98.36) | 25 (22.2–27.96) |
| Yi Gao (23) | 2023 | TBNet | 71 (69–73) | 71.2 (70.4–72) | 70.2 (68.2–72.2) |
| | | ResNet | 66 (64–68) | 65 (63–67) | 66.2 (64.2–68.2) |
| G Simi Margarat (24) | 2022 | DBN-AMBO | 99.2 (99–99.4) | 99.4 (99.2–99.6) | 99.1 (98.7–99.5) |
| Yilin Xie (25) | 2020 | CAD4TB | 97.4 (96.8–98) | 98.3 (98–98.6) | 96.2 (94.2–98.2) |
| Anh L Innes (26) | 2023 | qXR | 64.8 (60.7–69.2) | 96 (90–100) | 61 (56.3–65.9) |
| Muhammad Ayaz (27) | 2021 | Ensemble | 90.6 (85.4–95) | 95 (92–98) | 76 (73–79) |
| Jong Hyuk Lee (28) | 2021 | Deep learning | 96 (94–98) | 82.1 (64.4–92.1) | 96 (95.7–96.2) |
| So Yeon Choi (29) | 2023 | Deep learning | 48 (38–58) | 70.6 (62.4–78.8) | 71.2 (70–72.3) |
| Jaime Melendez (30) | 2016 | CAD | 46 (44–48) | 73 (67–79) | 24 (5–39) |
| Eui Jin Hwang (31) | 2019 | Deep learning | 96.2 (91.4–98.8) | 95.2 (88.1–98.7) | 100 (96.4–100) |
| Madlen Nash (32) | 2020 | qXR | 38 (35–42) | 94 (91–97) | 21 (18–24) |

AI, artificial intelligence; CAD, computer aided detection; CI, confidence interval; CNN, convolutional neural network; DBN-AMBO, Deep Belief Network with Adaptive Monarch butterfly optimization; TB, tuberculosis.

Table 3

| Study | Risk of bias: Participant | Risk of bias: Predictors | Risk of bias: Analysis | Risk of bias: Outcome | Applicability: Participant | Applicability: Predictors | Applicability: Analysis | Applicability: Outcome | Overall: Risk of bias | Overall: Applicability |
|---|---|---|---|---|---|---|---|---|---|---|
| (12) | + | + | + | + | + | + | + | + | + | + |
| (13) | ? | + | + | + | + | + | + | + | ? | + |
| (14) | + | ? | + | + | + | + | + | + | ? | + |
| (15) | + | + | + | + | ? | + | + | + | + | ? |
| (16) | + | + | + | + | + | + | + | + | + | + |
| (17) | + | + | + | + | + | + | + | + | + | + |
| (18) | ? | + | + | + | + | + | + | + | ? | + |
| (19) | + | + | + | + | + | + | + | + | + | + |
| (20) | + | + | + | + | + | + | + | + | + | + |
| (21) | + | + | + | + | + | + | + | + | + | + |
| (22) | + | + | + | + | + | + | + | + | + | + |
| (23) | + | ? | + | + | + | + | + | + | ? | + |
| (24) | + | + | + | + | + | + | + | + | + | + |
| (25) | + | + | + | + | + | + | + | + | + | + |
| (26) | + | + | + | + | + | + | + | + | + | + |
| (27) | + | + | + | + | + | + | + | + | + | + |
| (28) | + | + | + | + | + | + | + | + | + | + |
| (29) | + | + | + | + | + | + | + | + | + | + |
| (30) | ? | + | + | + | + | + | + | + | ? | + |
| (31) | + | + | + | + | + | + | + | + | + | + |
| (32) | + | + | + | + | + | + | + | + | + | + |

+, low risk of bias/low concern regarding applicability; ?, unclear risk of bias/ unclear concern regarding applicability. AI, artificial intelligence; TB, tuberculosis.

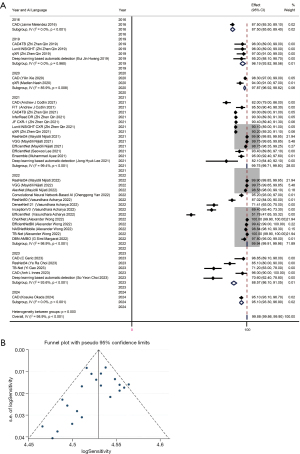

Owing to the significant heterogeneity (I²=99.8%, P<0.01), we used a random-effects model. The pooled accuracy was 83.44% (83.13–83.75%) (Figure 2A). The sensitivity analysis showed that no single study significantly skewed our analyses, with no effect on the outcome when any one was removed (Figure S1). A funnel plot of the log of accuracy against its standard error showed that most studies fell within the funnel, indicating non-significant publication bias (Figure 2B). Egger’s and Begg’s tests gave P values of 0.151 and 0.293, respectively, indicating that any publication bias observed was likely due to chance and not significant.

The pooled sensitivity, shown in the forest plot in Figure 3A, was 99.88% (99.86–99.90%), with significant heterogeneity (I²=98.9%, P<0.001). The sensitivity analysis showed no significant bias (Figure S2). There was no significant publication bias, as shown by the funnel plot in Figure 3B and by Egger’s and Begg’s tests (P=0.194 and 0.655, respectively).

For specificity, we used a random-effects model because significant heterogeneity was observed (I²=99.9%, P<0.01). The pooled specificity was 68.19% (95% CI: 67.95–68.43%) (Figure 4A). The sensitivity analysis showed no significant bias (Figure S3). There was no publication bias, as shown by the funnel plot (Figure 4B) and by Egger’s and Begg’s tests, with P values of 0.142 and 0.474, respectively.

Subgroup analysis

Subgroup analysis was performed by categorizing the studies by study design, method of validation, study time frame and prevalence of TB in the study population. A random-effects model was used for this analysis, as heterogeneity remained significant among the studies. The results are summarized in Table 4.

Table 4

| Subgroup analysis | No. of studies | No. of X-rays | Pooled accuracy (%) (95% CI) | Pooled sensitivity (%) (95% CI) | Pooled specificity (%) (95% CI) |
|---|---|---|---|---|---|
| Type of study | | | | | |
| Retrospective | 13 | 61,713 | 97.19 (97.02–97.35) | 97.47 (97.31–97.62) | 77.75 (75.65–79.85) |
| Prospective | 8 | 37,284 | 82.61 (82.22–82.98) | 90.56 (90.22–90.90) | 68.12 (64.12–72.12) |
| Validation | | | | | |
| External | 9 | 25,086 | 96.51 (96.35–96.66) | 96.55 (96.37–96.74) | 74.14 (73.91–74.37) |
| Internal | 12 | 73,911 | 88.58 (88.24–88.92) | 95.88 (95.66–96.11) | 64.25 (64.07–64.43) |
| Country | | | | | |
| TB endemic | 14 | 58,639 | 95.19 (95.04–95.34) | 96.25 (96.11–96.39) | 64.47 (64.29–64.65) |
| TB non-endemic | 7 | 40,358 | 84.66 (83.55–85.77) | 97.56 (96.72–98.41) | 73.48 (73.25–73.71) |
| AI models | | | | | |
| CAD | 7 | 41,301 | 89.29 (88.84–89.74) | 97.48 (97.20–97.77) | 73.82 (73.35–74.29) |
| ResNet | 4 | 16,277 | 95.67 (95.34–96.01) | 93.52 (92.92–94.11) | 82.25 (81.73–82.77) |
| qXR | 3 | 24,786 | 87.86 (87.11–88.61) | 90.72 (89.83–91.61) | 75.04 (74.51–75.56) |
| Lunit | 2 | 24,469 | 83.24 (82.38–84.10) # | 90.43 (89.46–91.40) # | 69.51 (68.89–70.12) # |
| EfficientNet | 4 | 16,357 | 95.54 (94.97–96.11) | 99.41 (99.39–99.43) | 79.04 (78.25–79.82) |
| TBNet | 2 | 7,864 | 99.79 (99.69–99.89)* | 99.89 (99.84–99.94)* | 99.41 (99.21–99.61)* |
| VGG | 2 | 11,919 | 87.49 (85.21–89.77) | 94.10 (92.65–95.54) | 72.10 (71.20–73.00) |

#, the lowest accuracy, sensitivity and specificity; *, the highest accuracy, sensitivity and specificity. AI, artificial intelligence; CAD, computer aided detection; CI, confidence interval; TB, tuberculosis.

Analyzed separately, the thirteen retrospective studies showed higher pooled accuracy, sensitivity and specificity than the eight prospective studies. Studies were also assessed by the type of validation used: 9 studies validated the AI model on an external dataset (external validation), while 12 studies validated it on the same dataset used to develop the model (internal validation). AI models with external validation demonstrated greater pooled accuracy [96.51% (96.35–96.66%) vs. 88.58% (88.24–88.92%)] and specificity [74.14% (73.91–74.37%) vs. 64.25% (64.07–64.43%)] than those with internal validation, with no statistically significant difference in sensitivity between the two modes of validation.

Using the WHO criteria, we compared studies from settings with high (n=14) vs. low (n=7) TB prevalence. The AI tools had greater pooled accuracy but lower pooled specificity in TB-endemic countries, with similar pooled sensitivity.

We also compared 7 AI models using pooled accuracy, sensitivity and specificity. TBNet demonstrated the best outcome, with a pooled accuracy of 99.79% (99.69–99.89%), pooled sensitivity of 99.89% (99.84–99.94%) and pooled specificity of 99.41% (99.21–99.61%). Lunit was the least accurate, sensitive and specific, with an accuracy of 83.24% (82.38–84.10%), sensitivity of 90.43% (89.46–91.40%) and specificity of 69.51% (68.89–70.12%). However, we could not assess several AI models individually because of an insufficient number of studies.

Discussion

Our study shows that AI outperformed both radiologists and physicians, with pooled estimates of 83.44% (83.13–83.75%) for accuracy, 99.88% (99.86–99.90%) for sensitivity and 68.19% (67.95–68.43%) for specificity. The subgroup analysis revealed externally validated models to be more accurate and specific, with no significant difference in sensitivity between external and internal validation. Compared with prospective studies, retrospective ones demonstrated higher accuracy, sensitivity and specificity. Studies in TB-endemic areas showed higher accuracy, similar sensitivity and lower specificity compared with studies conducted in non-endemic areas. Finally, of all the AI models, TBNet exhibited the highest accuracy, specificity and sensitivity.

While the AI tools have high accuracy and sensitivity, their specificity is low. A plausible explanation is that current AI algorithms are highly sensitive in detecting CXR abnormalities but do not yet possess enough discriminatory power to distinguish abnormalities of TB from those of other lung pathologies. Even in CXRs of patients with TB, the features observed can vary greatly and overlap substantially with other infectious and non-infectious pathologies. TB can present with a myriad of typical CXR features, such as infiltrates and nodules with a predilection for the upper lobe, and pleural effusions or lymphadenopathy, particularly in the mediastinal region; cavitation, resulting from damage to the surrounding pulmonary tissue, is the hallmark of pulmonary TB (33). However, similar findings are also observed in other lung pathologies, including pneumonia and cancer. Therefore, for the definitive diagnosis of TB, the clinical picture, sputum culture and interferon-gamma release assay (IGRA) will continue to be important (34). Nevertheless, despite their lower specificity, AI tools still outperformed human interpreters, not only by detecting CXR abnormalities more frequently but also by making the diagnosis earlier. With the future integration of AI, diagnostic approaches for TB are expected to evolve significantly. Beyond analyzing CXRs, incorporating clinical profiles and laboratory investigations into a unified, computer-assisted dashboard could enhance diagnostic specificity and accuracy.

We found the method of validation to be an important determinant of AI performance. Twelve studies used internal validation, i.e., the same CXR images of diagnosed TB cases as used in the training set, while the other nine employed external validation, i.e., an independent dataset of patients or CXRs. Studies with external validation demonstrated higher accuracy than those with internal validation: 96.51% (95% CI: 96.35–96.66%) vs. 88.58% (95% CI: 88.24–88.92%). Similarly, studies with external validation exhibited greater specificity: 74.14% (95% CI: 73.91–74.37%) compared with 64.25% (95% CI: 64.07–64.43%) for internally validated studies. The independent nature of external validation reduces the risk of bias and overfitting, providing a more objective assessment of model performance. This supports the generalizability of AI models, indicating their potential for application in diverse clinical settings. Interestingly, the sensitivity of AI models was comparable between the two validation methods, suggesting that these models are consistently capable of detecting signs of TB regardless of the validation approach used.

We found significant heterogeneity when comparing the commercially available software used in the studies, namely CAD4TB, ResNet34, qXR, Lunit insight, EfficientNet, VGG and TBNet. Of the seven models studied, TBNet demonstrated the best performance, with pooled accuracy of 99.79% (99.69–99.89%), pooled sensitivity of 99.89% (99.84–99.94%) and pooled specificity of 99.41% (99.21–99.61%), whereas Lunit had the lowest accuracy [83.24% (82.38–84.10%)], sensitivity [90.43% (89.46–91.40%)] and specificity [69.51% (68.89–70.12%)] of all. Deep learning models, e.g., convolutional neural networks (CNNs), outperformed others; Currie et al. argued that CNNs classify a variety of images more accurately because they use a multilayered approach, extracting multiple types of data (35), constructing heat maps and following a series of complex algorithms to reach a conclusion, unlike human interpreters. While AI is limited by the information fed to it, it is still able to interpret and analyze this information much more efficiently and accurately. AI programs do this by learning from images of already diagnosed TB patients in the training stage, developing their own patterns and algorithms, which they can then apply to real-life CXRs to calculate the likelihood of TB (36). AI models analyze these images in different ways; the most accurate, the CNN, divides each image into a number of slices and analyzes each slice separately, rendering the process more precise, accurate and efficient (37).

Of all the AI models tested for TB detection using CXR, TBNet was superior: it not only recognizes characteristic TB patterns using a CNN model but also leverages generative synthesis to reach its conclusion. Moreover, because TBNet has been trained specifically to recognize pulmonary findings of TB, it is a better predictor of the disease than physicians’ interpretation of CXRs and other AI models. Wong et al. (38) showed that TBNet demonstrates higher accuracy, sensitivity and specificity than other AI models, as confirmed in our analysis.

To compare the sensitivity and specificity of AI for TB detection against traditional methods, we conducted an in-depth literature review, focusing on meta-analyses of various TB detection techniques. We selected nine meta-analyses for our review (39-47), with the key findings summarized in Table 5. Notably, AI-based CXR interpretation yielded sensitivity and specificity comparable to those of the GeneXpert test (40,41,45). Moreover, AI offers a significant advantage over expensive tests like GeneXpert in resource-limited settings, where TB is often more prevalent, making it a more feasible option in such regions.

Table 5

| First author | Year | No. of studies | Diagnosing method(s) | Sensitivity (%) | Specificity (%) |
|---|---|---|---|---|---|
| Josef Yayan (39) | 2024 | 13 | Microscopy | 55 | 80 |
| | | | Culture | 70 | 87.5 |
| | | | X-ray | 72.5 | 72.5 |
| | | | PET-CT | 82.6 | 67.3 |
| Shima Mahmoudi (40) | 2024 | 6 | Interferon (QFT) Assay | 99 | 94 |
| L T Allan-Blitz (41) | 2024 | 11 | Ultrasound | 72 | 77 |
| E Chandler Church (42) | 2024 | 20 | NAAT | 36–91 | 66–100 |
| Mikashmi Kohli (43) | 2018 | 66 | Xpert (pleural tissue) | 31 | 99 |
| | | | Xpert (bone) | 97 | 82 |
| | | | Xpert (joints) | 80 | 99 |
| Priya B. Shete (44) | 2019 | 13 | TB-LAMP | 77.7 | 98 |
| Xue Gong (45) | 2023 | 144 | Xpert (BALF) | 88 | 94 |
| | | | Xpert (Sputum) | 95 | 96 |
| | | | Xpert (Gastric Juice) | 94 | 96 |
| | | | Xpert (Stool) | 79 | 98 |
| | | | Xpert (Biopsy) | 77 | 86 |
| | | | Xpert (identifying tuberculous lymphadenitis) | 84 | 97 |
| | | | Xpert (identifying tuberculous meningitis) | 6 | 98 |
| | | | Identifying pleural TB | 30 | 99 |
| | | | Unclassified extrapulmonary TB | 90 | 98 |
| | | | Identifying other types of TB | 69 | 100 |
| | | | Stool sample in intestinal TB | 36 | 75 |
| Yanqin Shen (46) | 2023 | 64 | NAATs | 80 | 96 |
| | | | PCR | 56 | 98 |
| | | | Multiplex PCR | 82 | 99 |
| | | | Xpert | 45 | 100 |
| Alex J Scott (47) | 2024 | 5 | CAD | 87 | 74 |

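BALF, bronchoalveolar lavage fluid; CAD, computer-aided detection; LAMP, loop-mediated isothermal amplification; NAAT, nucleic acid amplification test; PCR, polymerase chain reaction; PET-CT, positron emission tomography-computed tomography; QFT, QuantiFERON-TB; TB, tuberculosis.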

Our analysis has some limitations. Our results showed significant heterogeneity, which persisted even after subgroup analysis, suggesting that it may result from unknown confounders. To account for heterogeneity, we used a random-effects model. We also performed a sensitivity analysis by excluding one study at a time; this revealed no significant difference in the pooled outcomes and no substantial reduction in heterogeneity, suggesting that the heterogeneity was not attributable to any single study. To reduce heterogeneity further, we performed subgroup analyses, dividing the studies based on various criteria. Although this approach reduced heterogeneity, it remained significant.
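
The random-effects pooling and the one-study-at-a-time sensitivity analysis described above can be sketched as follows. This is an illustration only: it assumes a DerSimonian-Laird estimator applied to logit-transformed proportions with a 0.5 continuity correction, which may differ from the estimator and software actually used in this review, and the per-study counts are hypothetical.

```python
import math


def logit_and_variance(events, total):
    """Logit of a proportion and its approximate variance.

    A 0.5 continuity correction keeps the logit finite at 0% or 100%.
    """
    a = events + 0.5
    b = total - events + 0.5
    return math.log(a / b), 1 / a + 1 / b


def pool_random_effects(studies):
    """DerSimonian-Laird pooling of (events, total) pairs.

    Returns (pooled proportion, I^2 in percent, tau^2 on the logit scale).
    """
    ys, vs = zip(*(logit_and_variance(e, n) for e, n in studies))
    w = [1 / v for v in vs]                      # fixed-effect weights
    y_fixed = sum(wi * yi for wi, yi in zip(w, ys)) / sum(w)
    q = sum(wi * (yi - y_fixed) ** 2 for wi, yi in zip(w, ys))  # Cochran's Q
    df = len(studies) - 1
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0
    w_re = [1 / (v + tau2) for v in vs]          # random-effects weights
    y_re = sum(wi * yi for wi, yi in zip(w_re, ys)) / sum(w_re)
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return 1 / (1 + math.exp(-y_re)), i2, tau2


def leave_one_out(studies):
    """Re-pool after dropping each study in turn."""
    return [pool_random_effects(studies[:i] + studies[i + 1:])
            for i in range(len(studies))]


# Hypothetical (true positives, TB-positive cases) per study, i.e. sensitivity data.
studies = [(95, 100), (180, 200), (70, 100), (140, 150)]
pooled, i2, tau2 = pool_random_effects(studies)
print(f"pooled sensitivity {100 * pooled:.1f}%, I^2 {i2:.1f}%")
for index, (p, i2_drop, _) in enumerate(leave_one_out(studies)):
    print(f"without study {index}: pooled {100 * p:.1f}%, I^2 {i2_drop:.1f}%")
```

If dropping one study sharply lowers I², that study is driving the heterogeneity; if I² stays high whichever study is removed, as reported above, the heterogeneity is spread across studies.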

Despite our exhaustive literature search, we may have overlooked potentially eligible studies. As only a few of the included studies reported population characteristics, we could not perform a subgroup analysis adjusting for comorbid conditions. This is particularly relevant if the CXR abnormalities were attributable to pathologies other than TB. We performed subgroup analysis on some, but not all, of the AI models, owing either to the limited number of studies or to a lack of information about the AI model used in a particular study. Lastly, the analysis was restricted to CXRs because few studies used CT scans, a more accurate imaging technique than CXR (48). However, CXR will often continue to be the only available radiological investigation for TB diagnosis in developing countries, where AI can play a crucial role in automated, accurate and efficient mass screening, particularly in endemic zones.

Although AI models show promise in TB detection, establishing their clinical applicability requires further work, with future studies incorporating the clinical picture, demographics and comorbidities into the algorithms. This would allow AI models to be integrated into computer-assisted diagnostic dashboards within hospitals and screening camps. Once sufficient evidence is available, AI approaches could be incorporated into practice guidelines, allowing for a less resource-intensive approach to TB screening. Future studies should also focus more on patient populations from TB-endemic areas. In the studies we assessed, the various AI algorithms employed different criteria to recognize TB, highlighting the need for uniformity in the TB recognition protocols used by AI models. Studies of AI tools using CT scan images for TB detection should also be conducted, as CT is more accurate than CXR. While AI is a valuable screening tool, it cannot yet replace the physician’s clinical acumen, or tests like GeneXpert and sputum culture, for a definitive diagnosis of TB.

Although AI can be applied in various settings, its implementation and usage may vary depending on factors such as the prevalence of TB cases, resource availability, the presence of specialists and doctors, and the proximity of healthcare facilities equipped to perform confirmatory TB tests. Centers with limited resources, a high influx of patients with suspected TB, and significant distances to healthcare facilities would derive the greatest benefit from such an AI model.
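
To illustrate why TB prevalence matters for implementation, the sketch below applies Bayes' theorem to convert sensitivity and specificity into positive and negative predictive values. It uses the pooled sensitivity (96.28%) and specificity (87.89%) reported in this review; the prevalence values are hypothetical and chosen only to show the trend.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    if not 0.0 < prevalence < 1.0:
        raise ValueError("prevalence must be strictly between 0 and 1")
    true_pos = sensitivity * prevalence
    false_neg = (1 - sensitivity) * prevalence
    true_neg = specificity * (1 - prevalence)
    false_pos = (1 - specificity) * (1 - prevalence)
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


POOLED_SENSITIVITY = 0.9628  # pooled estimate reported in this review
POOLED_SPECIFICITY = 0.8789  # pooled estimate reported in this review

# Hypothetical prevalence among the people screened.
for prevalence in (0.01, 0.05, 0.20):
    ppv, npv = predictive_values(POOLED_SENSITIVITY, POOLED_SPECIFICITY, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}, NPV {npv:.1%}")
```

Under these assumptions, the positive predictive value is only about 7% at 1% prevalence and rises steeply as prevalence increases, while the negative predictive value stays high. This is consistent with using AI-read CXR as a screening or triage step followed by confirmatory testing.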

Conclusions

Our study shows that AI models demonstrate high accuracy and sensitivity in identifying TB from CXR images compared with radiological diagnosis, albeit with considerable variability in performance across the AI models. AI holds promise as a screening tool for TB, particularly in high-prevalence areas with limited resources. These AI models can be integrated into computer-assisted dashboards to assist healthcare providers in accurately and efficiently identifying individuals with TB. This approach could enhance the efficiency and effectiveness of TB detection efforts, contributing to improved healthcare outcomes in the developing world.

Acknowledgments

None.

Footnote

Reporting Checklist: The authors have completed the PRISMA reporting checklist. Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-363/rc

Peer Review File: Available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-363/prf

Funding: None.

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at https://jmai.amegroups.com/article/view/10.21037/jmai-24-363/coif). The authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Barbier M, Wirth T. The Evolutionary History, Demography, and Spread of the Mycobacterium tuberculosis Complex. Microbiol Spectr 2016;

2. Lange C, Chesov D, Heyckendorf J, et al. Drug-resistant tuberculosis: An update on disease burden, diagnosis and treatment. Respirology 2018;23:656-73.

3. Comas I. Genomic Epidemiology of Tuberculosis. Adv Exp Med Biol 2017;1019:79-93.

4. Bagcchi S. WHO's Global Tuberculosis Report 2022. Lancet Microbe 2023;4:e20.

5. Bomanji JB, Gupta N, Gulati P, et al. Imaging in tuberculosis. Cold Spring Harb Perspect Med 2015;5:a017814.

6. Dheda K, Perumal T, Moultrie H, et al. The intersecting pandemics of tuberculosis and COVID-19: population-level and patient-level impact, clinical presentation, and corrective interventions. Lancet Respir Med 2022;10:603-22.

7. Schwalbe N, Wahl B. Artificial intelligence and the future of global health. Lancet 2020;395:1579-86.

8. Kulkarni S, Jha S. Artificial Intelligence, Radiology, and Tuberculosis: A Review. Acad Radiol 2020;27:71-5.

9. World Health Organization. (2021, January 5). A toolkit to support the effective use of CAD for TB screening. Available online: https://iris.who.int/bitstream/handle/10665/345925/9789240028616-eng.pdf

10. Page MJ, McKenzie JE, Bossuyt PM, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 2021;372:

11. Wolff RF, Moons KGM, Riley RD, et al. PROBAST: A Tool to Assess the Risk of Bias and Applicability of Prediction Model Studies. Ann Intern Med 2019;170:51-8.

12. Nijiati M, Ma J, Hu C, et al. Artificial Intelligence Assisting the Early Detection of Active Pulmonary Tuberculosis From Chest X-Rays: A Population-Based Study. Front Mol Biosci 2022;9:874475.

13. Codlin AJ, Dao TP, Vo LNQ, et al. Independent evaluation of 12 artificial intelligence solutions for the detection of tuberculosis. Sci Rep 2021;11:23895.

14. Yan C, Wang L, Lin J, et al. A fully automatic artificial intelligence-based CT image analysis system for accurate detection, diagnosis, and quantitative severity evaluation of pulmonary tuberculosis. Eur Radiol 2022;32:2188-99.

15. Qin ZZ, Ahmed S, Sarker MS, et al. Tuberculosis detection from chest x-rays for triaging in a high tuberculosis-burden setting: an evaluation of five artificial intelligence algorithms. Lancet Digit Health 2021;3:e543-54.

16. Qin ZZ, Sander MS, Rai B, et al. Using artificial intelligence to read chest radiographs for tuberculosis detection: A multi-site evaluation of the diagnostic accuracy of three deep learning systems. Sci Rep 2019;9:15000.

17. Okada K, Yamada N, Takayanagi K, et al. Applicability of artificial intelligence-based computer-aided detection (AI-CAD) for pulmonary tuberculosis to community-based active case finding. Trop Med Health 2024;52:2.

18. Geric C, Qin ZZ, Denkinger CM, et al. The rise of artificial intelligence reading of chest X-rays for enhanced TB diagnosis and elimination. Int J Tuberc Lung Dis 2023;27:367-72.

19. Nijiati M, Zhou R, Damaola M, et al. Deep learning based CT images automatic analysis model for active/non-active pulmonary tuberculosis differential diagnosis. Front Mol Biosci 2022;9:1086047.

20. Choi YR, Yoon SH, Kim J, et al. Chest Radiography of Tuberculosis: Determination of Activity Using Deep Learning Algorithm. Tuberc Respir Dis (Seoul) 2023;86:226-33.

21. Acharya V, Dhiman G, Prakasha K, et al. AI-Assisted Tuberculosis Detection and Classification from Chest X-Rays Using a Deep Learning Normalization-Free Network Model. Comput Intell Neurosci 2022;2022:2399428.

22. Lee S, Yim JJ, Kwak N, et al. Deep Learning to Determine the Activity of Pulmonary Tuberculosis on Chest Radiographs. Radiology 2021;301:435-42.

23. Gao Y, Zhang Y, Hu C, et al. Distinguishing infectivity in patients with pulmonary tuberculosis using deep learning. Front Public Health 2023;11:1247141.

24. Simi Margarat G, Hemalatha G, Mishra A, et al. Early Diagnosis of Tuberculosis Using Deep Learning Approach for IOT Based Healthcare Applications. Comput Intell Neurosci 2022;2022:3357508.

25. Xie Y, Wu Z, Han X, et al. Computer-Aided System for the Detection of Multicategory Pulmonary Tuberculosis in Radiographs. J Healthc Eng 2020;2020:9205082.

26. Innes AL, Martinez A, Gao X, et al. Computer-Aided Detection for Chest Radiography to Improve the Quality of Tuberculosis Diagnosis in Vietnam's District Health Facilities: An Implementation Study. Trop Med Infect Dis 2023;8:488.

27. Ayaz M, Shaukat F, Raja G. Ensemble learning based automatic detection of tuberculosis in chest X-ray images using hybrid feature descriptors. Phys Eng Sci Med 2021;44:183-94.

28. Lee JH, Park S, Hwang EJ, et al. Deep learning-based automated detection algorithm for active pulmonary tuberculosis on chest radiographs: diagnostic performance in systematic screening of asymptomatic individuals. Eur Radiol 2021;31:1069-80.

29. Choi SY, Choi A, Baek SE, et al. Effect of multimodal diagnostic approach using deep learning-based automated detection algorithm for active pulmonary tuberculosis. Sci Rep 2023;13:19794.

30. Melendez J, Sánchez CI, Philipsen RH, et al. An automated tuberculosis screening strategy combining X-ray-based computer-aided detection and clinical information. Sci Rep 2016;6:25265.

31. Hwang EJ, Park S, Jin KN, et al. Development and Validation of a Deep Learning-based Automatic Detection Algorithm for Active Pulmonary Tuberculosis on Chest Radiographs. Clin Infect Dis 2019;69:739-47.

32. Nash M, Kadavigere R, Andrade J, et al. Deep learning, computer-aided radiography reading for tuberculosis: a diagnostic accuracy study from a tertiary hospital in India. Sci Rep 2020;10:210.

33. Skoura E, Zumla A, Bomanji J. Imaging in tuberculosis. Int J Infect Dis 2015;32:87-93.

34. Singh P, Saket VK, Kachhi R. Diagnosis of TB: From conventional to modern molecular protocols. Front Biosci (Elite Ed) 2019;11:38-60.

35. Currie G, Hawk KE, Rohren E, et al. Machine Learning and Deep Learning in Medical Imaging: Intelligent Imaging. J Med Imaging Radiat Sci 2019;50:477-87.

36. Mintz Y, Brodie R. Introduction to artificial intelligence in medicine. Minim Invasive Ther Allied Technol 2019;28:73-81.

37. Wang P, Qiao J, Liu N. An Improved Convolutional Neural Network-Based Scene Image Recognition Method. Comput Intell Neurosci 2022;2022:3464984.

38. Wong A, Lee JRH, Rahmat-Khah H, et al. TB-Net: A Tailored, Self-Attention Deep Convolutional Neural Network Design for Detection of Tuberculosis Cases From Chest X-Ray Images. Front Artif Intell 2022;5:827299.

39. Yayan J, Rasche K, Franke KJ, et al. FDG-PET-CT as an early detection method for tuberculosis: a systematic review and meta-analysis. BMC Public Health 2024;24:2022.

40. Mahmoudi S, Nourazar S. Evaluating the diagnostic accuracy of QIAreach QuantiFERON-TB compared to QuantiFERON-TB Gold Plus for tuberculosis: a systematic review and meta-analysis. Sci Rep 2024;14:14455.

41. Allan-Blitz LT, Yarbrough C, Ndayizigiye M, et al. Point-of-care ultrasound for diagnosing extrapulmonary TB. Int J Tuberc Lung Dis 2024;28:217-24.

42. Church EC, Steingart KR, Cangelosi GA, et al. Oral swabs with a rapid molecular diagnostic test for pulmonary tuberculosis in adults and children: a systematic review. Lancet Glob Health 2024;12:e45-54.

43. Kohli M, Schiller I, Dendukuri N, et al. Xpert® MTB/RIF assay for extrapulmonary tuberculosis and rifampicin resistance. Cochrane Database Syst Rev 2018;8:CD012768.

44. Shete PB, Farr K, Strnad L, et al. Diagnostic accuracy of TB-LAMP for pulmonary tuberculosis: a systematic review and meta-analysis. BMC Infect Dis 2019;19:268.

45. Gong X, He Y, Zhou K, et al. Efficacy of Xpert in tuberculosis diagnosis based on various specimens: a systematic review and meta-analysis. Front Cell Infect Microbiol 2023;13:1149741.

46. Shen Y, Fang L, Ye B, Yu G. Meta-analysis of diagnostic accuracy of nucleic acid amplification tests for abdominal tuberculosis: A protocol. PLoS One 2020;15:e0243765.

47. Scott AJ, Perumal T, Hohlfeld A, et al. Diagnostic Accuracy of Computer-Aided Detection During Active Case Finding for Pulmonary Tuberculosis in Africa: A Systematic Review and Meta-analysis. Open Forum Infect Dis 2024;11:ofae020.

48. Buonsenso D, Pata D, Visconti E, et al. Chest CT Scan for the Diagnosis of Pediatric Pulmonary TB: Radiological Findings and Its Diagnostic Significance. Front Pediatr 2021;9:583197.

Cite this article as: Suchal ZA, Ain NU, Rehman A, Mahmud A. X-rays in the AI age: revolutionizing pulmonary tuberculosis diagnosis—a systematic review & meta-analysis. J Med Artif Intell 2025;8:36.